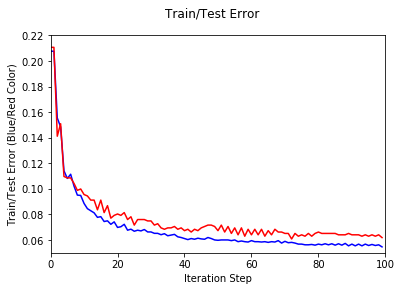

An implementation of AdaBoost that uses "optimal" decision stumps fitted on the training data. After each round, the following are computed:

- Current training error for the weighted linear combination predictor at this round

- Current testing error for the weighted linear combination predictor at this round

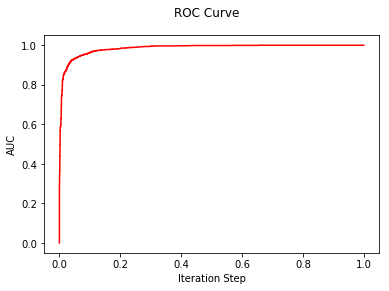

- Current test AUC for the weighted linear combination predictor at this round

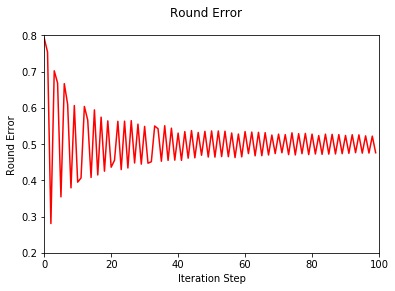

- The local "round" error for the decision stump returned
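The quantities listed above can be illustrated with a minimal NumPy sketch of AdaBoost with exhaustively searched stumps. It is not the code of the `AdaBoost` class used below: the function names (`best_stump`, `adaboost`), the threshold grid, and the assumption that labels are encoded as +1/-1 are all hypothetical choices made for illustration. Here "optimal" is taken to mean the stump whose weighted error is farthest from 0.5.

```python
import numpy as np

def best_stump(X, y, w):
    """Search every (feature, threshold) pair and return the stump whose
    weighted error is farthest from 0.5 (assumed meaning of "optimal")."""
    best = None
    for j in range(X.shape[1]):
        values = np.unique(X[:, j])
        # Candidate thresholds: one below the minimum, then midpoints
        # between consecutive sorted unique values.
        thresholds = np.concatenate(
            ([values[0] - 1.0], (values[:-1] + values[1:]) / 2.0)
        )
        for t in thresholds:
            pred = np.where(X[:, j] > t, 1, -1)
            err = w[pred != y].sum()  # weighted "round" error of this stump
            if best is None or abs(0.5 - err) > abs(0.5 - best[2]):
                best = (j, t, err)
    return best

def adaboost(X, y, rounds):
    """Run AdaBoost and return a list of (round_error, train_error) pairs.
    Labels y are assumed to be +1 / -1."""
    n = len(y)
    w = np.full(n, 1.0 / n)   # start from uniform example weights
    score = np.zeros(n)       # running weighted sum of stump votes
    history = []
    for _ in range(rounds):
        j, t, err = best_stump(X, y, w)
        err = np.clip(err, 1e-10, 1 - 1e-10)   # avoid division by zero
        # alpha is negative when err > 0.5, which flips the stump's vote.
        alpha = 0.5 * np.log((1 - err) / err)
        pred = np.where(X[:, j] > t, 1, -1)
        w *= np.exp(-alpha * y * pred)         # up-weight the mistakes
        w /= w.sum()
        score += alpha * pred
        train_err = np.mean(np.sign(score) != y)
        history.append((err, train_err))
    return history
```

The test error at each round would be tracked the same way, by accumulating `alpha * pred` on the test set and comparing the sign of that score with the test labels.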

Python 3

    from Datasets import spambase
    from AdaBoost import AdaBoost

    filename = "data/Spambase dataset/spambase.data"
    train_X, train_y, test_X, test_y = spambase(filename)

    model = AdaBoost(iterations=100)
    model.fit(train_X, train_y, test_X, test_y)
    model.plot_train_test_error()
    model.plot_ROC_curve()
    model.plot_round_error()

Train error, Test error and Test AUC after every 25 iterations:

- Round 0 : Train_err: 0.20760869565217388 Test_err: 0.21064060803474483 AUC: 0.748974795114

- Round 25 : Train_err: 0.06766304347826091 Test_err: 0.07600434310532034 AUC: 0.978207515077

- Round 50 : Train_err: 0.060054347826086985 Test_err: 0.07057546145494031 AUC: 0.982347610948

- Round 75 : Train_err: 0.056793478260869557 Test_err: 0.06297502714440828 AUC: 0.984188340807

- Round 100 : Train_err: 0.05461956521739131 Test_err: 0.061889250814332275 AUC: 0.985246946034
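For reference, an AUC like the ones reported above can be computed from the real-valued ensemble score (the weighted sum of stump votes before taking the sign). The sketch below is one common way to do it, using the trapezoidal rule over the ROC curve. How the `AdaBoost` class computes its AUC is not shown here, so the function name `roc_auc` and the +1/-1 label encoding are assumptions.

```python
import numpy as np

def roc_auc(scores, labels):
    """Area under the ROC curve via the trapezoidal rule.
    labels are assumed to be +1 (positive) / -1 (negative)."""
    scores = np.asarray(scores, dtype=float)
    labels = np.asarray(labels)
    # Sort examples by decreasing score; each prefix is one threshold cut.
    order = np.argsort(-scores, kind="mergesort")
    scores, labels = scores[order], labels[order]
    pos = labels == 1
    tps = np.cumsum(pos)     # true positives at each cut
    fps = np.cumsum(~pos)    # false positives at each cut
    # Keep only the last index of each run of tied scores.
    distinct = np.r_[np.diff(scores) != 0, True]
    tpr = np.r_[0.0, tps[distinct] / tps[-1]]
    fpr = np.r_[0.0, fps[distinct] / fps[-1]]
    # Trapezoidal area under the (fpr, tpr) curve.
    return float(np.sum(np.diff(fpr) * (tpr[1:] + tpr[:-1]) / 2.0))
```

A perfectly ranked test set gives 1.0 and a constant score gives 0.5, which is a quick sanity check on any implementation.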

Graphs of train/test error and round error versus the number of iterations, and the test ROC curve: