This repository contains the official implementation of the paper "DPMesh: Exploiting Diffusion Prior for Occluded Human Mesh Recovery" (CVPR 2024).

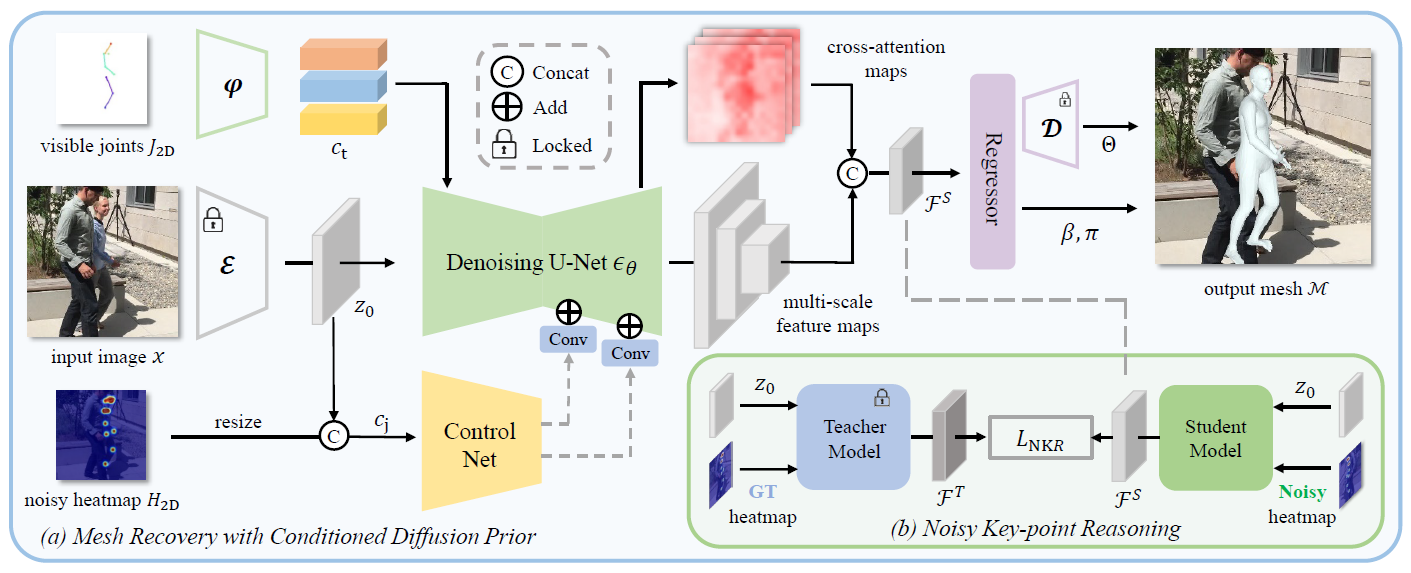

DPMesh is a framework for occluded human mesh recovery that exploits the rich diffusion prior about object structure and spatial relationships embedded in a pre-trained text-to-image diffusion model.

- Release model and inference code.

- Release code for the training dataloader.

We recommend using an Anaconda virtual environment. If you have Anaconda installed, run the following commands to create and activate the environment.

conda env create -f environment.yaml

conda activate dpmesh

pip install git+https://github.com/cloneofsimo/lora.git

We prepare the data in a similar way to 3DCrowdNet & JOTR. Please refer to here for the dataset, SMPL model, and VPoser model.

For 3DPW-OC and 3DPW-PC, we apply the same input key-points annotations as JOTR. Please refer to 3DPW-OC & 3DPW-PC.

For evaluation only, you just need to prepare the 3DPW dataset (images and annotations) and the joint regressors. The full directory structure is shown below.

|-- common

| |-- utils

| | |-- human_model_files

| | |-- smplpytorch

|-- data

| |-- J_regressor_extra.npy

| |-- annotations

| | |-- crowdpose.pkl

| | |-- muco.pkl

| | |-- human36m.pkl

| | |-- mscoco.pkl

| |-- 3DPW

| | |-- 3DPW_latest_test.json

| | |-- 3DPW_oc.json

| | |-- 3DPW_pc.json

| | |-- 3DPW_validation_crowd_hhrnet_result.json

| | |-- imageFiles

| | |-- sequenceFiles

| |-- CrowdPose

| | |-- images

| | |-- annotations

| |-- MuCo

| | |-- images

| | |-- annotations

| |-- Human36M

| | |-- images

| | |-- annotations

| | |-- J_regressor_h36m_correct.npy

| |-- MSCOCO

| | |-- images

| | |-- annotations

| | |-- J_regressor_coco_hip_smpl.npy
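Before launching evaluation, it can help to confirm that the files are where the listing above expects them. The following is a minimal sketch, not part of the repository: the helper `missing_paths` and the list `EVAL_PATHS` are hypothetical, and the choice of which entries are needed for evaluation is an assumption based on the description above (3DPW images and annotations plus the joint regressors).

```python
from pathlib import Path

# Paths copied from the directory listing above. Which of them eval.py
# actually reads is an assumption; adjust the list to your setup.
EVAL_PATHS = [
    "data/J_regressor_extra.npy",
    "data/3DPW/3DPW_latest_test.json",
    "data/3DPW/imageFiles",
    "data/3DPW/sequenceFiles",
    "data/Human36M/J_regressor_h36m_correct.npy",
    "data/MSCOCO/J_regressor_coco_hip_smpl.npy",
]


def missing_paths(root, relative_paths=EVAL_PATHS):
    """Return the entries of relative_paths that do not exist under root."""
    root = Path(root)
    return [p for p in relative_paths if not (root / p).exists()]


if __name__ == "__main__":
    # Run from the repository root.
    for path in missing_paths("."):
        print("missing:", path)
```

Running the script from the repository root prints one line per missing file or folder and prints nothing when everything is in place.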

Please download our pretrained checkpoints from this link and put them under ./checkpoints. The file directory should be:

|-- checkpoints

|--|-- 3dpw_best_ckpt.pth.tar

|--|-- 3dpw-oc_best_ckpt.pth.tar

|--|-- 3dpw-pc_best_ckpt.pth.tar

You can evaluate DPMesh using the following command:

CUDA_VISIBLE_DEVICES=0 \
torchrun \
--master_port 29591 \
--nproc_per_node 1 \
eval.py \
--cfg ./configs/main_train.yml \
--exp_id="main_train" \
--distributed

Evaluation can be run on a single NVIDIA GeForce RTX 4090 GPU (24GB VRAM). To use more GPUs, list their ids in CUDA_VISIBLE_DEVICES and set --nproc_per_node to the number of GPUs.
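To make the relationship between the GPU ids and the process count explicit, here is a small sketch that assembles the same command for an arbitrary list of GPUs. The helper `build_torchrun_command` is hypothetical and not part of this repository; it only reuses the flags shown in the command above.

```python
import os
import shlex


def build_torchrun_command(script, gpu_ids, cfg="./configs/main_train.yml",
                           exp_id="main_train", master_port=29591):
    """Return (env, argv) for launching `script` on the given GPU ids."""
    env = dict(os.environ)
    # One process per visible GPU, so the two values must stay in sync.
    env["CUDA_VISIBLE_DEVICES"] = ",".join(str(g) for g in gpu_ids)
    argv = [
        "torchrun",
        "--master_port", str(master_port),
        "--nproc_per_node", str(len(gpu_ids)),
        script,
        "--cfg", cfg,
        f"--exp_id={exp_id}",
        "--distributed",
    ]
    return env, argv


env, argv = build_torchrun_command("eval.py", gpu_ids=[0, 1])
print("CUDA_VISIBLE_DEVICES=" + env["CUDA_VISIBLE_DEVICES"], shlex.join(argv))
# To actually launch: subprocess.run(argv, env=env, check=True)
```

The sketch only prints the command; launching it is left as a commented line so that it can be inspected first.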

You can render a video with the recovered human mesh drawn on it. First, install the requirements for rendering. Here are some tips from OSX:

1. Install OSMesa following https://pyrender.readthedocs.io/en/latest/install/

2. Reinstall the specific pyopengl fork: https://github.com/mmatl/pyopengl

3. Set the OpenGL backend to egl or osmesa via os.environ["PYOPENGL_PLATFORM"] = "egl"
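The backend selection in the last step only takes effect if it happens before pyrender (and therefore PyOpenGL) is imported. A minimal sketch of the ordering follows; where exactly this belongs in the repository's scripts is an assumption, so it is shown as a standalone snippet.

```python
import os

# Choose the headless backend before any pyrender / OpenGL import.
# "egl" targets GPU rendering; "osmesa" is the software fallback.
# setdefault keeps a value already exported in the shell.
os.environ.setdefault("PYOPENGL_PLATFORM", "egl")

# Import pyrender only after this point, for example:
#   import pyrender
print("PYOPENGL_PLATFORM =", os.environ["PYOPENGL_PLATFORM"])
```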

Then follow the steps below to pre-process your video data.

- Create a directory in ./demo and save your video in ./demo/testvideo/testvideo.mp4

|-- demo

|--|-- testvideo

|--|--|-- annotations

|--|--|-- images

|--|--|-- renderimgs

|--|--|-- testvideo.mp4

- Split your video into image frames (using ffmpeg) and save the images in ./demo/testvideo/images

- Use an off-the-shelf detector such as AlphaPose to extract the keypoints of each person in each image, and save the .pkl results in ./demo/testvideo/annotations. You can use whatever detector you like, but please pay attention to the key-points format. Here we use the OpenPose format, which has 17 key-points for each person.

- Set renderimg = 'testvideo' in config.py and choose the pre-trained model to resume (like 3dpw_best_ckpt.pth.tar).
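The first two steps above (creating the folder layout and splitting the video into frames) can be sketched in Python as follows. The helpers `prepare_demo_dir` and `ffmpeg_split_command` are hypothetical, and the frame naming pattern `%06d.jpg` is an assumption; check which file names render.py expects before relying on it.

```python
import shutil
import subprocess
from pathlib import Path


def prepare_demo_dir(name, root="./demo"):
    """Create the annotations/images/renderimgs folders for one video."""
    base = Path(root) / name
    for sub in ("annotations", "images", "renderimgs"):
        (base / sub).mkdir(parents=True, exist_ok=True)
    return base


def ffmpeg_split_command(base, name, pattern="%06d.jpg"):
    """Build the ffmpeg argv that writes one image per video frame."""
    return ["ffmpeg", "-i", str(base / f"{name}.mp4"),
            str(base / "images" / pattern)]


if __name__ == "__main__":
    base = prepare_demo_dir("testvideo")
    cmd = ffmpeg_split_command(base, "testvideo")
    # Only run ffmpeg when it is installed and the video is in place.
    if shutil.which("ffmpeg") and (base / "testvideo.mp4").exists():
        subprocess.run(cmd, check=True)
    else:
        print("Would run:", " ".join(cmd))
```

The keypoint detection step is not sketched here because the expected layout of the .pkl file is not described in this document.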

Now you can run render.py to render the human mesh:

CUDA_VISIBLE_DEVICES=0 \
torchrun \
--master_port 29591 \
--nproc_per_node 1 \
render.py \
--cfg ./configs/main_train.yml \
--exp_id="render" \
--distributed

Finally, you can combine the rendered images in ./demo/testvideo/renderimgs into a video (ffmpeg works for this as well).
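As a rough illustration of this last step, the sketch below builds an ffmpeg command that encodes a numbered image sequence into an H.264 video. The helper `ffmpeg_combine_command` is hypothetical, and both the frame naming pattern and the frame rate are assumptions: use the names render.py actually writes and the frame rate of your source video.

```python
from pathlib import Path


def ffmpeg_combine_command(frame_dir, output, fps=30, pattern="%06d.jpg"):
    """Build the ffmpeg argv that encodes numbered frames into a video."""
    return [
        "ffmpeg",
        "-framerate", str(fps),              # input frame rate (assumed)
        "-i", str(Path(frame_dir) / pattern),  # numbered frames (assumed names)
        "-c:v", "libx264",
        "-pix_fmt", "yuv420p",               # widely playable pixel format
        str(output),
    ]


cmd = ffmpeg_combine_command("./demo/testvideo/renderimgs",
                             "./demo/testvideo/rendered.mp4")
print(" ".join(cmd))
# To actually encode: subprocess.run(cmd, check=True)
```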

Instead of computing the 3D joint coordinates during training, we precompute these annotations and save them in .pkl files. Please refer to this link for our annotations.

Now you can run train.py to train our model:

CUDA_VISIBLE_DEVICES=0,1,2,3,4,5,6,7 \
torchrun \
--master_port 29592 \
--nproc_per_node 8 \
train.py \
--cfg ./configs/main_train.yml \
--exp_id="train" \
--distributed

Furthermore, if you want to train from scratch to evaluate the potential of diffusion priors, you can uncomment the code in ./common/vpd/vpdencoder_useattn.py, line 38. You can download the OpenPose-ControlNet model from control_v11p_sd15_openpose.pth.

Enjoy it!

We would like to express our sincere thanks to the author of JOTR for the clear code base and quick response to our issues.

We also thank ControlNet, VPD and LoRA, as our code partially borrows from them.

- If you encounter the error below, please refer to this link for help.

RuntimeError: Subtraction, the `-` operator, with a bool tensor is not supported. If you are trying to invert a mask, use the `~` or `logical_not()` operator instead.

@inproceedings{zhu2024dpmesh,

title={DPMesh: Exploiting Diffusion Prior for Occluded Human Mesh Recovery},

author={Zhu, Yixuan and Li, Ao and Tang, Yansong and Zhao, Wenliang and Zhou, Jie and Lu, Jiwen},

booktitle={Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition},

pages={1101--1110},

year={2024}

}

This code is distributed under the MIT License.