mkdir ~/proxys

docker run --rm -t -v ~/proxys:/opt/scraper_app/scraper/proxys rattydave/proxy-scraper-checker

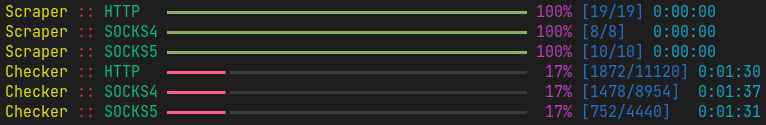

HTTP, SOCKS4, SOCKS5 proxies scraper and checker.

- Asynchronous.

- Uses regex to search for proxies (`ip:port` format) on a web page, which lets you pull proxies out of any text source, even JSON, without changing the code.

- Supports determining the geolocation of the proxy exit node.

- Can determine if a proxy is anonymous.
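To illustrate the regex-based extraction mentioned in the list above, here is a minimal sketch. The pattern and the `find_proxies` helper are assumptions made for illustration, not the project's actual regex or API; the sample JSON uses documentation-reserved IP addresses.

```python
import re

# Hypothetical pattern (not the project's own): an IPv4-looking host,
# a colon, and a port of 1 to 5 digits, not embedded in a longer number.
PROXY_RE = re.compile(r"(?<![\d.])((?:\d{1,3}\.){3}\d{1,3}):(\d{1,5})(?!\d)")


def find_proxies(text):
    """Return every plausible ip:port pair found in arbitrary text."""
    found = []
    for host, port in PROXY_RE.findall(text):
        octets_ok = all(int(octet) <= 255 for octet in host.split("."))
        port_ok = 0 < int(port) <= 65535
        if octets_ok and port_ok:
            found.append(f"{host}:{port}")
    return found


# The same function works on HTML, plain text, or JSON, because it never
# parses the surrounding structure.
sample = '{"data": [{"addr": "203.0.113.7:8080"}, {"addr": "198.51.100.23:1080"}]}'
print(find_proxies(sample))
```

Because the search runs over raw text, adding a new source only requires its URL, at the cost of occasionally matching strings that merely look like `ip:port`.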

For a version that uses Python's built-in logging instead of rich, see the simple-output branch.

- Make sure `Python` version is 3.7 or higher.

- Install dependencies from `requirements.txt` (`python -m pip install -U -r requirements.txt`).

- If you want to improve the performance, you can also install extra dependencies. See aiohttp documentation.

- Edit `config.py` according to your preference.

- Run `main.py`.

When the script finishes running, the following folders will be created (this behavior can be changed in the config):

- `proxies` - proxies with any anonymity level.

- `proxies_anonymous` - anonymous proxies.

- `proxies_geolocation` - same as `proxies`, but including exit-node's geolocation.

- `proxies_geolocation_anonymous` - same as `proxies_anonymous`, but including exit-node's geolocation.

Geolocation format is `ip:port::Country::Region::City`.
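If you consume the geolocation output from another script, a line in this format can be split as in the sketch below. The `GeoProxy` type and `parse_geo_line` function are hypothetical names introduced for illustration, and the sample line is made up.

```python
from typing import NamedTuple


class GeoProxy(NamedTuple):
    """One parsed line of the geolocation output (hypothetical helper type)."""
    host: str
    port: int
    country: str
    region: str
    city: str


def parse_geo_line(line):
    """Parse a line shaped like ip:port::Country::Region::City."""
    # Split on the double-colon separators first, so the single colon
    # between ip and port is left untouched.
    address, country, region, city = line.strip().split("::", 3)
    host, _, port = address.rpartition(":")
    return GeoProxy(host, int(port), country, region, city)


# Made-up sample line using a documentation-reserved IP address.
entry = parse_geo_line("203.0.113.7:8080::United States::California::San Francisco\n")
print(entry.host, entry.port, entry.country)
```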