Unlike classic adversarial examples, which are constrained to have a small L_p-norm distance from the normal examples, a localized adversarial patch attacker can arbitrarily modify the pixel values within a small region.

The attack algorithm is similar to those for the classic L_p adversarial example attack: you define a loss function and then optimize your perturbation to achieve the attack objective. The only differences are that 1) you can only optimize over pixels within a small region, but 2) within that region, the pixel values can be arbitrary as long as they are valid pixels.
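To make the two constraints concrete, here is a minimal sketch assuming a toy linear classifier whose logits are `weights @ x.ravel()`, so the gradient can be written by hand. The function name `patch_attack`, the margin loss, and the signed-gradient update are illustrative choices, not the algorithm of any particular paper. The binary `mask` encodes constraint 1), and clipping to [0, 1] encodes constraint 2).

```python
import numpy as np

def patch_attack(x, mask, weights, target, steps=100, step_size=0.05):
    """Targeted patch attack on a toy linear classifier (hypothetical setup).

    x:       clean image with values in [0, 1], shape (H, W)
    mask:    array of the same shape; nonzero marks pixels the attacker controls
    weights: classifier weights, shape (num_classes, H * W); logits = weights @ x.ravel()
    """
    mask = mask.astype(bool)
    # Start from a random patch; only its values under the mask are ever used.
    patch = np.random.default_rng(0).uniform(0.0, 1.0, size=x.shape)
    for _ in range(steps):
        x_adv = np.where(mask, patch, x)
        logits = weights @ x_adv.ravel()
        # Margin loss: target logit minus the largest competing logit.
        others = np.delete(np.arange(len(logits)), target)
        runner_up = others[np.argmax(logits[others])]
        grad = (weights[target] - weights[runner_up]).reshape(x.shape)
        # Signed-gradient ascent restricted to the patch region (constraint 1),
        # then projection back onto valid pixel values (constraint 2).
        patch = np.clip(patch + step_size * np.sign(grad) * mask, 0.0, 1.0)
    return np.where(mask, patch, x)
```

With a real network, the hand-written gradient would be replaced by automatic differentiation; the masking and clipping steps stay the same.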

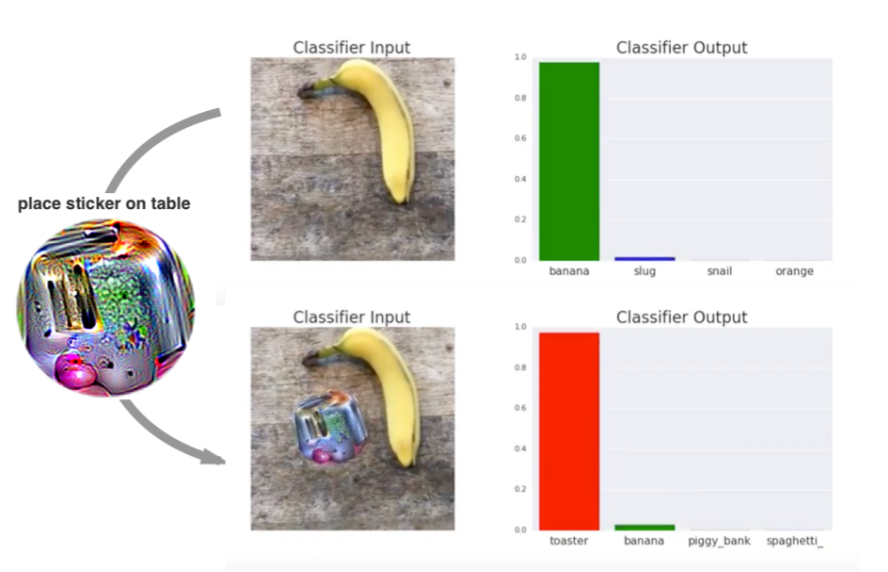

Example of localized adversarial patch attack (image from Brown et al.):

It can be realized in the physical world!

Since all perturbations are within a small region, we can print the patch and attach it to objects in the physical world. This type of attack poses a real-world threat to ML systems!

Note: not all existing physically-realizable attacks are in the category of patch attacks, but the localized patch attack is (one of) the simplest and the most popular physical attacks.

- Test-time attacks/defenses (localized backdoor triggers are not considered)

- 2D computer vision tasks (e.g., image classification, object detection, image segmentation)

- Localized attacks (other physical attacks that are more "global" are not considered, e.g., some stop sign attacks that require changing the entire stop sign background)

- More on defenses: I try to provide a comprehensive list of defense papers, while the list of attack papers might be incomplete

- Empirical defense: defenses that are heuristic-based and have little security guarantee against an adaptive attacker

- Provably robust defenses / certifiably robust defenses / certified defenses: we can prove the robustness for certain certified images. The robustness guarantee holds for any adaptive white-box attacker within the threat model

I am still developing this paper list (I haven't added notes for all papers). If you want to contribute to the paper list, add your paper, correct any of my comments, or share any of your suggestions, feel free to reach out :)

arXiv 1712; NeurIPS workshop 2017

- The first paper that introduces the concept of adversarial patch attacks

- Demonstrate a universal physical world attack

arXiv 1801; ICML 2018

- Seems to be concurrent work (?) with "Adversarial Patch"

- Digital domain attack

arXiv 2004; ECCV 2020

- a black-box attack via reinforcement learning

Springer 2021

- data-independent attack; attack by increasing the magnitude of feature values

arXiv 2102

- use 3D modeling to enhance physical-world patch attack

arXiv 2104

- add stickers to faces to fool face recognition systems

arXiv 2106; CVPR 2021

- focus on transferability

arXiv 2106; an old version is available at arXiv 2009

- generate small (inconspicuous) and localized perturbations

arXiv 2108

- consider physical-world patch attacks in 3D space (images are taken from different angles)

https://arxiv.org/pdf/2106.09222.pdf


CVPR workshop 2018

- The first empirical defense. Use saliency map to detect and mask adversarial patches.

arXiv 1807; WACV 2019

- An empirical defense. Use pixel gradients to detect the patch and smooth the suspected regions.

arXiv 1909; ICLR 2020

- Empirical defense via adversarial training

- Interestingly, show that adversarial training against patch attacks does not hurt the model's clean accuracy

- Only works on small images

ICLR 2020

The first certified defense.

- Show that the previous two empirical defenses (DW and LGS) are broken by an adaptive attacker

- Adapt IBP (Interval Bound Propagation) for certified defense

- Evaluate robustness against different shapes

- Very expensive; only works for CIFAR-10
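A minimal sketch of the interval bound propagation step under a patch threat model, assuming a small fully connected ReLU network given as a list of hypothetical `(W, b)` pairs and a flattened input in [0, 1]. Pixels under the patch get the interval [0, 1], all other pixels are fixed, and the box is pushed through each layer. This simplified check compares the lower bound of the true logit with the upper bounds of the other logits (looser than bounding logit differences directly), and it covers a single patch location only; a full certificate must hold for every allowed location, and repeating the bound for all of them is one reason this kind of certification gets costly on larger images.

```python
import numpy as np

def interval_linear(lower, upper, W, b):
    """Propagate the box [lower, upper] through y = W @ x + b (exact for a box)."""
    center = (upper + lower) / 2.0
    radius = (upper - lower) / 2.0
    out_center = W @ center + b
    out_radius = np.abs(W) @ radius
    return out_center - out_radius, out_center + out_radius

def certify_patch_ibp(x, mask, layers, label):
    """Return True if IBP proves no patch on `mask` can change the prediction.

    x:      flattened clean input in [0, 1]
    mask:   flattened 0/1 array marking the patch pixels (one location)
    layers: list of (W, b) pairs with a ReLU between consecutive layers
    """
    # Patch pixels may take any valid value; all other pixels are fixed.
    lower = np.where(mask, 0.0, x)
    upper = np.where(mask, 1.0, x)
    for i, (W, b) in enumerate(layers):
        lower, upper = interval_linear(lower, upper, W, b)
        if i < len(layers) - 1:
            # ReLU is monotone, so it can be applied to both bounds directly.
            lower, upper = np.maximum(lower, 0.0), np.maximum(upper, 0.0)
    # Certified if the worst-case true logit beats every other logit's best case.
    others = np.delete(upper, label)
    return bool(lower[label] > others.max())
```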

IEEE S&P Workshop on Deep Learning Security 2020

- Certified defense; clip BagNet features

- Efficient

arXiv 1812; IEEE S&P Workshop on Deep Learning Security 2020

- Empirical defense that leverages the universality of the attack (inapplicable to non-universal attacks)

arXiv 2002; NeurIPS 2020

- Certified defense; adapt ideas of randomized smoothing to the $L_0$ adversary

- Majority voting on predictions made from cropped pixel patches

- Scale to ImageNet but expensive
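The majority-voting certificate can be sketched as follows, assuming the per-crop predictions are already computed and that `delta`, the maximum number of crops a single patch can intersect, is known. Each affected crop can take at most one vote from the winner and give it to a competitor, so a vote margin larger than `2 * delta` suffices; tie-breaking refinements are ignored. The helper `bands_hit_by_patch` assumes one column band of width `band_width` at every column offset (with wrap-around), in which case a patch `patch_width` pixels wide touches at most `patch_width + band_width - 1` bands. Both function names are hypothetical.

```python
from collections import Counter

def majority_vote_certify(crop_predictions, delta):
    """Majority vote over per-crop labels plus a simple robustness check.

    crop_predictions: list of class labels, one per cropped/ablated input
    delta: max number of crops one adversarial patch can intersect
    Returns (predicted_label, certified).
    """
    ranked = Counter(crop_predictions).most_common()
    top_label, top_count = ranked[0]
    runner_up_count = ranked[1][1] if len(ranked) > 1 else 0
    # Worst case: every affected crop switches from the winner to the runner-up.
    certified = top_count - runner_up_count > 2 * delta
    return top_label, certified

def bands_hit_by_patch(patch_width, band_width):
    """Bands a patch can touch, assuming one band per column offset."""
    return patch_width + band_width - 1
```

For example, with 20 votes for class 3 and 5 for the runner-up, the prediction is certified for `delta = 7` (margin 15 > 14) but not for `delta = 8`.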

arXiv 2002

- empirical defense

arXiv 2004; ACNS workshop 2020

- Certified defense for detecting an attack

- Apply masks at different locations of the input image and check for inconsistency among the masked predictions

- Too expensive to scale to ImageNet (?)
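The masking-and-consistency idea above can be sketched like this, assuming a hypothetical `predict` callable that maps an image array to a label, square masks filled with zeros, and a grid of mask positions chosen so that at least one mask fully covers any allowed patch. This is a simplified illustration of the detection rule described in the note, not the paper's exact procedure.

```python
import numpy as np

def masked_predictions(image, predict, mask_size, stride):
    """Predict on copies of `image` with a square window zeroed out at each location."""
    h, w = image.shape[:2]
    preds = []
    for top in range(0, h - mask_size + 1, stride):
        for left in range(0, w - mask_size + 1, stride):
            masked = image.copy()
            masked[top:top + mask_size, left:left + mask_size] = 0.0
            preds.append(predict(masked))
    return preds

def detect_patch(image, predict, mask_size, stride):
    """Flag a possible attack when the masked predictions disagree."""
    preds = masked_predictions(image, predict, mask_size, stride)
    return len(set(preds)) > 1, preds
```

The intuition: on a clean image every masked prediction should agree, while a mask that covers the patch removes its influence and produces a different label.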

PatchGuard: A Provably Robust Defense against Adversarial Patches via Small Receptive Fields and Masking

arXiv 2005; USENIX Security 2021

- Certified defense framework with two general principles: small receptive field to bound the number of corrupted features and secure aggregation for final robust prediction

- BagNet for small receptive fields; robust masking for secure aggregation, which detects and masks malicious feature values

- Efficient; SOTA performance (in terms of both clean accuracy and provable robust accuracy)

- Subsumes several existing and follow-up papers

- Not parameter-free
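A rough sketch of robust masking over a grid of local class evidence (e.g., BagNet-style local logits), assuming a `(H, W, C)` array and a window size `win` equal to the number of feature cells a patch can corrupt along each side. The version here is simplified: it always removes the highest-evidence window for each class instead of first running a detection test, and the function names are hypothetical.

```python
import numpy as np

def window_sums(evidence, win):
    """Sum of `evidence` (H, W) over every win x win sliding window."""
    h, w = evidence.shape
    out = np.empty((h - win + 1, w - win + 1))
    for i in range(h - win + 1):
        for j in range(w - win + 1):
            out[i, j] = evidence[i:i + win, j:j + win].sum()
    return out

def robust_masking_predict(local_logits, win):
    """Simplified robust masking on a (H, W, C) grid of local class evidence.

    For every class, the window with the largest evidence sum is treated as
    possibly corrupted and removed before the class totals are compared.
    """
    evidence = np.clip(local_logits, 0.0, None)  # keep only nonnegative evidence
    masked_totals = []
    for c in range(evidence.shape[-1]):
        total = evidence[..., c].sum()
        worst_window = window_sums(evidence[..., c], win).max()
        masked_totals.append(total - worst_window)
    return int(np.argmax(masked_totals))
```

Because a small receptive field bounds how many cells a patch can touch, a single concentrated burst of adversarial evidence (as in the usage below, where one cell carries a huge score for class 1) is removed by the mask, while evidence spread across the image survives.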

arXiv 2005; ECCV workshop 2020

- empirical defense via adversarial training (in which the patch location is being optimized)

arXiv 2009; ACCV 2020

Available on ICLR open review in 10/2020; ICLR 2021

- Certified defense

- BagNet to bound the number of corrupted features; Heaviside step function & majority voting for secure aggregation

- SOTA performance on CIFAR-10

- Efficient, evaluate on different patch shapes

Available on ICLR open review in 10/2020

- Certified defense

- Randomized image cropping + majority voting

- only probabilistic certified robustness

Robustness Out of the Box: Compositional Representations Naturally Defend Against Black-Box Patch Attacks

arXiv 2012

- empirical defense; directly use CompNet to defend against black-box patch attack (evaluated with PatchAttack)

CISS 2021

- An empirical defense against black-box patch attacks

- A direct application of CompNet

arXiv 2104; ICLR workshop 2021

- Certified defense for detecting an attack

- A hybrid of PatchGuard and Minority Report

- SOTA provable robust accuracy (for attack detection) and clean accuracy on ImageNet

arXiv 2105

- An empirical defense that uses the magnitude and variance of the feature map values to detect an attack

- focus more on universal attacks (both localized patches and global perturbations)

Turning Your Strength against You: Detecting and Mitigating Robust and Universal Adversarial Patch Attack

arXiv 2108

- empirical defense; use universality

arXiv 2108

- Certified defense that is compatible with any state-of-the-art image classifier

- huge improvements in clean accuracy and certified robust accuracy (its clean accuracy is close to that of SOTA image classifiers)


TODO

see Table 2 of the PatchCleanser paper for a comprehensive comparison

arXiv 1806; AAAI workshop 2019

- The first (?) patch attack against object detector

arXiv 1904; CVPR workshop 2019

- using a rigid board printed with adversarial perturbations to evade person detection

arXiv 1906

- interestingly, show that a physical-world patch in the background (far away from the victim objects) can have a malicious effect

arXiv 1909

CCS 2019

arXiv 1910; ECCV 2020

- use a non-rigid T-shirt to evade person detection

arXiv 1910; ECCV 2020

- wear an ugly T-shirt to evade person detection

arXiv 1912

IEEE IoT-J 2020

arXiv 2008

arXiv 2010; IJCNN 2020

arXiv 2010; CIKM workshop

arXiv 2010

arXiv 2010

arXiv 2103; ICME 2021

arXiv 2105

Evaluating the Robustness of Semantic Segmentation for Autonomous Driving against Real-World Adversarial Patch Attacks

arXiv 2108

https://arxiv.org/abs/1802.06430


arXiv 1910; CVPR workshop 2020

- The first empirical defense, adding a regularization loss to constrain the use of spatial information

- only experiment on YOLOv2 and small datasets like PASCAL VOC

arXiv 210; ICML 2021 workshop

arXiv 2102

- The first certified defense for patch hiding attack

- Adapt robust image classifiers for robust object detection

- Provable robustness at a negligible cost of clean performance

arXiv 2103

- Empirical defense via adding adversarial patches and a "patch" class during the training

arXiv 2106

- Two empirical defenses for patch hiding attack

- Feed small image regions to the detector; grow the region with some heuristics; detect an attack when YOLO detects objects in a smaller region but misses them in the larger expanded region.
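The final inconsistency check can be sketched as below, assuming boxes in `(x1, y1, x2, y2)` full-image coordinates and a hypothetical IoU threshold of 0.5 for deciding that two detections refer to the same object. The region-growing heuristics themselves are not reproduced; only the "seen in the small region, missing in the larger one" test is shown, and the function names are illustrative.

```python
def iou(a, b):
    """Intersection over union of two boxes given as (x1, y1, x2, y2)."""
    ix1, iy1 = max(a[0], b[0]), max(a[1], b[1])
    ix2, iy2 = min(a[2], b[2]), min(a[3], b[3])
    inter = max(0.0, ix2 - ix1) * max(0.0, iy2 - iy1)
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    union = area_a + area_b - inter
    return inter / union if union > 0 else 0.0

def hiding_alert(small_region_boxes, large_region_boxes, iou_threshold=0.5):
    """Alert if an object detected in the small region is missing from the larger one."""
    for box in small_region_boxes:
        # No sufficiently overlapping detection in the larger region: the object
        # may have been hidden by a patch that only acts on the larger context.
        if all(iou(box, other) < iou_threshold for other in large_region_boxes):
            return True
    return False
```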