²School of Artificial Intelligence, University of Chinese Academy of Sciences

⭐ If LoRA-IR is helpful for your projects, please consider starring this repo. Thanks! 🤗

- 2024.10.20: Released the training and inference code and the pre-trained models for Setting Ⅰ.

- 2024.10.20: This repo was created.


Clone this repo and navigate to the LoRA-IR folder:

    git clone https://github.com/shallowdream204/LoRA-IR.git
    cd LoRA-IR

Create a Conda environment and install the required packages:

    conda create -n lorair python=3.11 -y
    conda activate lorair
    conda install pytorch==2.3.1 torchvision==0.18.1 torchaudio==2.3.1 pytorch-cuda=12.1 -c pytorch -c nvidia
    pip3 install -r requirements.txt
    python3 setup.py develop --no_cuda_ext
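After creating the environment, it can help to confirm that the pinned PyTorch packages actually resolved to the intended versions before training. The sketch below is a hypothetical helper (it is not shipped with LoRA-IR); it assumes the installed distributions are named `torch`, `torchvision`, and `torchaudio`, relies only on `importlib.metadata` from the standard library, and imports `torch` only at the end to report whether CUDA is visible.

```python
"""Hypothetical environment check for LoRA-IR (not part of the repo)."""
from importlib import metadata

# Versions pinned by the conda install command above (assumed distribution names).
EXPECTED = {
    "torch": "2.3.1",
    "torchvision": "0.18.1",
    "torchaudio": "2.3.1",
}


def check_versions(expected=EXPECTED):
    """Return {package: problem} for each package that is missing or mismatched."""
    problems = {}
    for name, want in expected.items():
        try:
            got = metadata.version(name)
        except metadata.PackageNotFoundError:
            problems[name] = "not installed"
            continue
        # pip wheels may carry a local tag such as "+cu121"; compare the base version only.
        if got.split("+")[0] != want:
            problems[name] = f"found {got}, expected {want}"
    return problems


if __name__ == "__main__":
    issues = check_versions()
    for name, msg in issues.items():
        print(f"{name}: {msg}")
    if not issues:
        print("torch/torchvision/torchaudio match the pinned versions")
        try:
            import torch  # imported lazily so the version check works without it

            print("CUDA available:", torch.cuda.is_available())
        except ImportError:
            pass
```

Run it inside the activated `lorair` environment, for example as `python3 check_env.py` (the file name is illustrative). An empty result from `check_versions()` means every pinned package was found with the expected version.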

Training and testing instructions for each setting are provided in the corresponding directories. The table below summarizes them, with links for easy navigation:

| Setting | Training Instructions | Evaluation Instructions | Pre-trained Models |
|---|---|---|---|
| Setting Ⅰ | Link | Link | Download |

The provided code and pre-trained weights are licensed under the Apache 2.0 license.

This code is based on NAFNet and BasicSR. Some code is borrowed from loralib, LLaVA, and Restormer. We thank the authors for their excellent work.

If you have any questions, please feel free to reach out to me at shallowdream555@gmail.com.

If you find our work useful for your research, please consider citing our paper:

@article{ai2024lora,

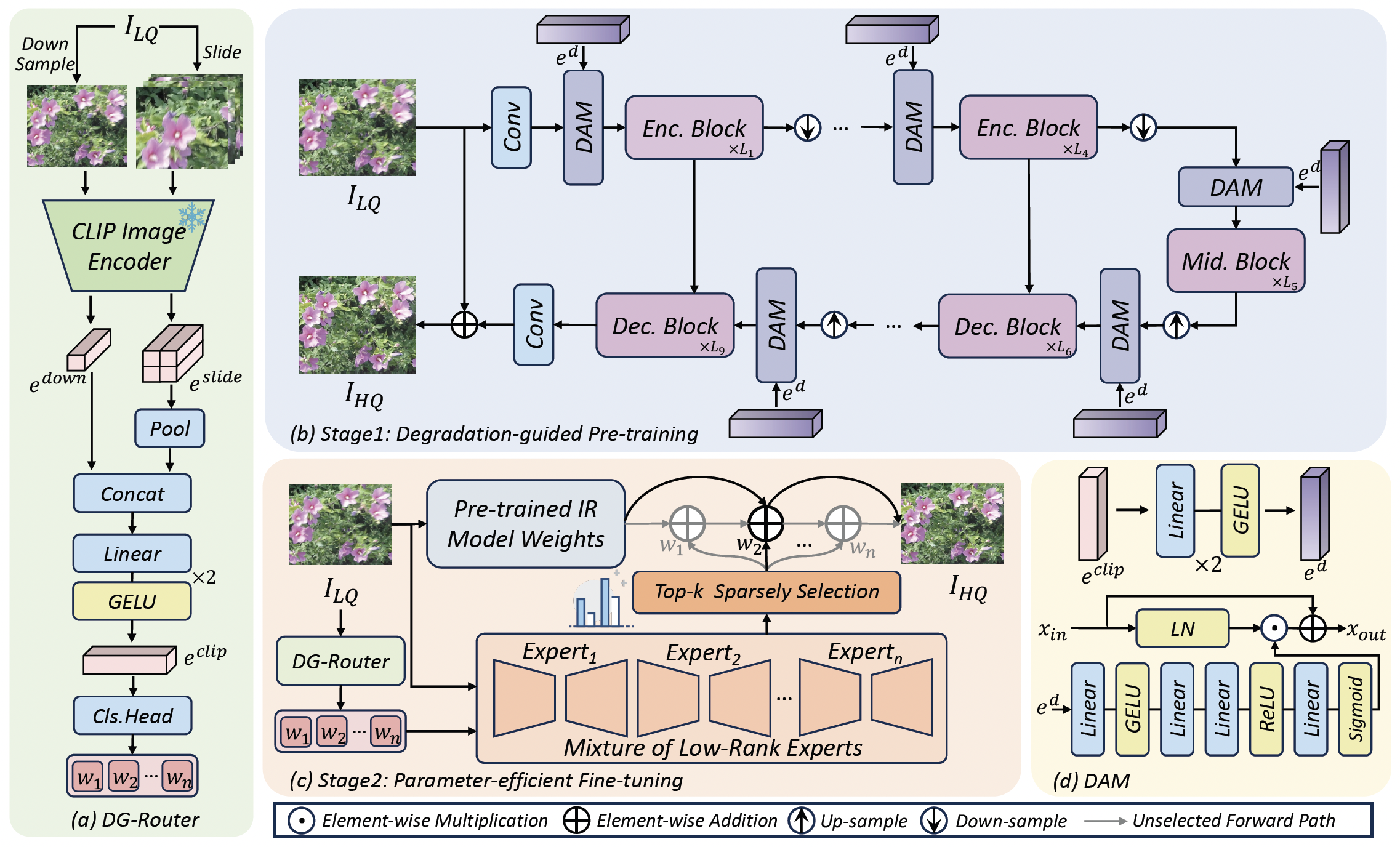

title={LoRA-IR: Taming Low-Rank Experts for Efficient All-in-One Image Restoration},

author={Ai, Yuang and Huang, Huaibo and He, Ran},

journal={arXiv preprint arXiv:2410.15385},

year={2024}

}