An example that showcases the benefit of running AI inside Redis.

This repository contains the backend web app built with Flask, the front end built with Angular (compiled to plain JavaScript), and the model files the chatbot needs to work. Follow the steps below to bring the chatbot up.

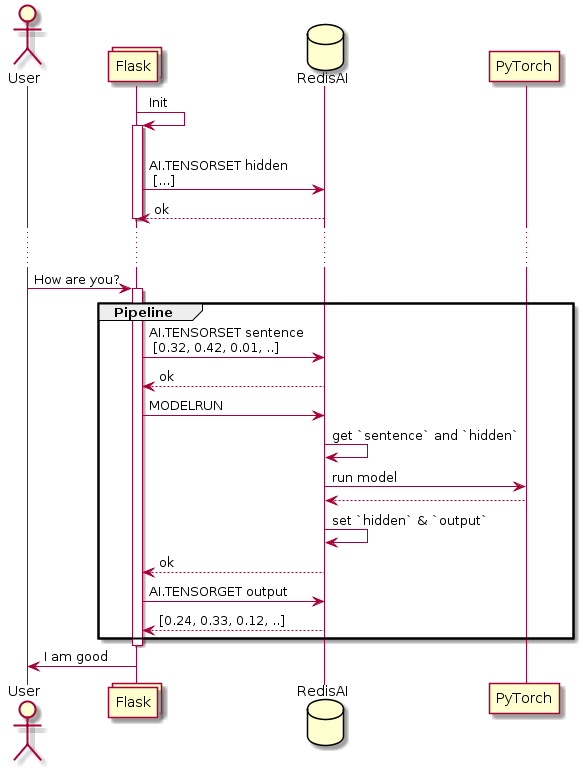

When the Flask application starts, it sets an initial hidden tensor. This hidden tensor represents the intermediate state of the conversation. For each new message, a sentence tensor and the hidden tensor are passed through the model, which produces an output and overwrites the hidden tensor with the new intermediate state.

(Note: this diagram is simplified; the full flow can be followed here.)
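The stateful loop described above can be sketched in plain Python. This is a minimal illustration under several assumptions: tensors are Python lists, the initial hidden tensor is all zeros, and `toy_model` is a hypothetical stand-in for the chatbot's real model (the demo stores its encoder, decoder and tensors as separate Redis keys and runs them there; this sketch collapses them into one call). Only the state handling mirrors the description: each message is combined with the current hidden tensor, and the returned hidden tensor replaces the old one.

```python
from typing import Callable, List, Tuple

Tensor = List[float]
# A model takes (sentence tensor, hidden tensor) and returns (output, new hidden tensor).
Model = Callable[[Tensor, Tensor], Tuple[Tensor, Tensor]]


def toy_model(sentence: Tensor, hidden: Tensor) -> Tuple[Tensor, Tensor]:
    """Hypothetical stand-in model: adds the sentence mean to every hidden unit
    and also returns the updated hidden tensor as the output."""
    mean = sum(sentence) / len(sentence)
    new_hidden = [h + mean for h in hidden]
    return list(new_hidden), new_hidden


class Conversation:
    """Holds the hidden tensor between messages (the demo keeps it in Redis under `hidden`)."""

    def __init__(self, model: Model, hidden_size: int) -> None:
        self.model = model
        # Set once at startup; all-zeros is an assumption made for this sketch.
        self.hidden: Tensor = [0.0] * hidden_size

    def on_message(self, sentence: Tensor) -> Tensor:
        # Pass the sentence tensor and the current hidden tensor through the model.
        output, new_hidden = self.model(sentence, self.hidden)
        # Overwrite the stored state with the new intermediate state.
        self.hidden = new_hidden
        return output
```

Because the hidden tensor persists between calls, sending the same sentence twice yields different outputs, which is how a stateful model like this carries context from earlier messages.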

Prerequisites:

- Docker
- Docker Compose

Clone the repository and start the containers:

```shell
$ git clone git@github.com:RedisAI/ChatBotDemo.git
$ cd ChatBotDemo
$ docker-compose up
```

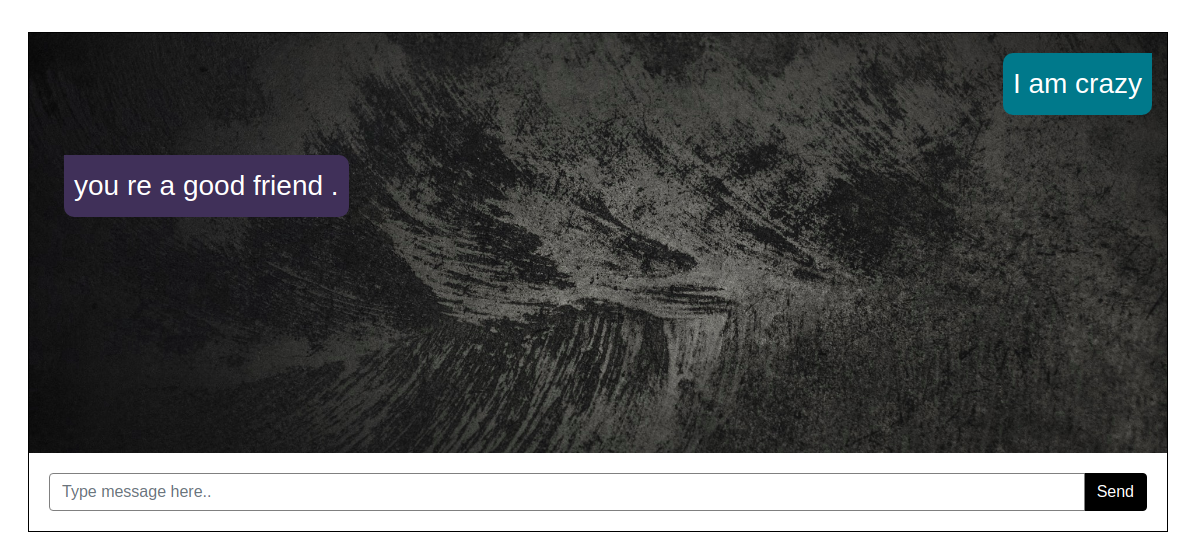

Try out the API with curl as shown below. There is only one endpoint, `/chat`, which accepts a JSON body with the key `message` and your message as its value.

```shell
curl http://localhost:5000/chat -H "Content-Type: application/json" -d '{"message": "I am crazy"}'
```
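The same request can be sent from Python using only the standard library. This is a sketch: `build_chat_request` and `send_chat` are hypothetical helper names, and because the response format of `/chat` is not described here, `send_chat` returns the raw response body instead of parsing it.

```python
import json
import urllib.request

# Default address exposed by the docker-compose setup above.
CHAT_URL = "http://localhost:5000/chat"


def build_chat_request(message: str, url: str = CHAT_URL) -> urllib.request.Request:
    """Build a POST request whose JSON body is {"message": <message>}."""
    body = json.dumps({"message": message}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def send_chat(message: str, url: str = CHAT_URL, timeout: float = 10.0) -> str:
    """Send a message to the /chat endpoint and return the response body as text."""
    request = build_chat_request(message, url)
    with urllib.request.urlopen(request, timeout=timeout) as response:
        return response.read().decode("utf-8")
```

With the containers running, `send_chat("I am crazy")` sends the same request as the curl command. Keeping request construction separate from sending makes the payload and headers checkable without a running server.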

Open a second terminal and inspect the keys:

```
$ redis-cli
127.0.0.1:6379> keys *
1) "d_output"
2) "decoder"
3) "hidden"
4) "encoder"
5) "e_output"
6) "d_input"
7) "sentence"
127.0.0.1:6379> type hidden
AI_TENSOR
```
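The same inspection (`keys *` followed by `type <key>`) can be scripted. The sketch below assumes a client exposing redis-py's `keys()` and `type()` methods; `describe_keys` is a hypothetical helper name, and it accepts both `bytes` replies (redis-py's default) and `str` replies (when `decode_responses=True`).

```python
from typing import Dict, Protocol, Union


class KeyTypeClient(Protocol):
    """The subset of a redis-py style client used here."""

    def keys(self, pattern: str = "*"): ...

    def type(self, name): ...


def _to_str(value: Union[bytes, str]) -> str:
    # redis-py returns bytes unless the client was created with decode_responses=True.
    return value.decode("utf-8") if isinstance(value, bytes) else value


def describe_keys(client: KeyTypeClient) -> Dict[str, str]:
    """Map every key name to its Redis type, like `keys *` then `type <key>` in redis-cli."""
    return {_to_str(key): _to_str(client.type(key)) for key in client.keys("*")}
```

With redis-py installed, pass `redis.Redis(host="localhost", port=6379)` as the client; the `hidden` key should report `AI_TENSOR`, as in the session above. `KEYS` blocks the server while it scans, which is fine for this small demo; on larger databases an incremental `SCAN` (`scan_iter` in redis-py) is the usual alternative.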

Open a browser and point it to http://localhost:5000.