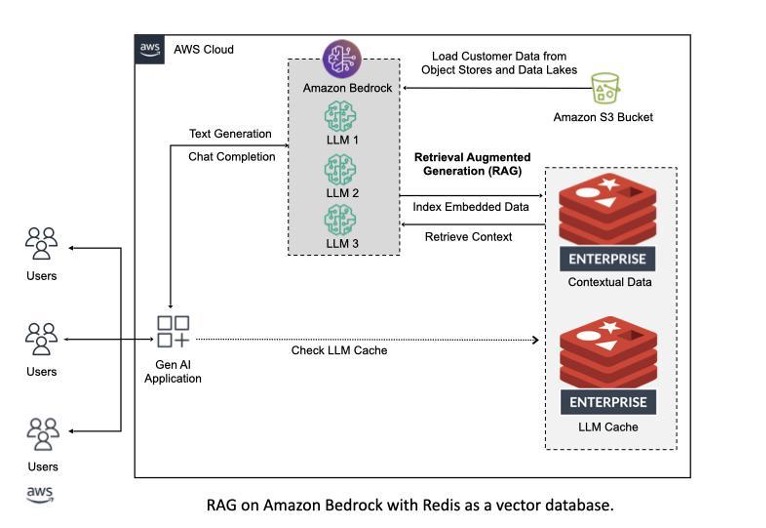

💪🏼 Introducing the preview integration of Amazon Bedrock and Redis Enterprise Cloud: a game-changer for building LLM applications. This collaboration offers a robust, scalable, and efficient solution for developers, streamlining the use of LLMs with Redis as a vector database. Dive into our new reference architecture to harness the full potential of Retrieval-Augmented Generation (RAG) in your LLM agents.

🧠 The reference architecture above shows Agents for Amazon Bedrock working with Redis Enterprise Cloud, which serves as both the knowledge base for RAG and the LLM cache. The Bedrock integration handles the following for customers:

- Loading raw source documents from Amazon S3

- Chunking the documents and creating vector embeddings with a chosen embedding model

- Storing and indexing embeddings within Redis Enterprise Cloud as a vector database

- Performing semantic search to extract relevant context from the vector database during RAG
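The four steps above can be sketched in plain Python to show how the data flows. This is only a toy illustration, not the Bedrock integration itself: `chunk`, `embed`, `build_index`, and `search` are hypothetical helpers, the hashed bag-of-words `embed` stands in for a real embedding model, and an in-memory list stands in for both Amazon S3 and Redis Enterprise Cloud.

```python
import hashlib
import math
import re

DIM = 256  # toy embedding size (assumption); real models define their own dimension


def chunk(text, max_words=40):
    """Split a document into fixed-size word windows."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text):
    """Toy stand-in for an embedding model: hashed bag of words, L2-normalized."""
    vec = [0.0] * DIM
    for token in re.findall(r"[a-z0-9]+", text.lower()):
        bucket = int(hashlib.md5(token.encode("utf-8")).hexdigest(), 16) % DIM
        vec[bucket] += 1.0
    norm = math.sqrt(sum(v * v for v in vec)) or 1.0
    return [v / norm for v in vec]


def build_index(documents):
    """Chunk and embed every document; the list plays the role of the vector database."""
    return [(c, embed(c)) for doc in documents for c in chunk(doc)]


def search(index, query, k=1):
    """Return the k chunks with the highest cosine similarity to the query."""
    q = embed(query)
    scored = [(sum(a * b for a, b in zip(q, vec)), text) for text, vec in index]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [text for _, text in scored[:k]]


# Hypothetical source documents standing in for objects loaded from Amazon S3.
docs = [
    "Redis Enterprise Cloud stores vector embeddings and serves similarity queries.",
    "Amazon S3 holds the raw source documents before they are chunked.",
]
index = build_index(docs)
top = search(index, "Where are the raw source documents kept?")
```

In a RAG flow, the chunks returned by `search` would be added to the prompt as context before the LLM is called.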

⚡ Additionally, Redis can serve as an LLM cache: answers to repeated prompts are returned from Redis instead of being regenerated, which improves response throughput and cuts down on model invocation costs.
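A minimal sketch of the caching idea, assuming an exact-match cache keyed by a hash of the normalized prompt. The names `DictStore`, `cache_key`, `cached_completion`, and `fake_llm` are hypothetical and exist only for illustration; `DictStore` is an in-memory stand-in that mimics the `get`/`set` calls of a Redis client so the example runs without a server.

```python
import hashlib


class DictStore:
    """In-memory stand-in exposing the get/set subset that a Redis client offers."""

    def __init__(self):
        self._data = {}

    def get(self, key):
        return self._data.get(key)

    def set(self, key, value):
        self._data[key] = value


def cache_key(prompt):
    """Normalize case and whitespace, then hash, so equivalent prompts share one key."""
    normalized = " ".join(prompt.lower().split())
    return "llmcache:" + hashlib.sha256(normalized.encode("utf-8")).hexdigest()


def cached_completion(store, prompt, call_llm):
    """Return a cached answer when present; otherwise call the model and store the result."""
    key = cache_key(prompt)
    hit = store.get(key)
    if hit is not None:
        return hit
    answer = call_llm(prompt)
    store.set(key, answer)
    return answer


calls = []  # records every prompt that actually reaches the (fake) model


def fake_llm(prompt):
    """Hypothetical placeholder for a real model invocation."""
    calls.append(prompt)
    return f"answer to: {prompt}"


store = DictStore()
first = cached_completion(store, "What is RAG?", fake_llm)
second = cached_completion(store, "  what is   RAG? ", fake_llm)  # served from the cache
```

Swapping `DictStore` for a real client such as `redis.Redis(decode_responses=True)` should work with the same `get`/`set` calls, and passing `ex=<seconds>` to `set` would expire stale answers. A semantic cache, which matches prompts by embedding similarity rather than exact text, is a separate technique not shown here.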

Use the official Redis Cloud docs to get started, or for a more step-by-step experience, follow the guides below:

To set up Redis Enterprise Cloud as your vector database via the AWS Marketplace, please follow the instructions here.

To add your deployed database credentials to AWS Secrets Manager, please follow along here.

To create your Bedrock vector index in Redis, please follow the instructions here.
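For orientation, a vector index in Redis is created with the `FT.CREATE` command. The sketch below only assembles that command's arguments. The index name, key prefix, field names (`text`, `metadata`, `vector`), the HNSW algorithm, and the dimension of 1536 are all assumptions made for illustration; the dimension must match the output size of the embedding model you choose, and the linked instructions remain the authoritative source for the values Bedrock expects.

```python
def build_ft_create(index_name, prefix, dim=1536, algorithm="HNSW",
                    text_field="text", metadata_field="metadata",
                    vector_field="vector"):
    """Return the argument list for an FT.CREATE command over Redis hashes."""
    # Attribute name/value pairs for the vector field; FT.CREATE needs their count.
    vector_attrs = ["TYPE", "FLOAT32", "DIM", str(dim), "DISTANCE_METRIC", "COSINE"]
    return [
        "FT.CREATE", index_name,
        "ON", "HASH",
        "PREFIX", "1", prefix,
        "SCHEMA",
        text_field, "TEXT",
        metadata_field, "TEXT",
        vector_field, "VECTOR", algorithm, str(len(vector_attrs)), *vector_attrs,
    ]


# Hypothetical index name and key prefix.
args = build_ft_create("bedrock-idx", "bedrock:")
command = " ".join(args)
```

The resulting list could be sent with redis-py via `redis.Redis(...).execute_command(*args)`, or the joined `command` string pasted into `redis-cli`.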

COMING SOON

Go ahead and get a taste of Amazon Bedrock by running a few examples yourself. These will be updated continuously once the integration is live and as community developers add more examples.

Having issues with the integration? Get in touch with a Redis expert today. For anything else, open an issue on the GitHub repo here.