Repository for benchmarking different systems using programs from the Computer Language Benchmarks Game.

This project contains the following directories:

- `bencher`: C binary which runs and times benchmark programs (see Benchmark Procedure)

- `benchmarks`: Files related to benchmark programs, split into directories of different benchmark types (see Selecting Programs, Compiling, Benchmarking)

- `cargo`: Empty cargo project used to compile dependencies (see Rust)

- `output`: Will contain the bencher binary as well as files to diff program output against (see Benchmark Procedure)

- `plots`: Scripts related to making plots using gnuplot (see Plotting)

- `scripts`: Various utility scripts (see Selecting Programs, Rust, Benchmark Procedure, iPerf)

- `tmp`: Mounting directory for a tmpfs file system to hold program output during benchmark runs (see Benchmark Procedure)

These benchmarks exist to evaluate the performance of the HiFive Unleashed developer board for the Freedom U540 SoC. A Raspberry Pi 4B is used as a baseline for the measurements.

The Freedom U540 SoC has 4 RV64GC application cores and 1 RV64IMAC management core. Each application core has 32 KiB each of instruction and data cache, while the management core has 16 KiB of instruction cache and 8 KiB of tightly integrated memory. 2 MB of L2 cache then connects to 8 GB of DDR4 RAM. All of these memory layers also use ECC.

Operating system and programs are stored on a Micro-SD card.

The BCM2711 on the Raspberry Pi has 4 ARM Cortex-A72 cores. They each contain 48 KiB of instruction cache and 32 KiB of data cache. 1 MB of L2 cache then connects to 4 GB of LPDDR4 RAM.

Operating system and programs are stored on a Micro-SD card.

Some files in this repository are the result of executing the following steps. These files are currently configured for the HiFive Unleashed and the Raspberry Pi 4B.

Programs are selected from the extracted zip of all programs using `scripts/extract.lua`.

- Edit the `dirs` table to change how benchmark names are mapped

- Edit the `c_exclude` and `rs_exclude` tables to blacklist patterns that may not work in your testing environments (usually include / use statements)

- Run `lua scripts/extract.lua <path-to-benchmarks-directory> <path-to-extracted-zip>/*/*`

To set up Rust compilation, run `lua scripts/update_cargo.sh`.

This will set up the `cargo` directory, which contains an empty Rust binary project. Compiling this project will download and compile all dependencies collected before.

The Makefile will automatically compile this project for all target platforms as needed and store the correct rustc parameters. This allows running all Rust program builds in parallel.

The default make target will compile all benchmarks for your current system. It should be run in parallel: `make -j <number-of-cores>`. Individual files can be requested by running `make benchmarks/<type>/<number>.<lang>.run`.

The Makefile is currently set up to cross-compile Rust sources for the following targets:

- `riscv64gc-unknown-linux-gnu`

- `armv7-unknown-linux-gnueabihf`

On systems which correspond to these, the Makefile will look for $(uname -m).run.tar.gz in the project root and extract executables as required.

Tarballs can either be requested individually by running make <target-uname-m>.run.tar.gz or for all available targets at once using the cross make target. This step can also run in parallel (see Compiling).
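As a rough illustration of the lookup described above, the tarball name on a target system can be derived from the `machine` field returned by `uname(2)`, which is the value `uname -m` prints. The helper name `run_tarball_name` is hypothetical and not part of the Makefile; this is only a sketch of the naming rule.

```c
#include <stdio.h>
#include <sys/utsname.h>

/* Hypothetical helper: writes "<uname -m>.run.tar.gz" into buf.
 * Returns 0 on success, -1 if uname() fails or buf is too small. */
int run_tarball_name(char *buf, size_t len)
{
    struct utsname u;

    if (uname(&u) != 0)
        return -1;

    /* u.machine is the same string that `uname -m` prints. */
    int n = snprintf(buf, len, "%s.run.tar.gz", u.machine);
    return (n < 0 || (size_t)n >= len) ? -1 : 0;
}
```

Calling `run_tarball_name(name, sizeof name)` on a given board yields the file name that would have to be present in the project root there.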

Benchmarks are usually run using the `bench` make target (which should not be parallelized). They can also be requested individually by running `make benchmarks/<type>/<number>.<lang>.bm`.

The make target bench-test is available to run all benchmarks with reduced inputs, which allows testing all program binaries for functionality.

The Makefile also contains facilities to disable vectorization during compilation. This was intended to allow a fair comparison with platforms that do not support such instructions (for example RISC-V). However, the current efforts to turn off vectorization did not result in a significant change in benchmark runtime.

By default, the Makefile contains the variable SIMD, which can be unset for platforms that do not support vector instructions.

If this variable is set, the Makefile will duplicate all the target programs with an additional .simd suffix. During compilation, files with the .run.simd suffix will be compiled using the regular argument set, while compilation for files just ending in .run will have an additional -fno-tree-vectorize argument.

The benchmarking implementation will change the bencher command for .simd files to include an if statement which prevents benchmarking in case the flag did not change the resulting binary executable.
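The actual check is part of the generated bencher command. The sketch below only illustrates the underlying idea in C: a byte-wise comparison of the two executables, so that the `.simd` variant is skipped when the flag made no difference. The function name `files_identical` is hypothetical.

```c
#include <stdio.h>
#include <string.h>

/* Returns 1 if both files have identical contents, 0 if they differ,
 * and -1 if either file cannot be opened. */
int files_identical(const char *path_a, const char *path_b)
{
    FILE *a = fopen(path_a, "rb");
    FILE *b = fopen(path_b, "rb");
    int result = 1;

    if (!a || !b) {
        result = -1;
    } else {
        unsigned char buf_a[4096], buf_b[4096];
        size_t n_a, n_b;

        /* Compare chunk by chunk until both files reach end of file. */
        do {
            n_a = fread(buf_a, 1, sizeof buf_a, a);
            n_b = fread(buf_b, 1, sizeof buf_b, b);
            if (n_a != n_b || memcmp(buf_a, buf_b, n_a) != 0) {
                result = 0;
                break;
            }
        } while (n_a > 0);
    }

    if (a) fclose(a);
    if (b) fclose(b);
    return result;
}
```

A caller would benchmark the `.run.simd` file only when `files_identical` returns 0 for the `.run` and `.run.simd` pair.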

The bencher binary is responsible for most of the benchmark procedure. It will go through the following steps for each program:

- Ensure proper CPU scaling setup

  - For `userspace` governor: denotes target frequency

  - For `performance` governor: denotes max frequency

  - Other governors result in an error

- Run the benchmark 5 times

  - Run the program

    - Pin to CPU 1 using `sched_setaffinity`

    - Use `setrlimit` for timeout if applicable

    - Use pipe to deliver input data if applicable

    - Map `stdout` of program to buffer file in tmpfs (created in Makefile)

  - Get runtime and resource data

    - Use `clock_gettime` for precise timing

    - Use `wait4` (`getrusage`) for additional information

    - Write data in CSV format

  - Check output against baseline (in `output` directory, created in Makefile)

    - Textual diff

    - Numerical diff (with absolute error)

    - Planned: Binary diff
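The core of one benchmark run can be sketched as follows. This is not the bencher source, only a minimal illustration of how `sched_setaffinity`, `clock_gettime` and `wait4` fit together. The struct `run_result`, the function `run_pinned`, the exit codes 126 and 127, and the choice of `CLOCK_MONOTONIC` are assumptions. The timeout, the input pipe and the `stdout` redirection are left out.

```c
#define _GNU_SOURCE
#include <sched.h>
#include <sys/resource.h>
#include <sys/time.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <time.h>
#include <unistd.h>

/* Hypothetical result record; the field names are illustrative only. */
struct run_result {
    double wall_seconds;  /* elapsed wall-clock time */
    struct rusage usage;  /* resource usage of the child, from wait4 */
    int status;           /* raw wait status, inspect with WIFEXITED etc. */
};

/* Runs argv[0] pinned to `cpu` and fills in `out`.
 * Returns 0 on success, -1 if timing, fork or wait4 fails. */
int run_pinned(char *const argv[], int cpu, struct run_result *out)
{
    struct timespec start, end;

    if (clock_gettime(CLOCK_MONOTONIC, &start) != 0)
        return -1;

    pid_t pid = fork();
    if (pid < 0)
        return -1;

    if (pid == 0) {
        /* Child: restrict itself to one CPU, then replace the process image. */
        cpu_set_t set;
        CPU_ZERO(&set);
        CPU_SET(cpu, &set);
        if (sched_setaffinity(0, sizeof set, &set) != 0)
            _exit(126);
        execvp(argv[0], argv);
        _exit(127); /* only reached if exec failed */
    }

    /* Parent: wait4 returns the exit status and the child's rusage together. */
    if (wait4(pid, &out->status, 0, &out->usage) < 0)
        return -1;
    if (clock_gettime(CLOCK_MONOTONIC, &end) != 0)
        return -1;

    out->wall_seconds = (double)(end.tv_sec - start.tv_sec)
                      + (double)(end.tv_nsec - start.tv_nsec) / 1e9;
    return 0;
}
```

After a successful call, `usage.ru_utime`, `usage.ru_stime` and `usage.ru_maxrss` hold the user time, system time and peak resident set size that `wait4` reports for the child.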

This repository also contains utilities to facilitate a comparison between ethox-iperf and iPerf3. The setup is intended for testing between two nodes, which are directly connected via a switch.

To get the measurements, run `./scripts/iperf-helper.sh <ethox-iperf-executable> <device> <target-ip> benchmarks/iperf-<target-name>.log [client]`. Run two instances at the same time, only one of them with the `client` argument, and always start the instance without the `client` argument first. `<target-name>` needs to correspond to the directory names used in the Plotting Setup.

Example

- Node 1: `./scripts/iperf-helper.sh "sudo ./iperf3" eth0 192.168.0.102/24 benchmarks/iperf-node2.log`

- Node 2: `./scripts/iperf-helper.sh ./iperf3 eth1 192.168.0.101/24 benchmarks/iperf-node1.log client`

The scripts in the plots directory process and plot the raw data collected in benchmarking.

The script plots/prepare.lua will work with benchmark results in the format <platform>/<type>/<number>.<lang>.bm and iPerf logs as <platform>/iperf-<target-name>.log.

These can be set up by extracting the tarballs generated from make pack (in the project root) inside plots/<platform-name>. The platform name is arbitrary, but will show up in the plots.

The default make target (in the plots directory) will then generate plots for the total column.

The plots/prepare.lua script should be called from the plots directory using lua prepare.lua <column> */*/*.bm. It performs the following steps:

- Collect values in the requested column

- Normalize to 1 GHz

- Calculate geometric mean and standard deviation per program

  - Store in `output/data/<type>.dat`

- Calculate geometric mean and minimum per benchmark type

  - Store in `output/data/combined.dat`

Geometric mean is used, as it works correctly with normalized values (see Fleming and Wallace).
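The argument can be made concrete with a small worked example. The symbols $a_i$ and $b_i$ (runtimes of program $i$ on two platforms) and the numbers below are purely illustrative and are not taken from the measurements. The geometric mean of $n$ positive values is

$$
G(x) = \left( \prod_{i=1}^{n} x_i \right)^{1/n},
\qquad\text{so}\qquad
G\!\left(\frac{a}{b}\right) = \frac{G(a)}{G(b)} .
$$

For $a = (2, 8)$ and $b = (4, 4)$, both platforms have $G(a) = G(b) = 4$, and the normalized ratios $a/b = (0.5, 2)$ give $G(a/b) = \sqrt{0.5 \cdot 2} = 1$, whichever platform is chosen as the reference. The arithmetic mean, by contrast, gives $1.25$ for $a/b$ and also $1.25$ for $b/a = (2, 0.5)$, so each platform would appear slower than the other.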

The script plots/all.plt will create PDF plots for all benchmark types (currently hard-coded), as well as output/plots/average.pdf and output/plots/fastest.pdf from output/data/combined.dat and output/plots/iperf.pdf from output/data/iperf.dat.

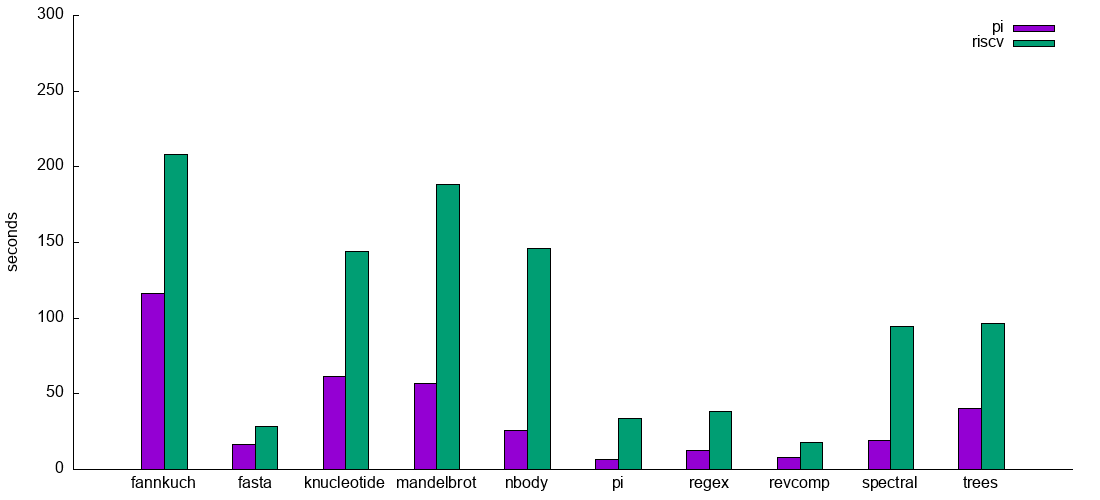

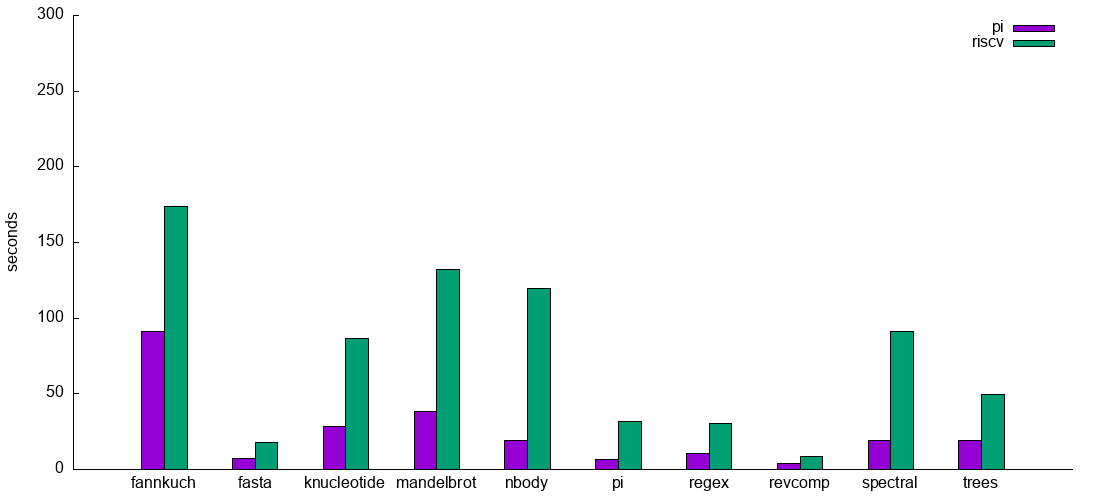

The following plots show benchmark results for the HiFive Freedom Unleashed (riscv) and the Raspberry Pi 4 Model B (pi). The compiler versions are 9.1.0 for the HiFive and 8.3.0 for the Pi.

The raw data can be found in the plots branch.