PyTorch implementation of the paper To be an Artist: Automatic Generation on Food Image Aesthetic Captioning (ICTAI 2020).

The code is provided as-is; no further updates are expected.

Make sure your environment has the following installed:

- Python 3.5+

- Java 1.8.0 (required for computing METEOR and SPICE)

Then install the requirements:

pip install -r requirements.txt

Hyperparameters and options can be configured in `config.py`; see that file for details.

Preprocess the images along with their captions and store them locally:

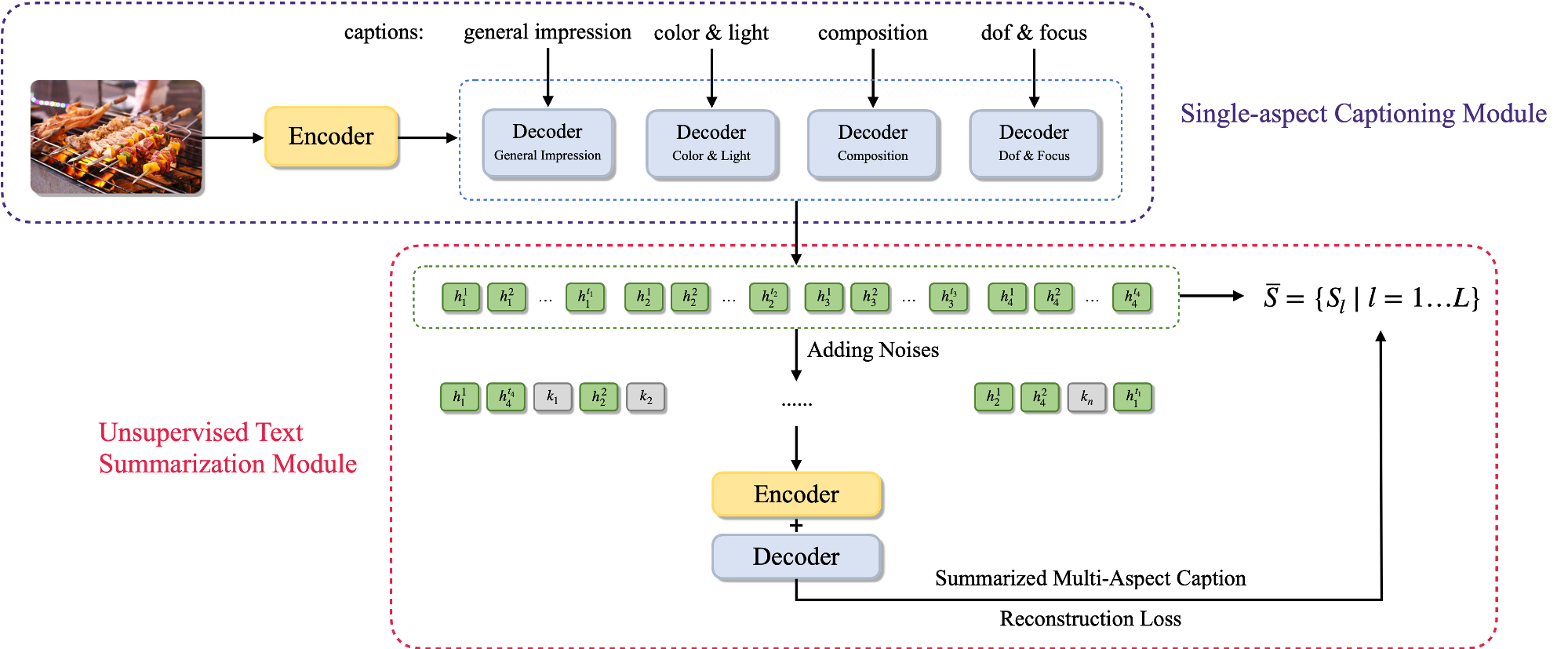

python preprocess.py

The Single-Aspect Captioning Module is designed to generate captions for each aesthetic attribute and to learn a feature representation of each one.
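Image-captioning pipelines of the kind this module builds on (sgrvinod/a-PyTorch-Tutorial-to-Image-Captioning) typically feed the decoder captions as fixed-length sequences of word indices, so preprocessing builds a vocabulary and encodes every caption against it. The sketch below illustrates that idea in plain Python under stated assumptions: the function names, the special tokens, whitespace tokenization, and the `min_word_freq` threshold are hypothetical and are not taken from `preprocess.py`.

```python
from collections import Counter
from typing import Dict, Iterable, List, Tuple

# Special tokens (hypothetical names following a common captioning convention).
PAD, START, END, UNK = "<pad>", "<start>", "<end>", "<unk>"


def build_word_map(captions: Iterable[str], min_word_freq: int = 1) -> Dict[str, int]:
    """Assign an index to every word that occurs at least min_word_freq times."""
    freq = Counter(word for cap in captions for word in cap.split())
    kept = sorted(w for w, c in freq.items() if c >= min_word_freq)
    word_map = {PAD: 0}  # index 0 is reserved for padding
    for word in kept + [UNK, START, END]:
        word_map[word] = len(word_map)
    return word_map


def encode_caption(caption: str, word_map: Dict[str, int],
                   max_len: int) -> Tuple[List[int], int]:
    """Encode as <start> w1..wn <end>, then pad to max_len + 2 indices.

    Returns the index list and the unpadded length (words + <start> + <end>).
    """
    words = caption.split()[:max_len]  # truncate overly long captions
    ids = [word_map[START]]
    ids += [word_map.get(w, word_map[UNK]) for w in words]  # unseen words -> <unk>
    ids.append(word_map[END])
    ids += [word_map[PAD]] * (max_len - len(words))
    return ids, len(words) + 2
```

For example, with a vocabulary built from "the color is bright" and "the lighting is soft", `encode_caption("the color is dull", word_map, 6)` maps the unseen word "dull" to `<unk>` and pads the result to 8 indices (6 words plus `<start>` and `<end>`). Returning the unpadded length lets a training loop skip the padding, for instance with PyTorch's `pack_padded_sequence`.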

To train:

python single_train.py

To test and compute metrics, edit `beam_size` in `single_test.py`, then run:

python single_test.py

To run inference, edit `image_path` and `beam_size` in `single_infer.py`, then run:

python single_infer.py

The Multi-Aspect Captioning Module is designed to learn the associations among all feature representations and to automatically aggregate the captions of all aesthetic attributes into a final sentence.

To train:

python multi_train.py

To test and compute metrics, edit `model_path` and `multi_beam_k` in `multi_test.py`, then run:

python multi_test.py

To run inference, edit `image_path` and `multi_beam_k` in `multi_infer.py`, then run:

python multi_infer.py
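The test and inference scripts decode with a configurable beam width: `beam_size` for the single-aspect module and, presumably, `multi_beam_k` for the multi-aspect module. To illustrate what that setting trades off, here is a generic beam search over a toy next-token model. It is a sketch under assumptions, not the decoder in `single_test.py` or `multi_test.py`: the `step` callback interface, the token names, and the toy probabilities are hypothetical.

```python
import math
from typing import Callable, Dict, List, Tuple

# Hypothetical decoder interface: given the tokens so far, return the
# log-probability of each candidate next token.
StepFn = Callable[[List[str]], Dict[str, float]]


def beam_search(step: StepFn, beam_size: int, max_len: int,
                start: str = "<start>", end: str = "<end>") -> Tuple[List[str], float]:
    """Return the best (tokens, log-prob) pair found with a beam of width beam_size."""
    beams: List[Tuple[List[str], float]] = [([start], 0.0)]
    finished: List[Tuple[List[str], float]] = []
    for _ in range(max_len):
        # Expand every active hypothesis by every candidate next token.
        candidates = [
            (tokens + [tok], score + logp)
            for tokens, score in beams
            for tok, logp in step(tokens).items()
        ]
        # Keep the beam_size best expansions; those ending in <end> move to
        # `finished` and are not expanded again.
        candidates.sort(key=lambda c: c[1], reverse=True)
        beams = []
        for tokens, score in candidates[:beam_size]:
            if tokens[-1] == end:
                finished.append((tokens, score))
            else:
                beams.append((tokens, score))
        if not beams:
            break
    # Hypotheses that never produced <end> within max_len are still eligible.
    return max(finished + beams, key=lambda c: c[1])


if __name__ == "__main__":
    # Toy model: greedy decoding picks "a", but "b <end>" is more probable overall.
    table = {
        "<start>": {"a": math.log(0.6), "b": math.log(0.4)},
        "a": {"x": math.log(0.55), "y": math.log(0.45)},
        "b": {"<end>": math.log(0.9), "z": math.log(0.1)},
        "x": {"<end>": 0.0},
        "y": {"<end>": 0.0},
        "z": {"<end>": 0.0},
    }

    def toy_step(tokens: List[str]) -> Dict[str, float]:
        return table[tokens[-1]]

    print(beam_search(toy_step, beam_size=1, max_len=5)[0])  # ['<start>', 'a', 'x', '<end>']
    print(beam_search(toy_step, beam_size=2, max_len=5)[0])  # ['<start>', 'b', '<end>']
```

With a beam width of 1 the search is greedy and commits to the locally likely "a" (final probability 0.33); widening the beam to 2 keeps "b" alive long enough to find the more probable complete sequence (0.36). Each extra unit of beam width adds another hypothesis to expand at every step, so decoding cost grows roughly linearly with the beam size.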

We constructed a dataset for food image aesthetic captioning to evaluate the proposed method; see here for details.

- Following the experimental settings of a previous work, we first pre-trained our single-aspect captioning module on the MSCOCO image captioning dataset and then fine-tuned it on our dataset.

- The `load_embeddings` method (in `src/utils/embedding.py`) tries to create a cache of the loaded embeddings under the folder `dataset_output_path`, which dramatically speeds up loading on subsequent runs.

- You will first need to download the Stanford CoreNLP 3.6.0 code and models used by SPICE. To do this, run:

cd src/metrics && bash get_stanford_models.sh

- The implementation of the single-aspect captioning module is based on sgrvinod/a-PyTorch-Tutorial-to-Image-Captioning.

- The implementation of the multi-aspect captioning module is based on zphang/usc_dae.

- The implementation of the evaluation metrics is adapted from ruotianluo/coco-caption.
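The embedding cache described in the notes follows a common pattern: parse the embedding file once, serialize the parsed result, and read the serialized copy on later runs. Below is a minimal sketch, assuming GloVe-style text embeddings (one word followed by its vector per line) and Python's `pickle` for serialization. `load_embeddings_cached`, its parameters, and the `.pkl` cache naming are hypothetical and are not the actual `load_embeddings` in `src/utils/embedding.py`; here `cache_dir` roughly plays the role of `dataset_output_path`.

```python
import os
import pickle
from typing import Dict, List


def load_embeddings_cached(emb_file: str, cache_dir: str) -> Dict[str, List[float]]:
    """Parse a text embedding file once, then reuse a pickle cache on later calls."""
    cache_path = os.path.join(cache_dir, os.path.basename(emb_file) + ".pkl")
    if os.path.exists(cache_path):
        # Fast path: a previous run already parsed and cached this file.
        with open(cache_path, "rb") as f:
            return pickle.load(f)

    # Slow path: one "word v1 v2 ... vd" entry per line (GloVe-style text format).
    embeddings: Dict[str, List[float]] = {}
    with open(emb_file, encoding="utf-8") as f:
        for line in f:
            parts = line.rstrip().split(" ")
            if len(parts) < 2:
                continue  # skip blank or malformed lines
            embeddings[parts[0]] = [float(x) for x in parts[1:]]

    # Write the cache so the next call takes the fast path.
    os.makedirs(cache_dir, exist_ok=True)
    with open(cache_path, "wb") as f:
        pickle.dump(embeddings, f)
    return embeddings
```

In this sketch the cache is keyed only on the embedding file's name, so it has to be deleted by hand if the source file changes; comparing modification times would be one way to invalidate it automatically.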