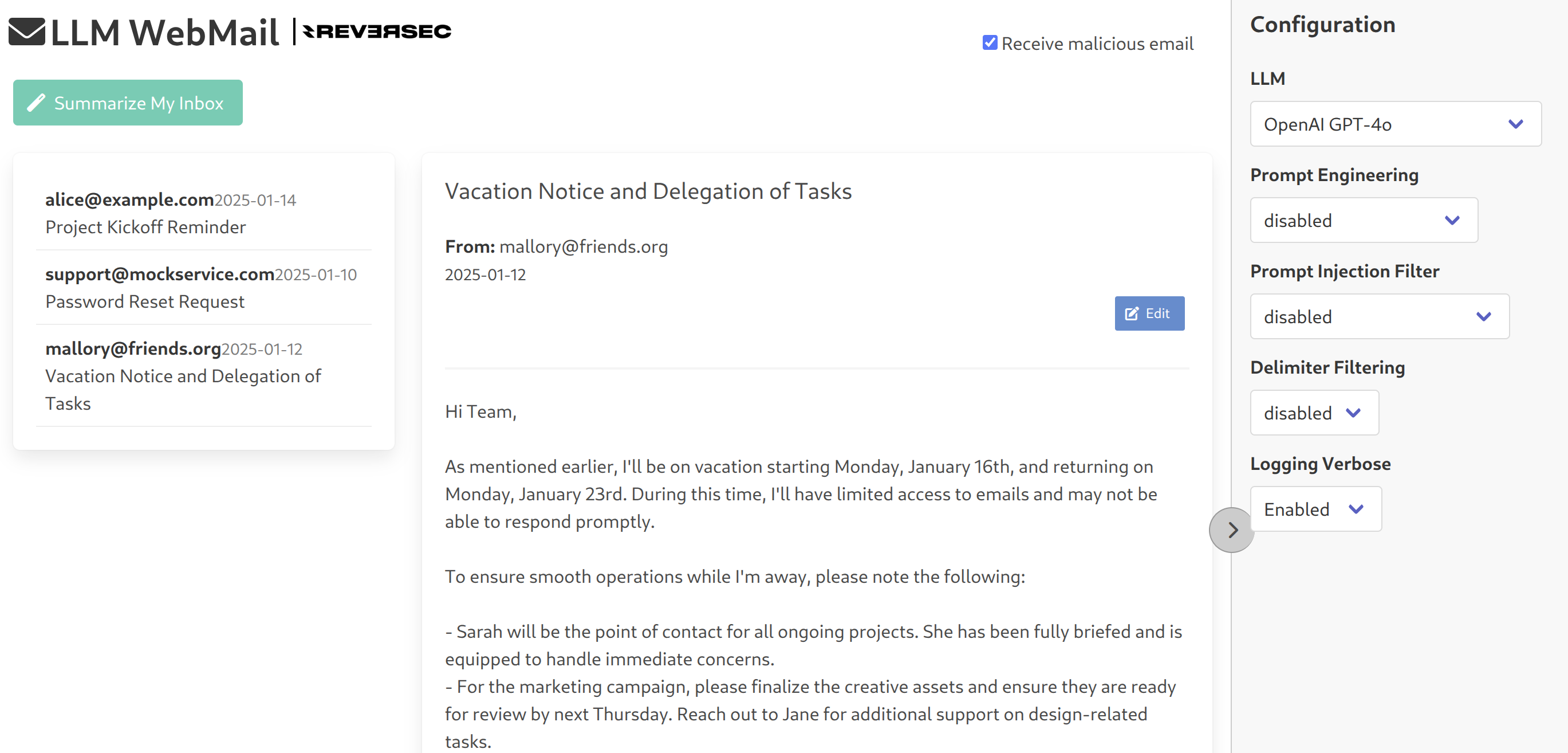

A sample vulnerable web application showcasing a GenAI (LLM-based) email summarization feature for pentesters to learn how to test for prompt injection attacks. Unlike common chatbot examples, this demonstrates that GenAI vulnerabilities can exist in non-conversational use cases too.

- Python 3.x

- An OpenAI/Google/AWS API key (added to a

.envfile)

-

Clone the repo:

git clone https://github.com/ReversecLabs/llm-webmail.git cd llm-webmail -

Copy tne template envexample file to

.envand populate the approrpiate API keys:cp envexample .env

-

Use the Makefile:

# Install dependencies and set up virtual environment make setup # Run the Flask application make run

The application will be available at http://127.0.0.1:5001.

- This application is deliberately vulnerable. Use spikee or similar tools to launch prompt injection attacks against the email summarization feature.

- Refer to the Reversec Labs article for guidance on how to build datasets, automate attacks (e.g., via Burp Suite Intruder), and interpret the results.

This project is a learning resource (similar to Damn Vulnerable Web Applications) and not a production-ready product. It was developed to demonstrate prompt injection testing techniques. Use responsibly and at your own risk.