Implements Reformer: The Efficient Transformer in PyTorch (work in progress).

- Tested with Python 3.7.5 and PyTorch 1.4.0.

- This code is built upon the pytorch-lightning framework. Install the dependencies with:

`pip install -r requirements.txt`

- If you want to modify `trainer.py` or `model\model.py`, it is recommended that you familiarize yourself with the `pytorch-lightning` library beforehand.

- A custom copy task and a music dataset are implemented in `datasets\dataloader.py`. Modify as needed.

- A config yaml file must be placed under `config`. See the provided yaml files for the basic framework.

python3 trainer.py -c \path\to\config\yaml -n [name of run] -b [batch size] -f [fast dev run] -v [version number]- The

-fflag is used for debugging; only one batch of training, validation, and testing will be calculated. - The

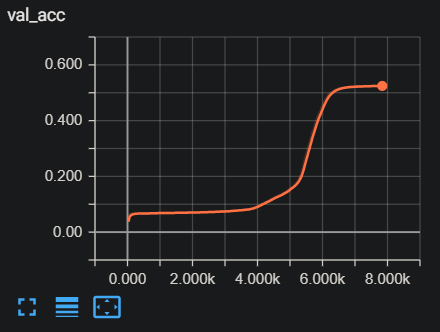

-vflag is used for resuming from checkpoints; leave empty for new version. - A toy copy task of length 32, vocab 128 converges around ~6k steps using a batch size of 1024, learning rate of 1e-3 and Adam. The checkpoint is located under

checkpoints\.
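The copy task can be illustrated with a small data generator. This is a minimal sketch, not the code in `datasets\dataloader.py`: it assumes the sequence format of the duplication task in the Reformer paper, `0 w 0 w`, where `0` is a separator and `w` is drawn from tokens `1..127` (which fits a vocabulary of 128). The function names `make_copy_example` and `make_copy_batch` are hypothetical.

```python
import random
from typing import List, Optional, Tuple


def make_copy_example(seq_len: int, vocab_size: int, rng: random.Random) -> List[int]:
    """Return one sequence of the (assumed) form [0] + w + [0] + w."""
    if seq_len < 4 or seq_len % 2 != 0:
        raise ValueError("seq_len must be an even number >= 4")
    half = seq_len // 2
    # w uses tokens 1..vocab_size-1; token 0 is reserved as the separator
    w = [rng.randint(1, vocab_size - 1) for _ in range(half - 1)]
    return [0] + w + [0] + w


def make_copy_batch(batch_size: int, seq_len: int = 32, vocab_size: int = 128,
                    seed: Optional[int] = None) -> Tuple[List[List[int]], List[List[int]]]:
    """Build (inputs, targets), shifted by one position for next-token prediction."""
    rng = random.Random(seed)
    batch = [make_copy_example(seq_len, vocab_size, rng) for _ in range(batch_size)]
    inputs = [seq[:-1] for seq in batch]   # positions 0..seq_len-2
    targets = [seq[1:] for seq in batch]   # positions 1..seq_len-1
    return inputs, targets
```

With `seq_len=32`, each `w` has 15 tokens. Since `w` is random, only the second copy of `w` is predictable, so the loss or accuracy on that half is the meaningful measure of convergence.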

- A complete checkpoint folder must be placed under `logs\`. Use the entire folder that pytorch-lightning saves automatically.

- A corresponding version number must be provided with the `-v` flag.

- Run the code with the `-s` flag set to `True`. If using the music dataset, this will generate one sample under `sample\`.
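Generating a sample is an autoregressive loop: predict the next token, append it, and feed the longer sequence back in. The sketch below shows that loop in plain Python under stated assumptions; it is not this repository's sampling code. `step_fn` is a hypothetical callable that maps the tokens generated so far to next-token logits, and the temperature handling (greedy decoding when `temperature <= 0`) is an illustrative choice.

```python
import math
import random
from typing import Callable, List, Optional, Sequence

# Hypothetical model interface: tokens so far -> logits over the vocabulary
StepFn = Callable[[List[int]], List[float]]


def sample_autoregressive(step_fn: StepFn, prefix: Sequence[int], max_len: int,
                          temperature: float = 1.0,
                          rng: Optional[random.Random] = None) -> List[int]:
    """Extend `prefix` one token at a time until it has `max_len` tokens."""
    if rng is None:
        rng = random.Random()
    tokens = list(prefix)
    while len(tokens) < max_len:
        logits = step_fn(tokens)
        if temperature <= 0:
            # Greedy decoding: pick the highest-scoring token
            next_token = max(range(len(logits)), key=logits.__getitem__)
        else:
            # Softmax with temperature; subtract the max for numerical stability
            scaled = [value / temperature for value in logits]
            top = max(scaled)
            weights = [math.exp(s - top) for s in scaled]
            next_token = rng.choices(range(len(weights)), weights=weights, k=1)[0]
        tokens.append(next_token)
    return tokens


if __name__ == "__main__":
    # Toy "model" that always favours (last token + 1) mod 8
    def toy_step(tokens: List[int]) -> List[float]:
        logits = [0.0] * 8
        logits[(tokens[-1] + 1) % 8] = 5.0
        return logits

    print(sample_autoregressive(toy_step, [0], max_len=10, temperature=0.0))
    # [0, 1, 2, 3, 4, 5, 6, 7, 0, 1]
```

Calling `step_fn` on the full prefix at every step keeps the sketch simple but repeats work; real implementations often cache intermediate state, which this sketch does not attempt.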

- Implement general framework of Reformer

- Rewrite using pytorch-lightning framework

- Implement Label Smoothing

- Implement LSH attention

- Implement reversible layer

- Implement autoregressive sampling

- Implement various datasets
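The core of LSH attention is the angular hashing step described in the Reformer paper: project a vector `x` with a random matrix `R` of shape `[d, n_buckets / 2]` and take `argmax([xR; -xR])` as its bucket. The sketch below shows one hashing round in plain Python; the helper names `random_rotation` and `lsh_bucket` are hypothetical, and it deliberately omits the sorting, chunking, multi-round hashing, and shared query/key details that a full LSH attention layer needs.

```python
import random
from typing import List, Sequence


def random_rotation(dim: int, n_buckets: int, rng: random.Random) -> List[List[float]]:
    """Sample R with shape [dim, n_buckets // 2] from a standard normal."""
    if n_buckets % 2 != 0:
        raise ValueError("n_buckets must be even")
    return [[rng.gauss(0.0, 1.0) for _ in range(n_buckets // 2)] for _ in range(dim)]


def lsh_bucket(x: Sequence[float], rotation: List[List[float]]) -> int:
    """Angular LSH: bucket = argmax of the concatenation [xR, -xR]."""
    n_half = len(rotation[0])
    # projected[j] = sum_i x[i] * R[i][j], i.e. the row vector x @ R
    projected = [sum(x[i] * rotation[i][j] for i in range(len(x))) for j in range(n_half)]
    scores = projected + [-p for p in projected]
    return max(range(len(scores)), key=scores.__getitem__)


if __name__ == "__main__":
    rng = random.Random(0)
    R = random_rotation(dim=16, n_buckets=8, rng=rng)
    x = [rng.gauss(0.0, 1.0) for _ in range(16)]
    b = lsh_bucket(x, R)
    # Scaling by a positive number never changes the bucket;
    # negating x shifts it by n_buckets / 2.
    print(b, lsh_bucket([2.0 * v for v in x], R), lsh_bucket([-v for v in x], R))
```

Because the bucket depends only on the direction of `x`, vectors with high cosine similarity tend to land in the same bucket, which is what allows attention to be restricted to pairs within a bucket.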

- June Young Yi @ MINDsLab Inc. (julianyi1@snu.ac.kr, julianyi1@mindslab.ai)

MIT License

- The general structure of this code is based on The Annotated Transformer, albeit heavily modified.

- I am aware that reformer-lm exists. However, I was frustrated with the original Trax implementation the authors provided, so I decided to rewrite the entire thing from the ground up. Naturally, expect bugs everywhere.

- Thanks to MINDsLab for providing training resources.