🥇SOTA Document Image Enhancement - A Layer-Wise Tokens-to-Token Transformer Network for Improved Historical Document Image Enhancement

This is the official PyTorch code for the project A Layer-Wise Tokens-to-Token Transformer Network for Improved Historical Document Image Enhancement.

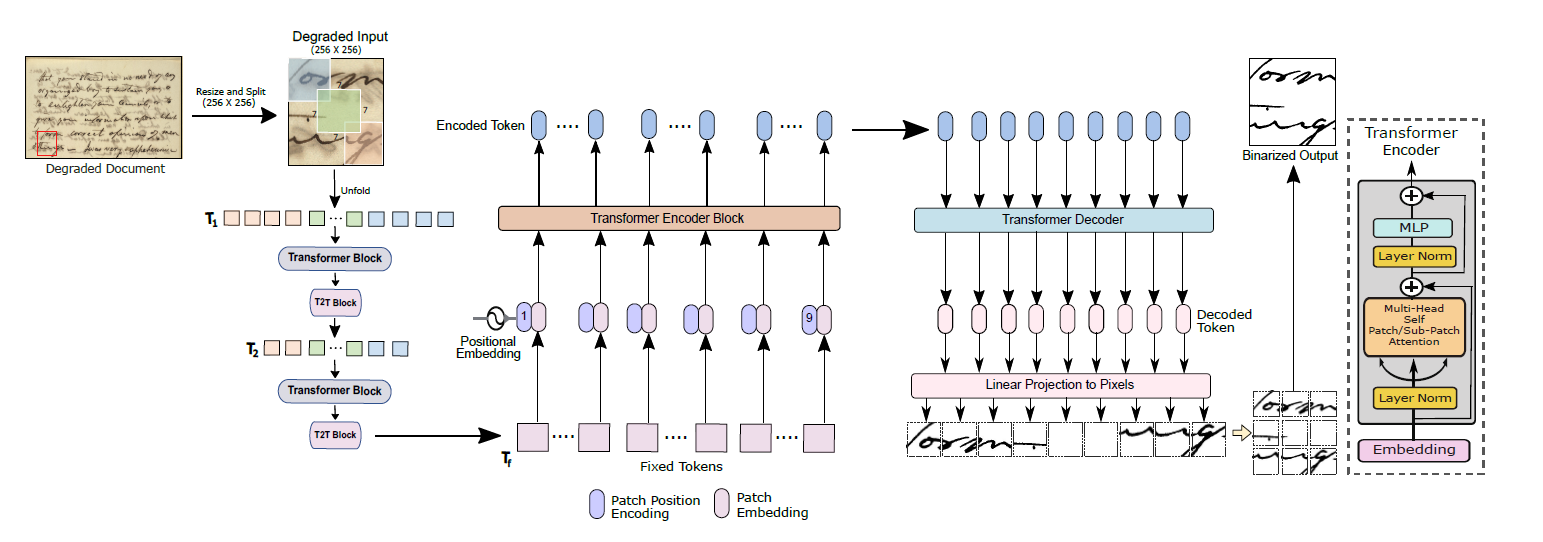

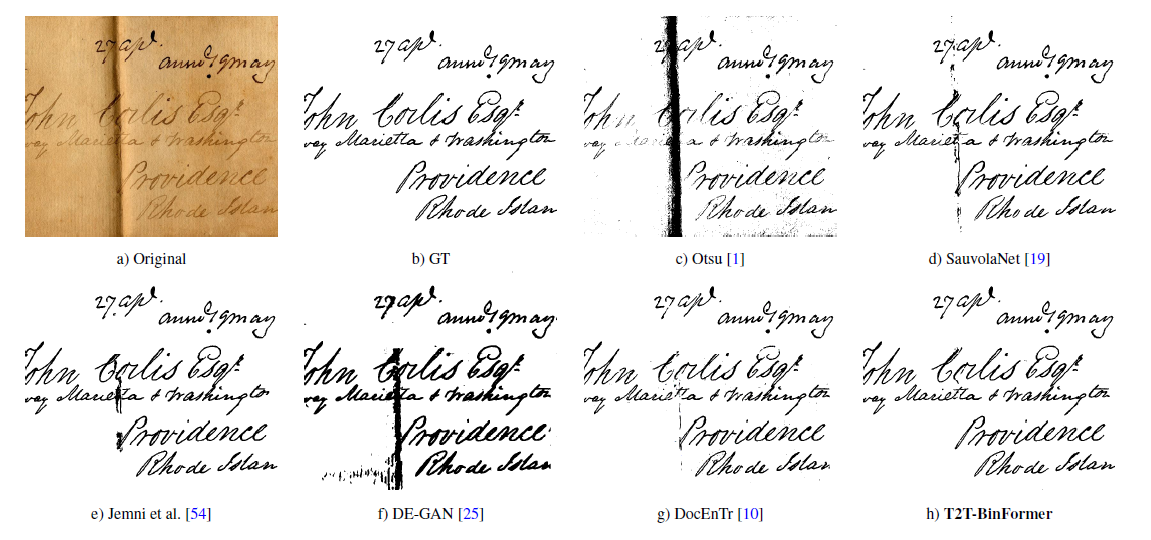

We propose to employ a Tokens-to-Token Transformer network for document image enhancement, a novel encoder-decoder architecture based on a tokens-to-token vision transformer.

Clone the repository to your desired location:

git clone https://github.com/RisabBiswas/T2T-BinFormer

cd T2T-BinFormer

The research and experiments are conducted on the DIBCO and H-DIBCO datasets. Find the dataset here - Link. After downloading, extract the folder named DIBCOSETS and place it in your desired data path, so that the layout is: /YOUR_DATA_PATH/DIBCOSETS/

Specify the data path, split size, validation set, and testing set to prepare your data. In this example, we run process_dibco.py with a split size of (256 X 256), the 2016 dataset for validation, and the 2018 dataset for testing.
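To make the split size concrete, here is a minimal sketch of how an image could be tiled into (256 X 256) patches. It is not the code in process_dibco.py: the function name `patch_grid` is hypothetical, and padding the image up to a multiple of the split size is an assumption made for illustration.

```python
import math


def patch_grid(height, width, split_size=256):
    """Return (top, left, bottom, right) boxes that tile an image.

    Assumption: the image is padded up to a multiple of split_size,
    so boxes in the last row or column may extend past the original image.
    """
    rows = math.ceil(height / split_size)
    cols = math.ceil(width / split_size)
    boxes = []
    for r in range(rows):
        for c in range(cols):
            top, left = r * split_size, c * split_size
            boxes.append((top, left, top + split_size, left + split_size))
    return boxes


if __name__ == "__main__":
    # A hypothetical 600 x 1000 page with the split size used in this README.
    boxes = patch_grid(600, 1000, split_size=256)
    print(len(boxes), boxes[0], boxes[-1])
```

Under these assumptions, a larger split size yields fewer and bigger patches per page, which is why the same --split_size value is passed again at training and testing time.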

python process_dibco.py --data_path /YOUR_DATA_PATH/ --split_size 256 --testing_dataset 2018 --validation_dataset 2016

For training, specify the desired settings (batch_size, patch_size, model_size, split_size, and training epochs) when running the file train.py. For example, for a base model with a patch size of (16 X 16) and a batch size of 32, we use the following command:

python train.py --data_path /YOUR_DATA_PATH/ --batch_size 32 --vit_model_size base --vit_patch_size 16 --epochs 151 --split_size 256 --validation_dataset 2016

You will get visualization results for the validation dataset at each epoch in a folder named vis+"YOUR_EXPERIMENT_SETTINGS" (it will be created automatically). For the command above, it will be named visbase_256_16. The best weights will be saved in the folder named "weights".
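If you want to locate the visualization folder from a script, the naming can be sketched as below. The helper `vis_dir_name` is hypothetical, and the ordering (model size, split size, patch size) is inferred only from the visbase_256_16 example, so please check it against the folder that train.py actually creates.

```python
from pathlib import Path


def vis_dir_name(model_size, split_size, patch_size):
    """Build the visualization folder name for a given experiment.

    Assumption: the name is "vis" + model size + "_" + split size + "_" + patch size,
    as suggested by the example "visbase_256_16".
    """
    return f"vis{model_size}_{split_size}_{patch_size}"


if __name__ == "__main__":
    name = vis_dir_name("base", 256, 16)
    vis_dir = Path(".") / name
    print(name)              # visbase_256_16
    print(vis_dir.exists())  # True only after training has created the folder
```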

To test the trained model on a specific DIBCO dataset, run the command below with your own trained model weights. The testing dataset should match the one specified in Section Process Data; if it does not, run process_dibco.py again. Here, we test on H-DIBCO 2017, using the base model with a 16 X 16 patch size and a batch size of 16. The binarized images will be in the folder ./vis+"YOUR_CONFIGS_HERE"/epoch_testing/

python test.py --data_path /YOUR_DATA_PATH/ --model_weights_path /THE_MODEL_WEIGHTS_PATH/ --batch_size 16 --vit_model_size base --vit_patch_size 16 --split_size 256 --testing_dataset 2017

The results of our model can be found Here.

Our project has adapted and borrowed the code structure from DocEnTr. We are thankful to the authors! Additionally, we really appreciate the great work done on vit_pytorch by Phil Wang.

If you use the T2T-BinFormer code in your research, we would appreciate a citation to the original paper:

@misc{biswas2023layerwise,
  title={A Layer-Wise Tokens-to-Token Transformer Network for Improved Historical Document Image Enhancement},
  author={Risab Biswas and Swalpa Kumar Roy and Umapada Pal},
  year={2023},
  eprint={2312.03946},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

If you have any questions, please feel free to reach out to Risab Biswas.

We really appreciate your interest in our research. The code should not have any bugs, but if there are any, we are really sorry about that. Do let us know in the issues section, and we will fix it ASAP! Cheers!