Most of the current work on generating joint embeddings goes into creating a large synthetic dataset with Stable Diffusion, leveraging multimodal information such as captions through attentive heatmaps. Visit Text-Based Object Discovery for the latest updates.

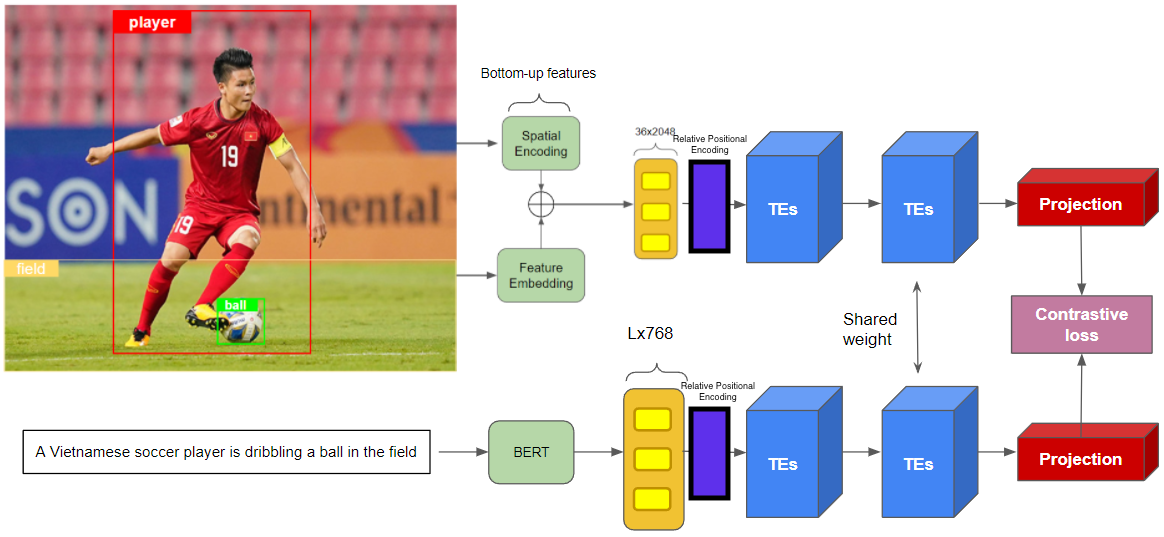

This project reimplements the idea from "Transformer Reasoning Network for Image-Text Matching and Retrieval" and combines it with the one from "Self-Attention with Relative Position Representations". To solve cross-modal retrieval, representative features from each modality are extracted with a separate pipeline and then projected into the same embedding space. Because the features are sequences of vectors, a Transformer-based model is a natural fit. The main contributions of this repo are:

- Reimplement the TERN module, which applies Transformers to bottom-up attention features and BERT features.

- Implement relative positional encoding on both the textual and the image side.

- Use facebookresearch's FAISS for efficient similarity search and clustering of dense vectors.

- Experiment with various metric learning loss objectives from KevinMusgrave's PyTorch Metric Learning.
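To make the shared embedding space concrete, here is a minimal sketch of retrieval by cosine similarity. It is not the code from this repo: the function names `l2_normalize` and `retrieve_top_k` and the toy 2-D embeddings are assumptions for illustration, and NumPy brute force stands in for FAISS. On L2-normalised vectors, an exact inner-product index such as FAISS's `IndexFlatIP` should give the same ranking.

```python
import numpy as np


def l2_normalize(x, eps=1e-12):
    """Scale each row to unit length so a dot product equals cosine similarity."""
    norms = np.linalg.norm(x, axis=1, keepdims=True)
    return x / np.maximum(norms, eps)


def retrieve_top_k(query_emb, gallery_emb, k=5):
    """Return (indices, scores) of the k most similar gallery rows for each query.

    query_emb:   (num_queries, dim) embeddings from one modality, e.g. captions
    gallery_emb: (num_items, dim) embeddings from the other modality, e.g. images
    """
    q = l2_normalize(np.asarray(query_emb, dtype=np.float32))
    g = l2_normalize(np.asarray(gallery_emb, dtype=np.float32))
    sims = q @ g.T                          # (num_queries, num_items) cosine scores
    k = min(k, g.shape[0])
    top = np.argsort(-sims, axis=1)[:, :k]  # highest similarity first
    return top, np.take_along_axis(sims, top, axis=1)


if __name__ == "__main__":
    # Toy data (hypothetical): three "image" embeddings and one "caption" query.
    images = np.array([[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]])
    caption = np.array([[0.1, 0.9]])
    indices, scores = retrieve_top_k(caption, images, k=2)
    print(indices, scores)
```

The same function covers both directions of retrieval: pass caption embeddings as queries for text-to-image search, or image embeddings as queries for image-to-text search.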

The figure below shows an overview of the architecture.


I trained TERN on the Flickr30k dataset, which contains 31,000 images collected from Flickr, each with 5 reference sentences provided by human annotators. For each sample, the visual and text features are pre-extracted and stored as NumPy files.
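Since the pre-extracted features are sequences of vectors with varying lengths, they typically need to be padded and masked before being fed to a Transformer. The sketch below shows one way this could look. The file names, the feature dimension of 768, and the helpers `load_features` and `pad_batch` are all hypothetical; the actual file layout used in this repo may differ.

```python
import tempfile
from pathlib import Path

import numpy as np


def load_features(npy_path):
    """Load one pre-extracted feature file as a float32 array of shape (seq_len, dim)."""
    feats = np.load(npy_path)
    if feats.ndim != 2:
        raise ValueError(f"expected a 2-D array, got shape {feats.shape}")
    return feats.astype(np.float32)


def pad_batch(sequences):
    """Zero-pad variable-length sequences and return (batch, mask).

    mask[i, t] is True where position t of sample i holds a real feature vector.
    """
    max_len = max(seq.shape[0] for seq in sequences)
    dim = sequences[0].shape[1]
    batch = np.zeros((len(sequences), max_len, dim), dtype=np.float32)
    mask = np.zeros((len(sequences), max_len), dtype=bool)
    for i, seq in enumerate(sequences):
        batch[i, : seq.shape[0]] = seq
        mask[i, : seq.shape[0]] = True
    return batch, mask


if __name__ == "__main__":
    # Hypothetical file names and shapes, written to a temp dir for the demo.
    with tempfile.TemporaryDirectory() as tmp:
        rng = np.random.default_rng(0)
        paths = []
        for name, length in [("caption_a.npy", 7), ("caption_b.npy", 12)]:
            path = Path(tmp) / name
            np.save(path, rng.standard_normal((length, 768)))
            paths.append(path)
        batch, mask = pad_batch([load_features(p) for p in paths])
        print(batch.shape, mask.sum(axis=1))
```

The boolean mask lets an attention layer ignore the padded positions, so shorter captions or images with fewer regions do not contribute zero vectors to the result.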


Some samples from the dataset:

- Installation

I ran the experiments on an M1 Max. If you have CUDA available, you can speed up training further with NVIDIA mixed-precision training (Apex, etc.).

- See the notebooks below for training, evaluation and inference.

- For detailed training, evaluation and inference, refer to this all-in-one notebook.

- To be updated...

@misc{messina2021transformer,
  title={Transformer Reasoning Network for Image-Text Matching and Retrieval},
  author={Nicola Messina and Fabrizio Falchi and Andrea Esuli and Giuseppe Amato},
  year={2021},
  eprint={2004.09144},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

@misc{anderson2018bottomup,
  title={Bottom-Up and Top-Down Attention for Image Captioning and Visual Question Answering},
  author={Peter Anderson and Xiaodong He and Chris Buehler and Damien Teney and Mark Johnson and Stephen Gould and Lei Zhang},
  year={2018},
  eprint={1707.07998},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

@article{JDH17,
  title={Billion-scale similarity search with GPUs},
  author={Johnson, Jeff and Douze, Matthijs and J{\'e}gou, Herv{\'e}},
  journal={arXiv preprint arXiv:1702.08734},
  year={2017}
}