Haoyu Dengᵃ, Ruijie Zhuᵇ, Xuerui Qiuᵃ, Yule Duanᵃ, Malu Zhangᵃ,† and Liang-Jian Dengᵃ,†

ᵃUniversity of Electronic Science and Technology of China, 611731, China

ᵇUniversity of California, Santa Cruz, 95064, United States

†Corresponding authors

If you have any questions, feel free to open an issue or send an email to academic@hydeng.cn. I will respond as soon as possible.

This is the official repository for the paper "Tensor Decomposition Based Attention Module for Spiking Neural Networks".

Paper: [pdf]

A Docker environment is strongly recommended, but you can also use pip to prepare the environment. If you are not familiar with Docker, you can refer to the following link to learn about it:

A Dockerfile is provided in the env directory. You can build the Docker image and run the container with the following commands:

docker build -t pfa ./env

We also provide a prebuilt Docker image on Docker Hub. You can pull it with the following command:

docker pull risingentropy409/pfa

The environment is ready to use once you have the image.
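The commands above build or pull the image but do not show how to start a container from it. The following is a minimal sketch, not the authors' documented workflow. It assumes the NVIDIA Container Toolkit is installed (so that `--gpus all` works) and that the image provides `bash`, and it mounts the current directory at `/workspace`, a path chosen here for illustration. The function name `run_pfa_container` is hypothetical.

```shell
# Hypothetical helper (not part of this repository): start an interactive
# container from the PFA image with GPU access.
# Assumptions: NVIDIA Container Toolkit is installed, the image ships bash,
# and /workspace is an arbitrary mount point chosen for this sketch.
run_pfa_container() {
  # $1: image name; defaults to the locally built "pfa" image.
  # --rm deletes the container on exit; -v mounts the current directory
  # into the container; -w makes it the working directory.
  docker run --gpus all -it --rm \
    -v "$PWD":/workspace \
    -w /workspace \
    "${1:-pfa}" bash
}
```

Run it from the repository root, e.g. `run_pfa_container` for the locally built `pfa` image or `run_pfa_container risingentropy409/pfa` for the prebuilt one. Because of `--rm` the container itself is discarded on exit, while files written under the mounted directory persist on the host.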

Use the following command to set up the environment with pip:

pip install -r requirements.txt

NOTE: If your CUDA version is 12.0 or later, change cupy-cuda11x to cupy-cuda12x in requirements.txt.
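The CuPy change in the NOTE is a single substitution in requirements.txt. A minimal shell sketch follows, assuming the file names the package literally as `cupy-cuda11x`; the helper name `set_cupy_cuda` is hypothetical and not part of the repository.

```shell
# Hypothetical helper (not part of this repository): rewrite the CuPy package
# in a requirements file to match a CUDA major version, e.g. 12 -> cupy-cuda12x.
set_cupy_cuda() {
  major="$1"                    # CUDA major version, e.g. 12
  req="${2:-requirements.txt}"  # requirements file to edit
  if [ -z "$major" ]; then
    echo "usage: set_cupy_cuda MAJOR [FILE]" >&2
    return 1
  fi
  # Write to a temporary file and move it back instead of using `sed -i`,
  # whose syntax differs between GNU and BSD sed. Any version pin after the
  # package name is left untouched.
  sed "s/cupy-cuda11x/cupy-cuda${major}x/" "$req" > "$req.tmp" && mv "$req.tmp" "$req"
}
```

For example, run `set_cupy_cuda 12` from the repository root before `pip install -r requirements.txt`.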

Modify CIFAR/main.py according to your needs and run:

python main.py

This code is modified from the example code of the SpikingJelly framework. We thank its authors for their great contribution.

Just run:

python run.py

For more configuration options, please refer to the code, which is simple and clear.

We conduct the generation tasks based on FSVAE. The modified code is in the Generation folder; to run it, please refer to Generation/README.md.

If you find our work useful, please consider citing our paper:

@article{DENG2024111780,

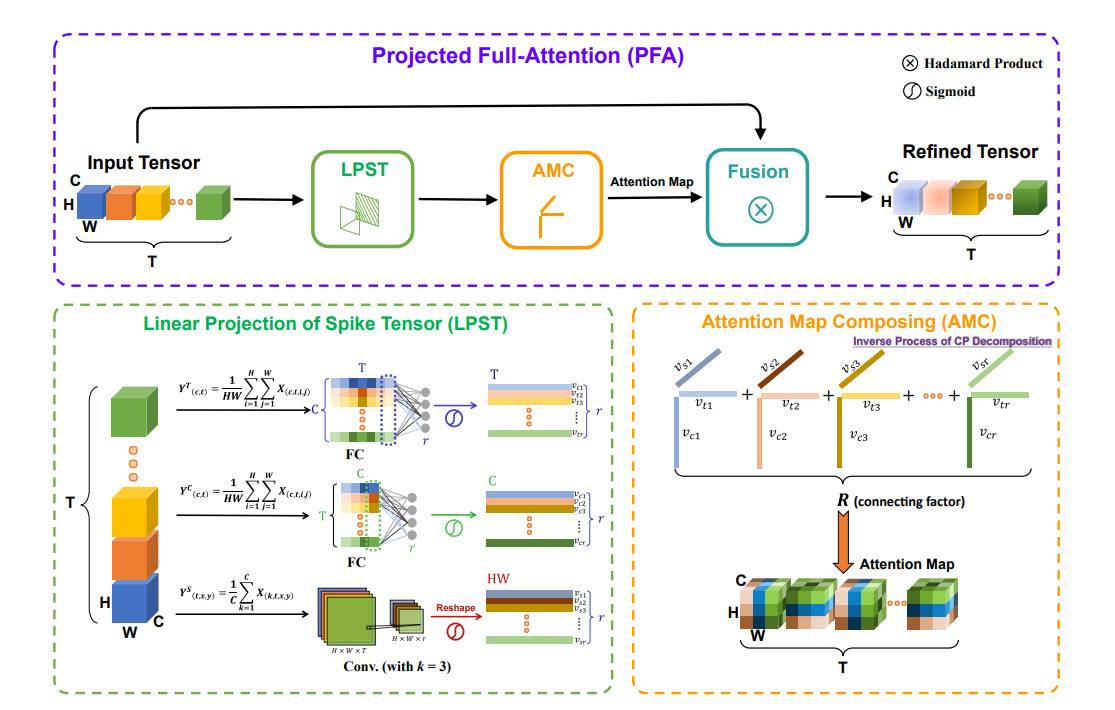

title = {Tensor decomposition based attention module for spiking neural networks},
journal = {Knowledge-Based Systems},
volume = {295},
pages = {111780},
year = {2024},
issn = {0950-7051},
doi = {https://doi.org/10.1016/j.knosys.2024.111780},
url = {https://www.sciencedirect.com/science/article/pii/S0950705124004143},
author = {Haoyu Deng and Ruijie Zhu and Xuerui Qiu and Yule Duan and Malu Zhang and Liang-Jian Deng},
keywords = {Spiking neural network, Attention mechanism, Tensor decomposition, Neuromorphic computing}
}