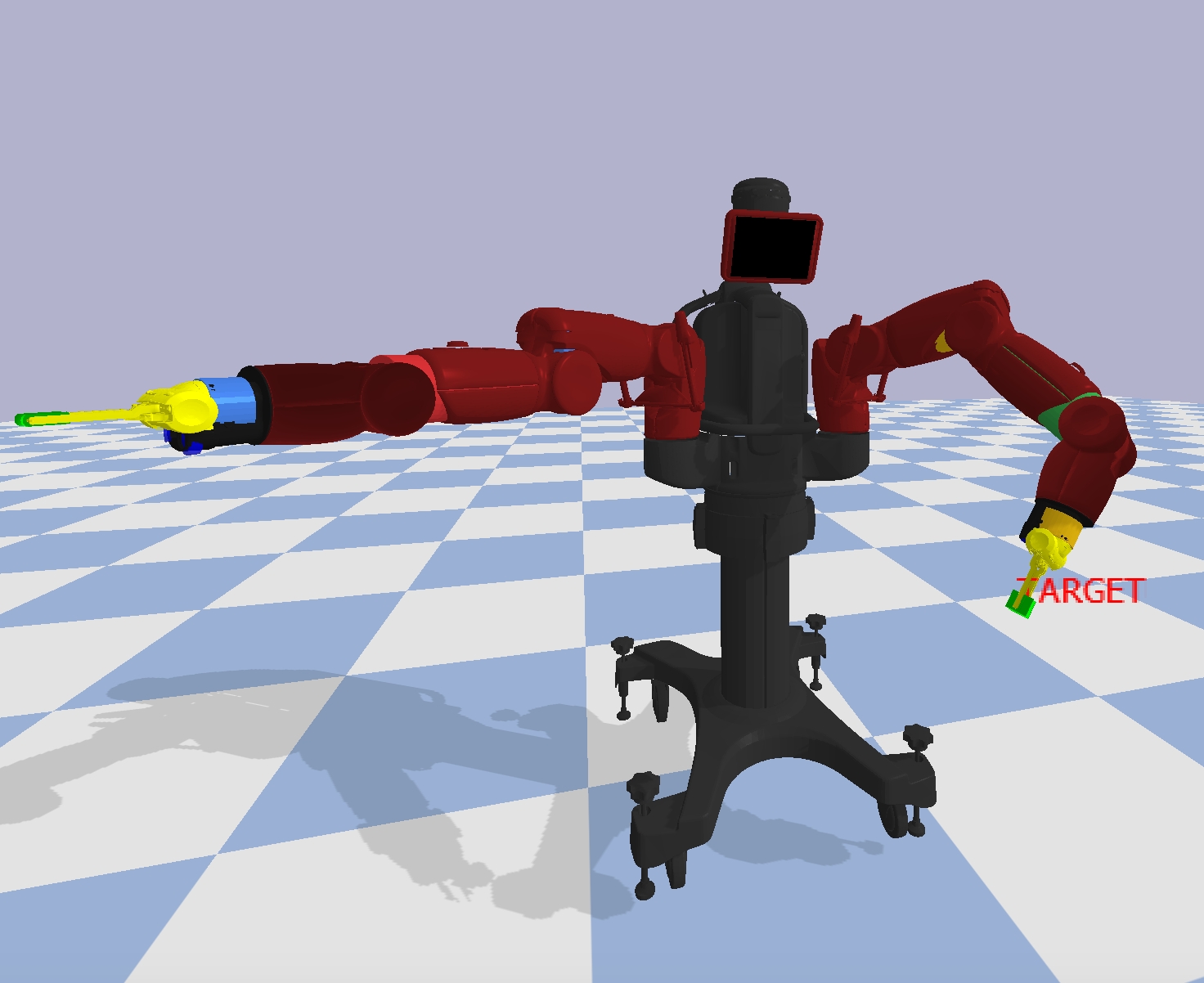

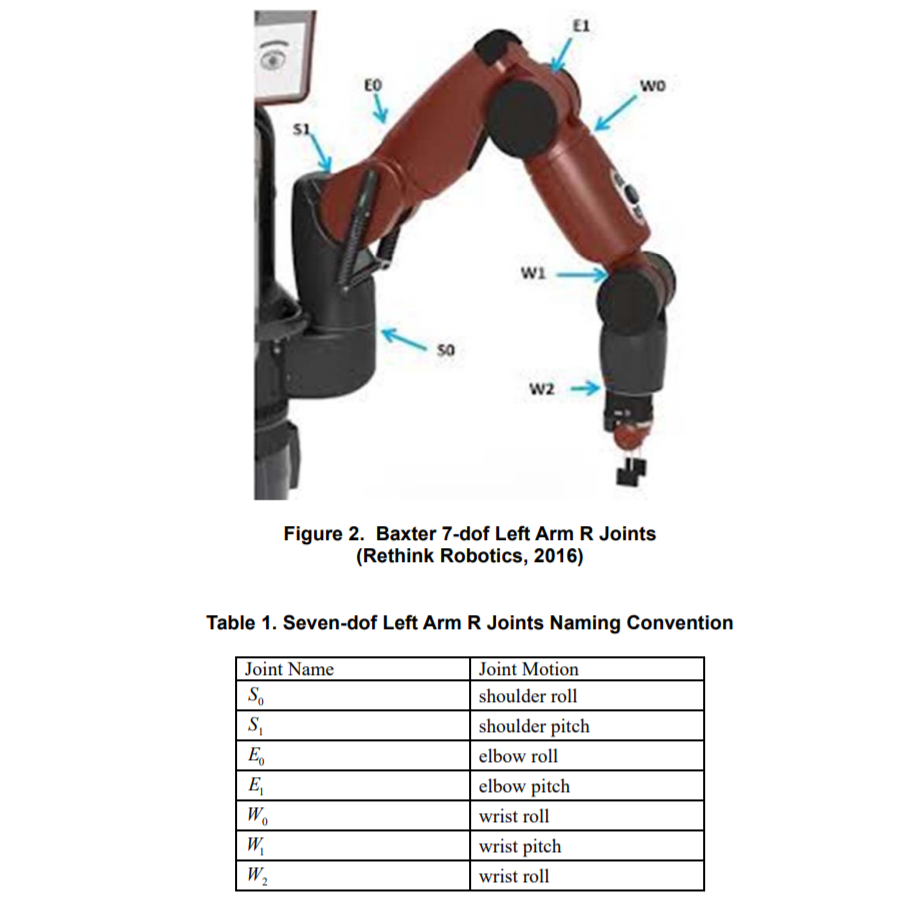

This environment was built by our team at RoboReG, Robotics Club, IIT BHU, to tackle an open challenge in robotics: simultaneous work. Humans are generally efficient at managing different things at the same time using both hands. We therefore want to explore whether reinforcement learning algorithms can achieve such tasks at a level comparable to humans, or even better. For this purpose, the Baxter humanoid robot is used as the agent. It is a gym-compatible environment built with the PyBullet simulator.

Baxter is an industrial humanoid robot built by Rethink Robotics. We used it in our environment to get a good idea of how a humanoid agent behaves when placed in the same scenario as a human. For more info on Baxter, visit this

We have divided our work into several steps which we want to achieve one by one.

- Creating experimental and environmental setup

- Finding Joint Reward Function

- Learn easy simultaneous tasks first

- Increase task complexity

- Increase interaction complexity

We have built the required environment, targeting some basic tasks such as simultaneous lifting and touching. We are now working on our MDP (Markov Decision Process) formulation along with the joint reward function.

Anyone who wants to contribute to this repo or modify it can fork the repo and create pull requests here. The environment can also be used as a ready-made setup for tackling the same challenges.

- python3

- pybullet

- gym

- opencv

- numpy

- matplotlib

After cloning the repo, install the environment using `pip install -e baxter-env`. It will automatically install the required additional dependencies.

In case there are problems with the PyBullet installation, you can refer to this guide.

After that, use the following lines to import the environment in your workspace.

    import gym
    import baxter_env

    env = gym.make('baxter_env-v0')

After importing the environment, several functions are available for various purposes. Some of the important ones are listed below; for more details on the functions and arguments, you can see here

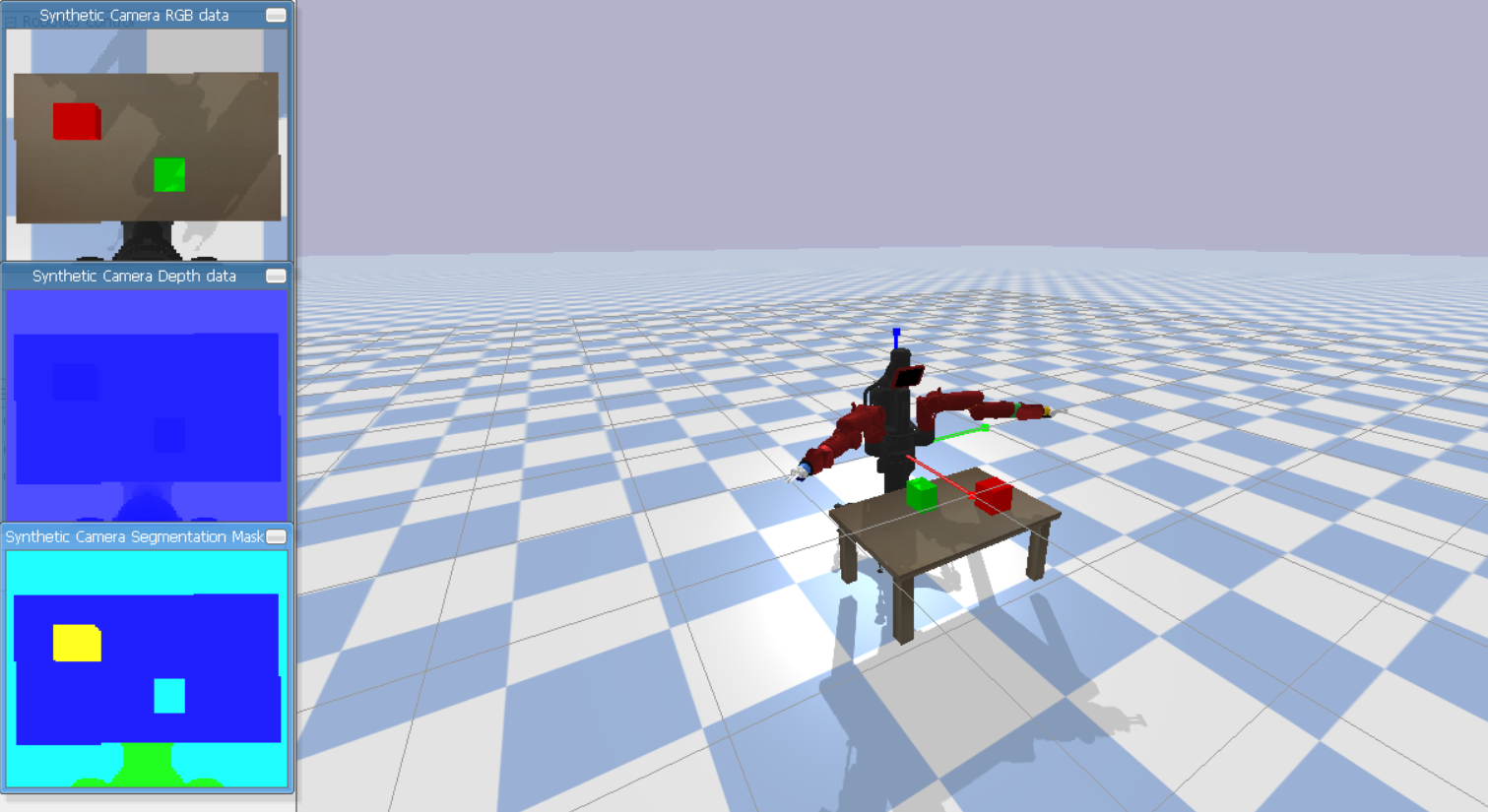

`env.getImage()`

Returns a tuple containing a 128x128 RGB image along with the depth map of the environment from Baxter's eye view.

`env.step()`

Takes a list of actions, performs them, and returns the required state information.

`env.moveVC()`

Moves a joint to a specified position, given the index of that joint.

`env.render()`

Renders the eye-view image of the environment.

`env.reset()`

Resets the whole environment and returns information about the state.
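The functions above follow the usual gym pattern of a reset followed by repeated step calls. A minimal sketch of that loop is shown below. Since the exact action format and return values of `env.step()` are not documented here, the sketch uses a hypothetical stand-in class `StubEnv` with the classic gym four-value return `(observation, reward, done, info)`. Both the stub and the `run_episode` helper are assumptions for illustration and are not part of `baxter_env`.

```python
import random


class StubEnv:
    """Hypothetical stand-in for the real environment (not part of baxter_env).

    It mimics the classic gym interface: reset() returns an observation and
    step(action) returns (observation, reward, done, info).
    """

    def __init__(self, n_joints=4, max_steps=10):
        self.n_joints = n_joints
        self.max_steps = max_steps
        self.t = 0
        self.state = [0.0] * n_joints

    def reset(self):
        self.t = 0
        self.state = [0.0] * self.n_joints
        return list(self.state)

    def step(self, action):
        # Treat the action as one position increment per joint.
        self.state = [s + a for s, a in zip(self.state, action)]
        self.t += 1
        reward = -sum(abs(s) for s in self.state)  # placeholder reward
        done = self.t >= self.max_steps
        return list(self.state), reward, done, {}


def run_episode(env, policy):
    """Roll out one episode and return (total_reward, number_of_steps)."""
    obs = env.reset()
    total_reward, steps, done = 0.0, 0, False
    while not done:
        action = policy(obs)
        obs, reward, done, _info = env.step(action)
        total_reward += reward
        steps += 1
    return total_reward, steps


def random_policy(obs):
    """Sample a small random increment for every joint."""
    return [random.uniform(-0.1, 0.1) for _ in obs]


if __name__ == "__main__":
    total, steps = run_episode(StubEnv(), random_policy)
    print(f"episode finished after {steps} steps, return {total:.3f}")
```

With the real environment, `StubEnv()` would be replaced by `gym.make('baxter_env-v0')`, assuming its `step()` follows the same return convention.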

There are also other functions that we have made for our own purposes, such as:

`env.BeizerCurve()`

An implementation of a family of curves that gives a trajectory passing through some points on the basis of some weights.

`env.getReward()`

Returns the reward for attempting simultaneous touching (a naive implementation; we are working on improving it).
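To make the idea of a reward for simultaneous touching concrete, here is a minimal sketch of one possible joint reward. It is not the implementation behind `env.getReward()`: the function name `joint_touch_reward`, the distance threshold, and the bonus value are all assumptions chosen for illustration. The reward penalizes the distance of each hand from its own target and adds a bonus only when both hands touch at the same time, so that solving one side alone is not enough.

```python
import math


def joint_touch_reward(left_hand, right_hand, left_target, right_target,
                       touch_threshold=0.05, bonus=10.0):
    """Hypothetical joint reward for touching two targets at the same time.

    All positions are (x, y, z) tuples in metres. The threshold and bonus
    are illustrative values, not taken from baxter_env.
    """
    d_left = math.dist(left_hand, left_target)
    d_right = math.dist(right_hand, right_target)

    # Dense shaping term: closer hands give a higher (less negative) reward.
    reward = -(d_left + d_right)

    # Sparse term: paid only when both hands are within the threshold.
    if d_left < touch_threshold and d_right < touch_threshold:
        reward += bonus
    return reward
```

The dense term gives a learning signal everywhere, while the bonus encodes the "simultaneous" requirement; other weightings are equally plausible.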

Some implementations related to these functions are available here

A few images and GIFs of our environment and the robot in action using some classical techniques.
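As an illustration of the trajectory helper mentioned above, the sketch below evaluates a standard Bézier curve using Bernstein polynomials as the weights. This is an assumption about the kind of curve involved, and the names `bezier_point` and `bezier_trajectory` and their arguments are hypothetical; the signature of `env.BeizerCurve()` may differ. Note that a standard Bézier curve passes through its first and last control points, while the intermediate points only pull the curve toward them.

```python
from math import comb


def bezier_point(control_points, t):
    """Evaluate a Bezier curve at parameter t in [0, 1].

    control_points is a list of equal-length tuples, e.g. (x, y, z).
    Each point is weighted by a Bernstein polynomial.
    """
    n = len(control_points) - 1
    dim = len(control_points[0])
    point = [0.0] * dim
    for i, p in enumerate(control_points):
        weight = comb(n, i) * (1 - t) ** (n - i) * t ** i
        for k in range(dim):
            point[k] += weight * p[k]
    return tuple(point)


def bezier_trajectory(control_points, n_samples=20):
    """Sample n_samples evenly spaced points along the curve (n_samples >= 2)."""
    return [bezier_point(control_points, i / (n_samples - 1))
            for i in range(n_samples)]
```

For example, `bezier_trajectory([(0.0, 0.0), (1.0, 2.0), (2.0, 0.0)], n_samples=5)` yields five waypoints that start at the first point, end at the last, and arc toward the middle one.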

| Mainak Samanta | Akshat Sood | Aman Mishra | Aditya Kumar |
| --- | --- | --- | --- |
| Lokesh Krishna | Niranth Sai | | |