One-hour recording on YouTube: https://youtu.be/9eddLZZvr0E.

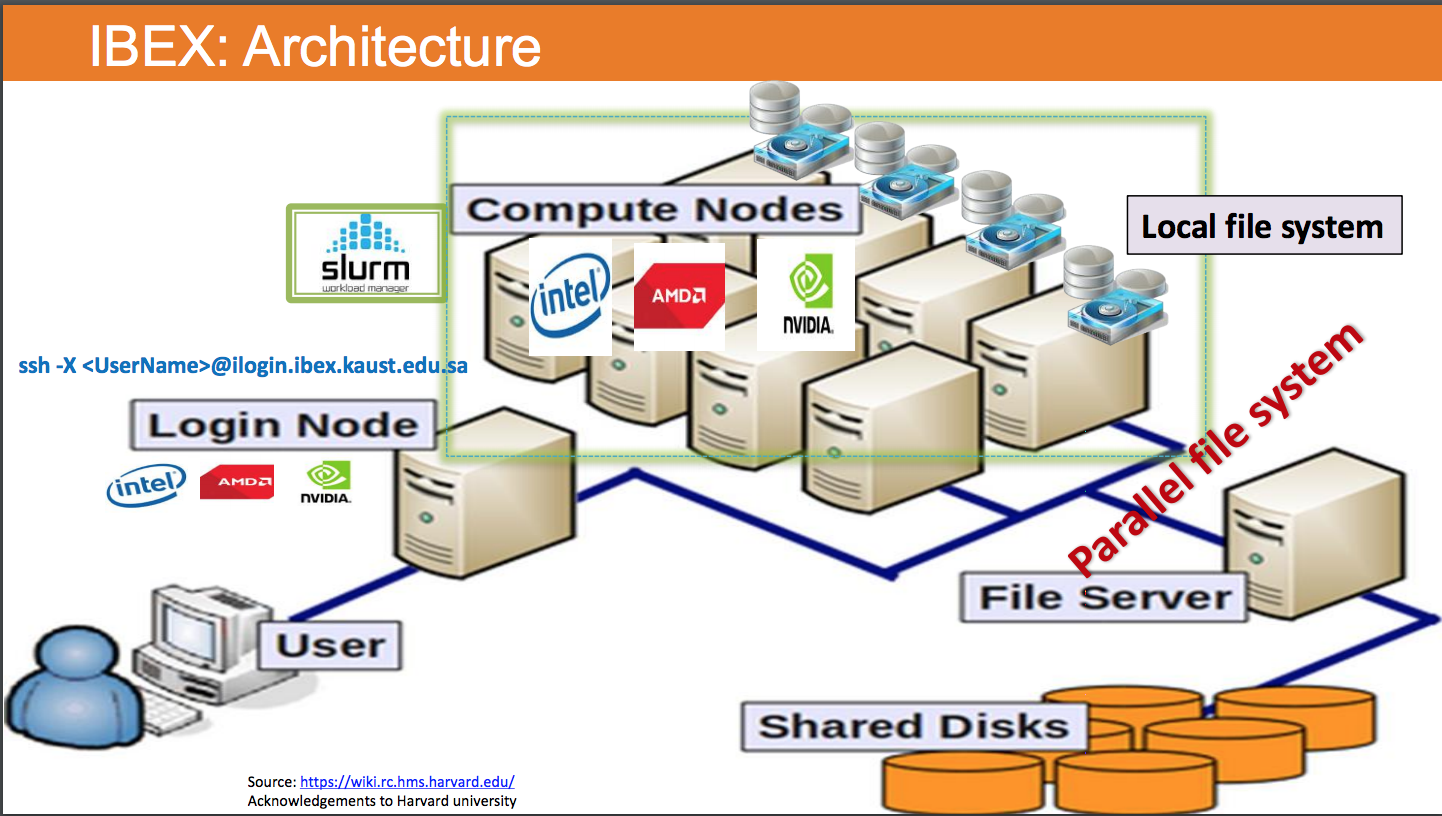

This is intended to be a tutorial of less than 30 minutes on how to move your work environment from a lab node (a standalone Linux machine) to IBEX (a cluster that uses Slurm to manage jobs).

The basic idea: suppose you know how to run your tasks on a standalone Linux machine but have hesitated to use IBEX because of its learning curve. After this tutorial, you should feel comfortable using IBEX.

- `ssh` and `authorized_keys` for login, and how to stay on the same login node if necessary
- `/ibex/scratch/<userID>`, `scp`, `rsync`, `wget`, `bquota`, `quota -s` for data transfer and disk usage
- `module av`, `module load`, `module unload` to check for and load packages
- `ginfo`, `sinfo -p batch`, `snodes` to check available resources
- `squeue`, `srun`, `sbatch`, `debug` vs `batch` to request a compute node and submit jobs, check the `.out` and `.err` files, and log in to that node to check usage stats
- Launch and access a Jupyter notebook and a custom web service

- Overview of the IBEX documentation

- Where to ask for help (such as installation and how to ask for extension on jobs)

- `tmux` for remote shells
- `imgcat`, the shell integration tool for viewing images inside the iTerm command-line window

Not covered:

- `cd`, `ls`, `vi` and other basic Linux command-line usage
- The deep learning pipeline itself (official tutorial links are given instead). We only go as far as `import torch` / `import tensorflow` to check that it works.

# client

cat ~/.ssh/id_rsa.pub | pbcopy # copy the public key to the clipboard (pbcopy is macOS-only)

ssh <portal_ID>@glogin.ibex.kaust.edu.sa

# server

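vi ~/.ssh/authorized_keys

Paste the public key into this file as a single line. Creating the file for the first time may fail with `bash: /home/user/.ssh/authorized_keys: No such file or directory`. That error means the `~/.ssh` directory does not exist yet and has to be created first.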
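Here is a minimal sketch of that server-side step. It assumes your key is the single line you copied with `pbcopy` above. `add_authorized_key` is a hypothetical helper that does three things:

- creates `~/.ssh` if needed;
- sets the permissions that sshd's default `StrictModes` check expects (no group or world write access);
- skips keys that are already present.

If OpenSSH's `ssh-copy-id` is available on the client, `ssh-copy-id <portal_ID>@glogin.ibex.kaust.edu.sa` does the same job in one step.

```bash
#!/bin/bash
# Append a public key to authorized_keys with permissions sshd accepts.
# add_authorized_key is a hypothetical helper; the optional second argument
# points it at a directory other than ~/.ssh (useful for testing).
add_authorized_key() {
  local key="$1"
  local ssh_dir="${2:-$HOME/.ssh}"
  local auth="$ssh_dir/authorized_keys"

  mkdir -p "$ssh_dir"
  chmod 700 "$ssh_dir"      # sshd refuses group/world-writable ~/.ssh
  touch "$auth"
  chmod 600 "$auth"

  # Append only if this exact line is not already in the file.
  if ! grep -qxF -- "$key" "$auth"; then
    printf '%s\n' "$key" >> "$auth"
  fi
}

# On the server, paste the key you copied on the client:
# add_authorized_key 'ssh-rsa AAAA... you@laptop'
```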

# stay in the same login node

(base) [luod@login510-22 ~]$ which glogin

~/.myscript/glogin

(base) [luod@login510-22 ~]$ cat ~/.myscript/glogin

#!/bin/bash

ssh login510-27
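Pinning one login node matters mainly for `tmux`. A session lives on the login node where it was created, but `glogin.ibex.kaust.edu.sa` may place you on a different node each time (login510-22 versus login510-27 in this transcript). Below is a sketch of a slightly more general `glogin`. It assumes you want to pass the node name as an argument and skip the extra hop when you are already on that node. `glogin_to` and the `SSH_CMD` override are hypothetical.

```bash
#!/bin/bash
# Hypothetical generalization of ~/.myscript/glogin:
#   glogin_to [login-node]   (defaults to login510-27, as in the script above)
# Set SSH_CMD=echo to print the target instead of connecting.
glogin_to() {
  local target="${1:-login510-27}"
  local here
  here="$(uname -n)"
  here="${here%%.*}"          # short host name, e.g. login510-22

  if [ "$here" = "$target" ]; then
    echo "already on $target"
    return 0
  fi
  ${SSH_CMD:-ssh} "$target"
}
```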

(base) [luod@login510-22 ~]$ glogin

Last login: Sun Jan 31 17:00:01 2021 from 10.240.0.57

/-------------------------** IBEX Support **--------------16-12-2020-\

| |

| For help with Ibex use either: |

| |

| http://hpc.kaust.edu.sa/ibex - Wiki containing ibex information |

| |

| http://kaust-ibex.slack.com - use #general for simple queries |

| |

| ibex@hpc.kaust.edu.sa - to create a request ticket |

| |

\--------------------------------------------------------------------/

(base) [luod@login510-27 ~]$

# server

(base) [luod@login510-27 ~]$ pwd

/home/luod

(base) [luod@login510-27 ~]$ which cds

alias cds='cd /ibex/scratch/luod'

/usr/bin/cd

(base) [luod@login510-27 ~]$ cds

(base) [luod@login510-27 luod]$ pwd

/ibex/scratch/luod

# client

scp output.* luod@glogin.ibex.kaust.edu.sa:/ibex/scratch/luod/oxDNA_tutorial/oxDNA_tutorial_input_files_sbatch/tetraBrick_4_vertices/987/add_7/

# Make or update a remote location to be an exact copy of the source:

rsync -av --progress --delete /path/to/localdir/ USER@HOSTNAME:/path/to/destination

Check the IBEX documentation for more on `rsync`.

For `wget` downloads behind a login page, see "How to get past the login page with Wget?"

(base) [luod@login510-27 ~]$ bquota # check scratch disk usage

Quota information for IBEX filesystems:

Scratch (/ibex/scratch): Used: 392.90 GB Limit: 1536.00 GB
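`bquota` only reports a total. To see which directories account for the usage, here is a generic sketch using standard `du` and `sort`. It assumes GNU coreutils for `sort -h`. `biggest_dirs` is a hypothetical helper name. Hidden entries (dot-directories) are not matched by `*`.

```bash
#!/bin/bash
# List the N largest entries directly under a directory, largest first.
# biggest_dirs is a hypothetical helper built only on du, sort and head.
biggest_dirs() {
  local dir="${1:-.}"
  local n="${2:-10}"
  # du -s: one total per entry; -h: human-readable sizes (K, M, G)
  du -sh "$dir"/* 2>/dev/null | sort -rh | head -n "$n"
}

# Example on IBEX: biggest_dirs "/ibex/scratch/$USER" 5
```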

(base) [luod@login510-27 ~]$ quota -s # check home dir usage

(base) [luod@login510-27 ~]$ module av pytorch

------------------------------------- /sw/csgv/modulefiles/applications -------------------------------------

pytorch/1.0.1-cuda10.0-cudnn7.6-py3.6 pytorch/1.2.0-cuda10.0-cudnn7.6-py3.7

(base) [luod@login510-27 ~]$ module av tensorflow

------------------------------------- /sw/csgv/modulefiles/applications -------------------------------------

tensorflow/1.11.0 tensorflow/1.14.0(default)

tensorflow/1.11.0-cuda9.2-cudnn7.2-py3.6 tensorflow/1.14.0-cuda10.0-cudnn7.6-py3.7

tensorflow/1.13.1 tensorflow/2.0.0

tensorflow/1.13.1-cuda10.0-cudnn7.6-py3.6 tensorflow/2.0.0-cuda10.0-cudnn7.6-py3.7

(base) [luod@login510-27 ~]$ module av cuda

-------------------------------------- /sw/csgv/modulefiles/compilers ---------------------------------------

cuda/10.0.130 cuda/10.1.243(default) cuda/11.0.1 cuda/9.2.148.1

cuda/10.1.105 cuda/10.2.89 cuda/9.0.176

(base) [luod@login510-27 ~]$ module load pytorch/1.2.0-cuda10.0-cudnn7.6-py3.7

(base) [luod@login510-27 ~]$ python

Python 3.7.4 (default, Aug 13 2019, 20:35:49)

[GCC 7.3.0] :: Anaconda, Inc. on linux

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

>>> print(torch.__version__)

1.2.0
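Before launching a long job, it is worth checking that the loaded module really provides the packages you need. Without it, `python` falls back to the system Python 2.7, as the `module unload` step shows. This is a minimal sketch. `check_py_modules` is a hypothetical helper. It runs whatever `python` the loaded module puts first on `PATH`, unless you override it with `PYTHON`.

```bash
#!/bin/bash
# For each package name given, report whether the current interpreter can import it.
# check_py_modules is hypothetical; returns non-zero if any import fails.
check_py_modules() {
  local py="${PYTHON:-python}"
  local status=0 mod
  for mod in "$@"; do
    if "$py" -c "import $mod" >/dev/null 2>&1; then
      echo "ok      $mod"
    else
      echo "missing $mod"
      status=1
    fi
  done
  return "$status"
}

# After `module load pytorch/1.2.0-cuda10.0-cudnn7.6-py3.7`:
# check_py_modules torch numpy
```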

(base) [luod@login510-27 ~]$ module unload pytorch/1.2.0-cuda10.0-cudnn7.6-py3.7

Unloading module for Anaconda

Anaconda 4.4.0 is now unloaded

GNU 6.4.0 is now unloaded

Unloading module for Machine Learning 2019.02

Machine Learning 2019.02 is now unloaded

(base) [luod@login510-27 ~]$ python

Python 2.7.5 (default, Nov 16 2020, 22:23:17)

[GCC 4.8.5 20150623 (Red Hat 4.8.5-44)] on linux2

Type "help", "copyright", "credits" or "license" for more information.

>>> import torch

Traceback (most recent call last):

File "<stdin>", line 1, in <module>

ImportError: No module named torch

(base) [luod@login510-27 ~]$ ginfo

GPUS currently in use:

GPU Models: Total Used Free

gtx1080ti 64 13 51

p100 12 5 7

p6000 4 0 4

rtx2080ti 32 11 21

v100 274 241 33

Total: 386 270 116

`squeue`, `srun`, `sbatch`, `debug` vs `batch`: request a compute node, submit jobs, check the `.out` and `.err` files, and log in to that node to check usage stats.

(base) [luod@login510-22 ~]$ myq

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

13912127 gpu4 plane_1X luod R 51:29 1 gpu211-10

(base) [luod@login510-22 ~]$ which myq

alias myq='squeue -u luod'

/opt/slurm/cluster/ibex/install/bin/squeue

(base) [luod@login510-22 ~]$ which gpu-4h-bash

alias gpu-4h-bash='srun -p batch --pty --time=4:00:00 --constraint=[gpu] --cpus-per-task=1 --gres=gpu:1 bash -l'

/opt/slurm/cluster/ibex/install/bin/srun

(base) [luod@login510-22 ~]$ which v100-4h-bash

alias v100-4h-bash='srun -p batch --pty --time=4:00:00 --constraint=[gpu] --cpus-per-task=1 --gres=gpu:v100:1 bash -l'

/opt/slurm/cluster/ibex/install/bin/srun
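The two aliases differ only in their `--gres` request. Below is a sketch of one function that builds the same `srun` line for any wall time and an optional GPU type. `gpu_bash` and its `DRY_RUN` switch are hypothetical. Every `srun` flag is copied from the aliases above.

```bash
#!/bin/bash
# Hypothetical generalization of the gpu-4h-bash / v100-4h-bash aliases:
#   gpu_bash HOURS [GPU_TYPE]
# Set DRY_RUN=1 to print the srun command instead of running it.
gpu_bash() {
  local hours="${1:?usage: gpu_bash HOURS [GPU_TYPE]}"
  local gpu_type="${2:-}"
  local gres="gpu:1"
  if [ -n "$gpu_type" ]; then
    gres="gpu:${gpu_type}:1"
  fi

  # Same flags as the aliases; only --time and --gres vary.
  local cmd=(srun -p batch --pty "--time=${hours}:00:00" "--constraint=[gpu]"
             --cpus-per-task=1 "--gres=${gres}" bash -l)

  if [ "${DRY_RUN:-0}" = 1 ]; then
    echo "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# gpu_bash 4        # same request as gpu-4h-bash
# gpu_bash 2 v100   # 2-hour interactive shell on a V100
```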

(base) [luod@login510-22 ~]$ gpu-4h-bash

srun: job 13912529 queued and waiting for resources

srun: job 13912529 has been allocated resources

(base) [luod@gpu211-06 ~]$ nvidia-smi

See the IBEX job generator, and the IBEX documentation for more `srun` commands.

(base) [luod@login510-22 test]$ ls

print_pytorch_version.py run_all.sh

(base) [luod@login510-22 test]$ cat print_pytorch_version.py

import torch

print(torch.__version__)

(base) [luod@login510-22 test]$ cat run_all.sh

#!/bin/bash

#SBATCH -N 1

#SBATCH --partition=batch

#SBATCH -J test

#SBATCH -o test.%J.out

#SBATCH -e test.%J.err

#SBATCH --mail-user=deng.luo@kaust.edu.sa

#SBATCH --mail-type=ALL

#SBATCH --time=4:00:00

#SBATCH --mem=128G

#SBATCH --gres=gpu:1

#SBATCH --cpus-per-task=6

#SBATCH --constraint=[gpu]

#run the application:

module load pytorch/1.2.0-cuda10.0-cudnn7.6-py3.7

python print_pytorch_version.py

now=$(date +"%T")

echo "Current time : $now"

sleep 120

now=$(date +"%T")

echo "Current time : $now"

(base) [luod@login510-22 test]$ sbatch run_all.sh

Submitted batch job 13912810
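When scripting around `sbatch`, you usually need the job ID it prints. `sbatch --parsable` prints only the ID (followed by `;cluster` on multi-cluster setups), which is the simplest route. If you are parsing the default message instead, a small filter is enough. `job_id_from_sbatch` is a hypothetical name for it.

```bash
#!/bin/bash
# Extract the numeric job ID from sbatch's default output on stdin,
# e.g. "Submitted batch job 13912810" -> 13912810. Prints nothing on errors.
job_id_from_sbatch() {
  awk '/^Submitted batch job [0-9]+/ { print $4 }'
}

# Typical use on IBEX:
#   jobid=$(sbatch run_all.sh | job_id_from_sbatch)
#   jobid=$(sbatch --parsable run_all.sh | cut -d';' -f1)   # same result via --parsable
#   squeue -j "$jobid"
```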

(base) [luod@login510-22 test]$ myq

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

13912810 gpu4 test luod R 0:01 1 gpu212-10

(base) [luod@login510-22 test]$ which showJob

~/.myscript/showJob

(base) [luod@login510-22 test]$ cat ~/.myscript/showJob

#!/bin/bash

if [[ $# -eq 0 ]] ; then

echo 'usage: showJob <SLURM_JOB_ID>'

exit 0

fi

SLURM_JOB_ID=$1

scontrol show jobid -dd $SLURM_JOB_ID

(base) [luod@login510-22 test]$ showJob 13912810

...

...

...
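`scontrol show jobid -dd` prints a block of `Key=Value` pairs (elided above). To pull out a single field such as `JobState` or `NodeList`, you can split the output on whitespace and match the key. This is a sketch. `job_field` is hypothetical, and it assumes the value contains no spaces. That holds for these fields, but not necessarily for every key (for example a `WorkDir` path with spaces).

```bash
#!/bin/bash
# Print the value of one Key=Value field from `scontrol show job` output on stdin.
# job_field is hypothetical; splitting on whitespace truncates values containing spaces.
job_field() {
  local key="$1"
  # One Key=Value token per line, then print everything after the first '='.
  tr -s ' \t' '\n\n' | awk -F= -v k="$key" '$1 == k { sub(/^[^=]*=/, ""); print; exit }'
}

# Typical use on IBEX:
#   scontrol show jobid -dd 13912810 | job_field JobState
#   scontrol show jobid -dd 13912810 | job_field NodeList
```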

(base) [luod@login510-22 test]$ which gotoGPUJob

~/.myscript/gotoGPUJob

(base) [luod@login510-22 test]$ cat ~/.myscript/gotoGPUJob

#!/bin/bash

if [[ $# -eq 0 ]] ; then

echo 'usage: gotoGPUJob <SLURM_JOB_ID> [NUMBER_OF_GPUS=1]'

exit 0

fi

SLURM_JOB_ID=$1

if [ -z "$2" ]; then

NUMBER_OF_GPUS=1

else

NUMBER_OF_GPUS=$2

fi

srun --jobid $SLURM_JOB_ID --gpus=$NUMBER_OF_GPUS --pty /bin/bash --login

(base) [luod@login510-22 test]$ gotoGPUJob 13912810

(base) [luod@gpu212-10 test]$ nvidia-smi

...

...

...

(base) [luod@gpu212-10 test]$ logout

(base) [luod@login510-22 test]$ ls

run_all.sh test.13912810.err test.13912810.out

(base) [luod@login510-22 test]$ myq

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

13912810 gpu4 test luod R 1:01 1 gpu212-10

(base) [luod@login510-22 test]$ less test.13912810.out

(base) [luod@login510-22 test]$ less test.13912810.err

Check your email for BEGIN, COMPLETED, FAILED, and CANCELED notifications (requested by `#SBATCH --mail-type=ALL`).
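After a few resubmissions, the directory fills up with `test.<jobid>.out` and `test.<jobid>.err` files, named by the `#SBATCH -o test.%J.out` and `-e test.%J.err` lines in `run_all.sh`. The hypothetical helper `latest_log` picks the file with the highest job ID, which is normally the most recent submission. It assumes the `<prefix>.<jobid>.<ext>` naming seen in this transcript. `tail -f "$(latest_log test out)"` then follows a running job's output.

```bash
#!/bin/bash
# Print the <prefix>.<jobid>.<ext> file with the largest numeric job ID.
#   latest_log PREFIX [EXT=out] [DIR=.]
# latest_log is hypothetical; non-numeric middle parts are ignored.
latest_log() {
  local prefix="$1" ext="${2:-out}" dir="${3:-.}"
  ( cd "$dir" >/dev/null && printf '%s\n' "$prefix".*."$ext" ) \
    | awk -F. -v ext="$ext" '$NF == ext && $(NF-1) ~ /^[0-9]+$/ { print $(NF-1), $0 }' \
    | sort -n -k1,1 | tail -n 1 | cut -d' ' -f2-
}

# latest_log test out   # e.g. test.13912810.out
# latest_log test err
```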

Best Practices for Deep Learning

(base) [luod@login510-27 add_staple_2]$ tmux ls

ox: 1 windows (created Wed Jan 20 21:07:34 2021) [140x41]

oxdnaweb: 1 windows (created Thu Dec 31 15:04:54 2020) [110x44]

(base) [luod@login510-27 add_staple_2]$ tmux a -t oxdnaweb

[detached]

To find the documentation, search for "kaust ibex", open the "Welcome to Ibex" page, and go to the "Documentation » Ibex User Guide" tab.

To ask for an extension on a job, use the #extension channel in the IBEX Slack workspace.

(base) [luod@login510-27 ~]$ tmux ls # show all sessions

ox: 1 windows (created Wed Jan 20 21:07:34 2021) [140x41]

oxdnaweb: 1 windows (created Thu Dec 31 15:04:54 2020) [127x48]

(base) [luod@login510-27 ~]$ tmux new -s test # create a new session named "test"

[detached]

(base) [luod@login510-27 ~]$ tmux ls

ox: 1 windows (created Wed Jan 20 21:07:34 2021) [140x41]

oxdnaweb: 1 windows (created Thu Dec 31 15:04:54 2020) [127x48]

test: 3 windows (created Tue Feb 2 12:04:36 2021) [80x24]

(base) [luod@login510-27 ~]$ tmux a -t test # to attach

[detached]

- `control-b c` to create more windows in a session
- `control-b <0/1/2/...>` to switch to other windows in a session
- `control-b d` to detach from a session

(base) [luod@login510-22 test]$ wget https://innovation.kaust.edu.sa/wp-content/uploads/2016/01/thought-leadership-blog-picchanged.jpg

(base) [luod@login510-22 test]$ imgcat thought-leadership-blog-picchanged.jpg