IBM Cloud Mobile Starter for Core ML and Visual Recognition in Swift.

This IBM Cloud Mobile Starter showcases Core ML and the Watson Visual Recognition service. The starter kit uses Core ML to classify images offline with models trained by the Watson Visual Recognition service.

- iOS 11.0+

- Xcode 9

- Swift 4.0

- Visual Recognition Model Training

- IBM Cloud Mobile Services Dependency Management

- Watson Dependency Management

- Watson Credential Management

This application comes with support for a "Connectors" training data set. If the Connectors Visual Recognition model does not exist, the first model found remotely or any one that exists locally will be used. The gif and accompanying instructions below show you how to link your service to Watson Studio and train your model. An mp4 version of the gif is also provided in the Resources folder if needed.

- Launch the Visual Recognition Tool from your Starter Kit's dashboard by selecting Launch Tool.

- Begin creating your model by selecting Create Model.

- If a project is not yet associated with the Visual Recognition instance you created, a project will be created. Otherwise, skip to Create Model.

- Name your project and click Create.

- Tip: If no storage is defined, click refresh.

- After creating your project, you will be moved to your project dashboard.

- Go to the settings tab, scroll down to Associated Services, select Add Service -> Watson.

- From the Watson services page, select Visual Recognition.

- Select the Existing tab on the service info page and then select your service instance from the dashboard.

- Now that your service has been bound, you can begin creating your model by selecting Assets from your Project dashboard and then clicking Add Visual Recognition Model.

-

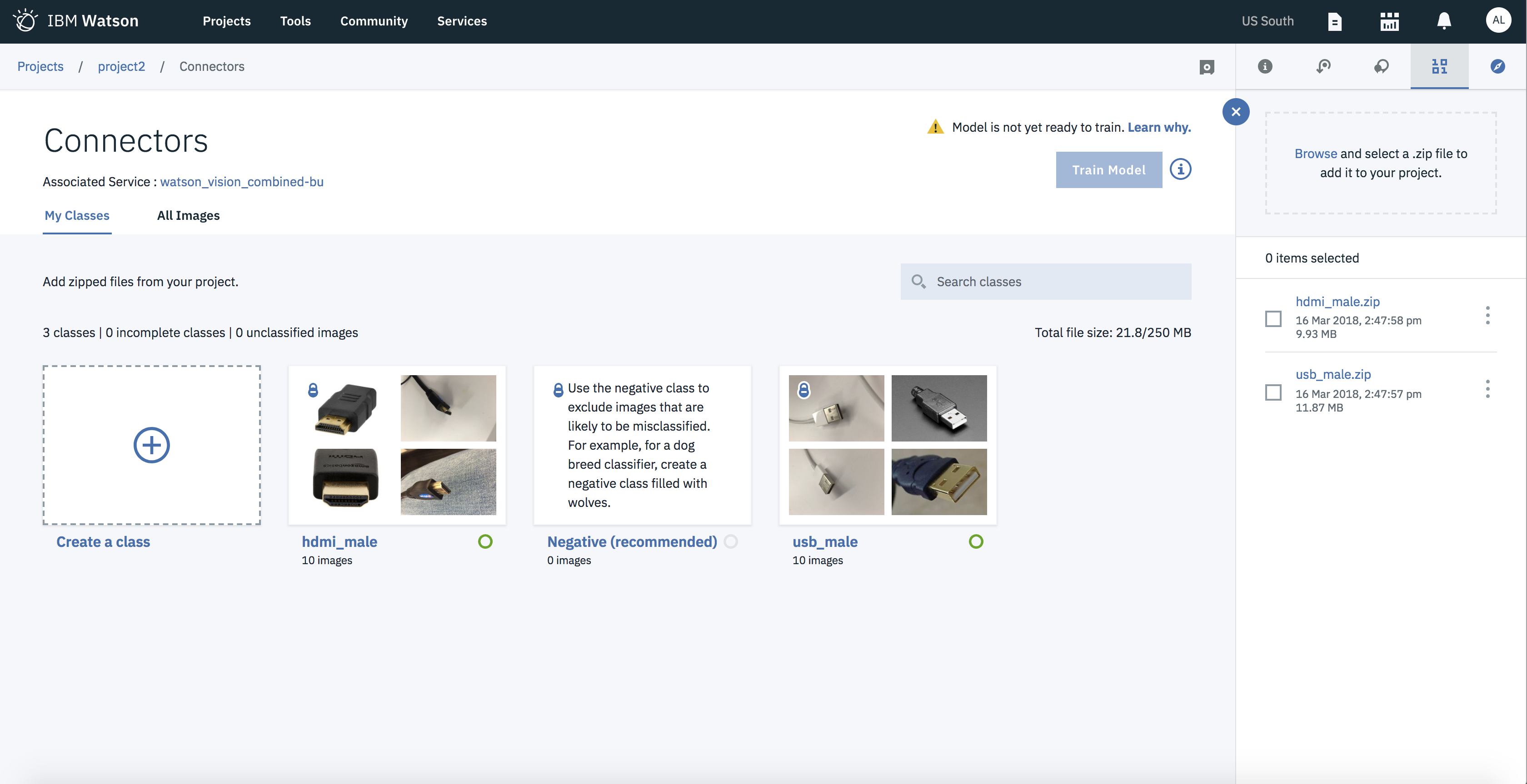

From the model creation tool, modify the classifier name to be

Connectors. By default, the application tries to use this classifier name. If it does not exist it will use the first available. If you would like to use a specific model, make sure to modify thedefaultClassifierIDfield in main View Controller. -

From the sidebar, upload hdmi_male.zip and usb_male.zip by dragging and dropping their example data sets found in

./Data/Trainingfolder into separate class folders. Then, select each dataset and add them to your model from the drop-down menu. Feel free to add more classes using your own image sets to enhance the classifier!

-

Select

Train Modelin the upper-right corner then simply wait for the model to be fully trained and you'll be good to go!
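The classifier fallback described in the steps above (prefer Connectors, otherwise the first available model) can be sketched in plain Swift. This is only an illustration of the selection order, not the starter's actual code: `ClassifierInfo` and `chooseClassifierID` are hypothetical names, matching by exact name is an assumption, and so is trying remote models before local ones.

```swift
// Hypothetical sketch of the fallback order; these names are not part of
// the starter or the Watson SDK.
struct ClassifierInfo {
    let id: String
    let name: String
}

/// Picks a classifier ID, preferring one whose name matches `preferredName`.
/// Assumption: remote classifiers are considered before local models.
func chooseClassifierID(preferredName: String,
                        remote: [ClassifierInfo],
                        localIDs: [String]) -> String? {
    // 1. A remote classifier with the preferred name wins.
    if let match = remote.first(where: { $0.name == preferredName }) {
        return match.id
    }
    // 2. Otherwise fall back to the first remote classifier found.
    if let firstRemote = remote.first {
        return firstRemote.id
    }
    // 3. Otherwise use any model that already exists on the device.
    return localIDs.first
}
```

If nothing is available remotely or locally, the function returns nil, and the caller would need to prompt for a model to be trained first.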

The IBM Cloud Mobile services SDK uses CocoaPods to manage and configure dependencies.

You can install CocoaPods using the following command:

$ sudo gem install cocoapods

If the CocoaPods repository is not configured, run the following command:

$ pod setup

For this starter, a pre-configured Podfile has been included in your project. To download and install the required dependencies, run the following command in your project directory:

$ pod install

Now open the Xcode workspace: {APP_Name}.xcworkspace. From now on, open the .xcworkspace file because it contains all the dependencies and configurations.

If you run into any issues during the pod install, it is recommended to run a pod update by using the following commands:

$ pod update

$ pod install

This starter uses the Watson Developer Cloud iOS SDK in order to use the Watson Visual Recognition service.

The Watson Developer Cloud iOS SDK uses Carthage to manage dependencies and build binary frameworks.

You can install Carthage with Homebrew:

$ brew update

$ brew install carthage

To use the Watson Developer Cloud iOS SDK in any of your applications, specify it in your Cartfile:

github "watson-developer-cloud/swift-sdk"

For this starter, a pre-configured Cartfile has been included in your project.

Run the following command to build the dependencies and frameworks:

$ carthage update --platform iOS

Note: You may have to run carthage update --platform iOS --no-use-binaries if the prebuilt binary was compiled with a lower version of Swift than the one you are currently using.

Once the build has completed, the frameworks can be found in the Carthage/Build/iOS/ folder. The Xcode project in this starter already includes framework links to the following frameworks in this directory:

- VisualRecognitionV3.framework

If you build your Carthage frameworks in a separate folder, you will have to drag-and-drop the above frameworks into your project and link them in order to run this starter successfully.

Once the dependencies have been built and configured for the IBM Cloud Mobile service SDKs as well as the Watson Developer Cloud SDK, no more actions are needed! The unique credentials to your Watson Visual Recognition service have been injected into the application during generation.

You can now run the application on a simulator or physical device:

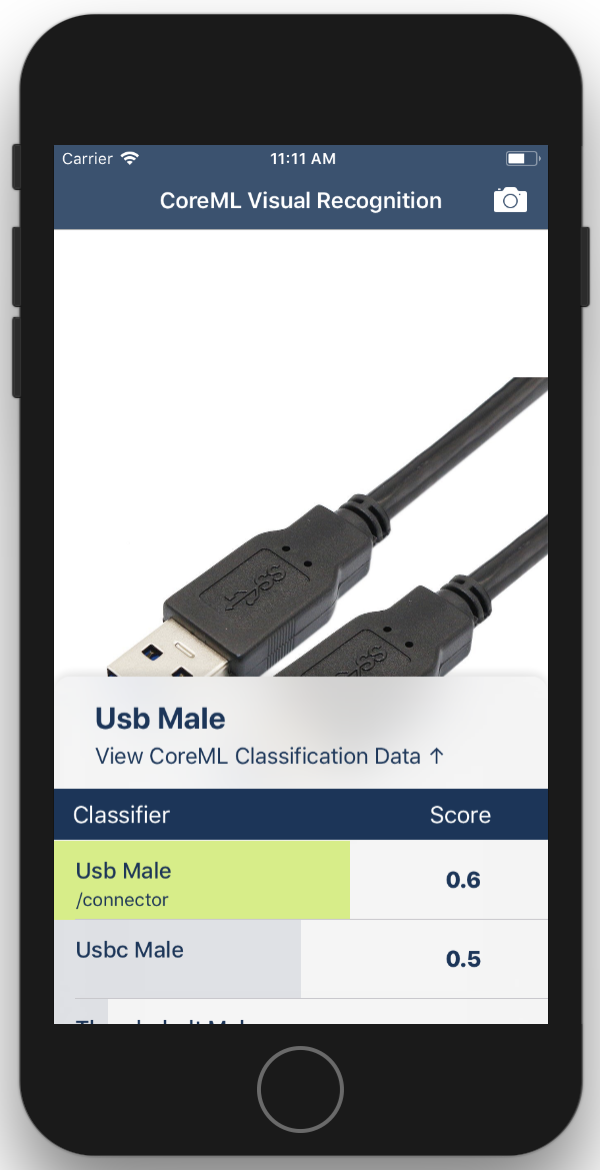

The application enables you to perform Visual Recognition on images from your Photo Library or on photos you take using your device's camera (physical device only).

When you run the app, the SDK makes sure that the version of the Visual Recognition model on your device is in sync with the latest version on IBM Cloud. If the local version is older, the SDK downloads the model to your device. With a local model, you can classify images offline.
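The SDK handles this sync for you, but the decision it describes is easy to picture. The following is a minimal sketch of that decision only, assuming each model carries a last-updated timestamp. `ModelVersion` and `needsDownload` are hypothetical names, and treating a missing local model as "needs download" is an assumption rather than documented SDK behavior.

```swift
import Foundation

// Hypothetical description of a model version; not an SDK type.
struct ModelVersion {
    let classifierID: String
    let updated: Date
}

/// Returns true when the device has no copy of the model (assumption)
/// or when the local copy is older than the one on IBM Cloud.
func needsDownload(local: ModelVersion?, remote: ModelVersion) -> Bool {
    guard let local = local else {
        return true // nothing on the device yet
    }
    return local.updated < remote.updated
}
```

When the check returns false, the existing local model can be used as-is, which is what makes offline classification possible.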

After your Core ML model has been created, you can select an image to analyze using the Core ML framework. Take a photo using your device's camera or choose a photo from your library using the toolbar buttons at the bottom of the application. The analysis will output a set of tags and their respective scores, indicating Watson's confidence.
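As a rough illustration of how a set of tags and scores might be prepared for display, the sketch below sorts results by confidence and formats each one as text. It is not the starter's implementation: `Tag` and `topTags` are hypothetical names, and the two-decimal formatting is just one possible choice.

```swift
import Foundation

// Hypothetical result type; the real SDK returns its own result objects.
struct Tag {
    let name: String
    let score: Double
}

/// Returns up to `limit` tags, highest score first, formatted as "name: 0.00".
func topTags(_ tags: [Tag], limit: Int) -> [String] {
    return tags
        .sorted { $0.score > $1.score }   // most confident first
        .prefix(max(0, limit))            // guard against negative limits
        .map { "\($0.name): " + String(format: "%.2f", $0.score) }
}
```

For example, with the two classes from the Connectors data set, a result scoring 0.91 for hdmi_male and 0.25 for usb_male would list hdmi_male first.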

This package contains code licensed under the Apache License, Version 2.0 (the "License"). You may obtain a copy of the License at http://www.apache.org/licenses/LICENSE-2.0 and may also view the License in the LICENSE file within this package.