Please cite these papers when using this code and data:

[MIG2020.pdf] Investigating perceptually based models to predict importance of facial blendshapes. Emma Carrigan, Katja Zibrek, Rozenn Dahyot, and Rachel McDonnell. 2020. In Motion, Interaction and Games (MIG '20). Association for Computing Machinery, New York, NY, USA, Article 2, 1–6. DOI:10.1145/3424636.3426904

and

[CAG2021.pdf] Model for predicting perception of facial action unit activation using virtual humans. Rachel McDonnell, Katja Zibrek, Emma Carrigan, and Rozenn Dahyot. 2021. Computers & Graphics. DOI:10.1016/j.cag.2021.07.022

The code is written in R and R Markdown (Rmd) and can be run with RStudio (tested on Windows 10 with RStudio version 1.3.1093). This package contains:

- Data
  - combined_resultsUpdate.xlsx (Experiment 1: laboratory, used in MIG2020.pdf and CAG2021.pdf)
  - Main_analysis.csv (Experiment 2: online, used in CAG2021.pdf)
- Experiment 1 R/Rmd code
  - MIG2020.Rmd with output MIG2020.html
  - CAG2021.Rmd with output CAG2021.html
- Experiment 2 R code
- Publications
  - MIG2020.pdf (this paper was awarded Best Short Paper at MIG 2020)
  - CAG2021.pdf (preprint accepted at Computers & Graphics, 2021)
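The two data files can also be loaded directly for exploration. This is a minimal sketch, assuming the files sit in the working directory and that the Experiment 1 results are on the first sheet of the workbook; `load_experiment1()` and `load_experiment2()` are hypothetical helpers, not part of the repository.

```r
# Hypothetical loaders (not part of this repository) for the data files above.
# Assumptions: files are in the working directory, and the Experiment 1
# results are on the first sheet of the workbook.
load_experiment1 <- function(path = "combined_resultsUpdate.xlsx") {
  if (!file.exists(path)) stop("File not found: ", path)
  # xlsx::read.xlsx needs Java (via rJava); see the Java fix in this README
  xlsx::read.xlsx(path, sheetIndex = 1)
}

load_experiment2 <- function(path = "Main_analysis.csv") {
  if (!file.exists(path)) stop("File not found: ", path)
  # Plain CSV with a header row, read with base R
  read.csv(path)
}
```

For example, `exp2 <- load_experiment2()` returns the online experiment data as a data frame.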

All required packages must be installed in R/RStudio before running the code. For example, to install the package xlsx, run in the R console:

    install.packages("xlsx")

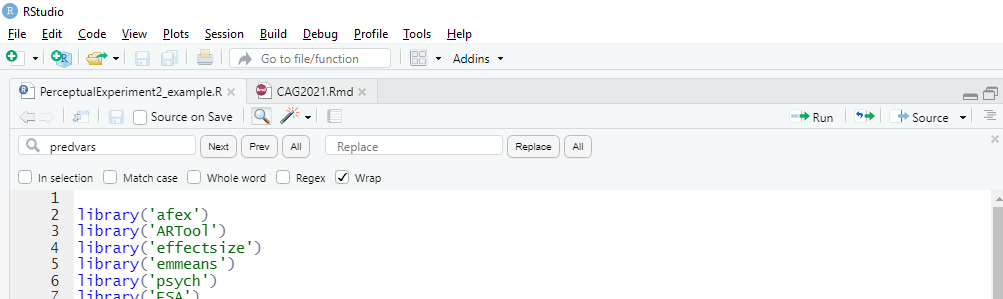
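To install several packages in one go while skipping those already present, a small helper can be used. This is a sketch under assumptions: `install_missing()` is hypothetical, and the example package list covers only xlsx (named above) and rmarkdown (used for knitting); any other packages loaded by the `.R`/`.Rmd` files would need to be added.

```r
# Hypothetical helper (not part of this repository): install only the
# packages that are not installed yet, and return their names invisibly.
install_missing <- function(pkgs) {
  # system.file(package = p) returns "" when package p is not installed;
  # unlike library(), this check does not load the package (or Java).
  installed <- vapply(pkgs, function(p) nzchar(system.file(package = p)),
                      logical(1))
  missing <- pkgs[!installed]
  if (length(missing) > 0) {
    install.packages(missing)
  }
  invisible(missing)
}
```

For example, `install_missing(c("xlsx", "rmarkdown"))` installs whichever of the two is missing and does nothing otherwise.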

To run an `*.R` file, use the R console, for example (adjust the path to where you cloned the repository):

    source('~/GitHub/facial-blendshapes/PerceptualExperiment2_example.R')

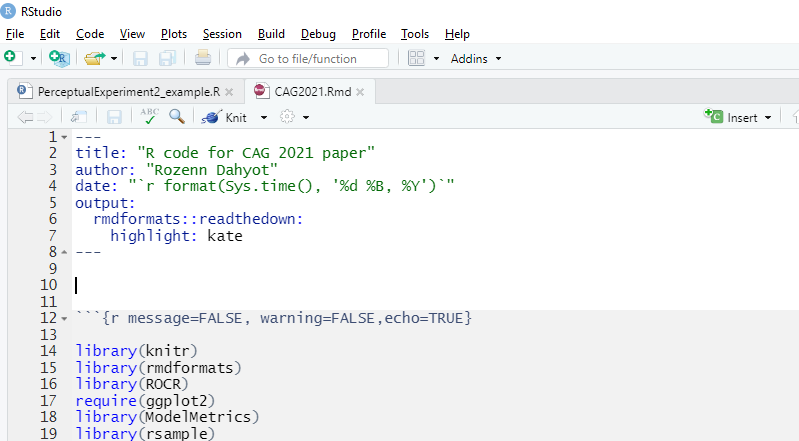

To compile an `*.Rmd` file in RStudio, press the "Knit" button (see image).
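Knitting can also be done from the R console, which can make error messages easier to read. A minimal sketch using `rmarkdown::render()` (rmarkdown is the package behind RStudio's Knit button), assuming the working directory is the repository folder and rmarkdown is installed; `knit_papers()` is a hypothetical helper.

```r
# Hypothetical helper (not part of this repository): knit the Rmd files
# from the console instead of pressing the RStudio "Knit" button.
knit_papers <- function(files = c("MIG2020.Rmd", "CAG2021.Rmd")) {
  outputs <- character(0)
  for (f in files) {
    if (!file.exists(f)) {
      warning("Skipping missing file: ", f)
      next
    }
    # render() uses the output format declared in the Rmd header
    # (presumably HTML, given the .html outputs listed above)
    outputs <- c(outputs, rmarkdown::render(f))
  }
  outputs
}
```

For example, `knit_papers()` renders both files and returns the paths of the generated documents.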

If knitting fails at the data-reading stage (package xlsx), a possible fix (tested on Windows 10) is the following:

- Install a recent version of Java: https://www.java.com/en/download/win10.jsp
- In the R console, set the variable `JAVA_HOME` to the path of your newly installed Java folder, e.g. (replace `jre1.8.0_291` with the version you have installed):

      Sys.setenv(JAVA_HOME='C:\\Program Files\\Java\\jre1.8.0_291')

Then try knitting the Rmd file again.
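Since a mistyped folder name is an easy mistake here, the step above can be wrapped in a check that the folder exists before `JAVA_HOME` is changed. This is a minimal sketch; `set_java_home()` is a hypothetical helper, not part of the repository. If xlsx was already loaded in the session, restarting R before running it may be necessary, because rJava (which xlsx depends on) sets up Java when it is loaded.

```r
# Hypothetical helper (not part of this repository): set JAVA_HOME only if
# the given folder exists, and report (invisibly) whether it was set.
set_java_home <- function(path) {
  if (!dir.exists(path)) {
    message("Java folder not found: ", path)
    return(invisible(FALSE))
  }
  Sys.setenv(JAVA_HOME = path)
  invisible(TRUE)
}

# Example call with the path used above (adjust the jre folder name):
# set_java_home('C:\\Program Files\\Java\\jre1.8.0_291')
```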

This repo was given the replicability stamp by the Graphics Replicability Stamp Initiative (GRSI).

    @article{CAG2021,
      title = {Model for predicting perception of facial action unit activation using virtual humans},
      author = {McDonnell, Rachel and Zibrek, Katja and Carrigan, Emma and Dahyot, Rozenn},
      journal = {Computers \& Graphics},
      url = {https://roznn.github.io/facial-blendshapes/CAG2021.pdf},
      note = {GitHub: https://github.com/Roznn/facial-blendshapes},
      issn = {0097-8493},
      doi = {10.1016/j.cag.2021.07.022},
      year = {2021},
      abstract = {Blendshape facial rigs are used extensively in the industry for facial animation of
        virtual humans. However, storing and manipulating large numbers of facial meshes
        (blendshapes) is costly in terms of memory and computation for gaming applications.
        Blendshape rigs are comprised of sets of semantically-meaningful expressions, which
        govern how expressive the character will be, often based on Action Units from the Facial
        Action Coding System (FACS). However, the relative perceptual importance of blendshapes has not yet been investigated. Research in Psychology and Neuroscience has
        shown that our brains process faces differently than other objects so we postulate that
        the perception of facial expressions will be feature-dependent rather than based purely
        on the amount of movement required to make the expression. Therefore, we believe that
        perception of blendshape visibility will not be reliably predicted by numerical calculations of the difference between the expression and the neutral mesh. In this paper, we
        explore the noticeability of blendshapes under different activation levels, and present
        new perceptually-based models to predict perceptual importance of blendshapes. The
        models predict visibility based on commonly-used geometry and image-based metrics.}
    }

    @inproceedings{10.1145/3424636.3426904,
      author = {Carrigan, Emma and Zibrek, Katja and Dahyot, Rozenn and McDonnell, Rachel},
      title = {Investigating Perceptually Based Models to Predict Importance of Facial Blendshapes},
      year = {2020},
      isbn = {9781450381710},
      publisher = {Association for Computing Machinery},
      address = {New York, NY, USA},
      url = {https://doi.org/10.1145/3424636.3426904},
      doi = {10.1145/3424636.3426904},
      abstract = {Blendshape facial rigs are used extensively in the industry for facial animation of
        virtual humans. However, storing and manipulating large numbers of facial meshes is costly
        in terms of memory and computation for gaming applications, yet the relative perceptual
        importance of blendshapes has not yet been investigated. Research in Psychology and Neuroscience
        has shown that our brains process faces differently than other objects, so we postulate that
        the perception of facial expressions will be feature-dependent rather than based purely on the
        amount of movement required to make the expression. In this paper, we explore the noticeability
        of blendshapes under different activation levels, and present new perceptually based models to
        predict perceptual importance of blendshapes. The models predict visibility based on commonly-used
        geometry and image-based metrics.},
      booktitle = {Motion, Interaction and Games},
      articleno = {2},
      numpages = {6},
      keywords = {perception, blendshapes, linear model, action units},
      location = {Virtual Event, SC, USA},
      series = {MIG '20}
    }

- Project Webpage: https://roznn.github.io/facial-blendshapes/

- Authors: Rozenn Dahyot and Katja Zibrek