Running this repo via Docker is preferred.

- Python >= 3.7

- Docker

- Node.js >= v11.14.0 (for the front-end webapp UI)

- npm >= 6.9.0 or yarn >= 1.15.2 (for the front-end webapp UI)

- rubixml (a pip library)

- TextCNN (PyTorch implementation from scratch)

- RNN (LSTM/GRU cell)

- spaCy (Residual TextCNN)

- BERT (Transformer)

- Base Docker image

- Miniconda

- PyTorch

- Jupyter Notebook and Lab

- Docker

- trainer (the main application to train models)

- Analytics (LDA + Word Cloud)

- Train model and predict the comments in test.txt

- Docker

- webapi (back-end)

- Flask Server

- Docker

- webapp (front-end)

- React-Redux

- Pipeline

- Buildkite or Travis CI

- Start the Flask server:

cd ./sentiment-analysis

./start-webapi

- (Optional) Send a request to http://127.0.0.1:5000/api/textcnn/predict; Postman is preferred.

- Start the web UI:

- (Optional) Install yarn (preferred) or npm.

cd ./sentiment-analysis/webapp

yarn install (or npm install)

yarn start (or npm start)

- Visit http://127.0.0.1:3000 in your browser (Chrome is preferred).

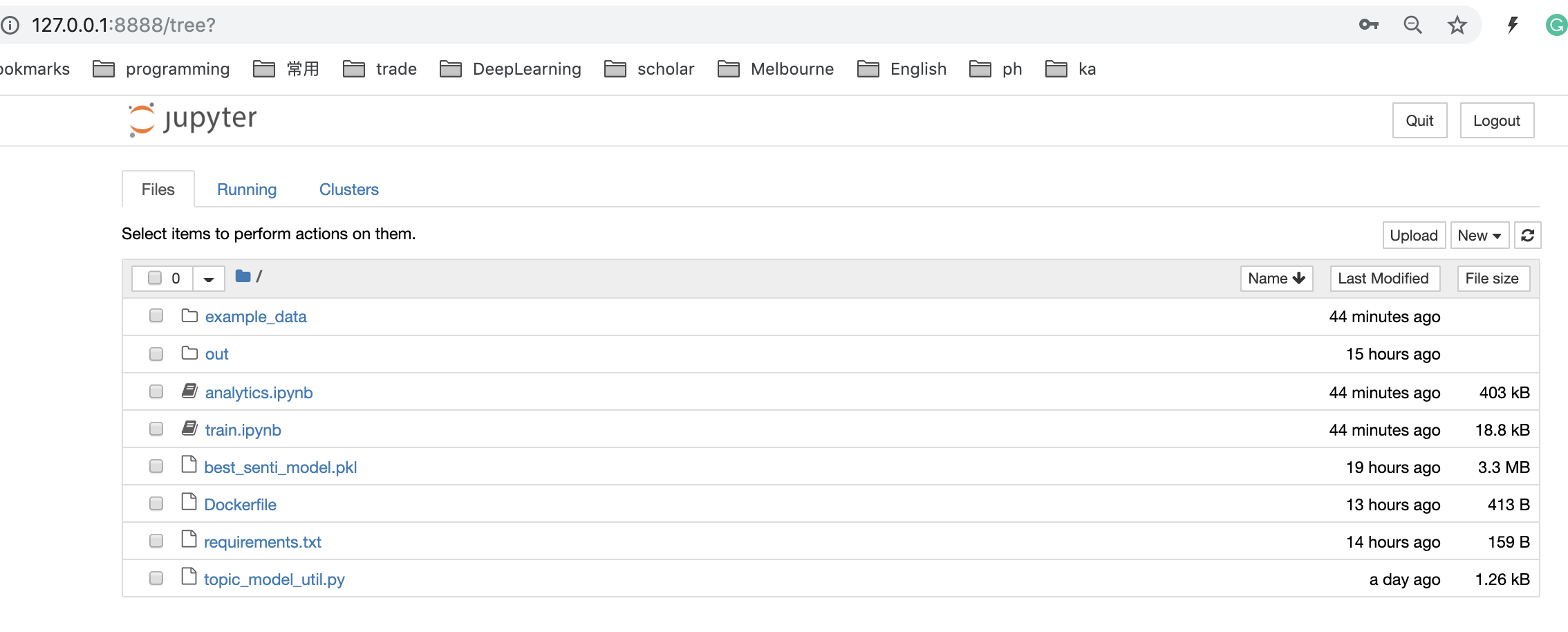

- (Optional) Start the trainer:

cd ./sentiment-analysis

./start-trainer

- Visit http://127.0.0.1:8888 in your browser. You will see a Jupyter notebook page.

- Type the token abcd.

rubixml: a pip-installable Python library that contains the machine learning models. It was created to unify the training and deployment processes, so that scripts do not have to be copied manually between them; it also makes the models easy to version.

- TextCNN
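TextCNN, as generally described, slides convolution filters of several widths over a sentence's word embeddings and max-pools each filter's responses over time, producing a fixed-size feature vector whatever the sentence length. The dependency-free sketch below shows only that feature-extraction step; it is not rubixml's PyTorch implementation, and the function names are hypothetical.

```python
from typing import List

Matrix = List[List[float]]  # rows = tokens, columns = embedding dimensions


def conv1d_valid(embeddings: Matrix, kernel: Matrix, bias: float = 0.0) -> List[float]:
    """Slide one filter (its height = number of tokens it spans) over the
    sentence without padding and apply ReLU to each window's response."""
    width = len(kernel)
    responses = []
    for start in range(len(embeddings) - width + 1):
        window = embeddings[start:start + width]
        # Element-wise product of window and filter, summed over tokens and dimensions.
        total = bias
        for token_vec, filter_row in zip(window, kernel):
            total += sum(x * w for x, w in zip(token_vec, filter_row))
        responses.append(max(total, 0.0))  # ReLU
    return responses


def textcnn_features(embeddings: Matrix, filters: List[Matrix]) -> List[float]:
    """Max-over-time pooling: keep the strongest response of each filter."""
    features = []
    for kernel in filters:
        responses = conv1d_valid(embeddings, kernel)
        # Sentences shorter than the filter yield no windows; fall back to 0.0.
        features.append(max(responses) if responses else 0.0)
    return features
```

In a full model, this pooled vector would typically pass through dropout (compare the `dropout=0.5` argument in the usage example) and a final linear layer that outputs the sentiment score.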

Run the tests: cd ./sentiment-analysis/rubixml && pytest .

Install: cd ./sentiment-analysis/rubixml && pip install .

# import the model

from rubixml.torch_textcnn import TextCNNSentimentClassifier

# initialize the model

model = TextCNNSentimentClassifier(embed_dim=50, lr=0.001, dropout=0.5)

# train for one epoch on the example training data

model.fit('./example_data/train.txt', nepoch=1)

# example input: a list of raw text strings to classify

sentences = ['I love this product', 'This is the worst service ever']

# predict using the last model weights

last_result = model.predict_prob(sentences)

# reset the model to the best model weights

model.use_best_model()

# predict using the best model weights

best_result = model.predict_prob(sentences)

Base Docker image containing the necessary libraries, such as Jupyter and PyTorch.

Link: https://hub.docker.com/r/yinchuandong/miniconda-torch/tags

Current Tag: v1.0.0

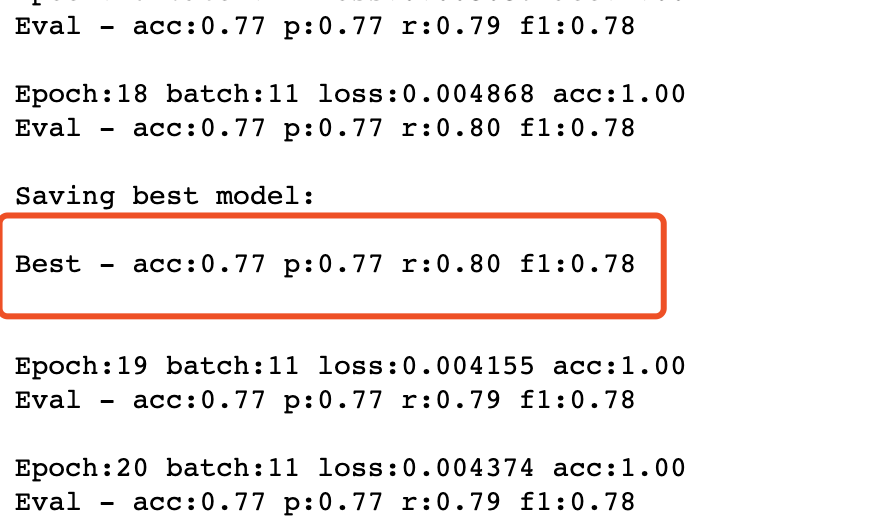

trainer: the main application to analyse the dataset and train models.

- File: ./trainer/analytics.ipynb

  - Word cloud

  - LDA

- File: ./trainer/train.ipynb
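A word cloud is drawn from word frequencies, so the core of the analytics step is counting words across the comments. A minimal sketch of that counting step, assuming simple lowercase regex tokenization and a tiny illustrative stopword list (both hypothetical; the notebook may preprocess differently):

```python
import re
from collections import Counter
from typing import Iterable, List, Tuple

# Hypothetical, deliberately tiny stopword list for illustration only.
STOPWORDS = {"the", "a", "an", "and", "is", "it", "to", "of", "this", "i"}


def word_frequencies(comments: Iterable[str], top_n: int = 10) -> List[Tuple[str, int]]:
    """Count non-stopword tokens across all comments and return the most common."""
    counts: Counter = Counter()
    for comment in comments:
        tokens = re.findall(r"[a-z']+", comment.lower())
        counts.update(t for t in tokens if t not in STOPWORDS)
    return counts.most_common(top_n)
```

A frequency dictionary like `dict(word_frequencies(comments, top_n=100))` could then be passed to a word-cloud renderer, for example the `wordcloud` package's `WordCloud.generate_from_frequencies`, if that is the library in use.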

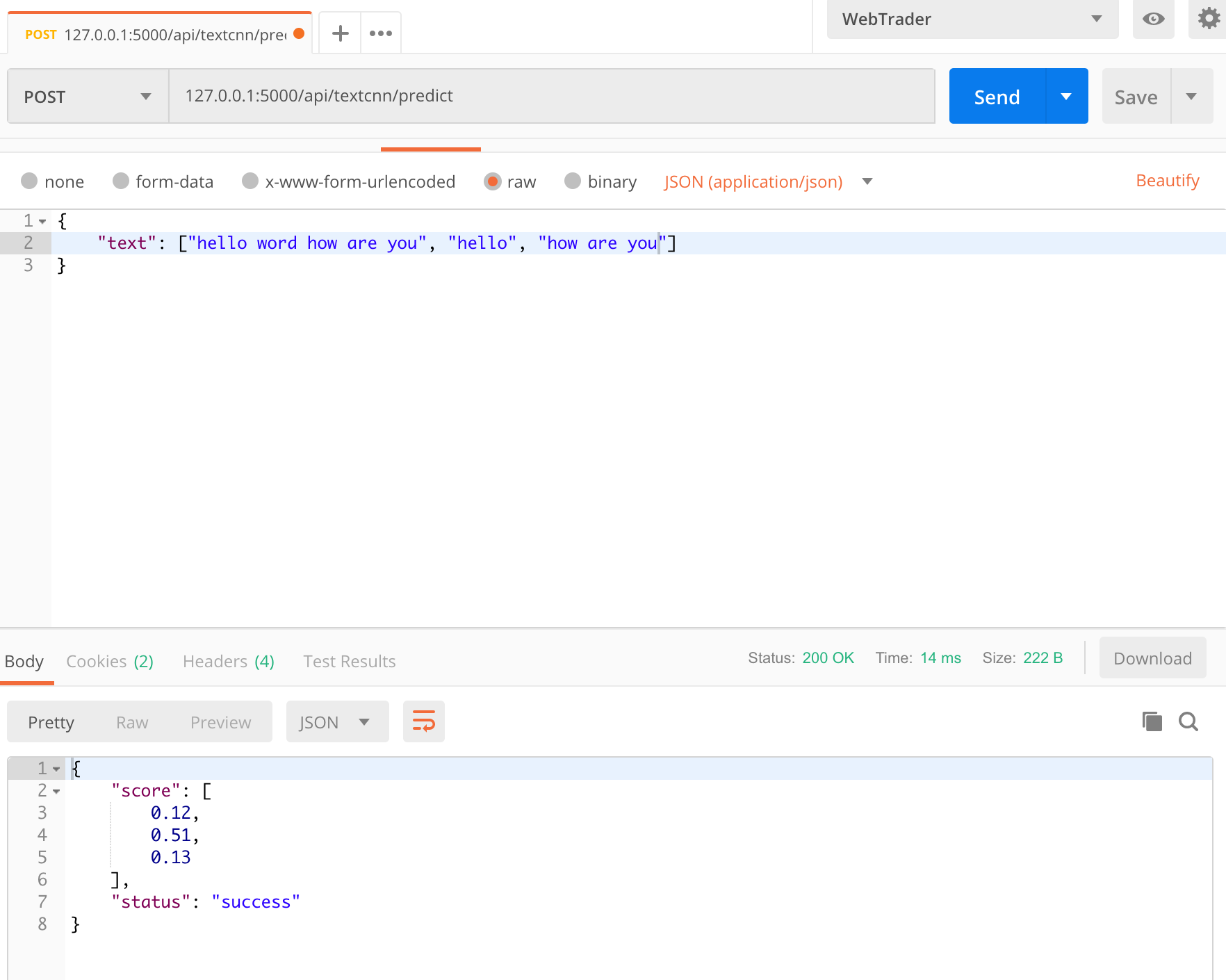
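To predict the comments in test.txt with the rubixml model, the file first has to be turned into a list of strings. A minimal sketch, assuming one comment per line and UTF-8 encoding (the real file format is not documented here, and these helper names are hypothetical):

```python
from pathlib import Path
from typing import List


def parse_comments(text: str) -> List[str]:
    """Split raw file contents into comments: one per line, blank lines dropped."""
    return [line.strip() for line in text.splitlines() if line.strip()]


def load_comments(path: str) -> List[str]:
    """Read a comments file such as test.txt (UTF-8 assumed)."""
    return parse_comments(Path(path).read_text(encoding="utf-8"))
```

With a trained `model` from the rubixml usage example, `model.predict_prob(load_comments('./test.txt'))` would then score every comment in one call.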

webapi: a Python Flask server (uWSGI + nginx) that hosts the trained machine learning models produced by the trainer. It provides public access to the models via HTTP requests (BASE_URL=http://127.0.0.1:5000/).

- Endpoint: POST /api/textcnn/predict

- Request

| name | type | description |
|------|------|-------------|
| text | list of strings | the text to be predicted |

- Response

| name | type | description |
|------|------|-------------|
| score | list of floats | the predicted scores, between 0 and 1 |
| status | string | indicates whether the request was successful |
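On the server side, the request/response contract above amounts to validating a `text` list and wrapping the model's scores. A minimal, framework-free sketch, assuming the model returns one score per input string; the status values `"success"` and `"error"` are hypothetical, since the README does not list them:

```python
from typing import Any, Callable, Dict, List

PredictFn = Callable[[List[str]], List[float]]


def handle_predict(payload: Any, predict_fn: PredictFn) -> Dict[str, Any]:
    """Validate a {"text": [...]} body and wrap the model scores in the
    {"score": [...], "status": ...} response shape described above."""
    texts = payload.get("text") if isinstance(payload, dict) else None
    if not isinstance(texts, list) or not all(isinstance(t, str) for t in texts):
        # Assumed status value; the actual server may report errors differently.
        return {"score": [], "status": "error"}
    # Convert to plain floats so the result is JSON-serializable.
    scores = [float(s) for s in predict_fn(texts)]
    return {"score": scores, "status": "success"}
```

Inside a Flask route registered for `POST /api/textcnn/predict`, this could be called as `jsonify(handle_predict(request.get_json(silent=True), model.predict_prob))`, keeping the validation logic testable without a running server.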

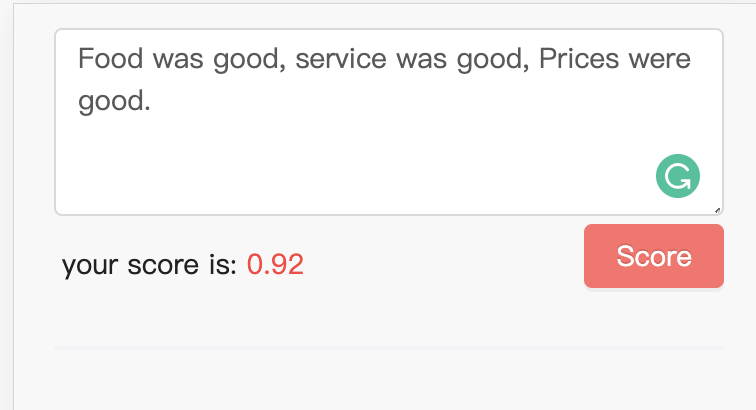
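As an alternative to Postman, the endpoint can be called from Python's standard library. A minimal client sketch, assuming the server accepts a JSON body (the content type is not stated above); the helper names are hypothetical:

```python
import json
import urllib.request
from typing import List

BASE_URL = "http://127.0.0.1:5000"


def build_predict_request(texts: List[str]) -> urllib.request.Request:
    """Build a POST request whose JSON body follows the documented request schema."""
    body = json.dumps({"text": texts}).encode("utf-8")
    return urllib.request.Request(
        f"{BASE_URL}/api/textcnn/predict",
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def parse_predict_response(raw: bytes) -> List[float]:
    """Extract the scores, checking they lie in the documented 0..1 range."""
    scores = json.loads(raw)["score"]
    if not all(0.0 <= s <= 1.0 for s in scores):
        raise ValueError("score out of the documented 0..1 range")
    return scores


# Sending the request requires the webapi server to be running:
# with urllib.request.urlopen(build_predict_request(["great movie"])) as resp:
#     print(parse_predict_response(resp.read()))
```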

webapp: a simple web page that lets users type text in the browser and shows the prediction results (BASE_URL=http://127.0.0.1:3000/).