DADA: Dialect Adaptation via Dynamic Aggregation of Linguistic Rules

This repository contains the code for the paper "DADA: Dialect Adaptation via Dynamic Aggregation of Linguistic Rules".

Abstract

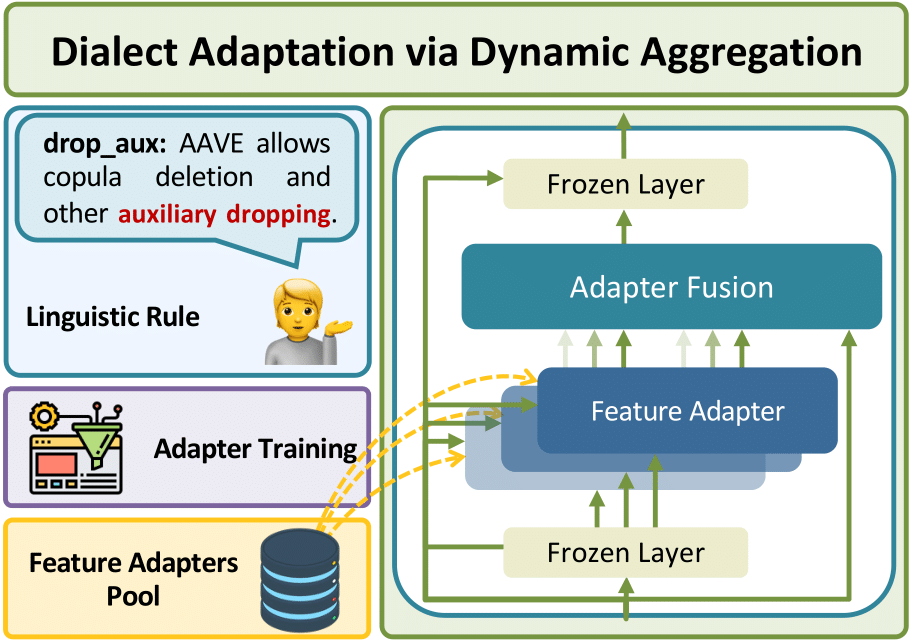

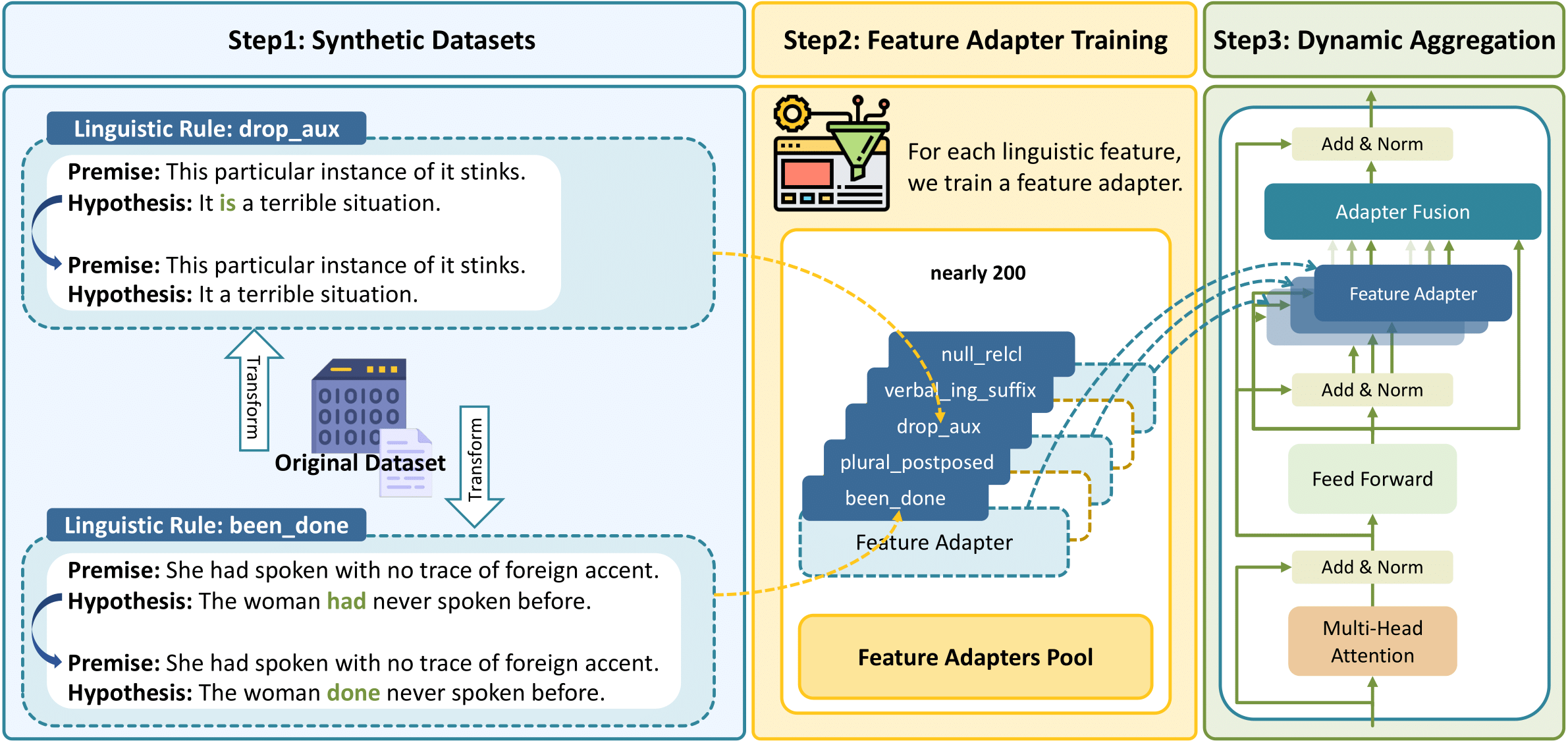

Existing large language models (LLMs) that mainly focus on Standard American English (SAE) often perform significantly worse when applied to other English dialects. While existing mitigations tackle discrepancies for individual target dialects, they assume access to high-accuracy dialect identification systems. The boundaries between dialects are inherently flexible, making it difficult to categorize language into discrete predefined categories. In this paper, we propose DADA (Dialect Adaptation via Dynamic Aggregation), a modular approach to imbue SAE-trained models with multi-dialectal robustness by composing adapters which handle specific linguistic features. The compositional architecture of DADA allows for both targeted adaptation to specific dialect variants and simultaneous adaptation to various dialects. We show that DADA is effective for both single-task and instruction-finetuned language models, offering an extensible and interpretable framework for adapting existing LLMs to different English dialects.
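To make the idea of dynamically aggregating feature adapters concrete, here is a minimal sketch in plain Python. It is not the code in this repository: the function names (`softmax`, `aggregate`), the dot-product scoring, and the toy vectors are all assumptions made for illustration. Each "adapter output" stands in for what one linguistic-feature adapter might produce for a token, and the aggregation weights those outputs by their similarity to the current hidden state.

```python
import math
from typing import List, Sequence, Tuple

Vector = List[float]


def softmax(scores: Sequence[float]) -> List[float]:
    """Numerically stable softmax over a list of scores."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def aggregate(hidden: Vector, adapter_outputs: Sequence[Vector]) -> Tuple[Vector, List[float]]:
    """Fuse per-feature adapter outputs into one vector.

    Assumption: each adapter is scored by the dot product between its
    output and the hidden state. A trained model would learn this
    scoring function rather than use a fixed dot product.
    """
    scores = [sum(h * o for h, o in zip(hidden, out)) for out in adapter_outputs]
    weights = softmax(scores)
    fused = [
        sum(w * out[i] for w, out in zip(weights, adapter_outputs))
        for i in range(len(hidden))
    ]
    return fused, weights


if __name__ == "__main__":
    # Hypothetical 2-dimensional hidden state and two feature adapters.
    hidden_state = [1.0, 0.0]
    outputs = [[1.0, 0.0], [0.0, 1.0]]
    fused_vector, adapter_weights = aggregate(hidden_state, outputs)
    print("weights:", adapter_weights)
    print("fused:", fused_vector)
```

Because the weights are recomputed for every input, an adapter contributes only when its feature appears relevant, and inspecting the weights gives a rough view of which features were active. That is the sense in which this style of composition can be both extensible and interpretable.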

Installation

Please run the commands below to create the environment and install the dependencies.

conda create -n DADA python=3.8

conda activate DADA

conda install --file requirements.txt

Usage

Citation and Contact

If you find this repository helpful, please cite our paper.

@inproceedings{liu2023dada,
    title={DADA: Dialect Adaptation via Dynamic Aggregation of Linguistic Rules},
    author={Yanchen Liu and William Held and Diyi Yang},
    booktitle={Proceedings of the 2023 Conference on Empirical Methods in Natural Language Processing},
    year={2023},
}

Feel free to contact Yanchen at yanchenliu@g.harvard.edu if you have any questions about the paper.