BlackboxBench is a comprehensive benchmark of mainstream black-box adversarial attack methods, implemented in PyTorch. It can be used to evaluate the adversarial robustness of any ML model, or as a baseline for developing more advanced attack and defense methods.

✨ BlackboxBench will be continuously updated with more attacks. ✨

✨ You are welcome to contribute your black-box attack methods to BlackboxBench!! See how to contribute ✨


For Requirements and Quick start of transfer-based black-box adversarial attacks in BlackboxBench, please refer to the README here. We also provide some model checkpoints for users' convenience.

Datasets: CIFAR-10 and NIPS2017. Please download both datasets into transfer/data/dataset first.

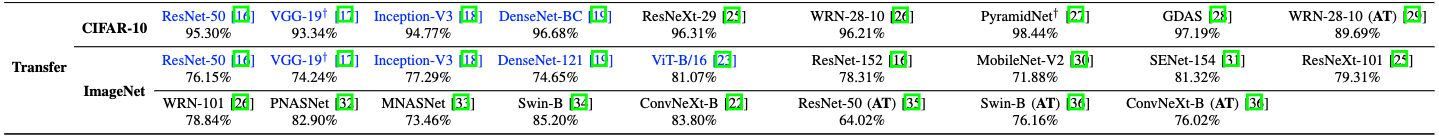

BlackboxBench evaluates contained transfer-based attack methods on the below models 👇 (models in blue are treated as surrogate models). But users can attack any model trained on CIFAR-10 and ImageNet by adding it into BlackboxBench, following the step 1️⃣ in Quick start.

For Requirements and Quick start of query-based black-box adversarial attacks in BlackboxBench, please refer to the README here.

| Score-Based | File name | Paper |
|---|---|---|
| NES Attack | nes_attack.py | Black-box Adversarial Attacks with Limited Queries and Information ICML 2018 |
| ZO-signSGD | zo_sign_agd_attack.py | signSGD via Zeroth-Order Oracle ICLR 2019 |
| Bandit Attack | bandit_attack.py | Prior Convictions: Black-Box Adversarial Attacks with Bandits and Priors ICML 2019 |
| SimBA | simple_attack.py | Simple Black-box Adversarial Attacks ICML 2019 |
| ECO Attack | parsimonious_attack.py | Parsimonious Black-Box Adversarial Attacks via Efficient Combinatorial Optimization ICML 2019 |
| Sign Hunter | sign_attack.py | Sign Bits Are All You Need for Black-Box Attacks ICLR 2020 |
| Square Attack | square_attack.py | Square Attack: a query-efficient black-box adversarial attack via random search ECCV 2020 |

| Decision-Based | File name | Paper |
|---|---|---|
| Boundary Attack | boundary_attack.py | Decision-Based Adversarial Attacks: Reliable Attacks Against Black-Box Machine Learning Models ICLR 2018 |
| OPT | opt_attack.py | Query-Efficient Hard-label Black-box Attack: An Optimization-based Approach ICLR 2019 |
| Sign-OPT | sign_opt_attack.py | Sign-OPT: A Query-Efficient Hard-label Adversarial Attack ICLR 2020 |
| Evolutionary Attack | evo_attack.py | Efficient Decision-based Black-box Adversarial Attacks on Face Recognition CVPR 2019 |
| GeoDA | geoda_attack.py | GeoDA: a geometric framework for black-box adversarial attacks CVPR 2020 |
| HSJA | hsja_attack.py | HopSkipJumpAttack: A Query-Efficient Decision-Based Attack IEEE S&P 2020 |
| Sign Flip Attack | sign_flip_attack.py | Boosting Decision-based Black-box Adversarial Attacks with Random Sign Flip ECCV 2020 |
| RayS | rays_attack.py | RayS: A Ray Searching Method for Hard-label Adversarial Attack KDD 2020 |

CIFAR-10, ImageNet. Please first download these two datasets into query/data/. Here, we test the contained attack methods on the whole CIFAR-10 testing set and ImageNet competition dataset comprised of 1000 samples.

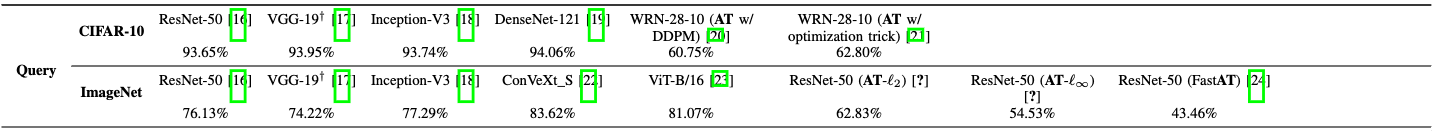

BlackboxBench evaluates contained query-based attack methods on the below models 👇. But users can attack any model trained on CIFAR-10 and ImageNet by adding it into BlackboxBench, following the step 1️⃣ in Quick start.

Analysis tools will be released soon!

You are welcome to contribute your black-box attacks or defenses to BlackboxBench! 🤩

The following sections give some tips on how to prepare your attack.

We divide the various efforts to improve I-FGSM into four distinct perspectives: data, optimization, feature, and model. Attacks from each perspective can be implemented by modifying the blocks below:

input_transformation.py: the block that registers input transformation functions. Attacks from the data perspective are most likely to be implemented here. For example, the key idea of DI-FGSM is to randomly resize the image, so its core function is defined here:

```python
@Registry.register("input_transformation.DI")
def DI(in_size, out_size):
    def _DI(iter, img, true_label, target_label, ensemble_models, grad_accumulate, grad_last, n_copies_iter):
        ...
        return padded
    return _DI
```
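The following framework-free sketch shows the random resize-and-pad idea behind DI-FGSM. It is not BlackboxBench's `_DI`. Images here are nested Python lists standing in for tensors. The helper names `nearest_resize` and `diverse_input` and the application probability `p` are assumptions made for illustration.

```python
import random
from typing import List

# Hypothetical stand-in for a single-channel square image tensor: an H x H nested list.
Image = List[List[float]]


def nearest_resize(img: Image, size: int) -> Image:
    """Nearest-neighbour resize of a square image to size x size."""
    h = len(img)
    return [[img[r * h // size][c * h // size] for c in range(size)] for r in range(size)]


def diverse_input(img: Image, in_size: int, out_size: int,
                  rng: random.Random, p: float = 0.5) -> Image:
    """DI-style transform (sketch): with probability p, resize to rnd x rnd with
    in_size <= rnd < out_size, then zero-pad at a random offset to out_size x out_size.
    Otherwise return the input unchanged."""
    if rng.random() >= p:
        return img
    rnd = rng.randint(in_size, out_size - 1)   # random intermediate size
    resized = nearest_resize(img, rnd)
    remaining = out_size - rnd
    top = rng.randint(0, remaining)            # random vertical offset
    left = rng.randint(0, remaining)           # random horizontal offset
    padded = [[0.0] * out_size for _ in range(out_size)]
    for r in range(rnd):
        for c in range(rnd):
            padded[top + r][left + c] = resized[r][c]
    return padded
```

Because the resize and offsets are re-drawn on every call, each attack iteration takes its gradient through a slightly different view of the same image.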

loss_function.py: the block that registers loss functions. Attacks from the feature perspective are most likely to be implemented here. For example, the key idea of FIA is a new loss function, so its core function is defined here:

```python
@Registry.register("loss_function.fia_loss")
def FIA(fia_layer, N=30, drop_rate=0.3):
    ...
    def _FIA(args, img, true_label, target_label, ensemble_models):
        ...
        return -loss if args.targeted else loss
    return _FIA
```
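As described in the FIA paper, the surrogate's intermediate features are weighted by an aggregate gradient. Gradients of the loss with respect to the feature map at `fia_layer` are accumulated over `N` copies of the input, each with random pixels dropped (`drop_rate`). The result is L2-normalised and used to weight the features of the adversarial image.

The sketch below shows only that arithmetic on flat lists. It assumes the per-copy gradients are already computed, since producing them needs a real model and autograd. The function names are hypothetical.

```python
import math
from typing import List, Sequence


def aggregate_gradient(grad_samples: Sequence[Sequence[float]]) -> List[float]:
    """Sum the per-copy feature gradients element-wise and L2-normalise the result
    (the 1/N of an average cancels under normalisation)."""
    dim = len(grad_samples[0])
    summed = [sum(g[i] for g in grad_samples) for i in range(dim)]
    norm = math.sqrt(sum(v * v for v in summed))
    return [v / norm for v in summed] if norm > 0 else summed


def feature_importance_loss(features: Sequence[float], agg_grad: Sequence[float],
                            targeted: bool = False) -> float:
    """Importance-weighted sum of feature activations; the sign flip mirrors the
    `-loss if args.targeted else loss` line in _FIA above."""
    loss = sum(w * f for w, f in zip(agg_grad, features))
    return -loss if targeted else loss
```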

gradient_calculation.py: the block that registers ways to calculate gradients. Attacks from the optimization perspective are most likely to be implemented here. For example, the key idea of SGM is to use more of the gradient from the skip connections, so its core function is defined here:

```python
@Registry.register("gradient_calculation.skip_gradient")
def skip_gradient(gamma):
    ...
    def _skip_gradient(args, iter, adv_img, true_label, target_label, grad_accumulate, grad_last, input_trans_func, ensemble_models, loss_func):
        ...
        return gradient
    return _skip_gradient
```
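A residual block z_{i+1} = z_i + f_i(z_i) passes gradient through both the skip connection and the residual branch. SGM multiplies the branch's gradient by a decay factor gamma in (0, 1], so relatively more gradient flows through the skip connections.

The scalar sketch below shows how this changes the chain rule. It uses a hypothetical residual branch f_i(z) = w_i * tanh(z). BlackboxBench's `_skip_gradient` works on real networks instead, and the function names here are illustrative.

```python
import math
from typing import List


def residual_forward(z0: float, weights: List[float]) -> List[float]:
    """Forward pass through scalar residual blocks z_{i+1} = z_i + w_i * tanh(z_i).
    Returns [z_0, z_1, ..., z_L]."""
    zs = [z0]
    for w in weights:
        z = zs[-1]
        zs.append(z + w * math.tanh(z))
    return zs


def skip_gradient(z0: float, weights: List[float], gamma: float) -> float:
    """dz_L/dz_0 where each residual branch's local gradient is scaled by gamma.
    gamma = 1.0 gives the ordinary chain-rule gradient."""
    zs = residual_forward(z0, weights)
    grad = 1.0
    # Walk the blocks from last to first; zs[:-1] are the inputs to each block.
    for w, z in zip(reversed(weights), reversed(zs[:-1])):
        branch = w * (1.0 - math.tanh(z) ** 2)  # d f_i / d z_i
        grad *= 1.0 + gamma * branch            # skip path (1) + decayed branch
    return grad
```

Setting `gamma=1.0` recovers the ordinary gradient, which makes a convenient sanity check.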

update_dir_calculation.py: the block that registers ways to calculate the update direction of adversarial examples. Attacks from the optimization perspective are most likely to be implemented here. For example, the key idea of MI is to use the accumulated gradient as the update direction, so its core function is defined here:

```python
@Registry.register("update_dir_calculation.momentum")
def momentum():
    def _momentum(args, gradient, grad_accumulate, grad_var_last):
        ...
        return update_dir, grad_accumulate
    return _momentum
```
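In MI-FGSM the gradient is first normalised by its L1 norm and then added to a decayed running sum, g_{t+1} = mu * g_t + grad / ||grad||_1. The sign of that sum gives the L_inf update direction.

The sketch below mirrors the `(update_dir, grad_accumulate)` return pair of `_momentum`, using flat lists. The name `momentum_step` and the decay argument `mu` are assumptions, not BlackboxBench's API.

```python
from typing import List, Tuple


def momentum_step(gradient: List[float], grad_accumulate: List[float],
                  mu: float = 1.0) -> Tuple[List[float], List[float]]:
    """One MI-FGSM-style update: L1-normalise the gradient, fold it into the
    decayed running sum, and return (sign of the sum, updated sum)."""
    l1 = sum(abs(g) for g in gradient)
    normalized = [g / l1 for g in gradient] if l1 > 0 else list(gradient)
    grad_accumulate = [mu * a + n for a, n in zip(grad_accumulate, normalized)]
    update_dir = [float((a > 0) - (a < 0)) for a in grad_accumulate]
    return update_dir, grad_accumulate
```

The direction depends on the accumulated sum, not the latest gradient alone. A single new gradient therefore flips only the coordinates where it outweighs the history. A coordinate whose current gradient is zero keeps the sign it had accumulated.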

model_refinement.py: the block that registers ways to refine the surrogate model. Attacks from the model perspective are most likely to be implemented here. For example, the key idea of LGV is to fine-tune the surrogate model with a high learning rate, so its core function is defined here:

```python
@Registry.register("model_refinement.stochastic_weight_collecting")
def stochastic_weight_collecting(collect, mini_batch=512, epochs=10, lr=0.05, wd=1e-4, momentum=0.9):
    def _stochastic_weight_collecting(args, rfmodel_dir):
        ...
    return _stochastic_weight_collecting
```
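As described in its paper, LGV starts from the pretrained surrogate and runs SGD with a constant, relatively high learning rate for a few epochs. It saves weight snapshots along the way, and the attack then uses those collected models as surrogates.

The toy sketch below collects snapshots of a weight vector under noisy SGD with momentum and weight decay. The defaults for `lr`, `wd`, `momentum` and `epochs` match the signature above. Everything else is hypothetical: `steps_per_epoch`, `n_collect_per_epoch`, the noise model and the function names. It does not assume what the real `collect` argument means.

```python
import random
from typing import Callable, List

Vector = List[float]


def collect_weights(w0: Vector,
                    stochastic_grad: Callable[[Vector, random.Random], Vector],
                    epochs: int = 10, steps_per_epoch: int = 8,
                    n_collect_per_epoch: int = 4, lr: float = 0.05,
                    wd: float = 1e-4, momentum: float = 0.9,
                    seed: int = 0) -> List[Vector]:
    """Run SGD (momentum + L2 weight decay) at a constant learning rate and save
    evenly spaced weight snapshots (toy LGV-style collection)."""
    if steps_per_epoch % n_collect_per_epoch != 0:
        raise ValueError("steps_per_epoch must be divisible by n_collect_per_epoch")
    interval = steps_per_epoch // n_collect_per_epoch
    rng = random.Random(seed)
    w, v = list(w0), [0.0] * len(w0)
    snapshots: List[Vector] = []
    for _ in range(epochs):
        for step in range(steps_per_epoch):
            g = stochastic_grad(w, rng)
            # Heavy-ball momentum on gradient + weight decay; lr is never decayed.
            v = [momentum * vi + gi + wd * wi for vi, gi, wi in zip(v, g, w)]
            w = [wi - lr * vi for wi, vi in zip(w, v)]
            if (step + 1) % interval == 0:
                snapshots.append(list(w))
    return snapshots


def noisy_quadratic_grad(w: Vector, rng: random.Random) -> Vector:
    """Hypothetical mini-batch gradient of 0.5 * ||w - target||^2 with Gaussian noise."""
    target = [1.0, -1.0]
    return [wi - ti + rng.gauss(0.0, 0.5) for wi, ti in zip(w, target)]
```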

Design your core function and register it in the appropriate .py file so that it fits into our unified attack pipeline.
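Below is a minimal, hypothetical registry written in the same decorator style as `@Registry.register(...)` above, to show how name-based registration fits together. It is not BlackboxBench's `Registry` class, whose implementation is not shown here. The key `update_dir_calculation.my_sign` and the `get` method are assumptions for illustration. As in the blocks above, each registered factory takes the method's hyperparameters and returns the inner function that the attack loop calls.

```python
from typing import Callable, Dict, List


class Registry:
    """Minimal name -> factory registry (hypothetical sketch)."""
    _entries: Dict[str, Callable] = {}

    @classmethod
    def register(cls, name: str) -> Callable[[Callable], Callable]:
        def decorator(factory: Callable) -> Callable:
            if name in cls._entries:
                raise KeyError(f"{name!r} is already registered")
            cls._entries[name] = factory
            return factory  # return the factory unchanged so it stays importable
        return decorator

    @classmethod
    def get(cls, name: str) -> Callable:
        return cls._entries[name]


@Registry.register("update_dir_calculation.my_sign")
def my_sign(scale: float = 1.0):
    # Outer factory: receives hyperparameters once, when the pipeline is built.
    def _my_sign(gradient: List[float]) -> List[float]:
        # Inner function: called on every attack iteration.
        return [scale * ((g > 0) - (g < 0)) for g in gradient]
    return _my_sign


update_fn = Registry.get("update_dir_calculation.my_sign")(scale=2.0)
```

Splitting each method into an outer factory and an inner function separates one-time configuration from per-iteration work. That split is what lets a config file pick methods by name.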

You should also fill in a JSON file structured as follows and place it at transfer/config/<DATASET>/<TARGET>/<L-NORM>/<YOUR-METHOD>.json. Here is an example from transfer/config/NIPS2017/untargeted/l_inf/I-FGSM.json:

```json
{
  "source_model_path": ["NIPS2017/pretrained/resnet50"],
  "target_model_path": ["NIPS2017/pretrained/resnet50",
                        "NIPS2017/pretrained/vgg19_bn",
                        "NIPS2017/pretrained/resnet152"],
  "n_iter": 100,
  "shuffle": true,
  "batch_size": 200,
  "norm_type": "inf",
  "epsilon": 0.03,
  "norm_step": 0.00392157,
  "seed": 0,
  "n_ensemble": 1,
  "targeted": false,
  "save_dir": "./save",
  "input_transformation": "",
  "loss_function": "cross_entropy",
  "grad_calculation": "general",
  "backpropagation": "nonlinear",
  "update_dir_calculation": "sgd",
  "source_model_refinement": ""
}
```

Make sure your core function is specified in the appropriate one of the last six fields, from input_transformation to source_model_refinement.
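For reference, the L_inf I-FGSM loop that these blocks modify can be sketched as follows. Each iteration steps along the sign of the gradient, then projects back into the epsilon-ball around the clean input and into the valid pixel range.

This framework-free sketch is not BlackboxBench's pipeline. It assumes pixels lie in [0, 1] and reads the example config as follows: `epsilon` is the ball radius, `norm_step` is the per-iteration step size (0.00392157 is about 1/255), and `n_iter` is the iteration count. A hypothetical `grad_fn` stands in for backpropagation through a surrogate model.

```python
import json
from typing import Callable, List

Vector = List[float]


def i_fgsm_linf(x: Vector, grad_fn: Callable[[Vector], Vector],
                epsilon: float, norm_step: float, n_iter: int) -> Vector:
    """Untargeted L_inf I-FGSM (sketch): ascend the loss with signed steps."""
    x_adv = list(x)
    for _ in range(n_iter):
        g = grad_fn(x_adv)
        # Signed gradient step of size norm_step.
        x_adv = [xa + norm_step * ((gi > 0) - (gi < 0)) for xa, gi in zip(x_adv, g)]
        # Project into the L_inf ball of radius epsilon around the clean input.
        x_adv = [min(max(xa, xo - epsilon), xo + epsilon) for xa, xo in zip(x_adv, x)]
        # Keep pixels in the assumed valid range [0, 1].
        x_adv = [min(max(xa, 0.0), 1.0) for xa in x_adv]
    return x_adv


# Subset of the example config above.
config = json.loads('{"n_iter": 100, "norm_type": "inf", "epsilon": 0.03, "norm_step": 0.00392157}')
x_clean = [0.5, 0.5, 0.99]
x_adv = i_fgsm_linf(x_clean, lambda x: [1.0, -1.0, 1.0],
                    config["epsilon"], config["norm_step"], config["n_iter"])
```

With a constant gradient, 100 steps of about 1/255 would move each pixel far beyond 0.03. The projection therefore ends with every pixel on the boundary of the epsilon-ball, or at the edge of [0, 1] where that is tighter.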

If you want to use BlackboxBench in your research, cite it as follows:

```bibtex
@misc{zheng2023blackboxbench,
      title={BlackboxBench: A Comprehensive Benchmark of Black-box Adversarial Attacks},
      author={Meixi Zheng and Xuanchen Yan and Zihao Zhu and Hongrui Chen and Baoyuan Wu},
      year={2023},
      eprint={2312.16979},
      archivePrefix={arXiv},
      primaryClass={cs.CR}
}
```

The source code of this repository is licensed by The Chinese University of Hong Kong, Shenzhen under the Creative Commons Attribution-NonCommercial 4.0 International Public License (identified as CC BY-NC-4.0 in SPDX). More details about the license can be found in LICENSE.

This project is built by the Secure Computing Lab of Big Data (SCLBD) at The Chinese University of Hong Kong, Shenzhen, directed by Professor Baoyuan Wu. SCLBD focuses on research into trustworthy AI, including backdoor learning, adversarial examples, federated learning, fairness, etc.