This repository is the official implementation of Effective Backdoor Defense by Exploiting Sensitivity of Poisoned Samples.

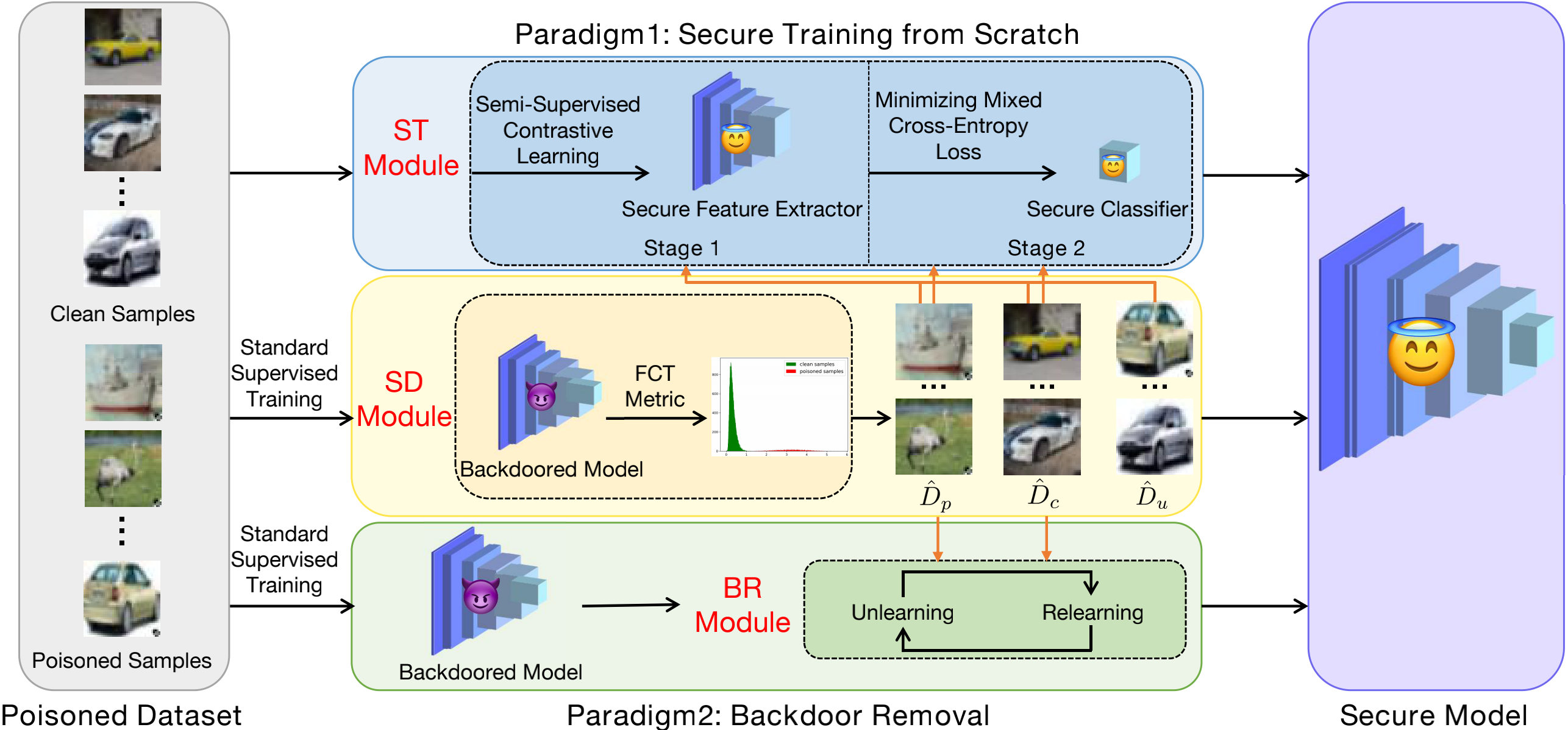

We propose two effective backdoor defense methods: D-ST, which trains a secure model from scratch, and D-BR, which removes the backdoor from a backdoored model. D-ST consists of the SD module and the ST module; D-BR consists of the SD module and the BR module. The SD module distinguishes samples according to the FCT metric and separates the training samples into clean samples, poisoned samples and uncertain samples. The ST module first trains a secure feature extractor via semi-supervised contrastive learning and then trains a secure classifier by minimizing a mixed cross-entropy loss. The BR module removes the backdoor by unlearning the distinguished poisoned samples and relearning the distinguished clean samples.

This code is implemented in PyTorch, and we have tested the code under the following environment settings:

- python = 3.8.8

- torch = 1.7.1

- torchvision = 0.8.2

- tensorflow = 2.4.1

The default configurations are as follows:

- dataset = cifar10

- model = resnet18

- poison_rate = 0.10

- target_type = all2one

- trigger_type = gridTrigger

You can change these configurations to apply our method in different settings. The attacks used in our paper correspond to the following configurations:

| Attack | target_type | trigger_type |
|---|---|---|
| BadNets-all2one | all2one | gridTrigger |
| BadNets-all2all | all2all | squareTrigger |
| Trojan | all2one | trojanTrigger |
| Blend-Strip | all2one | signalTrigger |
| Blend-Kitty | all2one | kittyTrigger |
| SIG | cleanLabel | sigTrigger |
| CL | cleanLabel | fourCornerTrigger |
| SSBA | all2one | SSBA |
| BadNets-all2one (on ImageNet) | all2one | squareTrigger_imagenet |
| Blend-Strip (on ImageNet) | all2one | signalTrigger_imagenet |
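The attack table can also be kept as a small lookup so that the flags for each attack are not retyped by hand. The sketch below is illustrative only: `attack_args` is a hypothetical helper, not part of this repository, and it assumes that `target_type` is exposed as a `--target_type` flag in the same way that `--trigger_type` is.

```python
# Attack name -> (target_type, trigger_type), copied from the attack table.
ATTACK_CONFIGS = {
    "BadNets-all2one": ("all2one", "gridTrigger"),
    "BadNets-all2all": ("all2all", "squareTrigger"),
    "Trojan": ("all2one", "trojanTrigger"),
    "Blend-Strip": ("all2one", "signalTrigger"),
    "Blend-Kitty": ("all2one", "kittyTrigger"),
    "SIG": ("cleanLabel", "sigTrigger"),
    "CL": ("cleanLabel", "fourCornerTrigger"),
    "SSBA": ("all2one", "SSBA"),
    "BadNets-all2one (on ImageNet)": ("all2one", "squareTrigger_imagenet"),
    "Blend-Strip (on ImageNet)": ("all2one", "signalTrigger_imagenet"),
}


def attack_args(attack, dataset="cifar10", model="resnet18"):
    """Hypothetical helper: build the command-line flags for one attack.

    Assumption: the scripts accept --target_type; only --trigger_type,
    --dataset and --model appear in the commands of this README.
    """
    target_type, trigger_type = ATTACK_CONFIGS[attack]
    return [
        "--dataset", dataset,
        "--model", model,
        "--target_type", target_type,
        "--trigger_type", trigger_type,
    ]


if __name__ == "__main__":
    print(" ".join(attack_args("Trojan")))
```

The resulting list can be appended to the script name when composing a command for a different attack.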

Results on CIFAR-10:

| Attack | Backdoored ACC | Backdoored ASR | D-BR ACC | D-BR ASR | D-ST ACC | D-ST ASR |
|---|---|---|---|---|---|---|
| BadNets-all2one | 91.64 | 100.00 | 92.83 | 0.40 | 92.77 | 0.03 |
| BadNets-all2all | 92.79 | 88.01 | 92.61 | 0.56 | 89.22 | 2.05 |
| Trojan | 91.91 | 100.00 | 92.21 | 0.76 | 93.72 | 0.00 |
| Blend-Strip | 92.09 | 99.97 | 92.40 | 0.06 | 93.59 | 0.00 |
| Blend-Kitty | 92.69 | 99.99 | 92.11 | 0.14 | 91.82 | 0.00 |
| SIG | 92.88 | 99.69 | 92.73 | 0.24 | 90.07 | 0.00 |
| CL | 93.20 | 93.34 | 92.08 | 0.00 | 90.46 | 6.40 |

Results on CIFAR-100:

| Attack | Backdoored ACC | Backdoored ASR | D-BR ACC | D-BR ASR | D-ST ACC | D-ST ASR |
|---|---|---|---|---|---|---|
| BadNets-all2one | 71.23 | 99.13 | 72.58 | 0.25 | 68.43 | 0.12 |
| Trojan | 75.75 | 100.00 | 74.52 | 0.00 | 68.04 | 0.08 |
| Blend-Strip | 75.54 | 99.99 | 74.35 | 0.00 | 67.63 | 0.00 |
| Blend-Kitty | 75.18 | 99.97 | 72.00 | 0.01 | 67.06 | 0.00 |

Results on ImageNet Subset:

| Attack | Backdoored ACC | Backdoored ASR | D-BR ACC | D-BR ASR |
|---|---|---|---|---|
| BadNets-all2one | 84.72 | 95.80 | 83.66 | 0.00 |
| Blend-Strip | 84.36 | 97.64 | 80.40 | 0.00 |
| Blend-Kitty | 85.46 | 99.68 | 84.29 | 0.00 |
| SSBA | 85.24 | 99.64 | 83.77 | 0.09 |

Step1: Train a backdoored model without any data augmentations.

python train_attack_noTrans.py --dataset cifar10 --model resnet18 --trigger_type gridTrigger --epochs 2

Models of each epoch are saved at "./saved/backdoored_model/poison_rate_0.1/noTrans/cifar10/resnet18/gridTrigger".

Step2: Fine-tune the backdoored model with intra-class loss

python finetune_attack_noTrans.py --dataset cifar10 --model resnet18 --trigger_type gridTrigger --epochs 10 --checkpoint_load ./saved/backdoored_model/poison_rate_0.1/noTrans/cifar10/resnet18/gridTrigger/1.tar

This step aims to enlarge the distance between genuinely clean samples of the target class and genuinely poisoned samples.

--checkpoint_load specifies the path of the backdoored model.

Models of each epoch are saved at "./saved/backdoored_model/poison_rate_0.1/noTrans_ftsimi/cifar10/resnet18/gridTrigger".
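The checkpoint paths used in these commands follow a regular pattern, which the sketch below reproduces. `backdoored_ckpt` is a hypothetical helper, not a function from this repository. It assumes that checkpoint files are named by zero-based epoch index, which is inferred from the commands here (`--epochs 2` is followed by loading `1.tar`, and `--epochs 10` by loading `9.tar`).

```python
def backdoored_ckpt(stage, dataset, model, trigger_type, epochs,
                    poison_rate=0.1, root="./saved/backdoored_model"):
    """Hypothetical helper: path of the checkpoint written after the last epoch.

    Assumption: checkpoints are named "<epoch>.tar" with zero-based epochs,
    so a run with `epochs` epochs ends with "<epochs - 1>.tar".
    """
    last_epoch = epochs - 1
    return (
        f"{root}/poison_rate_{poison_rate}/{stage}/"
        f"{dataset}/{model}/{trigger_type}/{last_epoch}.tar"
    )


if __name__ == "__main__":
    # Step 1 output (noTrans) and Step 2 output (noTrans_ftsimi).
    print(backdoored_ckpt("noTrans", "cifar10", "resnet18", "gridTrigger", epochs=2))
    print(backdoored_ckpt("noTrans_ftsimi", "cifar10", "resnet18", "gridTrigger", epochs=10))
```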

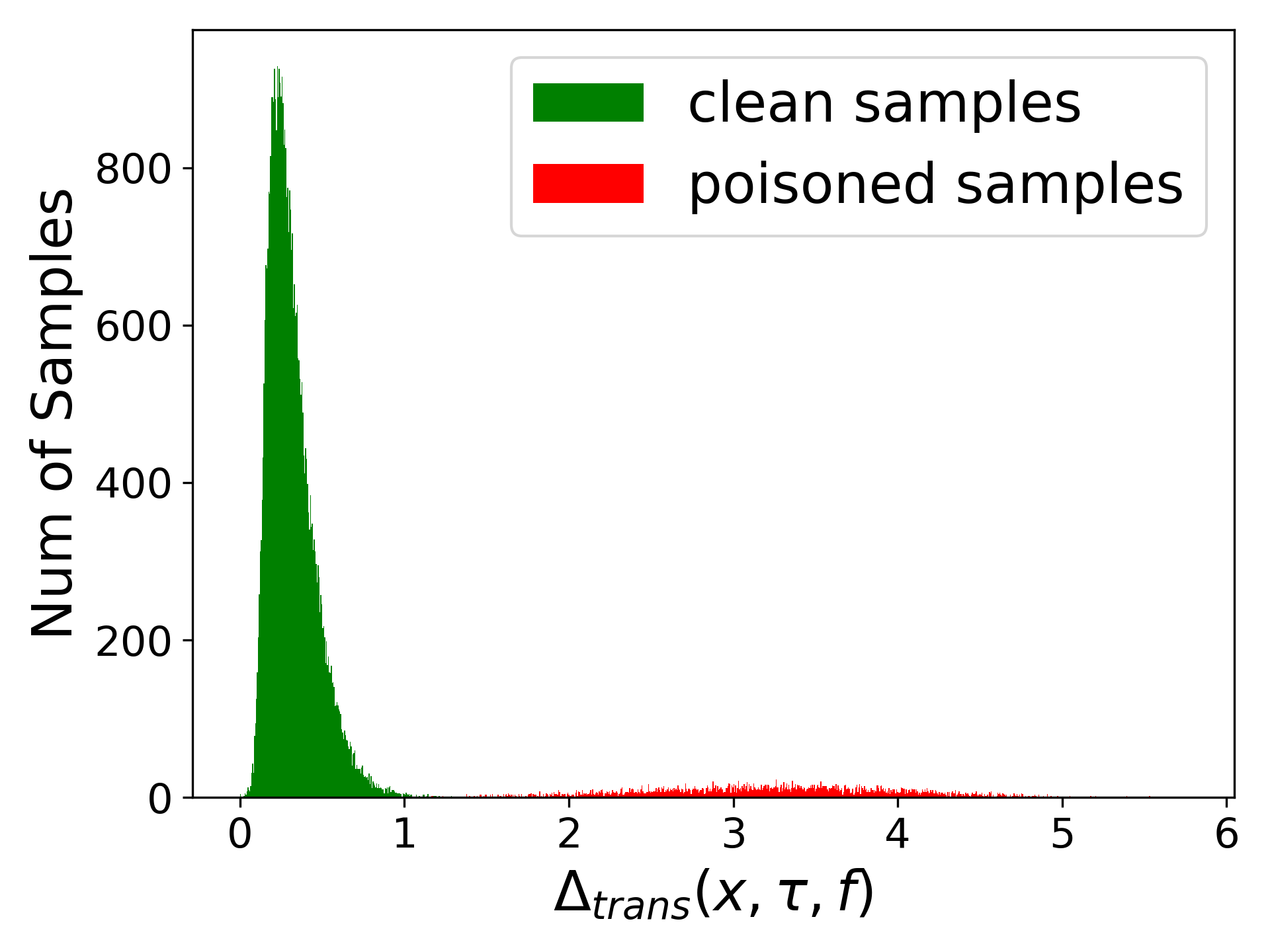

Step3: Calculate the values of the FCT metric ($\Delta_{trans}(x;\tau,f)$) for all training samples.

python calculate_consistency.py --dataset cifar10 --model resnet18 --trigger_type gridTrigger --checkpoint_load ./saved/backdoored_model/poison_rate_0.1/noTrans_ftsimi/cifar10/resnet18/gridTrigger/9.tar

--checkpoint_load specifies the path of the fine-tuned model.
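To make the idea of the metric concrete, here is a minimal sketch of a feature-consistency measure for one sample. It is not the code in calculate_consistency.py. It assumes that the metric is the squared L2 distance between the features of a sample and the features of its transformed version; refer to the paper for the exact definition of $\Delta_{trans}(x;\tau,f)$. The toy `toy_extractor` and `toy_transform` functions are stand-ins for the feature extractor $f$ and the transformation $\tau$.

```python
def fct(x, transform, extractor):
    """Squared L2 distance between extractor(x) and extractor(transform(x)).

    Assumption: this distance is used only for illustration; the exact
    form of the FCT metric is defined in the paper.
    """
    original = extractor(x)
    transformed = extractor(transform(x))
    return sum((a - b) ** 2 for a, b in zip(original, transformed))


def toy_extractor(x):
    # Stand-in for f: maps an input vector to a 2-dimensional feature.
    return [sum(x), max(x)]


def toy_transform(x):
    # Stand-in for tau: scales every input value by 0.5.
    return [0.5 * v for v in x]


if __name__ == "__main__":
    print(fct([1.0, 2.0, 3.0], toy_transform, toy_extractor))  # 11.25
```

A larger value means that the features of the sample change more under the transformation.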

If you want to visualize values of the FCT metric, you can run:

python visualize_consistency.py --dataset cifar10 --model resnet18 --trigger_type gridTrigger --checkpoint_load ./saved/backdoored_model/poison_rate_0.1/noTrans_ftsimi/cifar10/resnet18/gridTrigger/9.tar

The resulting figure is saved as "9.jpg" and shown as:

Step4: Calculate thresholds for choosing clean and poisoned samples.

python calculate_gamma.py --clean_ratio 0.20 --poison_ratio 0.05 --checkpoint_load ./saved/backdoored_model/poison_rate_0.1/noTrans_ftsimi/cifar10/resnet18/gridTrigger/9.tar

In this step, you obtain two threshold values, which are passed to Step5 as --gamma_low and --gamma_high.

--clean_ratio and --poison_ratio specify the ratios used to choose clean samples and poisoned samples, respectively.
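One plausible way to turn two ratios into two thresholds is to read them off the sorted FCT values, as sketched below. This is an assumption for illustration and not necessarily what calculate_gamma.py does: it assumes that the ratios are fractions of all training samples, that the samples with the smallest FCT values are treated as clean, and that those with the largest values are treated as poisoned. `calculate_thresholds` is a hypothetical name.

```python
def calculate_thresholds(fct_values, clean_ratio, poison_ratio):
    """Return (gamma_low, gamma_high) from a list of FCT values.

    Assumptions (not confirmed by the repository): low FCT suggests a clean
    sample, high FCT suggests a poisoned sample, and both ratios are
    fractions of the whole training set.
    """
    ordered = sorted(fct_values)
    n = len(ordered)
    n_clean = max(round(n * clean_ratio), 1)
    n_poison = max(round(n * poison_ratio), 1)
    gamma_low = ordered[n_clean - 1]    # largest value among the n_clean smallest
    gamma_high = ordered[n - n_poison]  # smallest value among the n_poison largest
    return gamma_low, gamma_high


if __name__ == "__main__":
    values = [float(i) for i in range(20)]
    print(calculate_thresholds(values, clean_ratio=0.20, poison_ratio=0.05))  # (3.0, 19.0)
```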

Step5: Separate training samples into clean samples, poisoned samples and uncertain samples.

python separate_samples.py --dataset cifar10 --model resnet18 --trigger_type gridTrigger --batch_size 1 --clean_ratio 0.20 --poison_ratio 0.05 --gamma_low 0.0 --gamma_high 19.71682357788086 --checkpoint_load ./saved/backdoored_model/poison_rate_0.1/noTrans_ftsimi/cifar10/resnet18/gridTrigger/9.tar

--gamma_low and --gamma_high specify the two thresholds obtained in Step4.
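The three-way split can be sketched as a comparison of each FCT value against the two thresholds. This is a simplified illustration, not the logic of separate_samples.py: it assumes that values at or below the lower threshold are treated as clean, values at or above the upper threshold as poisoned, and everything in between as uncertain. The FCT values in the example are made up; the thresholds are the ones from the command above.

```python
def separate_samples(fct_values, gamma_low, gamma_high):
    """Split sample indices into (clean, poisoned, uncertain).

    Assumption: inclusive comparisons and the low-is-clean direction are
    illustrative choices, not confirmed by the repository.
    """
    clean, poisoned, uncertain = [], [], []
    for index, value in enumerate(fct_values):
        if value <= gamma_low:
            clean.append(index)
        elif value >= gamma_high:
            poisoned.append(index)
        else:
            uncertain.append(index)
    return clean, poisoned, uncertain


if __name__ == "__main__":
    toy_values = [0.0, 0.3, 25.1, 7.9, 19.8]  # made-up FCT values
    print(separate_samples(toy_values, 0.0, 19.71682357788086))
    # ([0], [2, 4], [1, 3])
```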

The separated samples are saved at "./saved/separated_samples/cifar10/resnet18/gridTrigger_0.2_0.05". Specifically,

cd ST

Step1: Train the feature extractor via semi-supervised contrastive learning.

python train_extractor.py --dataset cifar10 --model resnet18 --trigger_type gridTrigger --epochs 200 --learning_rate 0.5 --temp 0.1 --cosine --save_freq 20 --batch_size 512

Parameters follow those used in Supervised Contrastive Learning (https://github.com/HobbitLong/SupContrast).

Checkpoints are saved at "./save/poison_rate_0.1/SupCon_models/cifar10/resnet18/gridTrigger_0.2_0.05/SupCon_cifar10_resnet18_lr_0.5_decay_0.0001_bsz_512_temp_0.1_trial_0_cosine_warm".

Step2: Train the classifier via minimizing a mixed cross-entropy loss.

python train_classifier.py --dataset cifar10 --model resnet18 --trigger_type gridTrigger --epochs 10 --learning_rate 5 --batch_size 512 --ckpt ./save/poison_rate_0.1/SupCon_models/cifar10/resnet18/gridTrigger/SupCon_cifar10_resnet18_lr_0.5_decay_0.0001_bsz_512_temp_0.1_trial_0_cosine_warm/last.pth

Parameters follow those used in Supervised Contrastive Learning. --ckpt specifies the path of the trained feature extractor.

Checkpoints are saved at "./save/poison_rate_0.1/SupCon_models/cifar10/resnet18/gridTrigger_0.2_0.05/Linear_cifar10_resnet18_lr_5.0_decay_0_bsz_512".

Step3: Test the final model.

python test.py --dataset cifar10 --model resnet18 --trigger_type gridTrigger --model_ckpt ./save/poison_rate_0.1/SupCon_models/cifar10/resnet18/gridTrigger/SupCon_cifar10_resnet18_lr_0.5_decay_0.0001_bsz_512_temp_0.1_trial_0_cosine_warm/last.pth --classifier_ckpt ./save/poison_rate_0.1/SupCon_models/cifar10/resnet18/gridTrigger/Linear_cifar10_resnet18_lr_5.0_decay_0_bsz_512/ckpt_epoch_9.pth

--model_ckpt and --classifier_ckpt specify the path of the trained feature extractor and classifier, respectively.
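The result tables report ACC and ASR. As a reminder of what these numbers usually mean, the sketch below computes both from lists of predictions. It is not the code in test.py and assumes the common definitions for an all2one attack: ACC is the percentage of clean test samples that are classified correctly, and ASR is the percentage of triggered test samples, excluding those whose true label is already the target, that are classified as the target label.

```python
def accuracy(predictions, labels):
    """Percentage of samples whose prediction equals the true label."""
    correct = sum(p == y for p, y in zip(predictions, labels))
    return 100.0 * correct / len(labels)


def attack_success_rate(triggered_predictions, labels, target_label):
    """Percentage of non-target samples predicted as the target once triggered.

    Assumption: all2one setting with a single target label.
    """
    hits = 0
    total = 0
    for prediction, label in zip(triggered_predictions, labels):
        if label == target_label:
            continue  # skip samples that already belong to the target class
        total += 1
        if prediction == target_label:
            hits += 1
    return 100.0 * hits / total


if __name__ == "__main__":
    print(accuracy([0, 1, 2, 2], [0, 1, 2, 1]))                      # 75.0
    print(attack_success_rate([0, 0, 1, 0, 0], [1, 2, 3, 0, 2], 0))  # 75.0
```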

Step1: Train a backdoored model with classical data augmentations.

If you use cifar10 or cifar100 as the dataset, please run the following command.

python train_attack_withTrans.py --dataset cifar10 --model resnet18 --trigger_type gridTrigger --epochs 200

Models of each epoch are saved at "./saved/backdoored_model/poison_rate_0.1/withTrans/cifar10/resnet18/gridTrigger". Note that you can refer to https://github.com/weiaicunzai/pytorch-cifar100 if you want higher accuracy for the model trained on cifar100.

If you use imagenet as the dataset, please run the following command.

python train_attack_withTrans_imagenet.py --dataset imagenet --model resnet18 --trigger_type squareTrigger_imagenet --epochs 25 --lr 0.001 --gamma 0.1 --schedule 15 20

Models of each epoch are saved at "./saved/backdoored_model/poison_rate_0.1/withTrans/imagenet/resnet18/squareTrigger_imagenet".

Step2: Unlearn and relearn the backdoored model.

python unlearn_relearn.py --dataset cifar10 --model resnet18 --trigger_type gridTrigger --epochs 20 --clean_ratio 0.20 --poison_ratio 0.05 --checkpoint_load ./saved/backdoored_model/poison_rate_0.1/withTrans/cifar10/resnet18/gridTrigger/199.tar --checkpoint_save ./saved/backdoored_model/poison_rate_0.1/withTrans/cifar10/resnet18/gridTrigger/199_unlearn_purify.py --log ./saved/backdoored_model/poison_rate_0.1/withTrans/cifar10/resnet18/gridTrigger/unlearn_purify.csv

This step unlearns the distinguished poisoned samples and relearns the distinguished clean samples.

--checkpoint_load specifies the path of the backdoored model. --checkpoint_save specifies the path where the resulting model is saved. --log specifies the path of the log file.

If you want to run the CL attack, you need to train a clean model first. Please use the following command.

python train_clean_withTrans.py --dataset cifar10 --model resnet18 --epochs 200

Models of each epoch are saved at "./saved/benign_model/cifar10/resnet18".

If you want to run the SSBA attack, please enable the following commented-out line in dataloader_bd.py.

from utils.SSBA.encode_image import bd_generator

You also need to refer to https://github.com/SCLBD/ISSBA to download the encoder for imagenet and save it at "./trigger/imagenet_encoder/saved_model.pb".