This repository hosts the code for OCCAM (Object-Centric Compositional Attention Model), proposed in the following paper:

Zhonghao Wang, Mo Yu, Kai Wang, Jinjun Xiong, Wen-mei Hwu, Mark Hasegawa-Johnson and Humphrey Shi, *Interpretable Visual Reasoning via Induced Symbolic Space*, [arXiv:2011.11603](https://arxiv.org/abs/2011.11603).

Note: Our code will be released soon; stay tuned.

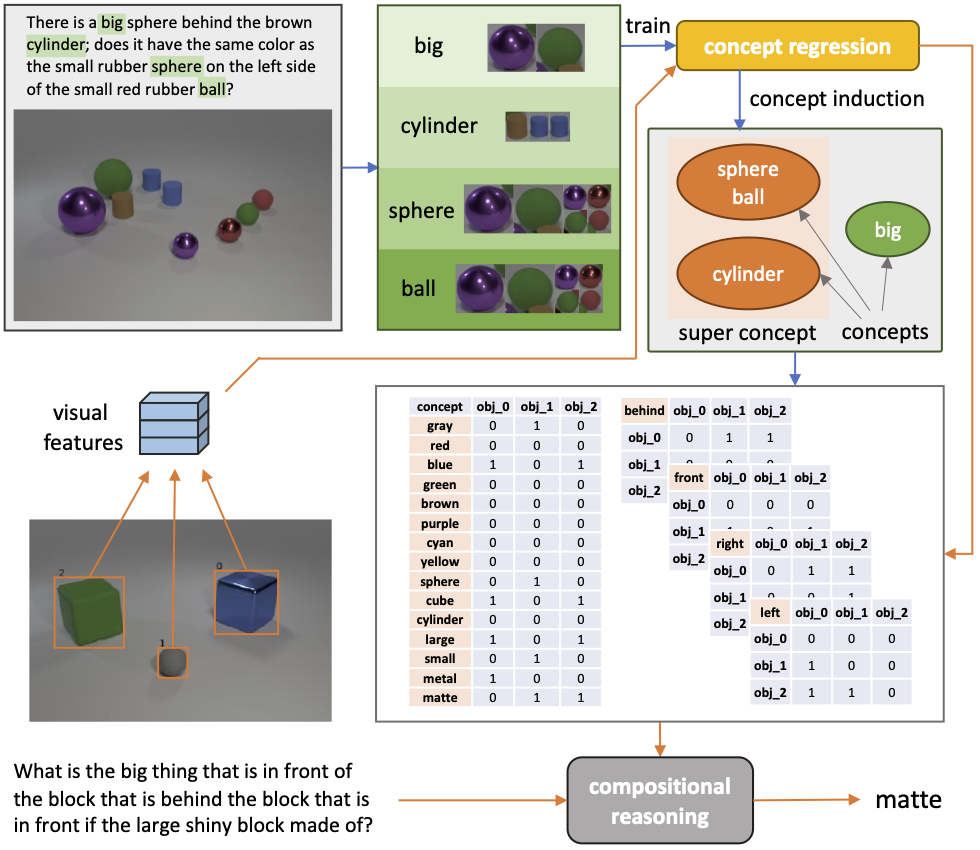

Our proposed OCCAM framework performs purely object-level reasoning and achieves new state-of-the-art performance on the CLEVR dataset without human-annotated functional programs. The framework makes object-word co-occurrence information available, which enables the induction of concepts and super concepts based on the inclusiveness and mutual exclusiveness of words' visual mappings. When operating on the induced concepts instead of visual features, OCCAM achieves comparable performance, demonstrating that the induced concepts are accurate and sufficient for the task.
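Since the official code has not been released, the snippet below is only a toy illustration of the inclusiveness and mutual-exclusiveness idea described above, not OCCAM's actual induction procedure. It assumes each word has already been mapped to the set of object IDs it refers to; the data (`WORD_TO_OBJECTS`), the function names, and the greedy grouping strategy are all hypothetical.

```python
from itertools import permutations

# Hypothetical toy data (not from the paper): each word maps to the set of
# object IDs it refers to across a few scenes.
WORD_TO_OBJECTS = {
    "object": {0, 1, 2, 3, 4, 5},
    "red": {0, 1},
    "blue": {2, 3},
    "green": {4, 5},
    "cube": {0, 2, 4},
    "sphere": {1, 3, 5},
}


def find_inclusions(mapping):
    """Return (specific, general) word pairs whose object sets are strictly nested."""
    return sorted(
        (a, b) for a, b in permutations(mapping, 2) if mapping[a] < mapping[b]
    )


def find_exclusive_groups(mapping):
    """Greedily group words whose object sets are pairwise disjoint.

    Words covering every object (e.g. "object") are skipped, since they
    overlap with everything. The greedy pass depends on word order.
    """
    universe = set().union(*mapping.values())
    groups = []
    for word in sorted(mapping):
        if mapping[word] == universe:
            continue
        for group in groups:
            if all(mapping[word].isdisjoint(mapping[other]) for other in group):
                group.append(word)
                break
        else:
            groups.append([word])
    # Keep only groups that jointly cover all objects: candidate super concepts.
    return [g for g in groups if set().union(*(mapping[w] for w in g)) == universe]


print(find_inclusions(WORD_TO_OBJECTS))
print(find_exclusive_groups(WORD_TO_OBJECTS))
```

On this toy data, every color and shape word is nested under "object", and the greedy pass yields two disjoint, covering groups, `["blue", "green", "red"]` and `["cube", "sphere"]`, which one might read as the super concepts *color* and *shape*.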

The table below compares our object-level compositional reasoning framework with state-of-the-art methods on CLEVR (accuracy, %). * indicates that the method uses external program annotations.

| method | overall | count | exist | compare number | query attribute | compare attribute |
|---|---|---|---|---|---|---|
| Human | 92.6 | 86.7 | 96.6 | 86.5 | 95.0 | 96.0 |
| NMN* | 72.1 | 52.5 | 72.7 | 79.3 | 79.0 | 78.0 |
| N2NMN* | 83.7 | 68.5 | 85.7 | 84.9 | 90.0 | 88.7 |
| IEP* | 96.9 | 92.7 | 97.1 | 98.7 | 98.1 | 98.9 |
| TbD* | 99.1 | 97.6 | 99.4 | 99.2 | 99.5 | 99.6 |
| NS-VQA* | 99.8 | 99.7 | 99.9 | 99.9 | 99.8 | 99.8 |
| RN | 95.5 | 90.1 | 93.6 | 97.8 | 97.1 | 97.9 |
| FiLM | 97.6 | 94.5 | 93.8 | 99.2 | 99.2 | 99.0 |
| MAC | 98.9 | 97.2 | 99.4 | 99.5 | 99.3 | 99.5 |
| NS-CL | 98.9 | 98.2 | 99.0 | 98.8 | 99.3 | 99.1 |
| OCCAM (ours) | 99.4 | 98.1 | 99.8 | 99.0 | 99.9 | 99.9 |

@article{wang2020interpretable,
  title={Interpretable Visual Reasoning via Induced Symbolic Space},
  author={Wang, Zhonghao and Yu, Mo and Wang, Kai and Xiong, Jinjun and Hwu, Wen-mei and Hasegawa-Johnson, Mark and Shi, Humphrey},
  journal={arXiv preprint arXiv:2011.11603},
  year={2020}
}