UltraSR: Spatial Encoding is a Missing Key for Implicit Image Function-based Arbitrary-Scale Super-Resolution

Paper Link: ArXiv Preprint

By Xingqian Xu, Zhangyang Wang, and Humphrey Shi

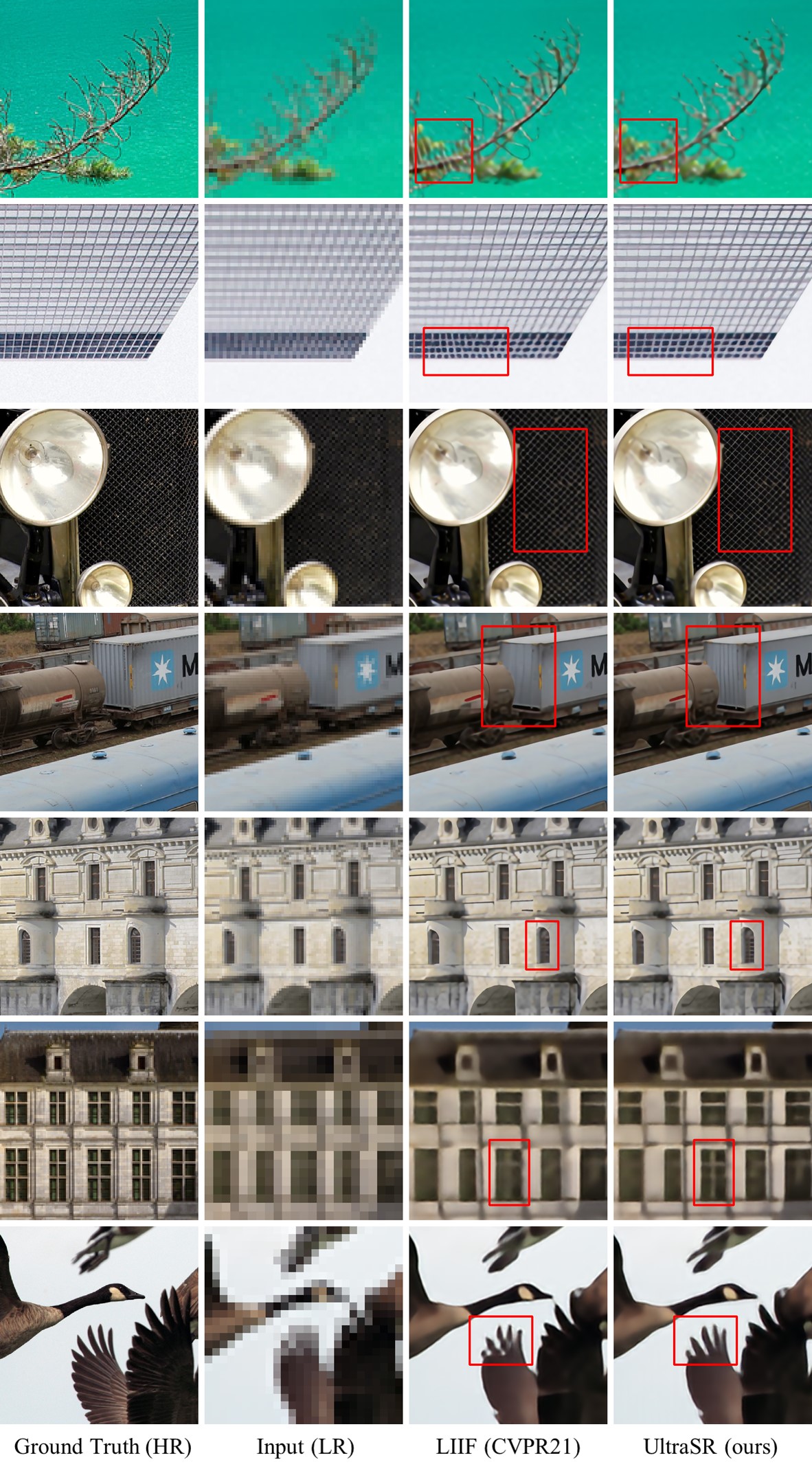

Arbitrary-scale super-resolution is a rising research topic with tremendous application potential. Prior CNN-based SR approaches usually apply to only one fixed resolution scale and are thus unable to adjust their output dimension without changing the low-resolution input. Such a design creates a huge gap between academic research and practical usage, and a majority of image up-sampling applications, even those sensitive to precision, still rely heavily on bicubic interpolation despite its poor quality. Empowered by rapidly advancing techniques in implicit neural representation, images and scenes can now be generalized by network-learned implicit functions across various vision topics. Specifically for our SR task, the idea of using one trained network for all zoom-in scales on any input image will bring both convenience and accuracy to downstream users in the near future. In this work, we propose UltraSR, a simple yet effective new network design based on implicit image functions in which spatial coordinates and periodic encoding are deeply integrated with the implicit neural representation. UltraSR sets new state-of-the-art performance on the DIV2K benchmark for arbitrary-scale super-resolution. UltraSR also achieves superior performance on other standard benchmark datasets, outperforming prior works in almost all experiments.
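
To make the phrase "periodic encoding" of spatial coordinates more concrete, here is a minimal sketch of a generic sinusoidal coordinate encoding. The function name `periodic_encode`, the parameter `num_freqs`, and the frequency schedule `2^k * pi` are illustrative assumptions; the exact formulation used by UltraSR may differ.

```python
import math


def periodic_encode(coord, num_freqs=4):
    """Encode each scalar coordinate with sine/cosine pairs.

    Assumed frequency schedule (not taken from the paper): 2^k * pi
    for k = 0 .. num_freqs - 1. Returns a flat list of length
    len(coord) * num_freqs * 2.
    """
    features = []
    for x in coord:
        for k in range(num_freqs):
            angle = (2.0 ** k) * math.pi * x
            features.append(math.sin(angle))
            features.append(math.cos(angle))
    return features


# Example: encode a 2D query coordinate normalized to [-1, 1].
encoded = periodic_encode((0.25, -0.5), num_freqs=3)
print(len(encoded))
```

In an implicit image function, a vector like `encoded` would typically be fed to the decoding network together with the local image features, so that the network sees high-frequency functions of the query position instead of the raw coordinate alone.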

Result comparison on the DIV2K validation dataset (PSNR, dB).

| Method | x2 | x3 | x4 | x6 | x12 | x18 | x24 | x30 |
|---|---|---|---|---|---|---|---|---|
| Bicubic | 31.01 | 28.22 | 26.66 | 24.82 | 22.27 | 21.00 | 20.19 | 19.59 |
| EDSR | 34.55 | 30.90 | 28.92 | - | - | - | - | - |
| MetaSR-EDSR | 34.64 | 30.93 | 28.92 | 26.61 | 23.55 | 22.03 | 21.06 | 20.37 |
| LIIF-EDSR | 34.67 | 30.96 | 29.00 | 26.75 | 23.71 | 22.17 | 21.18 | 20.48 |
| UltraSR-EDSR | 34.69 | 31.02 | 29.05 | 26.81 | 23.75 | 22.21 | 21.21 | 20.51 |
| MetaSR-RDN | 35.00 | 31.27 | 29.25 | 26.88 | 23.73 | 22.18 | 21.17 | 20.47 |
| LIIF-RDN | 34.99 | 31.26 | 29.27 | 26.99 | 23.89 | 22.34 | 21.31 | 20.59 |
| UltraSR-RDN | 35.00 | 31.30 | 29.32 | 27.03 | 23.93 | 22.36 | 21.33 | 20.61 |
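
The scores in these comparisons are peak signal-to-noise ratios. As a rough illustration of the metric only, the sketch below computes PSNR from the mean squared error between two flat lists of pixel values. The function name `psnr` and the `max_value` parameter are assumptions for this example; benchmark protocols usually add details such as border cropping or channel selection, which are omitted here.

```python
import math


def psnr(reference, estimate, max_value=1.0):
    """Peak signal-to-noise ratio in dB between two equal-length pixel lists."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    # Mean squared error over all pixels.
    mse = sum((r - e) ** 2 for r, e in zip(reference, estimate)) / len(reference)
    if mse == 0:
        return math.inf  # identical images
    return 10.0 * math.log10(max_value ** 2 / mse)


# Example with pixel values in [0, 1].
print(round(psnr([0.0, 1.0], [0.1, 0.9]), 2))
```

Because the scale is logarithmic, a gain of a few hundredths of a dB corresponds to a small but consistent reduction in mean squared error.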

Result comparison on five other benchmark datasets (PSNR, dB).

| Dataset | Method | x2 | x3 | x4 | x6 | x8 | x12 |
|---|---|---|---|---|---|---|---|
| Set5 | RDN | 38.24 | 34.71 | 32.47 | - | - | - |
| | MetaSR-RDN | 38.22 | 34.63 | 32.38 | 29.04 | 29.96 | - |
| | LIIF-RDN | 38.17 | 34.68 | 32.50 | 29.15 | 27.14 | 24.86 |
| | UltraSR-RDN | 38.21 | 34.67 | 32.49 | 29.33 | 27.24 | 24.81 |
| Set14 | RDN | 34.01 | 30.57 | 28.81 | - | - | - |
| | MetaSR-RDN | 33.98 | 30.54 | 28.78 | 26.51 | 24.97 | - |
| | LIIF-RDN | 33.97 | 30.53 | 28.80 | 26.64 | 25.15 | 23.24 |
| | UltraSR-RDN | 33.97 | 30.59 | 28.86 | 26.69 | 25.25 | 23.32 |
| B100 | RDN | 32.34 | 29.26 | 27.72 | - | - | - |
| | MetaSR-RDN | 32.33 | 29.26 | 27.71 | 25.90 | 24.83 | - |
| | LIIF-RDN | 32.32 | 29.26 | 27.74 | 25.98 | 24.91 | 23.57 |
| | UltraSR-RDN | 32.35 | 29.29 | 27.77 | 26.01 | 24.96 | 23.59 |
| Urban100 | RDN | 32.89 | 28.80 | 26.61 | - | - | - |
| | MetaSR-RDN | 32.92 | 28.82 | 26.55 | 23.99 | 22.59 | - |
| | LIIF-RDN | 32.87 | 28.82 | 26.68 | 24.20 | 22.79 | 21.15 |
| | UltraSR-RDN | 32.97 | 28.92 | 26.78 | 24.30 | 22.87 | 21.20 |
| Manga109 | RDN | 39.18 | 34.13 | 31.00 | - | - | - |
| | MetaSR-RDN | - | - | - | - | - | - |
| | LIIF-RDN | 39.26 | 34.21 | 31.20 | 27.33 | 25.04 | 22.36 |
| | UltraSR-RDN | 39.09 | 34.28 | 31.32 | 27.42 | 25.12 | 22.42 |

    @article{xu2021ultrasr,
      title={UltraSR: Spatial Encoding is a Missing Key for Implicit Image Function-based Arbitrary-Scale Super-Resolution},
      author={Xingqian Xu and Zhangyang Wang and Humphrey Shi},
      journal={arXiv preprint arXiv:2103.12716},
      year={2021}
    }

Our work benefited greatly from LIIF (CVPR 2021) and other works. We thank the authors for sharing their code.