Distilling a Powerful Student Model via Online Knowledge Distillation (Link)

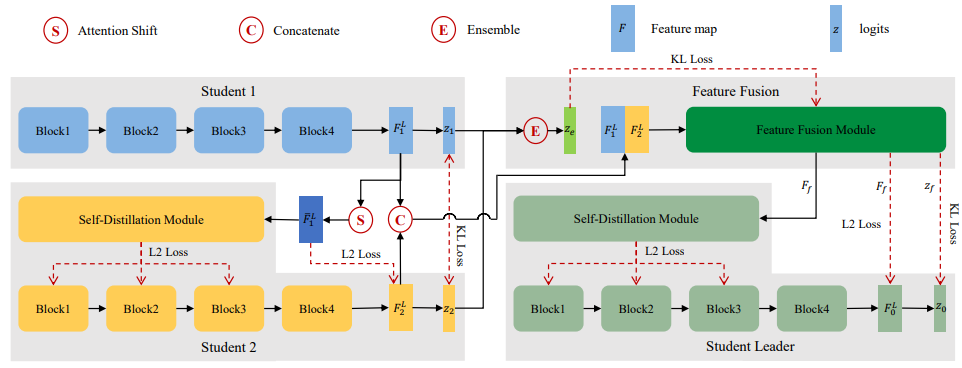

The framework of our proposed FFSD for online knowledge distillation. First, student 1 and student 2 learn from each other collaboratively. Then, by shifting the attention of student 1 and distilling it to student 2, we enhance the diversity among students. Finally, the feature fusion module fuses the information from all students into a single fused feature map, which is used to assist the learning of the student leader. After training, we keep only the student leader, which achieves superior performance over all other students.
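To make the first step (two students learning from each other) more concrete, here is a minimal sketch of a mutual-learning loss. It assumes the common formulation in which each student minimizes the KL divergence to the other's temperature-softened prediction; the caption above does not state the exact loss, so this is an illustration and not the repository's implementation. The function names and the `temperature` value are hypothetical, and plain Python lists are used so the snippet runs without PyTorch.

```python
import math


def softmax(logits, temperature=1.0):
    """Numerically stable softmax over a list of logits."""
    scaled = [z / temperature for z in logits]
    m = max(scaled)
    exps = [math.exp(z - m) for z in scaled]
    total = sum(exps)
    return [e / total for e in exps]


def kl_divergence(p, q, eps=1e-12):
    """KL(p || q) for two discrete distributions given as lists."""
    return sum(pi * math.log((pi + eps) / (qi + eps)) for pi, qi in zip(p, q))


def mutual_learning_losses(logits_1, logits_2, temperature=3.0):
    """Return (loss for student 1, loss for student 2).

    Assumption: each student treats the other's softened prediction
    as a fixed target, as in standard mutual learning.
    """
    p1 = softmax(logits_1, temperature)
    p2 = softmax(logits_2, temperature)
    loss_1 = kl_divergence(p2, p1)  # pulls student 1 toward student 2
    loss_2 = kl_divergence(p1, p2)  # pulls student 2 toward student 1
    return loss_1, loss_2


# Hypothetical logits for a 3-class toy example.
loss_1, loss_2 = mutual_learning_losses([2.0, 0.5, -1.0], [0.0, 1.5, 0.3])
print(loss_1, loss_2)
```

Both losses vanish when the two students agree and grow as their predictions diverge, which is why a separate diversity term (the second step in the caption) is needed to keep the students from collapsing onto each other.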

The code has been tested with PyTorch 1.5.1 and CUDA 10.2 on Ubuntu 18.04.

Run the following command to install the dependencies:

pip install -r requirements.txt


You can run the following code to train models on CIFAR-100:

python cifar.py --dataroot ./database/cifar100 --dataset cifar100 --model resnet32 --lambda_diversity 1e-5 --lambda_self_distillation 1000 --lambda_fusion 10 --gpu_ids 0 --name cifar100_resnet32_div1e-5_sd1000_fusion10


You can run the following code to train models on ImageNet:

python distribute_imagenet.py --dataroot ./database/imagenet --dataset imagenet --model resnet18 --lambda_diversity 1e-5 --lambda_self_distillation 1000 --lambda_fusion 10 --gpu_ids 0,1 --name imagenet_resnet18_div1e-5_sd1000_fusion10
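Both training commands follow the same pattern, and the `--name` value appears to encode the dataset, model, and the three loss weights. The sketch below assembles such a command programmatically, which can help when sweeping hyperparameters. The helper `build_train_command` is hypothetical and not part of the repository, and the naming pattern is inferred from the two examples above; only the flags shown in those examples are used.

```python
import shlex


def build_train_command(script, dataroot, dataset, model,
                        lambda_diversity="1e-5",
                        lambda_self_distillation="1000",
                        lambda_fusion="10",
                        gpu_ids="0"):
    """Return the training command as an argument list."""
    # Run name mirrors the pattern seen in the README examples
    # (an inferred convention, not a documented one).
    name = (f"{dataset}_{model}_div{lambda_diversity}"
            f"_sd{lambda_self_distillation}_fusion{lambda_fusion}")
    return [
        "python", script,
        "--dataroot", dataroot,
        "--dataset", dataset,
        "--model", model,
        "--lambda_diversity", lambda_diversity,
        "--lambda_self_distillation", lambda_self_distillation,
        "--lambda_fusion", lambda_fusion,
        "--gpu_ids", gpu_ids,
        "--name", name,
    ]


cmd = build_train_command("cifar.py", "./database/cifar100",
                          "cifar100", "resnet32")
print(shlex.join(cmd))  # pass `cmd` to subprocess.run to launch training
```

Keeping the command as a list avoids shell-quoting problems if it is later handed to `subprocess.run`.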

We provide the student leader models from our experiments, along with their training logs and configurations.

| Model | Dataset | Top-1 Accuracy (%) | Download |
|---|---|---|---|
| ResNet20 | CIFAR-100 | 72.64 | Link |
| ResNet20 | CIFAR-100 | 72.58 | Link |
| ResNet20 | CIFAR-100 | 72.88 | Link |
| ResNet32 | CIFAR-100 | 74.92 | Link |
| ResNet32 | CIFAR-100 | 74.82 | Link |
| ResNet32 | CIFAR-100 | 74.82 | Link |
| ResNet56 | CIFAR-100 | 75.84 | Link |
| ResNet56 | CIFAR-100 | 75.66 | Link |
| ResNet56 | CIFAR-100 | 75.91 | Link |
| WRN-16-2 | CIFAR-100 | 75.87 | Link |
| WRN-16-2 | CIFAR-100 | 75.86 | Link |
| WRN-16-2 | CIFAR-100 | 75.69 | Link |
| WRN-40-2 | CIFAR-100 | 79.13 | Link |
| WRN-40-2 | CIFAR-100 | 79.19 | Link |
| WRN-40-2 | CIFAR-100 | 79.11 | Link |
| DenseNet | CIFAR-100 | 77.29 | Link |
| DenseNet | CIFAR-100 | 77.70 | Link |
| DenseNet | CIFAR-100 | 77.17 | Link |
| GoogLeNet | CIFAR-100 | 81.52 | Link |
| GoogLeNet | CIFAR-100 | 81.93 | Link |
| GoogLeNet | CIFAR-100 | 81.34 | Link |
| ResNet-18 | ImageNet | 70.87 | Link |
| ResNet-34 | ImageNet | 74.69 | Link |
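Each CIFAR-100 architecture is listed three times in the table. Assuming those rows are three independent runs of the same configuration (the table does not say so explicitly), the short script below summarizes them as a mean and sample standard deviation. The accuracy values are copied from the table; the `summarize` helper is only illustrative.

```python
from statistics import mean, stdev

# Top-1 accuracies (%) copied from the table above.
# Treating the three rows per model as repeated runs is an assumption.
runs = {
    "ResNet20": [72.64, 72.58, 72.88],
    "ResNet32": [74.92, 74.82, 74.82],
    "ResNet56": [75.84, 75.66, 75.91],
    "WRN-16-2": [75.87, 75.86, 75.69],
    "WRN-40-2": [79.13, 79.19, 79.11],
    "DenseNet": [77.29, 77.70, 77.17],
    "GoogLeNet": [81.52, 81.93, 81.34],
}


def summarize(accuracies):
    """Return (mean, sample standard deviation), rounded to 2 decimals."""
    return round(mean(accuracies), 2), round(stdev(accuracies), 2)


for model, accuracies in runs.items():
    avg, std = summarize(accuracies)
    print(f"{model}: {avg:.2f} +/- {std:.2f}")
```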

You can use the following command to test our models:

python test.py --dataroot ./database/cifar100 --dataset cifar100 --model resnet32 --gpu_ids 0 --load_path ./resnet32/cifar100_resnet32_div1e-5_sd1000_fusion10_1/modelleader_best.pth

If you have any problems, feel free to contact the authors via email: shaojieli@stu.xmu.edu.cn.