A ComfyUI custom node package based on the BAGEL-7B-MoT multimodal model.

BAGEL is an open-source multimodal foundation model with 7B active parameters (14B total) that adopts a Mixture-of-Transformer-Experts (MoT) architecture. It is designed for multimodal understanding and generation tasks, outperforming top-tier open-source VLMs like Qwen2.5-VL and InternVL-2.5 on standard multimodal understanding leaderboards, and delivering text-to-image quality competitive with specialist generators such as SD3.

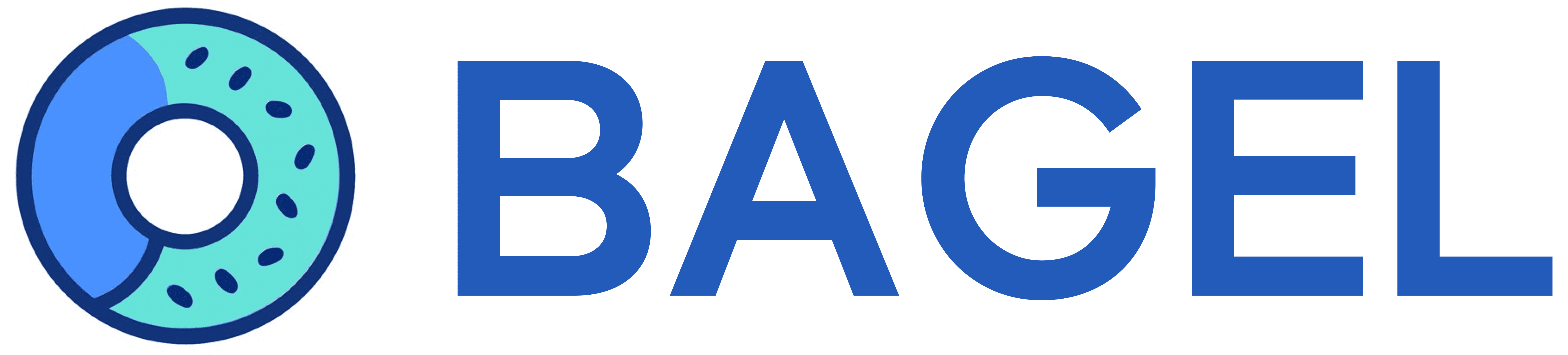

- Text-to-Image Generation: Generate high-quality images using natural language prompts

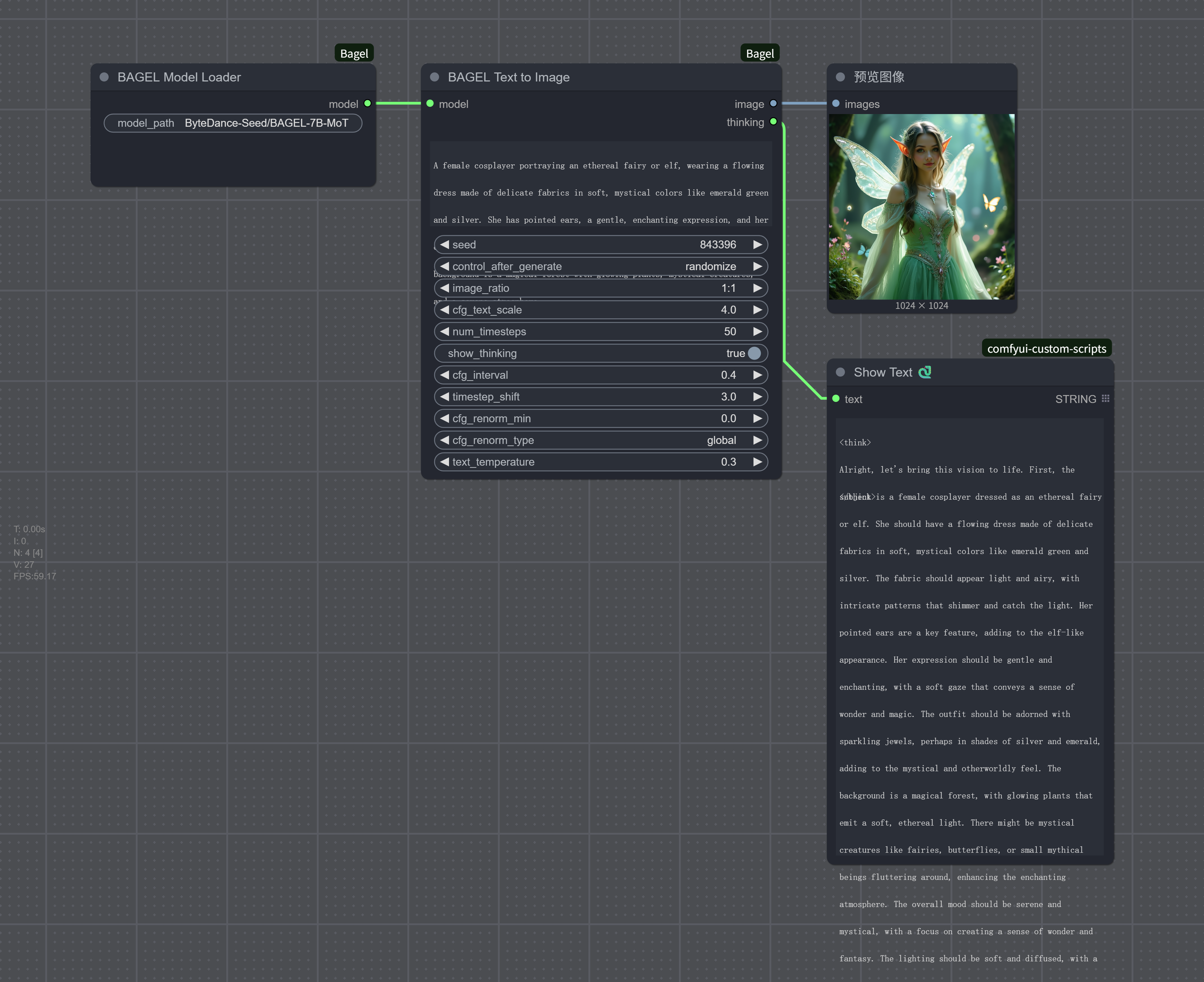

- Image Editing: Edit existing images based on textual descriptions

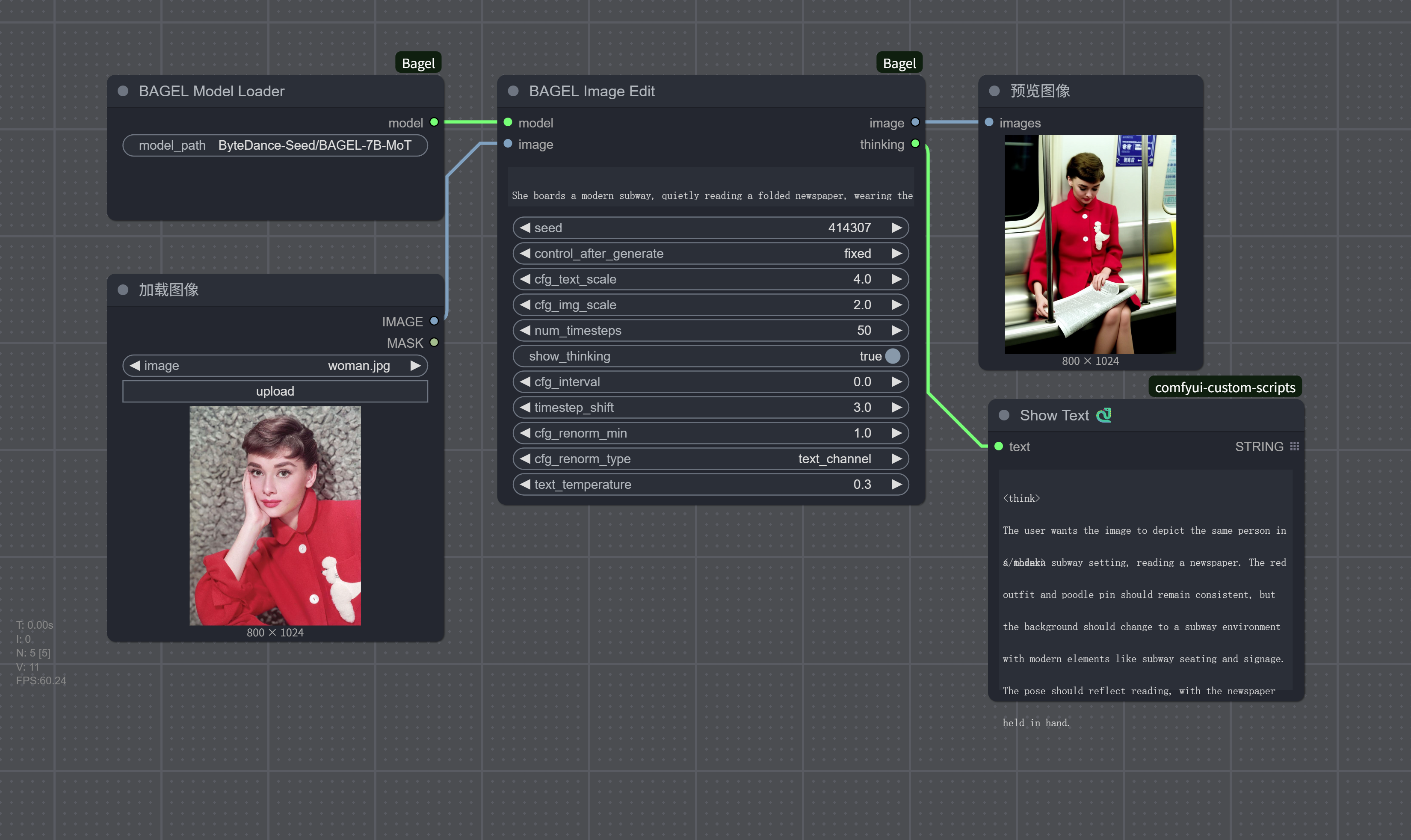

- Image Understanding: Perform Q&A and analysis on images

- Reasoning Process Display: Optionally display the model's reasoning process

The BAGEL-7B-MoT model is downloaded automatically to models/bagel/BAGEL-7B-MoT/ on first use. You can also download it manually:

# Clone model using git lfs (recommended)

git lfs install

git clone https://huggingface.co/ByteDance-Seed/BAGEL-7B-MoT models/bagel/BAGEL-7B-MoT

# Or use huggingface_hub

pip install huggingface_hub

python -c "from huggingface_hub import snapshot_download; snapshot_download(repo_id='ByteDance-Seed/BAGEL-7B-MoT', local_dir='models/bagel/BAGEL-7B-MoT')"

Install dependencies:

pip install -r requirements.txt

Restart ComfyUI to load the new nodes.
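The automatic download described above amounts to a check-then-fetch step. The following is a minimal sketch of that idea, assuming `huggingface_hub` is installed. The helper name `ensure_bagel_model`, its `fetch` parameter, and the "directory is non-empty" check are illustrative assumptions, not this package's actual loader.

```python
from pathlib import Path

REPO_ID = "ByteDance-Seed/BAGEL-7B-MoT"
DEFAULT_DIR = "models/bagel/BAGEL-7B-MoT"


def ensure_bagel_model(local_dir=DEFAULT_DIR, repo_id=REPO_ID, fetch=None):
    """Return the model directory, downloading the snapshot only if it is missing.

    `fetch` is a hypothetical hook added for this sketch so the download step
    can be swapped out; by default it is huggingface_hub.snapshot_download.
    """
    path = Path(local_dir)
    # Assumption: a non-empty directory means the model is already present.
    if path.is_dir() and any(path.iterdir()):
        return path
    if fetch is None:
        # Imported lazily so the existence check works without huggingface_hub.
        from huggingface_hub import snapshot_download
        fetch = snapshot_download
    path.mkdir(parents=True, exist_ok=True)
    fetch(repo_id=repo_id, local_dir=str(path))
    return path
```

Calling `ensure_bagel_model()` from the ComfyUI root directory would fetch the weights once and return immediately on later calls. A non-empty directory is only a rough signal: an interrupted download would also pass this check, so a real loader may want to verify specific files.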

Generate high-quality images from text descriptions. Suitable for creative design and content generation.


Edit existing images based on textual descriptions, supporting local modifications and style adjustments.


Analyze and answer questions about image content, suitable for content understanding and information extraction.


This project is licensed under the Apache 2.0 License. Please refer to the official license terms for the use of the BAGEL model.

Contributions are welcome! Please submit issue reports and feature requests. If you wish to contribute code, please create an issue to discuss your ideas first.

The official recommendation for generating a 1024×1024 image is over 80GB GPU memory. However, multi-GPU setups can distribute the memory load. For example:

- Single GPU: A100 (40GB) takes approximately 340-380 seconds per image.

- Multi-GPU: Three RTX 3090 GPUs (24GB each) complete the task in about 1 minute.

- Compressed Model: Using the DFloat11 version requires only 22GB VRAM and can run on a single 24GB GPU, with peak memory usage around 21.76GB (A100) and generation time of approximately 58 seconds.

For more details, visit the GitHub issue.
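To make the options above easier to compare against your own hardware, here is a rough sketch that maps a list of per-GPU memory sizes to one of the listed setups. The function name `suggest_setup` and the thresholds are assumptions taken directly from the data points quoted above (80GB, 40GB, 3×24GB, 24GB). They are reported configurations, not verified minimum requirements.

```python
def suggest_setup(gpu_memory_gb):
    """Map per-GPU memory sizes (in GB) to one of the setups reported above.

    All thresholds are assumptions derived from the figures quoted in this
    README, not measured minimums.
    """
    if not gpu_memory_gb:
        return "no GPU"
    largest = max(gpu_memory_gb)
    total = sum(gpu_memory_gb)
    if largest >= 80:
        # Official recommendation for 1024x1024 generation.
        return "single GPU, full model"
    if len(gpu_memory_gb) > 1 and total >= 72:
        # Matches the reported 3 x 24GB configuration.
        return "multi-GPU, full model"
    if largest >= 40:
        # Matches the reported A100 (40GB) run, several minutes per image.
        return "single GPU, full model (slow)"
    if largest >= 24:
        # DFloat11 is reported to need about 22GB.
        return "single GPU, DFloat11"
    return "insufficient VRAM for the listed setups"
```

For example, `suggest_setup([24, 24, 24])` selects the multi-GPU option, while `suggest_setup([24])` falls back to the DFloat11 version.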

A quantized version of BAGEL is currently under development, which aims to reduce VRAM requirements further.

This issue is likely related to environment or dependency problems. For more information, refer to this GitHub issue.