In this project, GANimator is used to perform crowd simulation using only one motion sequence. It was done as the final project for the "High Performance Computing for Data Science" course at the University of Trento.

For instructions on how to run the model, the original readme from the GANimator repository has been appended to the end of this file.

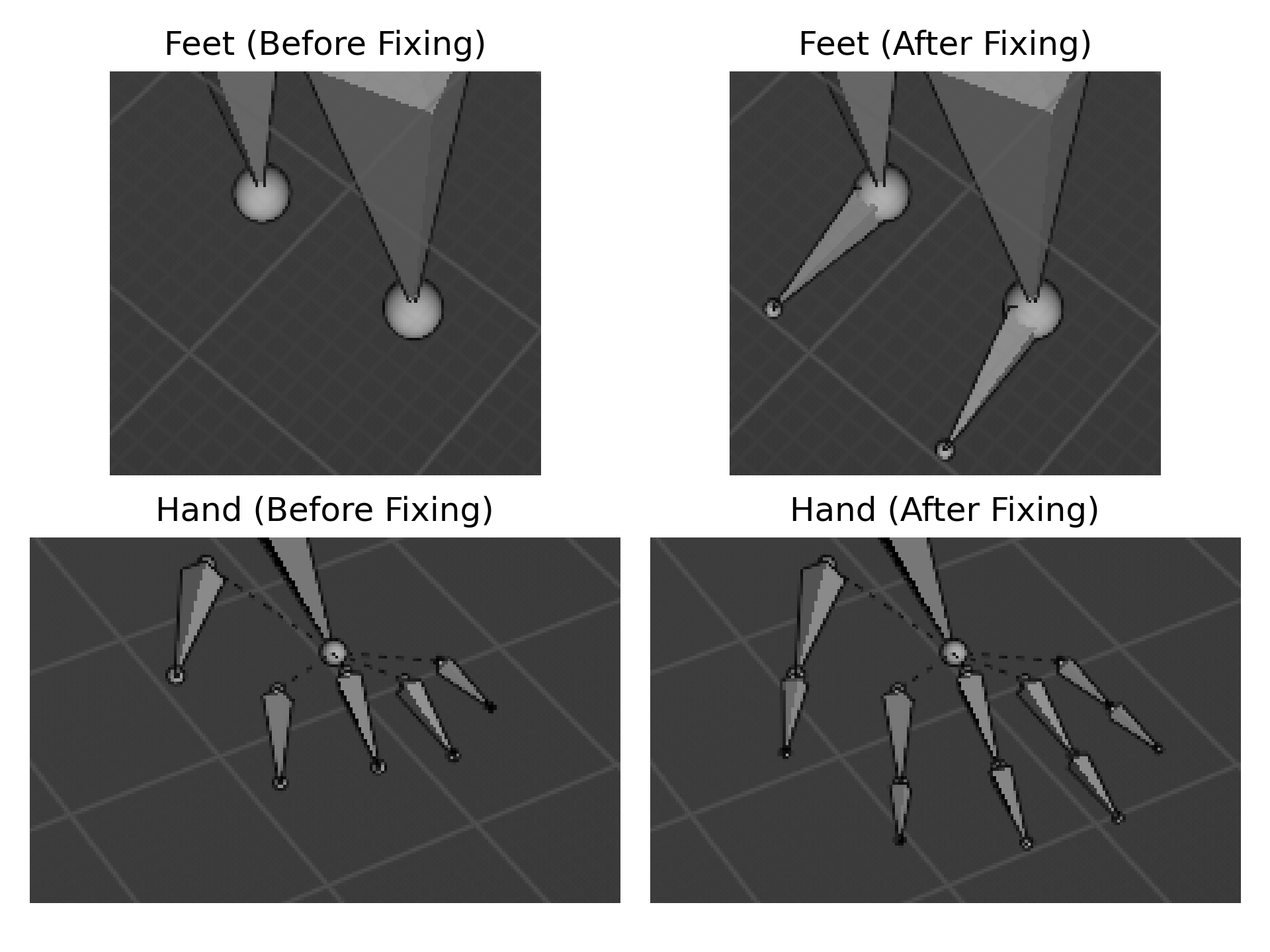

In all BVH files generated by GANimator, the end site offsets are set to zero in all three dimensions. As a result, each end site sits at exactly the same position as its parent joint and is therefore not visible. Since the generated BVH file has exactly the same joints and initial positions as the training example, the correct end site offsets can be transferred from the training example to the generated result. You can do this by running the transfer_endsites.py script in the data directory.
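Conceptually, the transfer copies each End Site OFFSET line from one file into the other. The following is a minimal sketch of that idea and not the contents of transfer_endsites.py: the function names are hypothetical, the source is assumed to be the training example, and both files are assumed to list their end sites in the same order (which holds when the skeletons are identical).

```python
def end_site_offset_lines(lines):
    """Return the indices of OFFSET lines that sit inside 'End Site' blocks."""
    indices = []
    inside_end_site = False
    for i, line in enumerate(lines):
        stripped = line.strip()
        if stripped.startswith("End Site"):
            inside_end_site = True
        elif inside_end_site and stripped.startswith("OFFSET"):
            indices.append(i)
            inside_end_site = False
    return indices


def transfer_end_sites(source_text, target_text):
    """Copy End Site offsets from source_text into target_text (BVH file contents)."""
    source = source_text.splitlines()
    target = target_text.splitlines()
    src_idx = end_site_offset_lines(source)
    tgt_idx = end_site_offset_lines(target)
    if len(src_idx) != len(tgt_idx):
        raise ValueError("the two files have a different number of end sites")
    for s, t in zip(src_idx, tgt_idx):
        # Keep the target's indentation, take the numbers from the source.
        indent = target[t][: len(target[t]) - len(target[t].lstrip())]
        target[t] = indent + source[s].strip()
    return "\n".join(target) + "\n"
```

The two arguments are the full text of each file, for example as read with pathlib.Path.read_text(); the returned string can be written back over the generated file.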

python transfer_endsites.py source.bvh target.bvh

First download the pre-trained weights from here. Extract the zip file and move the Eight directory into the pre-trained directory in the root of the project. This directory contains the pre-trained weights for the Eight motion sequence available in the data directory. You can also train your own model using the instructions in the original readme.

After downloading the weights, you can generate a crowd by running the demo.sh script.

./demo.sh

This will generate 30 different motion sequences using the Eight pre-trained weights. The result is saved in the pre-trained/Eight directory. You can visualize the generated BVH files using Blender.

This repository provides a library for novel motion synthesis from a single example, as well as applications including style transfer, motion mixing, key-frame editing and conditional generation. It is based on our work GANimator: Neural Motion Synthesis from a Single Sequence, published at SIGGRAPH 2022.

This code has been tested under Ubuntu 20.04. Before starting, please configure your Anaconda environment by

conda env create -f environment.yaml

conda activate ganimator

In case you encounter a GLIBCXX-3.4.29 not found issue, please link your existing libstdc++.so file to the anaconda environment by ln -sf /usr/lib/x86_64-linux-gnu/libstdc++.so.6 {path to anaconda}/envs/ganimator/bin/../lib/libstdc++.so.6.
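Before linking, it can help to confirm that the system library actually provides the missing version. The sketch below is one way to check, under the assumption that version tags are stored in the shared library as null-terminated strings (as in typical ELF builds); the function name is hypothetical.

```python
from pathlib import Path


def provides_glibcxx(lib_path, version="3.4.29"):
    """Return True if the library file contains the given GLIBCXX version tag."""
    # Append the terminator so that e.g. 3.4.2 does not match 3.4.29.
    tag = f"GLIBCXX_{version}".encode() + b"\x00"
    return tag in Path(lib_path).read_bytes()
```

For example, provides_glibcxx("/usr/lib/x86_64-linux-gnu/libstdc++.so.6") should return True for a library that is suitable for linking.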

There is a known bug in the version of pytorch used in this project. If you encounter AttributeError: module 'distutils' has no attribute 'version', downgrade your setuptools to version 59.5.0.

Alternatively, you may install the following packages (and their dependencies) manually:

- pytorch == 1.10

- tensorboard >= 2.6.0

- tqdm >= 4.62.3

- scipy >= 1.7.3
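If you install the packages manually, a quick check of the installed versions against the list above can catch mismatches early. This is a minimal sketch using the standard library's importlib.metadata; the helper names are hypothetical, and it relies on PyTorch being published on pip under the distribution name torch.

```python
import re
from importlib.metadata import PackageNotFoundError, version

# Requirements from the list above, keyed by pip distribution name.
REQUIREMENTS = {
    "torch": ("==", (1, 10)),
    "tensorboard": (">=", (2, 6, 0)),
    "tqdm": (">=", (4, 62, 3)),
    "scipy": (">=", (1, 7, 3)),
}


def parse_version(text):
    """Turn '1.10.2+cu113' into (1, 10, 2), ignoring non-numeric suffixes."""
    parts = []
    for piece in text.split("+")[0].split("."):
        match = re.match(r"\d+", piece)
        if match is None:
            break
        parts.append(int(match.group()))
    return tuple(parts)


def check(requirements=REQUIREMENTS, lookup=version):
    """Return {name: 'ok' | 'mismatch' | 'missing'} for each requirement."""
    status = {}
    for name, (op, wanted) in requirements.items():
        try:
            found = parse_version(lookup(name))
        except PackageNotFoundError:
            status[name] = "missing"
            continue
        if op == "==":
            ok = found[: len(wanted)] == wanted
        else:
            # Pad short versions with zeros so that 2.6 compares equal to 2.6.0.
            padded = found + (0,) * (len(wanted) - len(found))
            ok = padded >= wanted
        status[name] = "ok" if ok else "mismatch"
    return status
```

Calling check() inside the activated environment returns one status per package.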

We provide several pretrained models for various characters. Download the pretrained model from Google Drive. Please extract the downloaded file and put the pre-trained directory directly under the root of the ganimator directory.

Run demo.sh. The results for Salsa and Crab Dance will be saved in ./results/pre-trained/{name}/bvh. The result after the foot contact fix will be saved as result_fixed.bvh. You may visualize the generated bvh files with Blender.

Similarly, running python demo.py --save_path=./pre-trained/{name of pre-trained model} generates the result for the given pretrained model.
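To run the demo for every downloaded model in one go, the command above can be built once per model directory. This is a small sketch, assuming each subdirectory of ./pre-trained holds one model; the helper name is hypothetical and only the --save_path flag from the command above is used.

```python
from pathlib import Path


def demo_commands(pretrained_root="./pre-trained"):
    """Return one demo.py command (as an argument list) per model directory."""
    root = Path(pretrained_root)
    commands = []
    # Plain files in the root are skipped; only directories count as models.
    for model_dir in sorted(p for p in root.iterdir() if p.is_dir()):
        commands.append(["python", "demo.py", f"--save_path={model_dir.as_posix()}"])
    return commands
```

Each returned command can then be passed to subprocess.run(cmd, check=True) from the root of the repository.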

A separate module is required for evaluation. Before starting with evaluation, please refer to the installation instructions here.

Use the following command to evaluate a trained model:

python evaluate.py --save_path={path to trained model}

In particular, python evaluate.py --save_path=./pre-trained/gangnam-style yields the quantitative results of our full approach reported in Tables 1 and 2 of the paper.

We provide instructions for retraining our model.

We include several animations under ./data directory.

Here is an example for training the crab dance animation:

python train.py --bvh_prefix=./data/Crabnew --bvh_name=Crab-dance-long --save_path={save_path}

You may specify the training device with --device=cuda:0, using pytorch's device convention.

For a customized bvh file, specify the joint names that should be involved in the generation and the contact names in ./bvh/skeleton_database.py, and set the corresponding bvh_prefix and bvh_name parameters for train.py.

A conditional generator takes the motion of one or several given joints as constraints and generates animation complying with the constraints. Before training a conditional generator, a regular generator must be trained on the same training sequence.

Here is an example for training a conditional generator for the walk-in-circle motion:

python train.py --bvh_prefix=./data/Joe --bvh_name=Walk-In-Circle --save_path={save_path} --skeleton_aware=1 --path_to_existing=./pre-trained/walk-in-circle --conditional_generator=1

This example assumes that a pre-trained regular generator is stored in ./pre-trained/walk-in-circle, which is specified by the --path_to_existing parameter.

This repository contains code that uses the motion of the root joint as the condition. However, it is also possible to customize the conditional joints. This can be done by modifying the get_layered_mask() function in models/utils.py. It takes the --conditional_mode parameter as its first parameter and returns the corresponding channel indices in the tensor representation.
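To illustrate what "channel indices" means here, the sketch below maps a set of joints to flat channel indices. It is purely illustrative and is not the get_layered_mask() implementation: the helper name, the contiguous per-joint layout, and the default of 6 channels per joint are all assumptions, and the actual tensor layout in models/utils.py may differ (for example, through extra channels for root position or foot contact).

```python
def joint_channel_indices(joint_ids, channels_per_joint=6):
    """Return the flat channel indices occupied by the given joints.

    Assumes (hypothetically) that joint j occupies the contiguous range
    [j * channels_per_joint, (j + 1) * channels_per_joint).
    """
    indices = []
    for j in joint_ids:
        start = j * channels_per_joint
        indices.extend(range(start, start + channels_per_joint))
    return indices
```

A custom conditional mode would return such a list for the joints that should act as constraints.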

When trained with two or more sequences, our framework generates a mixed motion of the input animations.

This is an example for training on multiple sequences using --multiple_sequence=1:

python train.py --bvh_prefix=./data/Elephant --bvh_name=list.txt --save_path={save_path} --multiple_sequence=1

The list.txt in ./data/Elephant contains the names of the sequences to be trained.

We also provide a pre-trained model for the motion mixing of the elephant motions:

python demo.py --save_path=./pre-trained/elephant

Instead of generating the motion from random noise, we can perform key-frame editing by providing the edited key-frames in the coarsest level.

This is an example for keyframe editing:

python demo.py --save_path=./pre-trained/baseball-milling --keyframe_editing=./data/Joe/Baseball-Milling-Idle-edited-keyframes.bvh

The --keyframe_editing parameter points to the bvh file containing the edited key-frames, which should have the same temporal resolution as the coarsest level. Note that in this specific example, the --ratio parameter for the model is set to 1/30, leading to a sparser key-frame setting that makes editing easier.
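As a rough guide to how many key-frames the edited file needs, the following sketch estimates the length of the coarsest level. It assumes that --ratio is the fraction of the full sequence length kept at the coarsest level and that the result is rounded to the nearest frame; both are assumptions for illustration, so check the training code for the exact rule.

```python
def coarsest_length(n_frames, ratio=1 / 30):
    """Estimate the number of frames at the coarsest level (at least one)."""
    return max(1, round(n_frames * ratio))
```

Under these assumptions, a 300-frame sequence with a ratio of 1/30 would need about 10 edited key-frames.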

Similarly, when the model is trained on style input and the coarsest level is given by the content input, our model can achieve style transfer.

This is an example for style transfer:

python demo.py --save_path=./pre-trained/proud-walk --style_transfer=./data/Xia/normal.bvh

Note that the content of the content input must be similar to the content of the style input in order to generate high-quality results, as discussed in the paper.

When a pre-defined motion of part of the skeleton (e.g., root trajectory) is given, a conditional generation model can produce animation complying with given constraints.

This is an example for conditional generation:

python demo.py --save_path=./pre-trained/conditional-walk --conditional_generation=./data/Joe/traj-example.bvh

This pre-trained model takes the position and orientation of the root joint from traj-example.bvh. If the conditional source file is not specified, the script will sample a trajectory from a pre-trained regular generator as the condition.

Additionally, the --interactive=1 option generates the animation in interactive mode. In this mode, the condition information is fed into the generator gradually. It is conceptually an interactive generation, but not an interactive demo that can be controlled with a keyboard or gamepad.

For more details about specifying conditional joints, please refer to Training a Conditional Generator.

The code in models/skeleton.py is adapted from deep-motion-editing by @kfiraberman, @PeizhuoLi and @HalfSummer11.

Part of the code in bvh is adapted from the work of Daniel Holden.

Some of the training examples are taken from Mixamo and Truebones.

If you use this code for your research, please cite our paper:

@article{li2022ganimator,
  author = {Li, Peizhuo and Aberman, Kfir and Zhang, Zihan and Hanocka, Rana and Sorkine-Hornung, Olga},
  title = {GANimator: Neural Motion Synthesis from a Single Sequence},
  journal = {ACM Transactions on Graphics (TOG)},
  volume = {41},
  number = {4},
  pages = {138},
  year = {2022},
  publisher = {ACM}
}