Universal Interpreter

A solution that helps differently abled people (those who are deaf, mute, blind, or any combination of these) using image recognition and AI/ML.

Inspiration

The world we live in is dominated by visual and audio interfaces, which can make it a difficult place for differently abled people. Our aim is to use those same dominant technologies to help them overcome these challenges.

Requirements

- Python

- Keras

- TensorFlow

- OpenCV

- Web development/Android tools for UI/Testing

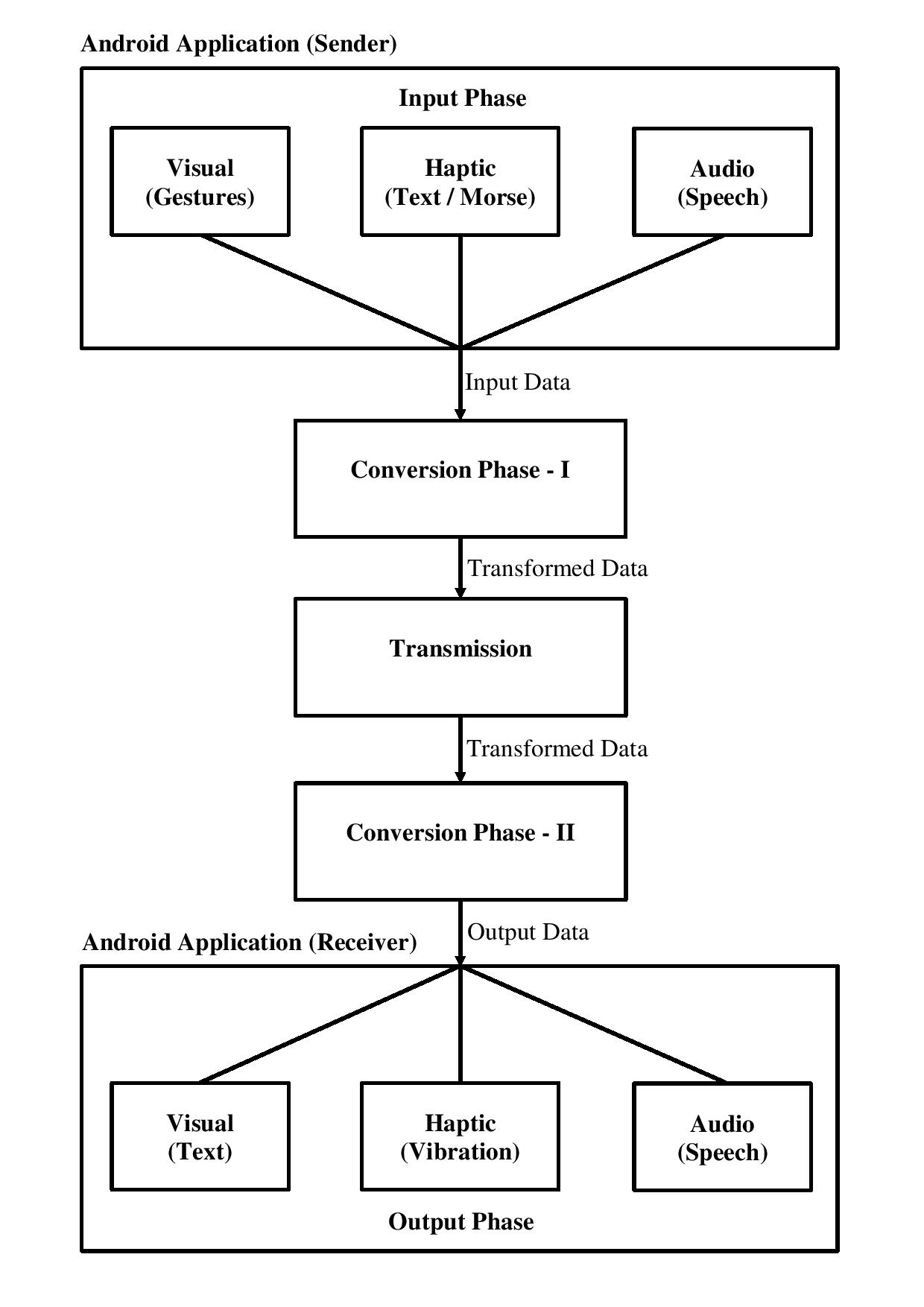

Project Flow

Phase 1 - Input Phase

Collect input such as gestures (sign language), taps (Morse code), or direct speech (voice) from any client device.
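As a rough illustration of the tap input path, the sketch below turns measured tap timings into a Morse string. It assumes the client device reports how long each tap was held and how long the pause before the next tap lasted. The function name `taps_to_morse`, the `unit` length of 0.2 seconds, and the thresholds are hypothetical choices based on standard Morse timing (dot = 1 unit, dash = 3 units, letter gap = 3 units, word gap = 7 units), not part of the project itself.

```python
def taps_to_morse(presses, gaps, unit=0.2):
    """Convert tap timings (in seconds) into a Morse string.

    presses: how long each tap was held down.
    gaps:    the pause after each tap except the last,
             so len(gaps) must equal len(presses) - 1.
    unit:    assumed length of one Morse time unit (hypothetical default).
    """
    if len(gaps) != max(len(presses) - 1, 0):
        raise ValueError("expected exactly one gap between consecutive taps")

    out = []
    for i, press in enumerate(presses):
        # A short press is a dot, a long press is a dash.
        out.append("." if press < 2 * unit else "-")
        if i < len(gaps):
            gap = gaps[i]
            if gap >= 5 * unit:
                out.append(" / ")   # long pause: next word
            elif gap >= 2 * unit:
                out.append(" ")     # medium pause: next letter
            # short pause: same letter, no separator
    return "".join(out)


if __name__ == "__main__":
    presses = [0.2, 0.2, 0.2, 0.6, 0.6, 0.6, 0.2, 0.2, 0.2]
    gaps = [0.2, 0.2, 0.6, 0.2, 0.2, 0.6, 0.2, 0.2]
    print(taps_to_morse(presses, gaps))  # ... --- ...
```

In practice the unit length would probably need to adapt to each user's tapping speed; a fixed value is used here only to keep the sketch short.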

Phase 2 - Conversion and Transmission Phase

Convert the input data into a common intermediate form (such as Morse code or a digital encoding), then transmit and process it.
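One way to picture the conversion step is a plain text-to-Morse round trip. The sketch below assumes that recognized input has already been reduced to text; the names `MORSE`, `encode`, and `decode` are illustrative, and the convention of separating letters with a space and words with ` / ` is an assumption rather than something the project specifies.

```python
# International Morse code for letters and digits.
MORSE = {
    "A": ".-",   "B": "-...", "C": "-.-.", "D": "-..",  "E": ".",
    "F": "..-.", "G": "--.",  "H": "....", "I": "..",   "J": ".---",
    "K": "-.-",  "L": ".-..", "M": "--",   "N": "-.",   "O": "---",
    "P": ".--.", "Q": "--.-", "R": ".-.",  "S": "...",  "T": "-",
    "U": "..-",  "V": "...-", "W": ".--",  "X": "-..-", "Y": "-.--",
    "Z": "--..",
    "0": "-----", "1": ".----", "2": "..---", "3": "...--", "4": "....-",
    "5": ".....", "6": "-....", "7": "--...", "8": "---..", "9": "----.",
}
REVERSE = {code: char for char, code in MORSE.items()}


def encode(text):
    """Encode text as Morse; characters without a code are skipped."""
    words = text.upper().split()
    return " / ".join(
        " ".join(MORSE[ch] for ch in word if ch in MORSE) for word in words
    )


def decode(morse):
    """Decode a Morse string back to upper-case text; unknown codes become '?'."""
    words = morse.strip().split(" / ")
    return " ".join(
        "".join(REVERSE.get(symbol, "?") for symbol in word.split())
        for word in words
    )


if __name__ == "__main__":
    print(encode("SOS"))          # ... --- ...
    print(decode("... --- ..."))  # SOS
```

Because the intermediate form is just a short string of dots, dashes, and separators, it is cheap to transmit and can be rendered as sound, vibration, or text on the receiving side.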

Phase 3 - Output Phase

Present the output data visually, through vibrations, or as speech, depending on the recipient:

- Voice/Morse for the Blind

- Sign-language/Text for the Deaf

- Sign-language/Voice for the Mute

- Morse code wherever none of the above is possible
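The selection rules above could be sketched as a small lookup. Everything here is illustrative: the names `PREFERRED` and `choose_outputs`, the channel labels, and in particular the rule for combined conditions (keep only the channels that every listed condition allows, and fall back to Morse when nothing remains) are assumptions about how the list might be applied, not a description of the project's actual logic.

```python
# Preferred output channels per condition, taken from the list above.
PREFERRED = {
    "blind": ["voice", "morse"],
    "deaf": ["sign_language", "text"],
    "mute": ["sign_language", "voice"],
}


def choose_outputs(conditions):
    """Return the output channels suitable for every listed condition.

    Assumed rule: intersect the preferred channels of all conditions and
    fall back to Morse (for example as vibrations) when nothing is shared.
    """
    candidates = None
    for condition in conditions:
        options = PREFERRED[condition]  # raises KeyError for unknown conditions
        if candidates is None:
            candidates = list(options)
        else:
            candidates = [c for c in candidates if c in options]
    return candidates or ["morse"]


if __name__ == "__main__":
    print(choose_outputs(["blind"]))          # ['voice', 'morse']
    print(choose_outputs(["deaf", "mute"]))   # ['sign_language']
    print(choose_outputs(["blind", "deaf"]))  # ['morse']
```

Keeping the rules in a plain dictionary means new conditions or channels can be added without touching the selection function.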