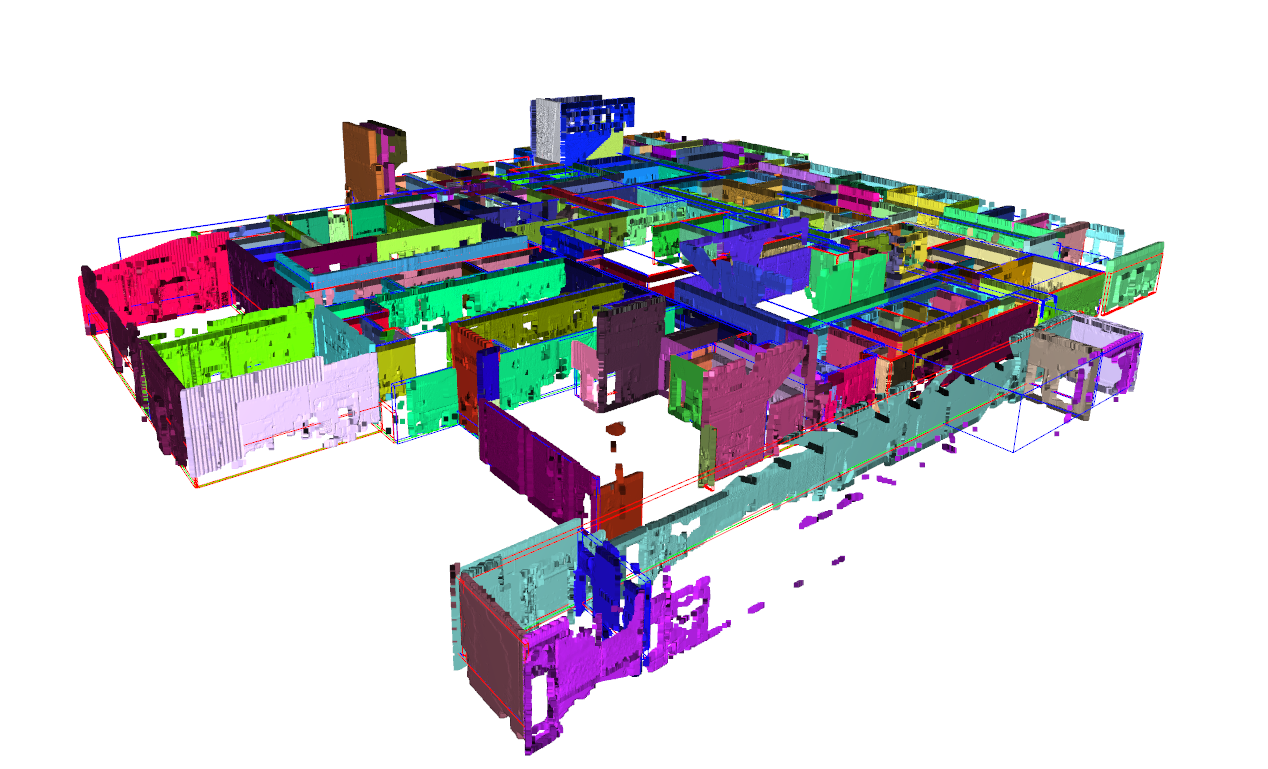

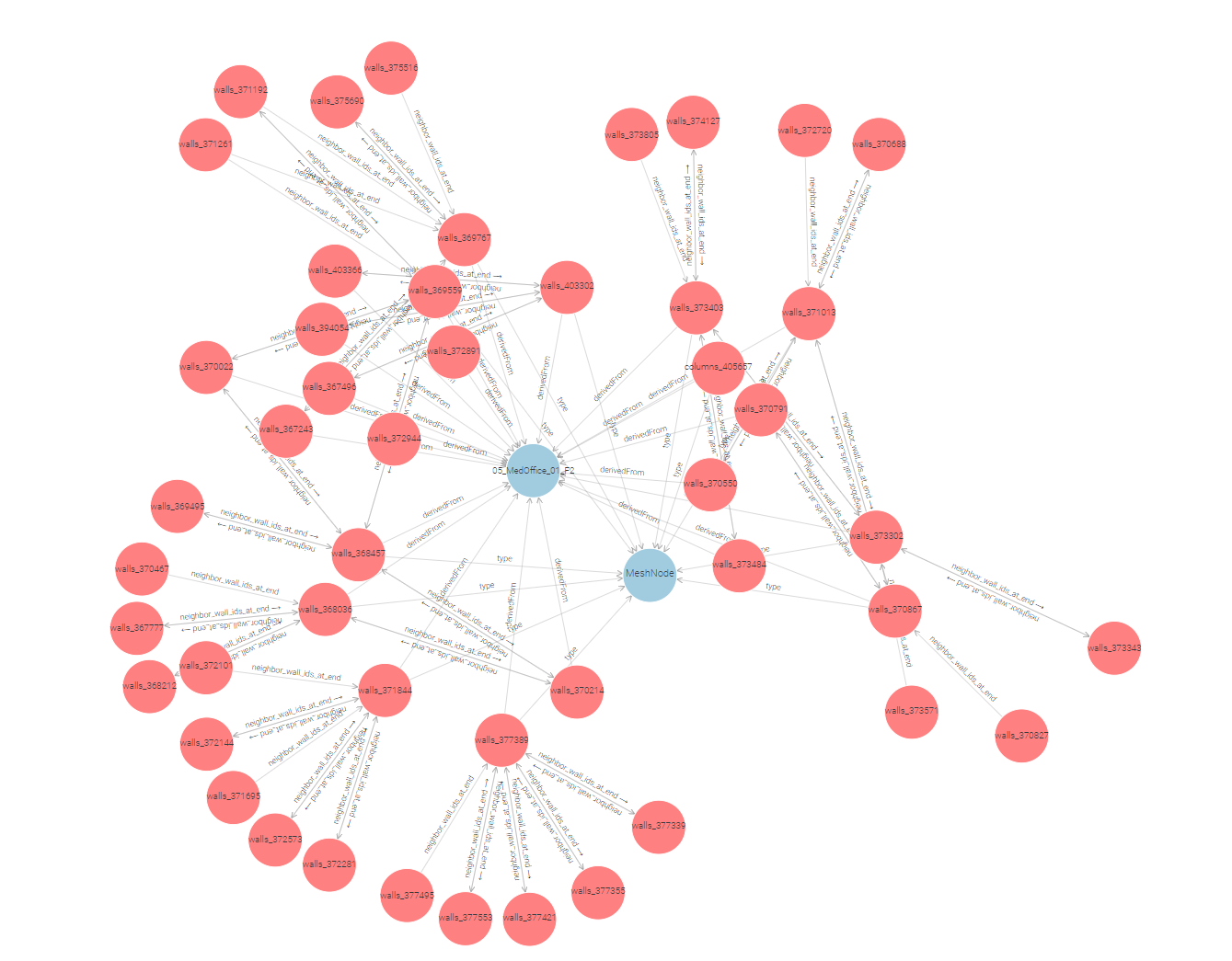

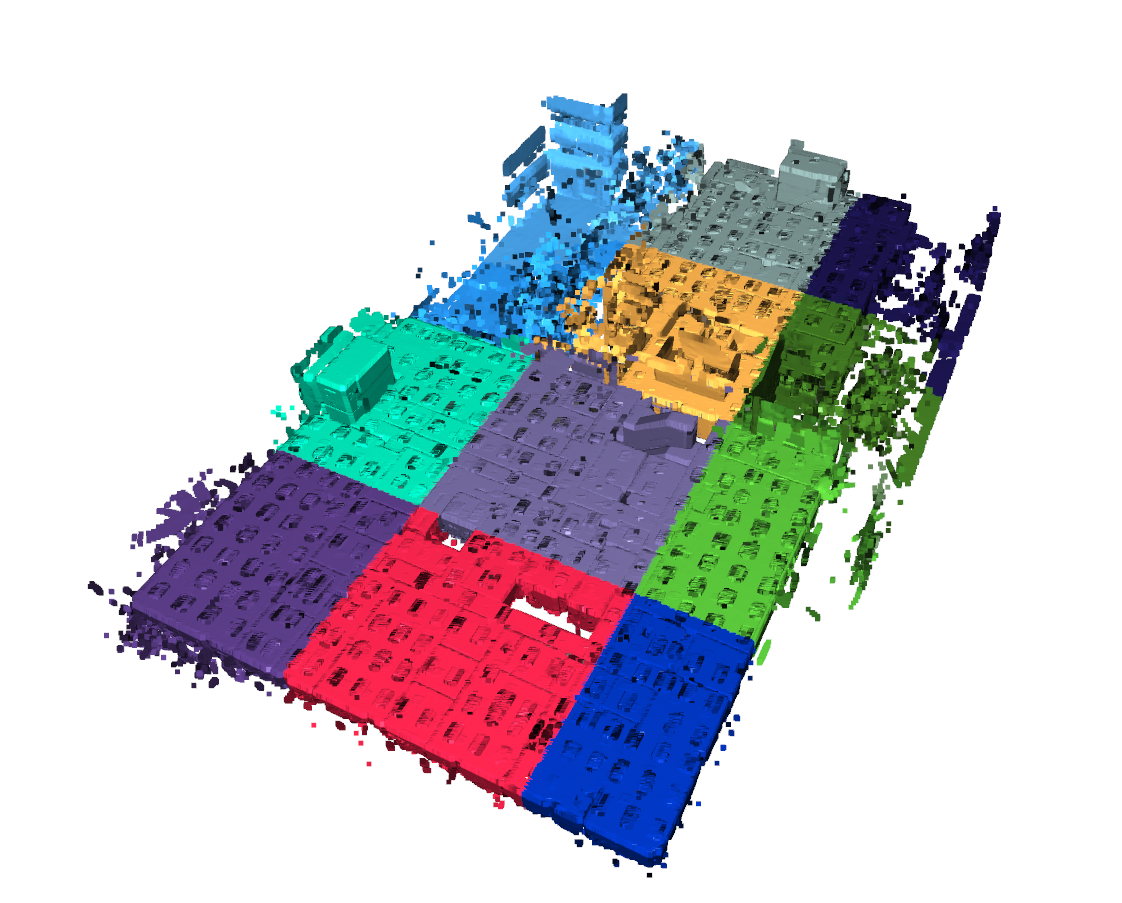

The preprocessing includes subsampling the point cloud to a resolution of 0.01 m and parsing the training data JSON files into triangle mesh objects. The result is an .obj file with submeshes for each wall, column and door element (see Figure 1). Additionally, we segment the point clouds according to these elements to form the ground truth for the instance segmentation training (Figure 2). We also generate RDF graphs with metric metadata of each point cluster and link them to the BIM objects so they can be tracked throughout the reconstruction process. Take a look at our GEOMAPI toolbox to read more about how this is done.
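The subsampling step can be sketched as a simple voxel-grid filter. This is a minimal, dependency-free illustration and not the repository's implementation; the function name `voxel_subsample` and the choice to average the points inside each voxel are assumptions.

```python
from collections import defaultdict
from math import floor


def voxel_subsample(points, voxel_size=0.01):
    """Return one averaged point per occupied voxel (hypothetical helper).

    points: iterable of (x, y, z) tuples in metres.
    voxel_size: edge length of the voxel grid in metres (0.01 m in the text).
    """
    buckets = defaultdict(list)
    for x, y, z in points:
        # Integer voxel index of the point along each axis.
        key = (floor(x / voxel_size), floor(y / voxel_size), floor(z / voxel_size))
        buckets[key].append((x, y, z))
    # Replace every bucket by the centroid of its points.
    return [
        tuple(sum(axis) / len(pts) for axis in zip(*pts))
        for pts in buckets.values()
    ]


cloud = [(0.001, 0.001, 0.001), (0.003, 0.003, 0.003), (0.5, 0.5, 0.5)]
subsampled = voxel_subsample(cloud)
```

The first two points fall into the same 1 cm voxel and are merged, so `subsampled` holds two points.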

In the detection step, we compute the instance segmentation of the primary (walls, ceilings, floors, columns) and secondary (doors) structure classes. Two scalar fields are assigned to the unstructured point clouds. First, a class label is computed for every point, chosen from a total of 7 classes (0. Floors, 1. Ceilings, 2. Walls, 3. Columns, 4. Doors, 5. Beams, 255. Unassigned). Second, an object label is assigned to every point and a JSON file is computed with the 3D information of the detected objects.
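As a small illustration of the class-label scalar field, the sketch below maps the label ids listed above to names and computes the share of points per class. The dictionary `CLASS_NAMES` and the helper `class_shares` are hypothetical names used only for this example.

```python
from collections import Counter

# Label ids as listed in the text; the names are lower-cased for convenience.
CLASS_NAMES = {
    0: "floors",
    1: "ceilings",
    2: "walls",
    3: "columns",
    4: "doors",
    5: "beams",
    255: "unassigned",
}


def class_shares(labels):
    """Return the percentage of points per class for a per-point label list."""
    counts = Counter(labels)
    total = len(labels)
    return {
        CLASS_NAMES.get(label, f"unknown_{label}"): 100.0 * n / total
        for label, n in counts.items()
    }


shares = class_shares([0, 0, 2, 255])
```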

- T1. Semantic Segmentation: PTV3+PPT is an excellent model for segmenting classes such as walls, ceilings and floors in unstructured point clouds. This is confirmed by a validation on the partitioned training data, with an mIoU of 89.4% and an mAcc of 91.6% over these classes. Theoretically, it should also work well for columns as they have distinct geometric signatures. However, columns are heavily occluded and only make up 0.7% of the scene (Table 1). With training results stagnating at 40%, we combined it with a vision approach in T2. The doors were expected to heavily underperform (42% mIoU with 0.9% representation) as they often represent voids instead of geometry and have a poor geometric signature overall. For this class, we instead rely solely on vision transformers once the walls have been found. Other issues are discussed in our paper.

Table 1: Summary statistics and evaluation results for different classes

| Class | Mean (%) | Std Dev (%) | Count parts | mIoU (%) | mAcc (%) |
|---|---|---|---|---|---|
| floors | 12.5 | 4.8 | 41 | 92.5 | 95.0 |
| ceilings | 17.7 | 6.9 | 40 | 92.7 | 94.1 |
| walls | 30.9 | 8.3 | 40 | 82.9 | 85.8 |
| columns | 0.7 | 0.4 | 12 | 38.6 | 40.1 |
| doors | 0.9 | 0.5 | 39 | 42.9 | 58.5 |
| unassigned | 40.6 | 17.7 | 42 | 91.3 | 100.0 |

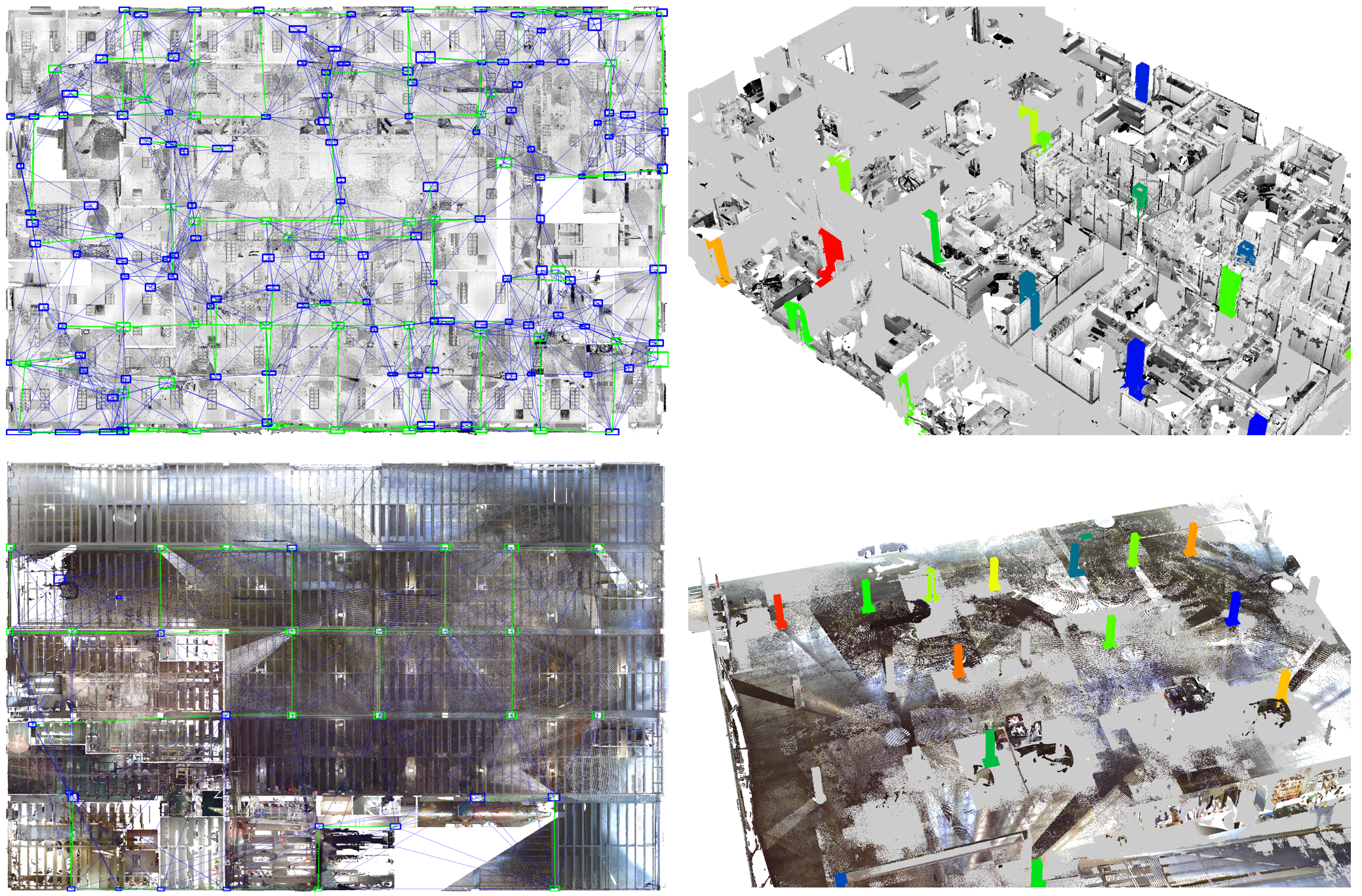

- T2. Column Detection: Given the poor performance of the geometric detection, we opted to support it with a vision approach and leverage the grid pattern of structural elements. We trained a YOLOv8 model on the 8 training datasets and maximised the recall of the detection. The candidate columns were then processed in 3D and tested for their detection score, grid compliance and point cloud signature. The detection rate rose to an 86.1% F1-score at the 5 cm range with an mIoU of 79.6%, doubling the initial geometric detection. This shows the power of multi-modal data processing. More results will be part of another paper.

Figure 6: 2D vision transformer for column detection with the new grid compliance conditioning, resulting in 79.6% mIoU.

- T3. Object Detection: As doors are unlikely to be found in a point cloud, and marking them as ground truth for a vision transformer is a hassle, we opted to detect this class zero-shot with GroundingDINO since doors are a general class. As doors are exclusively found in walls, we combine this task with the T8 door reconstruction (see results there), which waits for the T6 wall reconstruction. Given the bounding boxes of the walls, we computed orthographic imagery of the walls and queried it in GroundingDINO. To improve the detection, we queried both sides of the walls and compared the results.

- T4. Filter and cluster the results: We compute clusters from the detected instances using DBSCAN and RANSAC plane fitting since most of the objects of interest are planar. To improve the detection rate, we impose the following dimensional constraints on the detected instances:

- Wall clusters: 0.08 m < w < 0.7 m

- Column clusters: 0.1 m < w < 1.5 m and 0.1 m < l < 1.5 m

- Door clusters: 1.5 m < h < 2.5 m and 0.5 m < w < 1.5 m
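The dimensional constraints above can be expressed as a small lookup table. The following is a minimal sketch, assuming each cluster has already been measured along the named dimensions; `LIMITS` and `passes_constraints` are hypothetical names, not part of the repository.

```python
# Open intervals (lower, upper) in metres, copied from the constraints above.
LIMITS = {
    "wall": {"w": (0.08, 0.7)},
    "column": {"w": (0.1, 1.5), "l": (0.1, 1.5)},
    "door": {"h": (1.5, 2.5), "w": (0.5, 1.5)},
}


def passes_constraints(label, dims):
    """Check a cluster's dimensions against the limits of its class.

    label: class name, e.g. "wall".
    dims: dict of measured dimensions in metres, e.g. {"w": 0.2}.
    Classes without limits are accepted unchanged.
    """
    limits = LIMITS.get(label)
    if limits is None:
        return True
    # Every constrained dimension must lie strictly inside its interval.
    return all(low < dims[name] < high for name, (low, high) in limits.items())


clusters = [
    ("wall", {"w": 0.2}),
    ("door", {"h": 2.0, "w": 2.0}),  # too wide for a door
    ("column", {"w": 0.4, "l": 0.4}),
]
kept = [label for label, dims in clusters if passes_constraints(label, dims)]
```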

In the second step, we compute the parametric information and geometries of the BIM elements. Per convention, BIM models are reconstructed hierarchically, starting from the IfcBuildingStorey elements, followed by the IfcWallStandardCase and IfcColumn elements. Once the primary building elements are established, the secondary building elements (IfcDoor), non-metric elements (IfcSpace) and wall detailing (IfcOpeningElement) are reconstructed. To this end, a scene graph is constructed that links the different elements together. However, as the competition requires very specific geometries, we also generate the necessary geometry for the competition.

Figure 8: Reconstruction procedure with T5. IfcBuildingStorey, T6. IfcWallStandardCase, T7. IfcColumn and T9. IfcSpace.

- T5. IfcBuildingStorey: For the reference levels, we consider the architectural levels (Finish Floor Level or FFL) since we are modeling the visible construction elements in the architectural domain. The highest level is represented by the ceilings since no other geometries are captured.

- IfcLocalPlacement (m): center point of the IfcBuildingElement

- FootPrint (m): 2D lineset or parametric 2D orientedBoundingBox (c_x,c_y,c_z,R_z,s_u,s_v)

- Elevation (m): c_z

- Resource (Open3D.TriangleMesh): plane of the storey

| Threshold (m) | Metric | Columns (%) | Doors (%) | Walls (%) |
|---|---|---|---|---|
| 0.05 | mIoU | 80.6 | 39.6 | 84.3 |
| 0.05 | F1 | 81.4 | 41.5 | 81.3 |
| 0.1 | mIoU | 88.7 | 55.4 | 91.5 |
| 0.1 | F1 | 89.5 | 58.0 | 88.3 |
| 0.2 | mIoU | 91.7 | 60.1 | 97.2 |
| 0.2 | F1 | 92.5 | 63.1 | 93.9 |
| Overall Average | mIoU | 72.9 | 53.2 | 67.8 |
| Overall Average | F1 | 87.8 | 54.2 | 87.8 |

Figure 9: Reconstruction results with the mIoU and F1-scores over all 6 training datasets.

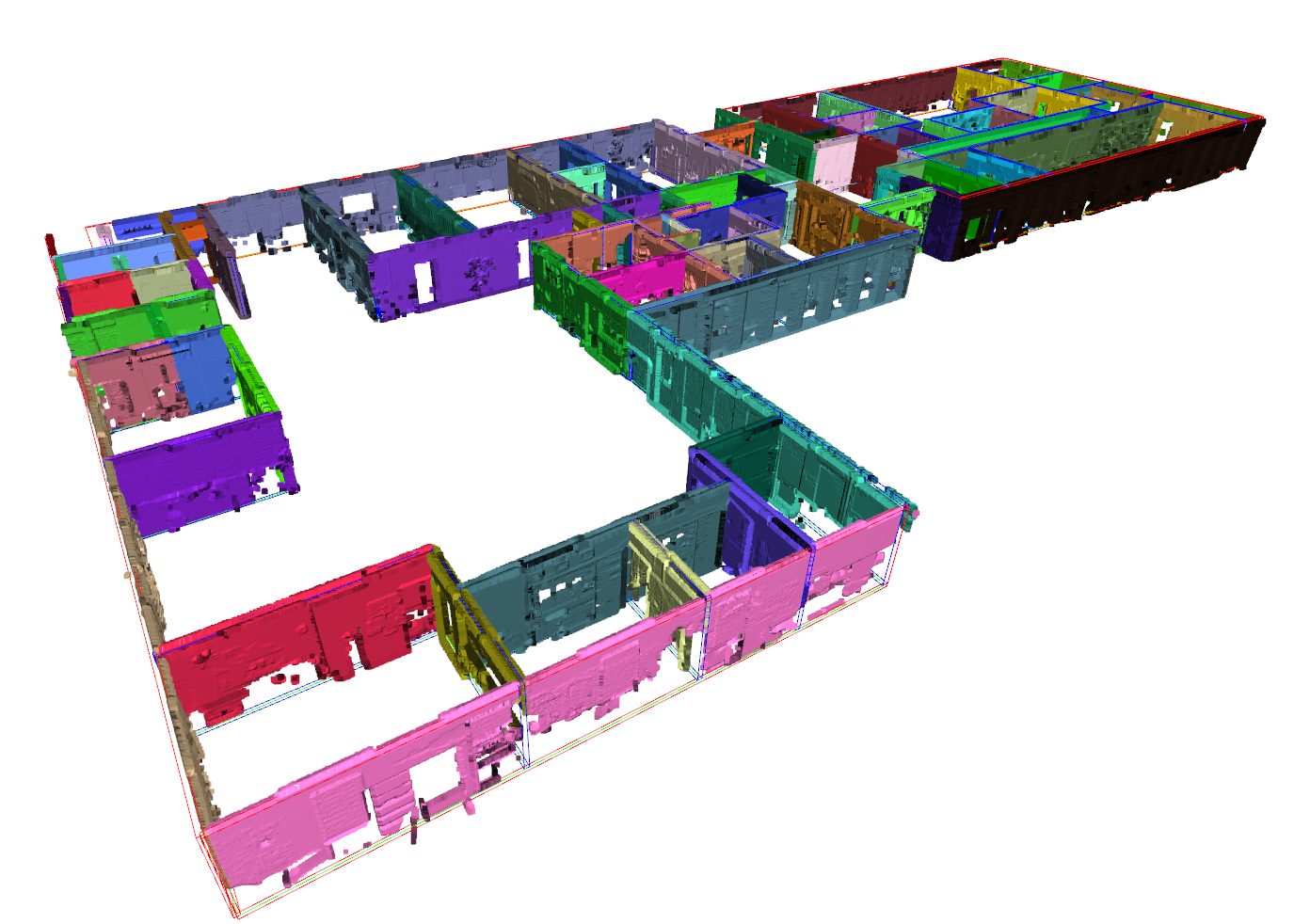

- T6. IfcWallStandardCase: Only straight walls are reconstructed in this repo (because they are the only type of wall in the challenge). For non-straight walls, look at our previous work. As such, only coplanar elements can contribute to the parameter estimation. Consequently, we follow a two-step procedure that first reconstructs the candidate wall geometries and then completes the topology. Additionally, we detect interior vs exterior walls and whether walls are single-faced (scanned only from one side) or double-faced, as each has a different default thickness. Lastly, the wall thickness is not clustered, although clustering is typical in a Scan-to-BIM project, to achieve the highest possible accuracy.

- IfcLocalPlacement (p_1,p_2): the two control points at both ends of the wall axis. Note that the wall axis is at the center of the wall. In the case of single-faced walls, the positioning of this axis is determined by the closest floor and ceiling geometries to correct the spawn location. This significantly improves the mIoU scores.

- Wall Thickness (m): We compute the orthogonal distance between the two dominant planes in the wall (this gave the best results)

- base constraint (URI): bottom reference level

- base offset (m): offset from the base constraint level to the bottom of the IfcBuildingElement. This is ignored in this benchmark as the CVPR data does not use this feature.

- top constraint (URI): top reference level

- top offset (m): offset from the top constraint level to the top of the IfcBuildingElement. This is ignored in this benchmark as the CVPR data does not use this feature.

- HasOpenings (URI): links through IfcRelVoidsElement to IfcOpeningElement objects that define holes in the wall.

- Resource (Open3D.TriangleMesh): OrientedBoundingBox of the wall
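The wall thickness parameter, defined above as the orthogonal distance between the two dominant planes, can be illustrated as follows. This is a sketch under assumptions: each plane is given as `(normal, d)` with `normal · x + d = 0` (the form returned by typical RANSAC plane fits), the two planes are close to parallel, and the function name `plane_distance` is hypothetical.

```python
from math import sqrt


def plane_distance(plane_a, plane_b):
    """Orthogonal distance between two (near-)parallel planes.

    Each plane is ((nx, ny, nz), d) with nx*x + ny*y + nz*z + d = 0.
    The normals do not need to be unit length or point the same way.
    """
    (normal_a, d_a), (normal_b, d_b) = plane_a, plane_b

    # Normalise both plane equations so the normals have unit length.
    norm_a = sqrt(sum(c * c for c in normal_a))
    norm_b = sqrt(sum(c * c for c in normal_b))
    unit_a = [c / norm_a for c in normal_a]
    unit_b = [c / norm_b for c in normal_b]
    d_a, d_b = d_a / norm_a, d_b / norm_b

    # Flip plane b if its normal points the opposite way (two wall faces
    # usually face away from each other).
    if sum(a * b for a, b in zip(unit_a, unit_b)) < 0:
        d_b = -d_b

    return abs(d_a - d_b)


# Two wall faces at x = 0 and x = 0.2 with opposing normals.
thickness = plane_distance(((1.0, 0.0, 0.0), 0.0), ((-2.0, 0.0, 0.0), 0.4))
```

Here `thickness` evaluates to approximately 0.2 m.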

Overall, the wall reconstruction is quite successful, with an average F1-score of 87.4% on the training data. However, the mIoU (67.4%) is significantly impacted by small differences in the axis placement, width and height of the walls. This is reflected in the 15% mIoU difference between the 0.05 m and 0.2 m ranges. In contrast, the topology has much less impact. The sensitivity to axis placement is especially impactful for walls since 60% of the walls are single-faced: 40% are exterior walls and the 18% interior walls are either too small, heavily occluded or only scanned from one side.

- T7. IfcColumn: The columns are reconstructed using RANSAC plane fitting on the dominant plane. The best-fit rectangle is reconstructed from the dominant plane with the following parameters. Note that only rectangular columns are considered as these are the only type of columns in the datasets.

- IfcLocalPlacement (c): center of column at the base of the column

- width (w)

- height (h)

- base constraint (URI): bottom reference level

- base offset (m): offset from the base constraint level to the bottom of the IfcBuildingElement

- top constraint (URI): top reference level

- top offset (m): offset from the top constraint level to the top of the IfcBuildingElement

- Resource (Open3D.TriangleMesh): cylinder or orientedBoundingBox

Overall, columns perform very well with the detector. The initial 40% detection rate was increased to 72.9% mIoU and 87.8% F1-score on the training data.

- T8. IfcDoor: Secondary IfcBuildingElement with the following parameters.

- width (m): w

- height (m): h

- IfcLocalPlacement (m): center of the door

- wall constraint (URI): link to reference wall

- Resource (Open3D.TriangleMesh): OrientedBoundingBox of the door

The door reconstruction reaches an average mIoU of 0.466771 and an average F1-score of 0.477599, which is approximately the same for each level.

- T9. IfcSpace: Non-metric element that is defined based on its bounding elements.

- BoundedBy (URI): link to slab and wall elements

- IfcBuildingStorey (URI): link to reference level

- Resource (Open3D.TriangleMesh): OrientedBoundingBox of the space (slab to slab)

- T10. IfcOpeningElement: These are child elements of IfcWallStandardCase that increase the detailing of the initial wall geometry. They define boolean subtraction operations between the geometric bodies of the wall element and the opening. They have the following parameters.

- Geometry (c_x,c_y,c_z,R_x,R_y,R_z,s_u,s_v,s_w): The easiest definition is an orientedBoundingBox orthogonal to the wall's axis. This geometry is defined by these parameters or by its 8 bounding points.

- Resource (Open3D.OrientedBoundingBox): OrientedBoundingBox of the opening

- HasOpenings (Inverse IfcRelVoidsElement URI)
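The two equivalent definitions of the opening geometry (nine parameters or 8 bounding points) can be converted into each other. The sketch below goes from parameters to corner points. It rests on assumptions the text does not state: the angles are in radians and the rotation is composed as R = Rz · Ry · Rx. The function name `obb_corners` is hypothetical.

```python
from itertools import product
from math import cos, sin, pi


def obb_corners(c_x, c_y, c_z, r_x, r_y, r_z, s_u, s_v, s_w):
    """Return the 8 corner points of an oriented bounding box.

    Assumes angles in radians and the rotation order R = Rz * Ry * Rx.
    """
    cx, sx = cos(r_x), sin(r_x)
    cy, sy = cos(r_y), sin(r_y)
    cz, sz = cos(r_z), sin(r_z)
    # Rows of the combined rotation matrix Rz * Ry * Rx.
    rot = (
        (cz * cy, cz * sy * sx - sz * cx, cz * sy * cx + sz * sx),
        (sz * cy, sz * sy * sx + cz * cx, sz * sy * cx - cz * sx),
        (-sy, cy * sx, cy * cx),
    )
    center = (c_x, c_y, c_z)
    corners = []
    for f_u, f_v, f_w in product((-0.5, 0.5), repeat=3):
        # Corner in the local box frame, then rotated and translated.
        local = (f_u * s_u, f_v * s_v, f_w * s_w)
        corners.append(tuple(
            center[i] + sum(rot[i][j] * local[j] for j in range(3))
            for i in range(3)
        ))
    return corners


axis_aligned = obb_corners(0, 0, 0, 0, 0, 0, 2, 4, 6)
rotated = obb_corners(0, 0, 0, 0, 0, pi / 2, 2, 4, 6)
```

With a 90 degree rotation about z, the local u and v extents swap between the world x and y axes.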