Paper Review on StyleSDF

This blog article reviews the paper StyleSDF: High-Resolution 3D-Consistent Image and Geometry Generation, published at CVPR 2022 [1]. We start with a brief introduction to the topic, followed by relevant concepts and some related works. We then discuss the implementation details in depth, followed by evaluations and results. Finally, we conclude the review by looking at the limitations and future work. You can check my presentation slides for this paper review here.

Introduction

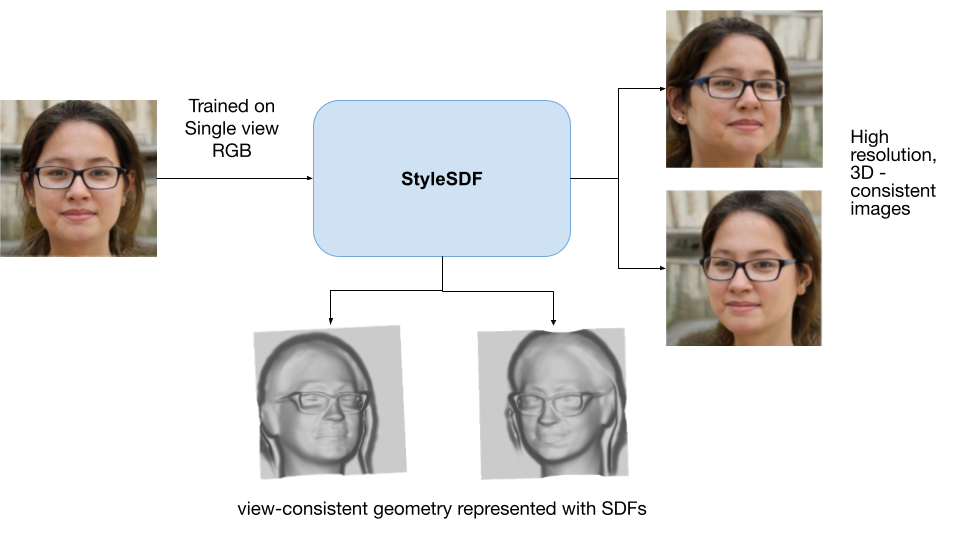

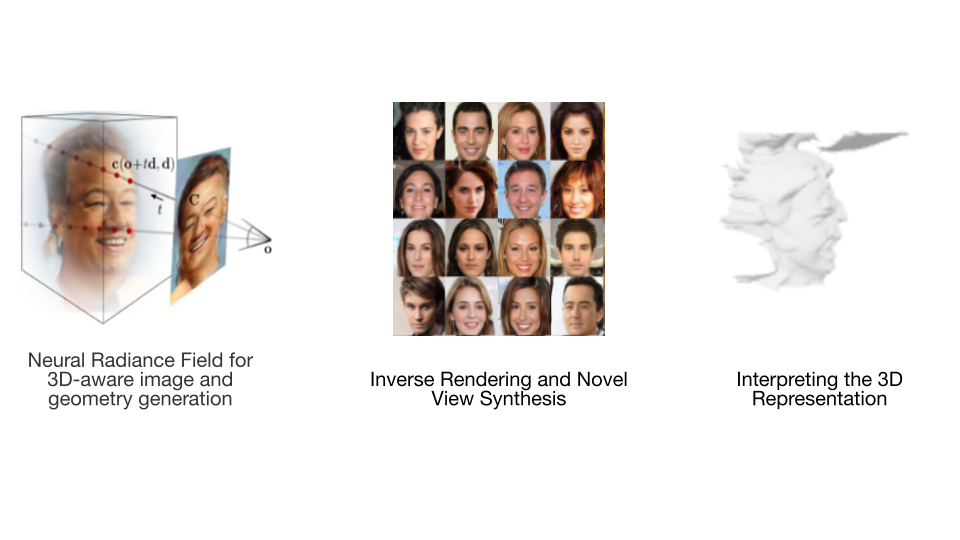

2D image generation, the task of generating new images, is becoming increasingly popular nowadays. Extending this generation task to another dimension brings us to the concept of 3D image generation. Techniques such as GRAF [2], HoloGAN [4] and Pi-GAN [3] have contributed a great deal to this area. Although these techniques have their pros and cons, 3D image generation overall poses two main challenges: high-resolution, view-consistent generation of RGB images, and detailed 3D shape generation. StyleSDF [1] attempts to address both challenges. It is a technique that generates high-resolution, 3D-consistent RGB images and detailed 3D shapes, with novel views that are globally aligned, while retaining the stylistic awareness that enables image editing. StyleSDF is trained on single-view RGB data only, and Fig.01 summarises its goal concisely.

Fig.01 The goal of StyleSDF

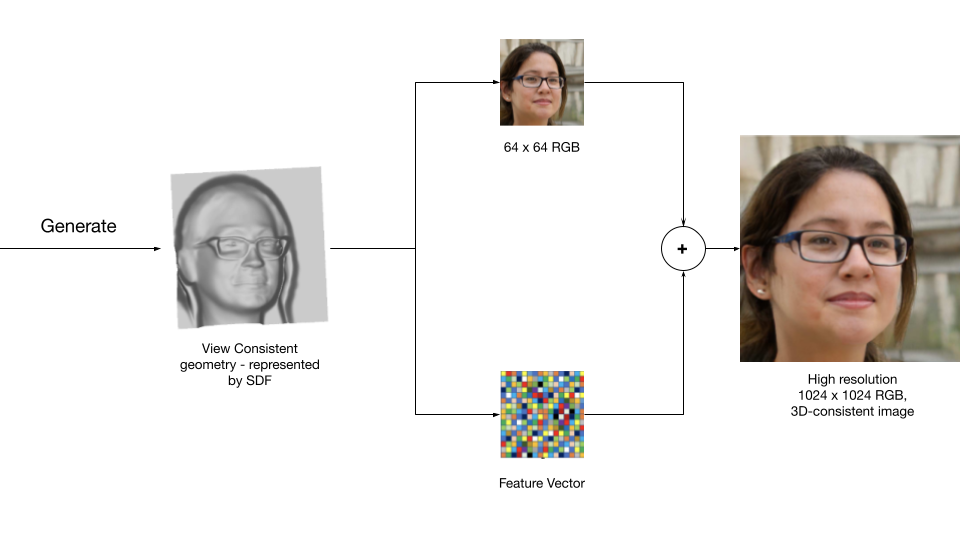

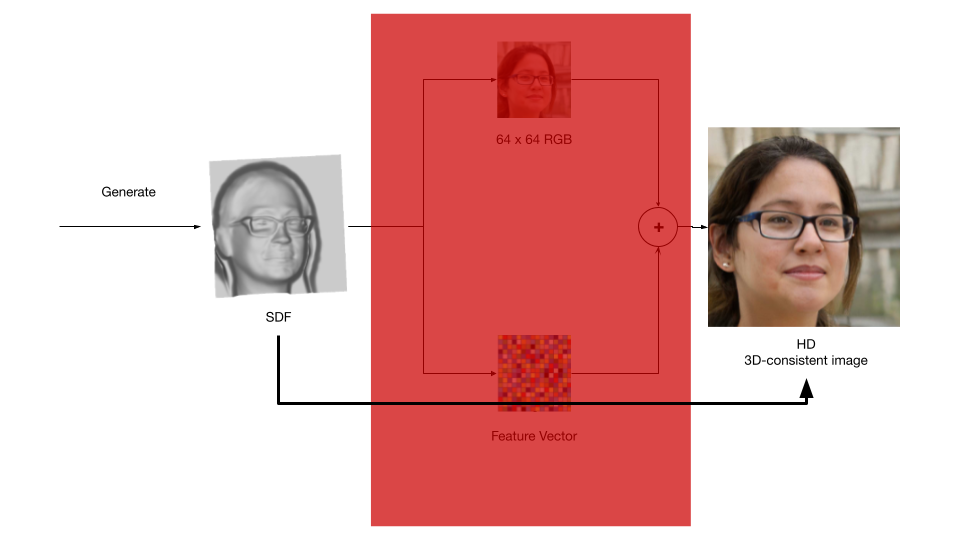

Fig.02 summarizes how StyleSDF is implemented to achieve its goals. StyleSDF first generates a view-consistent 3D shape, from which it extracts a 64x64 RGB image and its corresponding feature vector. It then combines the low-resolution RGB image and the feature vector to generate a high-resolution 1024x1024 RGB image that is 3D-consistent.

Fig.02 High level view of StyleSDF algorithm

Before discussing StyleSDF in more detail, let us look at some relevant topics and related works that will help us understand the technique more efficiently.

Relevant Topics

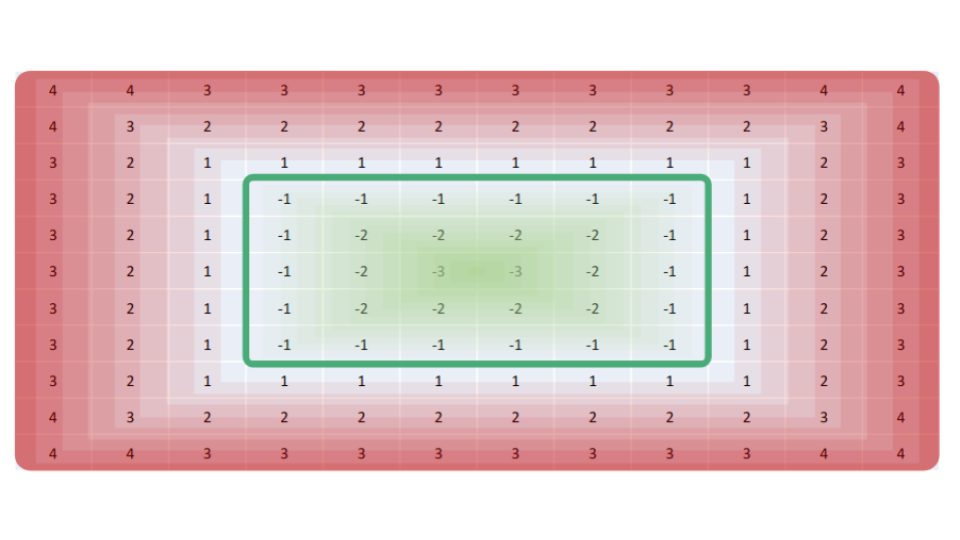

Signed Distance Field

A Signed Distance Field (SDF) is a 3D volumetric representation in which every 3D spatial coordinate has a value, called the distance value (Fig.03). This value can be positive, zero or negative, and its magnitude tells us how far we are from the nearest point on the surface. A zero distance value at a location indicates that the surface passes through that point. A positive value indicates the distance from the surface in the direction of the surface normal, and a negative value indicates the distance from the surface in the direction opposite to the surface normal.

Fig.03 Signed Distance Field
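To make the sign convention concrete, here is a minimal sketch of an analytic SDF for a sphere. It is only an illustration and not part of StyleSDF, where the SDF is predicted by a network; the function name `sphere_sdf` and its parameters are my own choices, and I assume the surface normal points outwards, so points outside the sphere get positive values.

```python
import math

def sphere_sdf(point, center=(0.0, 0.0, 0.0), radius=1.0):
    """Signed distance from `point` to the surface of a sphere.

    Assumption: the normal points outwards, so the value is positive
    outside the sphere, zero on its surface and negative inside it.
    """
    return math.dist(point, center) - radius

print(sphere_sdf((2.0, 0.0, 0.0)))  # point outside the sphere
print(sphere_sdf((1.0, 0.0, 0.0)))  # point on the surface
print(sphere_sdf((0.0, 0.0, 0.0)))  # point at the center, inside
```

The three sample points show the three cases from the description: positive distance, zero distance and negative distance.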

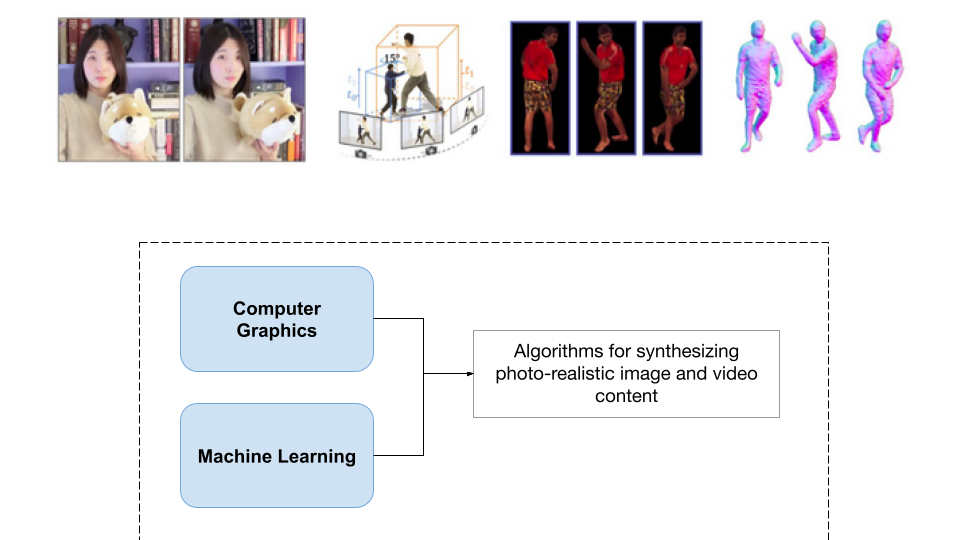

Neural Rendering

The concept of neural rendering combines ideas from classical computer graphics and machine learning to create algorithms for synthesizing images from real-world observations [5]. Neural rendering is 3D consistent by design and it enables applications such as novel viewpoint synthesis of a captured scene.

Fig.04 Neural Rendering

Neural Radiance Field - NeRF

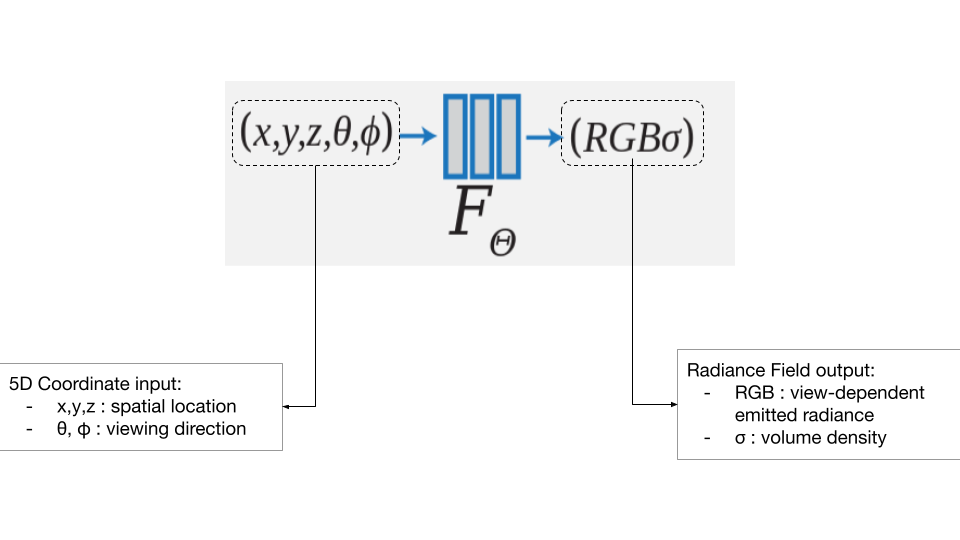

A Neural Radiance Field is a functional representation that jointly models geometry and appearance, and is able to model view-dependent effects [6]. Fig.05 shows the radiance field function. The function takes a 5D coordinate (3 spatial coordinates + a 2D viewing direction) as input and produces the radiance field values (view-dependent emitted radiance + volume density) as output.

Fig.05 Radiance Field Function

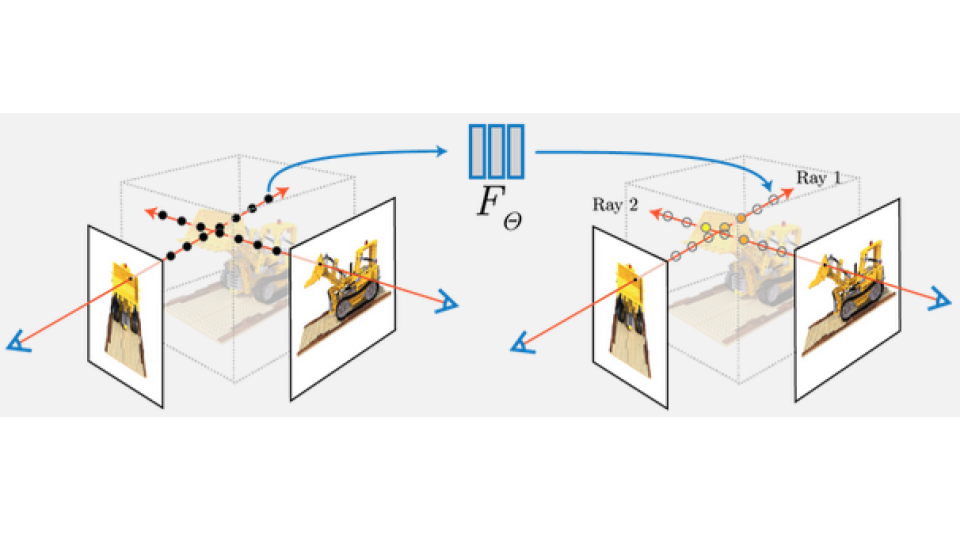

NeRF [6] is a technique that represents a 3D scene as a radiance field and uses volume rendering to synthesize novel views of that scene (Fig.06).

Fig.06 NeRF
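The volume rendering step can be sketched for a single ray as follows. This is a minimal illustration of the standard discrete approximation used by NeRF-style methods, not the authors' code; the function name `render_ray` and the idea of passing plain Python lists are assumptions made for readability.

```python
import math

def render_ray(densities, colors, deltas):
    """Composite samples along one ray into a single RGB pixel.

    densities: volume density at each sample, ordered front to back
    colors:    (r, g, b) emitted radiance at each sample
    deltas:    distance between consecutive samples
    """
    transmittance = 1.0            # fraction of light that has not been absorbed yet
    pixel = [0.0, 0.0, 0.0]
    for sigma, rgb, delta in zip(densities, colors, deltas):
        alpha = 1.0 - math.exp(-sigma * delta)   # opacity of this ray segment
        weight = transmittance * alpha           # contribution of this sample
        pixel = [p + weight * c for p, c in zip(pixel, rgb)]
        transmittance *= 1.0 - alpha
    return pixel

# Empty space (density 0) followed by a nearly opaque red sample.
print(render_ray([0.0, 50.0], [(0.0, 1.0, 0.0), (1.0, 0.0, 0.0)], [1.0, 1.0]))
```

In the usage line, the first sample has zero density and therefore contributes nothing, so the pixel takes the color of the dense sample behind it.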

Generative Adversarial Networks - GANs

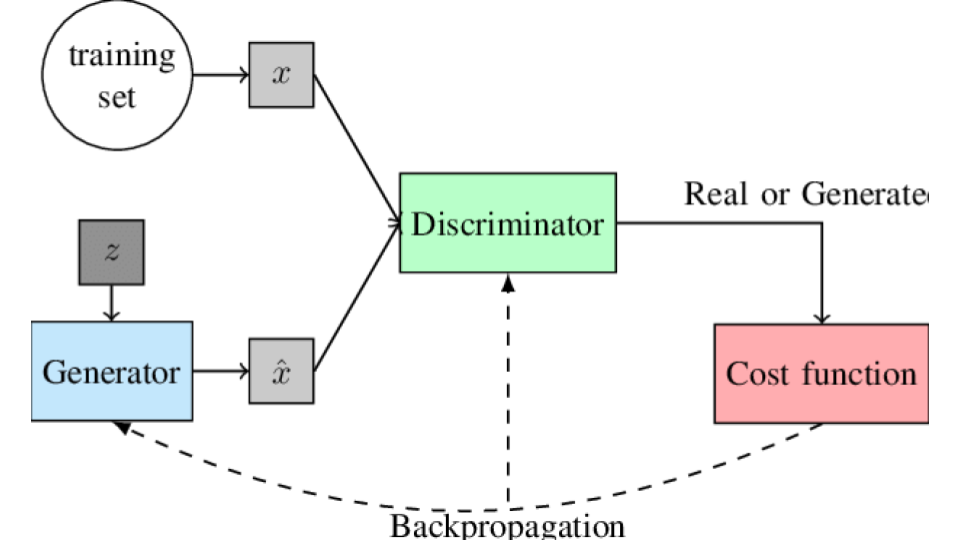

Generative Adversarial Networks, or GANs for short, are an approach to generative modeling using deep learning methods, such as convolutional neural networks (Fig.07). GANs can synthesize high-resolution RGB images that are practically indistinguishable from real images. You can learn more about GANs here.

Fig.07 GAN Architecture

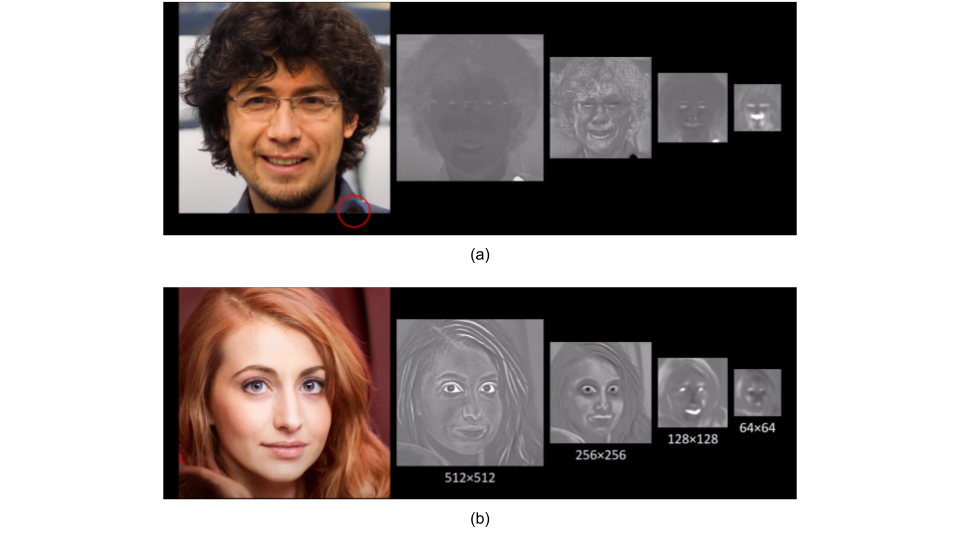

StyleGAN & StyleGAN2

StyleGAN [7] is a state-of-the-art method for high-resolution image synthesis (Fig.08 a), and StyleGAN2 [8] extends it by fixing StyleGAN's characteristic artifacts and further improving the result quality (Fig.08 b). We can observe in Fig.08 a that the output of StyleGAN contains some artifacts at the bottom right corner, which are eliminated in the output of StyleGAN2, as we can see in Fig.08 b.

Fig.08 a) StyleGAN b) StyleGAN2

Related Works

Single-View Supervised 3D-Aware GANs

In the previous section, we had a brief introduction to what GANs are. Now let us look at 3D-aware GANs. A GAN that generates images that are 3D-consistent is known as a 3D-aware GAN. Extending that concept, single-view supervised 3D-aware GANs are GANs that are trained only on single-view RGB data. In contrast, NeRF (which is not a GAN) is multi-view supervised, meaning that it needs multiple views of the same scene for training in order to generate 3D-aware images. Some of the popular single-view supervised 3D-aware GANs are:

- GRAF

- Pi-GAN

- HoloGAN

- StyleNeRF

Pi-GAN

Pi-GAN is one of the most advanced single-view supervised 3D-aware GANs and pursues the same goal as StyleSDF. That is why Pi-GAN is a strong baseline for evaluating the results of StyleSDF. Like NeRF, Pi-GAN generates 3D shapes using radiance fields. Fig.09 describes Pi-GAN concisely.

Fig.09 Pi-GAN

How Does StyleSDF Work?

Back to the original topic: in this section, let us discuss the StyleSDF algorithm in detail. Fig.10 shows the overall architecture of StyleSDF. The architecture can be divided into 3 main components: the mapping networks, the volume renderer, and the 2D generator.

Fig.10 StyleSDF architecture

Mapping Networks

The volume renderer and the 2D generator each have their own mapping network, which maps the input latent vector into modulation signals for each layer. For simplicity, we ignore the mapping networks and concentrate on the other components in this discussion.

Volume Renderer

This component takes a 5D coordinate (3 spatial coordinates x + a 2D viewing direction v) as input and outputs the SDF value at spatial location x, the view-dependent color value at x for view v, and a feature vector, denoted d(x), c(x,v) and f(x,v) respectively (Fig.11).

Fig.11 Volume Renderer

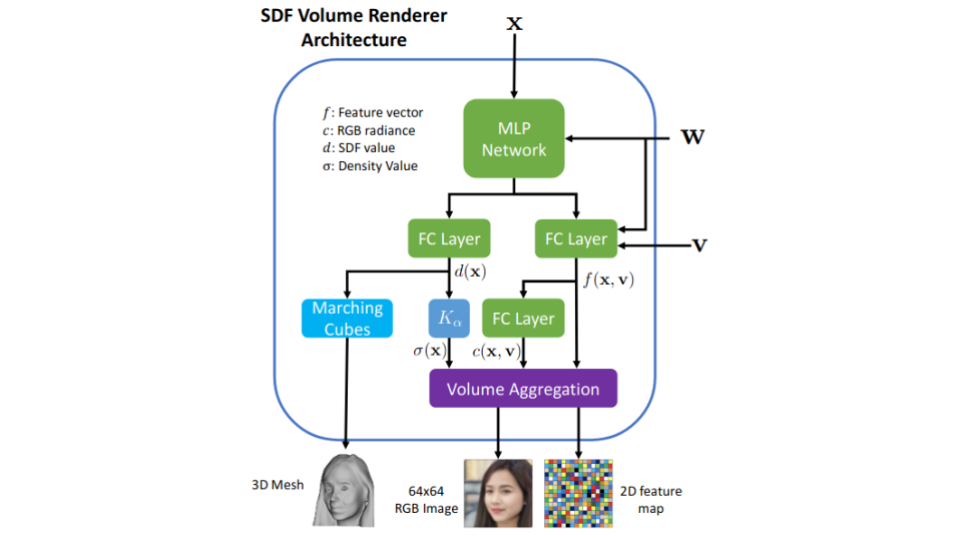

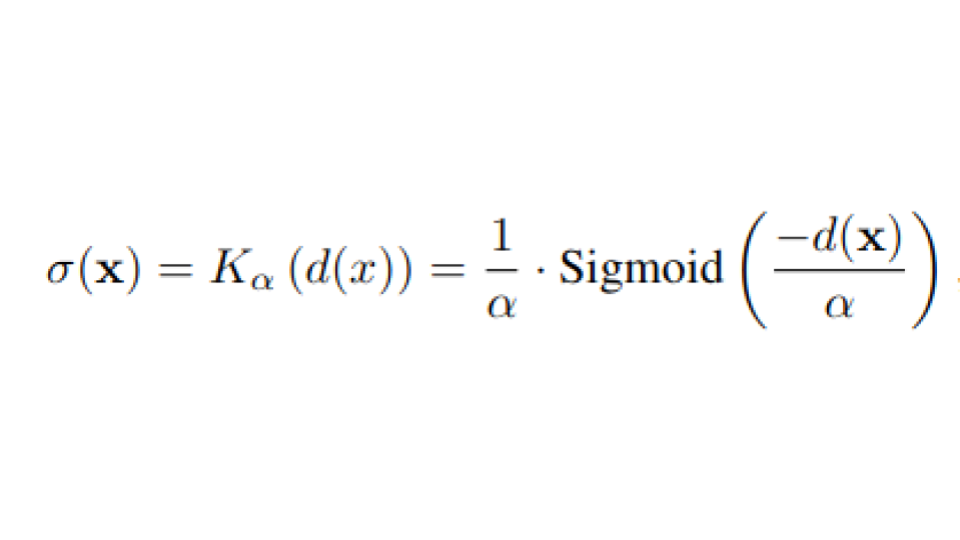

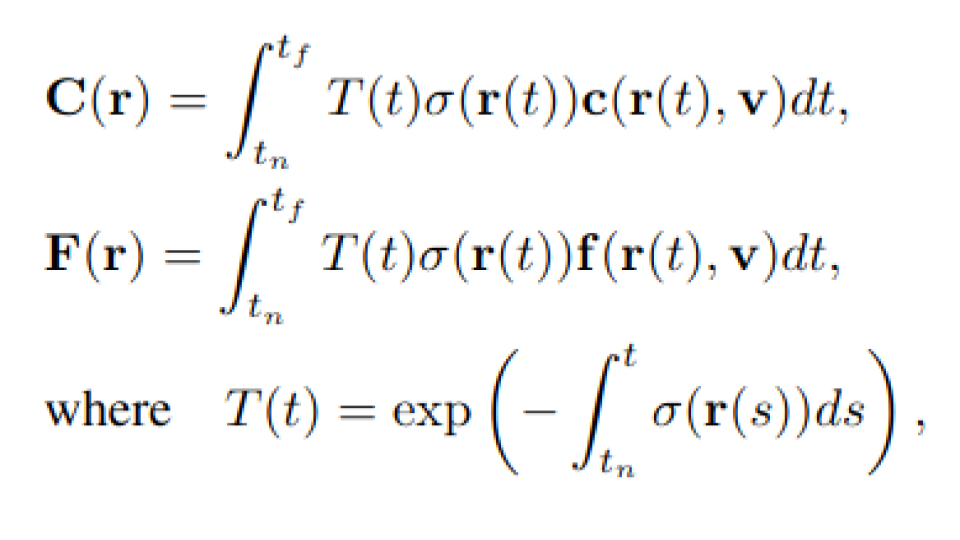

Now let us go one step deeper and look at the architecture of the volume renderer (Fig.12). Using the notation above, we can see from the figure that the volume renderer has 3 FC layers. The first FC layer outputs d(x), the SDF value at position x. We can use an algorithm called marching cubes (learn more) to visualize the 3D shape represented by d(x). The second FC layer outputs c(x,v), the view-dependent color value at x for view v, and the third FC layer outputs f(x,v), the corresponding feature vector. Two more components in the architecture require additional description: the density function (K-alpha) and volume aggregation.

Fig.12 Volume Renderer Architecture

Fig.13 Density function

Fig.14 Volume aggregation formulas

Formulas Description:

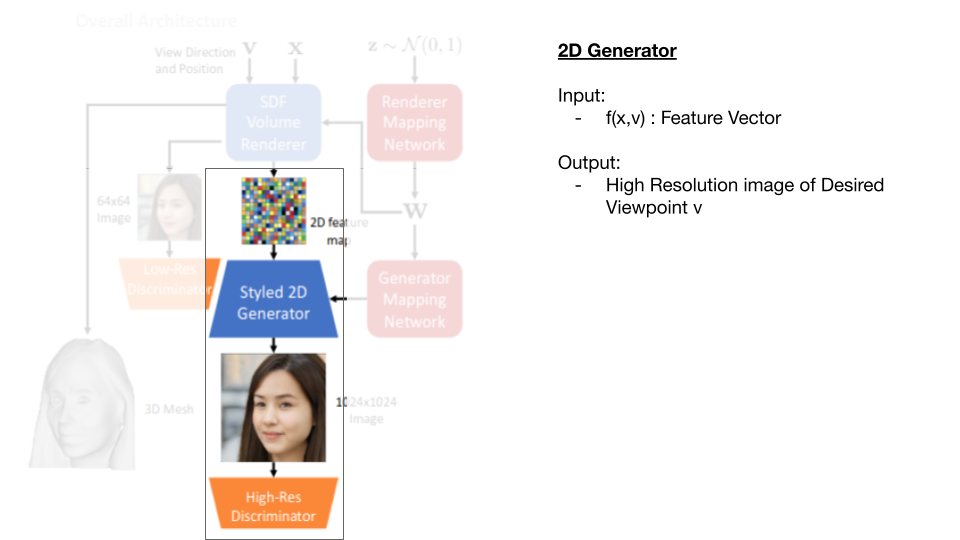
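As a rough sketch of the density function, the paper converts an SDF value d(x) into a volume density with a scaled sigmoid, sigma(x) = K_alpha(d(x)) = (1 / alpha) * Sigmoid(-d(x) / alpha). The code below only illustrates that formula; the names `sigmoid` and `sdf_to_density` are my own, and I pass alpha in as a plain number, whereas in StyleSDF it is, as far as I understand, a parameter learned during training.

```python
import math

def sigmoid(x):
    """Numerically stable logistic function."""
    if x >= 0:
        return 1.0 / (1.0 + math.exp(-x))
    e = math.exp(x)
    return e / (1.0 + e)

def sdf_to_density(d, alpha):
    """Map an SDF value d to a volume density: (1 / alpha) * sigmoid(-d / alpha).

    Assumption: alpha > 0 controls how tightly the density hugs the surface.
    """
    return sigmoid(-d / alpha) / alpha

for d in (-0.1, 0.0, 0.1):
    print(d, sdf_to_density(d, alpha=0.05))
```

Points inside the shape (negative d) get a high density, points outside (positive d) get a density close to zero, and a smaller alpha makes the transition at the surface sharper.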

2D Generator

The aim of this component is to generate a high-resolution image at viewpoint v, given the feature vector (Fig.15). We have already seen that StyleGAN2 is a state-of-the-art technique for generating high-resolution images, so StyleSDF uses StyleGAN2 as its 2D generator, which takes the 64x64 RGB image and its corresponding feature vector as inputs and produces a high-resolution 1024x1024 RGB image as output.

Fig.15 2D Generator

StyleSDF Training

In this section, let us briefly discuss the various loss functions used to train both the volume renderer and the 2D generator.

Volume renderer loss functions

Adversarial loss: This loss distinguishes between real (ground truth) data and data generated by the GAN. (learn more).

Pose alignment loss: This loss makes sure that all the generated objects are globally aligned, i.e., it trains the network so that it generates images with poses that are globally valid. (Fig.16 a)

Eikonal loss: This loss ensures that the learned SDF is physically valid. A valid SDF has gradients with unit L2 norm, and the Eikonal loss pushes the generated SDF to follow this rule. (Fig.16 b)

Minimal surface loss: This loss minimizes the number of zero-crossings to prevent the formation of spurious and non-visible surfaces. (Fig.16 c)

Fig.16 Volume renderer loss functions; a) Pose alignment loss b) Eikonal loss c) Minimal surface loss
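Two of these losses act directly on the SDF and are easy to sketch. The snippet below is a toy illustration under my own assumptions, not the training code: the SDF is an analytic sphere instead of a network, gradients are estimated with finite differences instead of automatic differentiation, and the `sharpness=100.0` constant in the minimal surface term is the value I recall from the paper, so treat it as an assumption.

```python
import math

def sphere_sdf(p, radius=1.0):
    """Toy stand-in for the learned SDF d(x)."""
    return math.sqrt(p[0] ** 2 + p[1] ** 2 + p[2] ** 2) - radius

def gradient_norm(sdf, p, eps=1e-4):
    """L2 norm of the SDF gradient at p, via central finite differences."""
    grads = []
    for axis in range(3):
        hi, lo = list(p), list(p)
        hi[axis] += eps
        lo[axis] -= eps
        grads.append((sdf(hi) - sdf(lo)) / (2.0 * eps))
    return math.sqrt(sum(g * g for g in grads))

def eikonal_loss(sdf, points):
    """Mean squared deviation of the gradient norm from 1."""
    return sum((gradient_norm(sdf, p) - 1.0) ** 2 for p in points) / len(points)

def minimal_surface_loss(sdf, points, sharpness=100.0):
    """Mean of exp(-sharpness * |d(x)|): penalises SDF values close to zero."""
    return sum(math.exp(-sharpness * abs(sdf(p))) for p in points) / len(points)

points = [(0.5, 0.2, 0.1), (2.0, 0.0, 0.0), (0.0, -1.5, 0.3)]
print(eikonal_loss(sphere_sdf, points))          # close to zero for a true SDF
print(minimal_surface_loss(sphere_sdf, points))  # small when samples are far from the surface
```

A true distance field such as the sphere has a gradient norm of 1 everywhere away from its center, so its Eikonal loss is close to zero, while a field that is scaled by a constant would be penalised.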

2D generator loss functions

As we discussed, the 2D generator is implemented using StyleGAN2, so the loss functions used to train this component are the same ones used to train StyleGAN2. They are: non-saturating adversarial loss + R1 regularization + path regularization.
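For readers who have not seen it before, the non-saturating adversarial loss can be sketched on raw discriminator outputs (logits) as below. This is a generic illustration of the loss, not code from StyleSDF or StyleGAN2; the function names are my own, and the R1 and path regularization terms are left out because they need gradients of the networks.

```python
import math

def softplus(x):
    """Numerically stable log(1 + exp(x))."""
    return max(x, 0.0) + math.log1p(math.exp(-abs(x)))

def generator_loss(fake_logits):
    """Non-saturating generator loss: mean of -log(sigmoid(D(G(z))))."""
    return sum(softplus(-l) for l in fake_logits) / len(fake_logits)

def discriminator_loss(real_logits, fake_logits):
    """Discriminator loss: real samples should score high, fake samples low."""
    real_term = sum(softplus(-l) for l in real_logits) / len(real_logits)
    fake_term = sum(softplus(l) for l in fake_logits) / len(fake_logits)
    return real_term + fake_term

# The generator is rewarded when the discriminator scores its samples highly.
print(generator_loss([-3.0]), generator_loss([3.0]))
print(discriminator_loss(real_logits=[2.0], fake_logits=[-2.0]))
```

The generator loss shrinks as the discriminator's score for generated samples grows, which is the behaviour the name "non-saturating" refers to: the generator still receives a useful signal when its samples are easily rejected.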

Evaluation & Results

So far we have discussed how StyleSDF works and the various loss functions it uses. Now let us look at the results produced by StyleSDF and compare them with our baseline model Pi-GAN. First, we briefly look at the datasets used to train StyleSDF, then we discuss the qualitative evaluation of the results, and finally the quantitative evaluation.

Datasets

StyleSDF is trained on two different datasets, namely FFHQ and AFHQ.

- FFHQ

- AFHQ

FFHQ: This dataset contains 70,000 images of diverse human faces at a resolution of 1024x1024. All the images in this dataset are centered and aligned.

Fig.17 FFHQ dataset

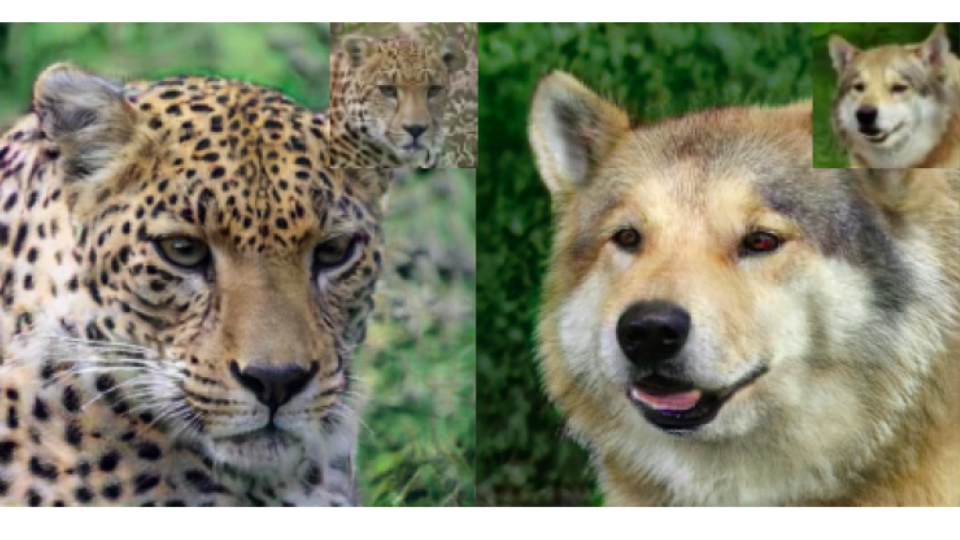

AFHQ: This dataset contains 15,630 images of animals (cats, dogs and wild animals) at a resolution of 512x512. The images in this dataset are not aligned and cover diverse species.

Fig.18 AFHQ dataset

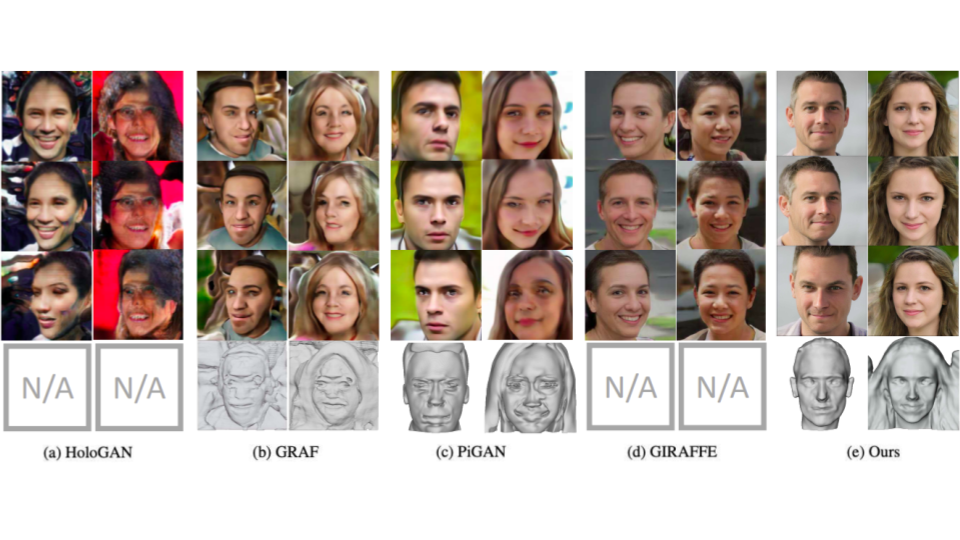

Qualitative Analysis

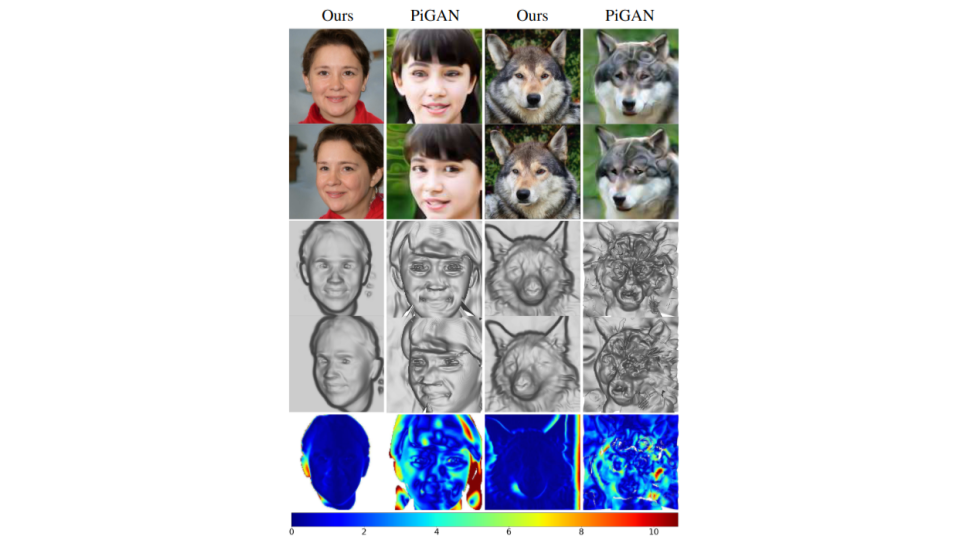

In this section, let us compare the quality of the StyleSDF results with our main baseline model Pi-GAN and with other related models. Fig.19 shows the qualitative comparison of the results of the various models, and we can see that StyleSDF comes out ahead both in generating images that are view-consistent and artifact-free and in generating 3D shapes that are clean and detailed.

Fig.19 Qualitative analysis

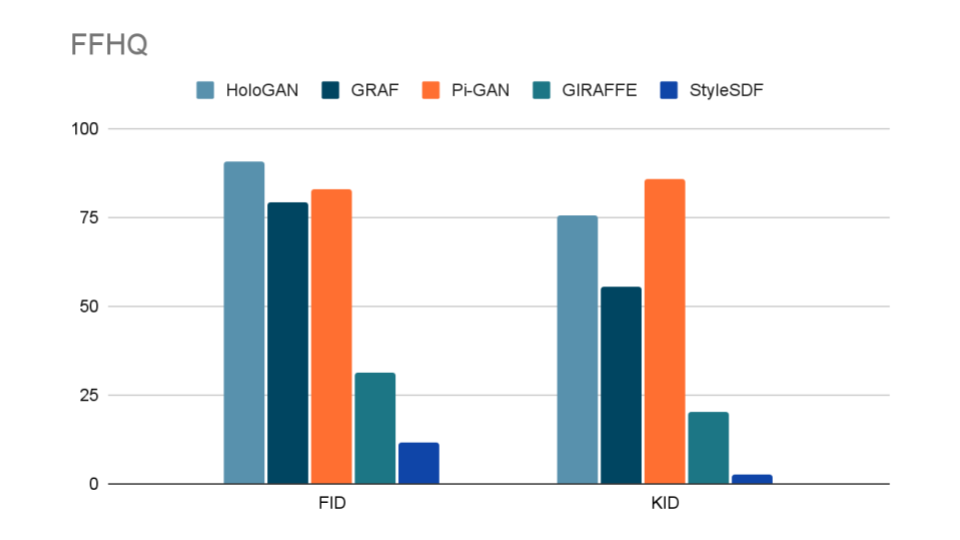

Quantitative Analysis

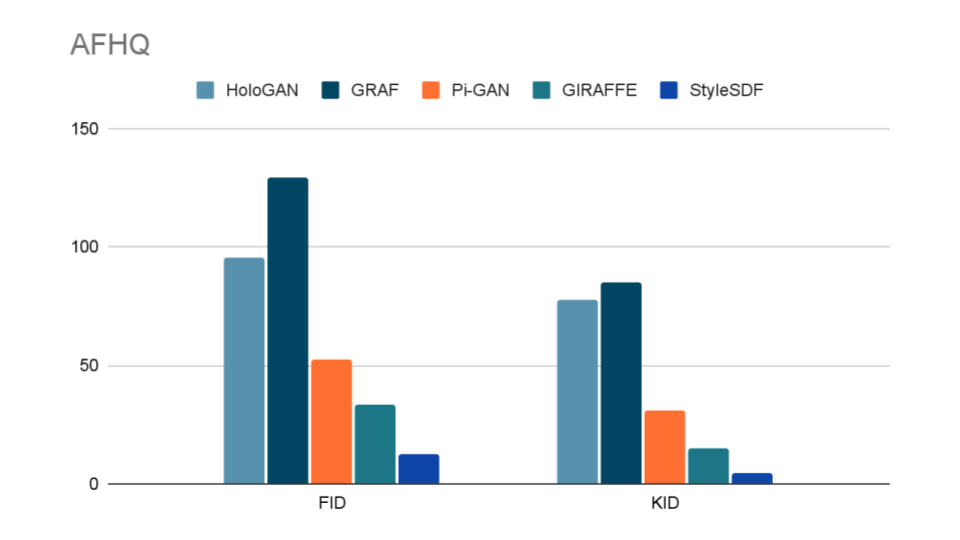

Now let us evaluate the results of StyleSDF quantitatively. For this the authors use two metrics, Frechet Inception Distance (FID) and Kernel Inception Distance (KID). The lower the value of these metrics, the better the quality of the generated results. From Fig.20 and Fig.21 we can see that the FID and KID values of StyleSDF on the FFHQ and AFHQ datasets, respectively, are better than those of all the other methods in the comparison.

Fig.20 Quantitative comparison over FFHQ dataset

Fig.21 Quantitative comparison over AFHQ dataset
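To build some intuition for why a lower FID is better, here is a toy sketch of the Frechet distance between two one-dimensional Gaussians fitted to two sets of numbers. The real FID is computed on high-dimensional Inception network features with full covariance matrices, so this 1-D version is only a simplification of my own, and the function name `frechet_distance_1d` is hypothetical.

```python
import statistics

def frechet_distance_1d(samples_a, samples_b):
    """Frechet distance between 1-D Gaussians fitted to two sample sets.

    In one dimension the formula reduces to
    (mean_a - mean_b)^2 + (std_a - std_b)^2.
    """
    mean_a, mean_b = statistics.fmean(samples_a), statistics.fmean(samples_b)
    std_a, std_b = statistics.pstdev(samples_a), statistics.pstdev(samples_b)
    return (mean_a - mean_b) ** 2 + (std_a - std_b) ** 2

real = [0.0, 2.0]
print(frechet_distance_1d(real, [0.0, 2.0]))  # identical statistics
print(frechet_distance_1d(real, [1.0, 3.0]))  # same spread, shifted mean
```

The distance is zero when the two sets have the same mean and spread, and it grows as the statistics of the generated set drift away from those of the real set.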

Depth Consistency Results

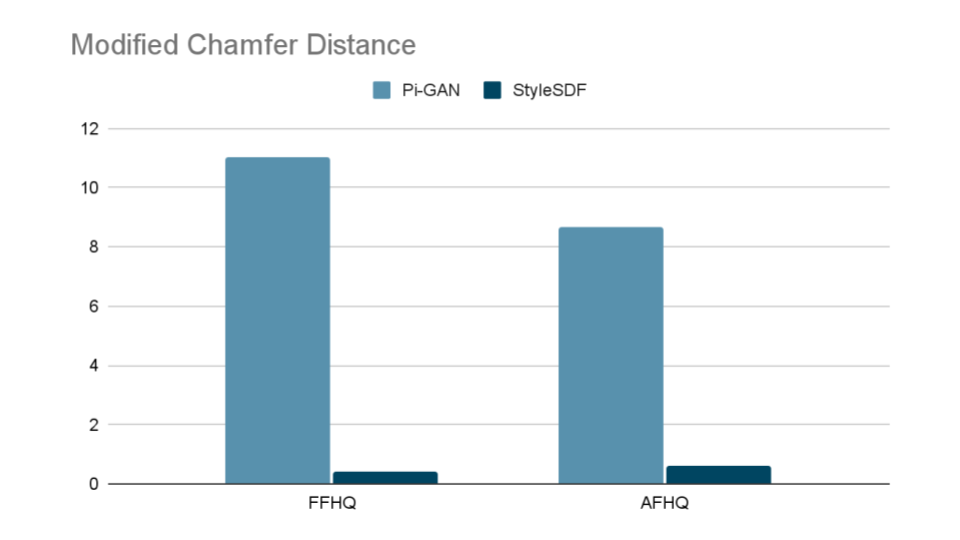

In this section we compare the depth consistency of the images generated by StyleSDF and its baseline model Pi-GAN. We analyse how consistent the generated images are in the 3D world, i.e., how well the novel views align with each other globally. Fig.22 shows the qualitative comparison, and we can see that the poses generated by StyleSDF are aligned with little to no error, which is not the case for the results of Pi-GAN. Fig.23 shows the quantitative comparison of depth consistency using the Modified Chamfer Distance metric.

Fig.22 Qualitative comparison of depth consistency

Fig.23 Quantitative comparison of depth consistency
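For intuition about the metric, the sketch below computes a plain symmetric Chamfer distance between two small point sets. Note that this is the textbook version and not the modified variant used by the authors, whose exact definition is not covered in this review; conventions also differ on whether distances are squared or averaged, and the function name `chamfer_distance` is my own.

```python
import math

def chamfer_distance(points_a, points_b):
    """Symmetric Chamfer distance between two non-empty 3D point sets.

    For every point, take the distance to its nearest neighbour in the
    other set, average per set, and add the two averages.
    """
    def one_way(src, dst):
        return sum(min(math.dist(p, q) for q in dst) for p in src) / len(src)
    return one_way(points_a, points_b) + one_way(points_b, points_a)

view_1 = [(0.0, 0.0, 0.0), (1.0, 0.0, 0.0)]
view_2 = [(0.0, 0.1, 0.0), (1.0, 0.1, 0.0)]
print(chamfer_distance(view_1, view_2))  # small value: the two point sets nearly coincide
```

Two depth maps that describe the same surface from different views should, once brought into a common frame, give point sets with a small Chamfer distance.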

Limitations and Potential Solutions

StyleSDF is a new technique, and as with any new model it has some limitations. In this section, let us discuss 3 limitations of StyleSDF and potential solutions to them.

Although StyleSDF produces high-quality images with little to no artifacts, it does have some limitations in this area. For example, we can observe some artifacts around the teeth in Fig.24.

Potential Solution: Can be corrected similarly to Mip-NeRF [9] and Alias-Free StyleGAN [10].

Fig.24 Potential Aliasing & Flickering Artifacts

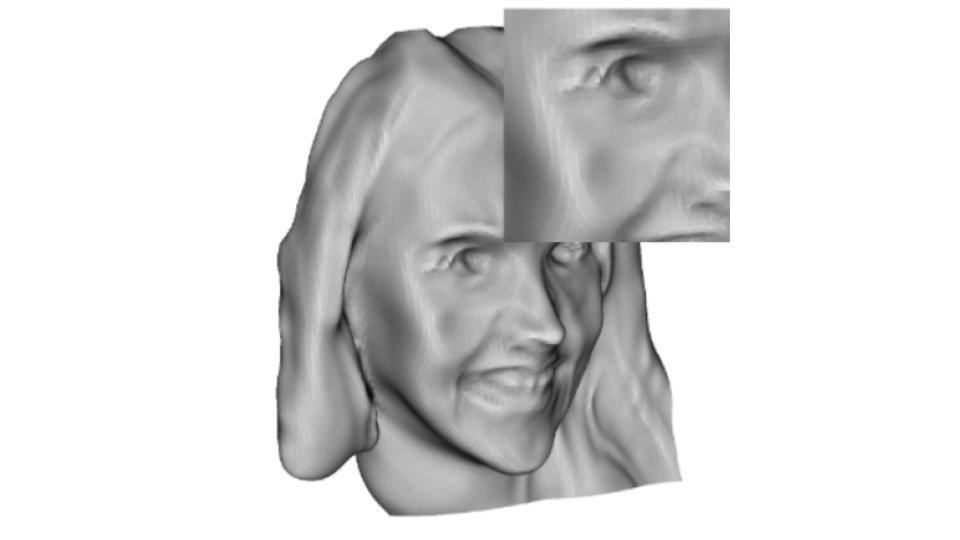

Since the technique is single-view supervised, it also has some limitations when generating 3D shapes. It can induce artifacts such as dents, as we can see in Fig.25.

Potential Solution: Adjust the losses to eliminate the issue.

Fig.25 Inducing Artifacts

StyleSDF does not distinguish between the foreground and background of an image. Hence, although it generates images of very high quality, the foreground might be blurry, as we can see in Fig.26.

Potential Solution: Add an additional volume renderer to render the background, as suggested in NeRF++ [11].

Fig.26 Inconsistent Foreground & Background

Future Works

Before concluding this article, let us discuss one more small topic regarding StyleSDF: future work in this area. Since StyleSDF is a new technique, there is a lot of room for improvement, and in this section we discuss two directions for future work.

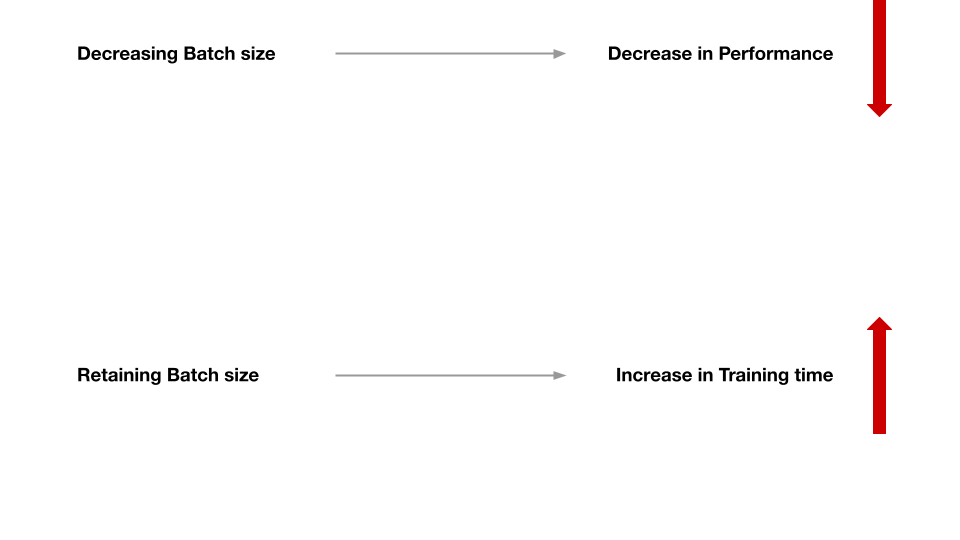

Currently, to train StyleSDF, one needs to train the volume renderer and the 2D generator separately. We can try to figure out a way to train both in an end-to-end fashion as a single network instead of two separate networks. This could lead to more refined geometry generation by StyleSDF. Sadly, this approach comes with a tradeoff between performance and training time.

If we perform end-to-end training of StyleSDF, GPU resource consumption increases, which in turn increases training time. We can decrease the batch size to reduce GPU consumption, but this leads to poorer model performance. Hence, one needs to balance training time against the performance of the model.

Fig.27 Trade off between training time and performance

Another potential direction is to eliminate the 2D generator from the architecture altogether and figure out a way to generate the high-resolution image directly from the generated SDF model, instead of using the intermediate low-resolution 64x64 image and its feature vector.

Fig.28 Elimination of 2D generator

Conclusion

In this article we have discussed StyleSDF: what it is, how it works, how well it works, its limitations and future work. We have also discussed some important topics related to StyleSDF and looked at a few relevant works. From everything we have discussed and seen, we can conclude that StyleSDF outperforms the baseline model Pi-GAN and the other related architectures it was compared with. Even though it has its own limitations, it is a promising and active direction in 3D-consistent image generation and novel view synthesis, and its main application areas lie in fields such as medical applications, AR/VR and robotic vision.

References

[1] StyleSDF: High-Resolution 3D-Consistent Image and Geometry Generation. Roy Or-El, X.Luo, M.Shan, E.Shechtman, J.J.Park, I.K.Shlizerman. In CVPR, 2022.

[2] Katja Schwarz, Yiyi Liao, Michael Niemeyer, and Andreas Geiger. Graf: Generative radiance fields for 3d-aware image synthesis. In NeurIPS, 2020.

[3] Eric R. Chan, Marco Monteiro, Petr Kellnhofer, Jiajun Wu, and Gordon Wetzstein. pi-gan: Periodic implicit generative adversarial networks for 3d-aware image synthesis. In CVPR, pages 5799–5809, 2021.

[4] Thu Nguyen-Phuoc, Chuan Li, Lucas Theis, Christian Richardt, and Yong-Liang Yang. Hologan: Unsupervised learning of 3d representations from natural images. In ICCV, pages 7588–7597, 2019.

[5] Advances in Neural Rendering. Tewari, A. and Thies, J. and Mildenhall, B. and Srinivasan, P. and Tretschk, E. and Yifan, W. and Lassner, C. and Sitzmann, V. and Martin-Brualla, R. and Lombardi, S. and Simon, T. and Theobalt, C. and Niessner, M. and Barron, J. T. and Wetzstein, G. and Zollhofer, M. and Golyanik, V. Computer Graphics Forum (EG STAR 2022).

[6] Ben Mildenhall, Pratul P Srinivasan, Matthew Tancik, Jonathan T Barron, Ravi Ramamoorthi, and Ren Ng. Nerf: Representing scenes as neural radiance fields for view synthesis. In ECCV, pages 405–421. Springer, 2020.

[7] Tero Karras, Samuli Laine, and Timo Aila. A style-based generator architecture for generative adversarial networks. In CVPR, pages 4401–4410, 2019.

[8] Tero Karras, Samuli Laine, Miika Aittala, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. Analyzing and improving the image quality of stylegan. In CVPR, pages 8110–8119, 2020.

[9] Jonathan T. Barron, Ben Mildenhall, Matthew Tancik, Peter Hedman, Ricardo Martin-Brualla, and Pratul P. Srinivasan. Mip-nerf: A multiscale representation for anti-aliasing neural radiance fields. In ICCV, pages 5855–5864, 2021.

[10] Tero Karras, Miika Aittala, Samuli Laine, Erik Härkönen, Janne Hellsten, Jaakko Lehtinen, and Timo Aila. Alias-free generative adversarial networks. arXiv preprint arXiv:2106.12423, 2021.

[11] Kai Zhang, Gernot Riegler, Noah Snavely, and Vladlen Koltun. Nerf++: Analyzing and improving neural radiance fields. arXiv preprint arXiv:2010.07492, 2020.