- Liu Shiru (A0187939A)

- Lim Yu Rong, Samuel (A0183921A)

- Yee Xun Wei (A0228597L)

- Python 3

- Computing power (a high-end GPU) and memory (both system RAM and GPU memory) are extremely important if you'd like to train your own model.

- Required packages and their uses are listed in requirements.txt.

Data is collected from the Ted2srt webpage.

Run python3 scraper/preprocess.py from the root directory to scrape the data and generate the dataset.

The script will:

- Scrape data from the website.

- Preprocess the data.

- Split the data into train, dev, and test sets.

Scraped data is saved to scraper/data/, and processed data is saved to data/. Alternatively, download preprocessed data here.
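The split step can be pictured with the minimal sketch below. It is an illustration only: the function name `split_dataset`, the 80/10/10 fractions, the fixed seed, and the talk IDs are all assumptions, and the actual logic in scraper/preprocess.py may differ.

```python
import random


def split_dataset(items, dev_frac=0.1, test_frac=0.1, seed=0):
    """Shuffle items deterministically and split them into train/dev/test lists.

    The fractions and seed are illustrative defaults, not the project's values.
    """
    items = list(items)
    random.Random(seed).shuffle(items)  # seeded so the split is reproducible
    n_dev = int(len(items) * dev_frac)
    n_test = int(len(items) * test_frac)
    dev = items[:n_dev]
    test = items[n_dev:n_dev + n_test]
    train = items[n_dev + n_test:]
    return train, dev, test


# Hypothetical talk IDs standing in for the scraped talks.
talk_ids = [f"talk_{i:03d}" for i in range(100)]
train, dev, test = split_dataset(talk_ids)
print(len(train), len(dev), len(test))
```

Splitting by talk rather than by individual utterance is one way to keep utterances from the same talk out of both the training and test sets; whether the script does this is not stated here.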

To train each model:

- In the root directory, run the command:

python3 main.py --config config/<dataset>/<config_file>.yaml --njobs 8

Set <dataset> to ted to use our scraped data, or to libri to use public data from OpenSLR.
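As a small illustration of how the placeholders are filled in, the sketch below assembles the training command for a given dataset and configuration file. The helper `build_train_command` is hypothetical and not part of the repository; only the command layout, the two dataset names, and the `--config` and `--njobs` flags come from the instructions above.

```python
def build_train_command(dataset, config_file, njobs=8):
    """Return the training command as an argument list (hypothetical helper)."""
    if dataset not in ("ted", "libri"):
        raise ValueError(f"unknown dataset: {dataset!r}")
    config_path = f"config/{dataset}/{config_file}"
    return ["python3", "main.py", "--config", config_path, "--njobs", str(njobs)]


# Example: CNN extractor with RNN classifier on the scraped TED data.
cmd = build_train_command("ted", "cnn_rnn.yaml")
print(" ".join(cmd))
```

The resulting list can be passed to `subprocess.run(cmd, check=True)` from the root directory to launch training from a script.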

Configuration files are stored as:

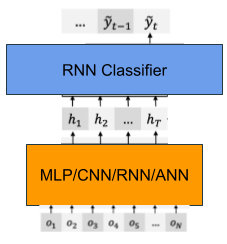

| Extractor | Classifier | Configuration file |
|---|---|---|
| MLP | RNN | mlp_rnn.yaml |
| CNN | RNN | cnn_rnn.yaml |
| ANN | RNN | ann_rnn.yaml |
| RNN | RNN | rnn_rnn.yaml |

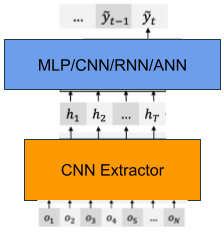

| Extractor | Classifier | Configuration file |
|---|---|---|
| CNN | MLP | cnn_mlp.yaml |
| CNN | CNN | cnn_cnn.yaml |
| CNN | ANN | cnn_ann.yaml |

Experiment results are stored in experiment_results.md.

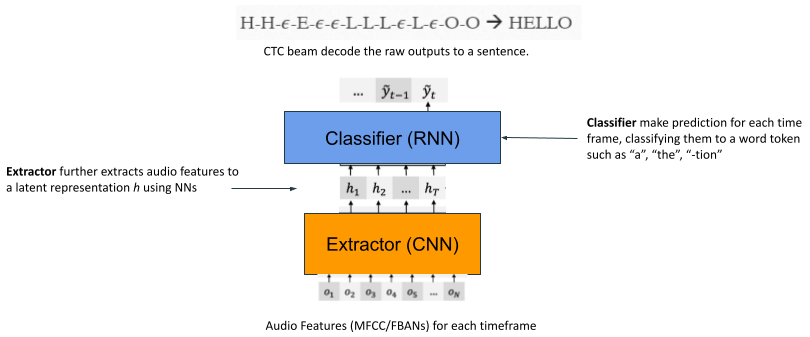

There are two main subcomponents. The first is the extractor, which further encodes the audio features of every frame into a latent representation. The second is the classifier.

In our experiments, we first fix the classifier to be an RNN and compare how the four NN variants perform as the extractor.

Second, we fix the extractor to be a CNN and replace the classifier with each of the four NN variants.
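The two experiment series can be enumerated with the short sketch below. It assumes that every configuration file follows the `<extractor>_<classifier>.yaml` pattern seen in the configuration tables; the helper `config_name` and the variable names are hypothetical.

```python
# The four NN variants compared in the experiments.
VARIANTS = ["MLP", "CNN", "ANN", "RNN"]


def config_name(extractor, classifier):
    """Build a file name following the <extractor>_<classifier>.yaml pattern."""
    return f"{extractor.lower()}_{classifier.lower()}.yaml"


# Series 1: classifier fixed to RNN, extractor varies.
extractor_study = [config_name(e, "RNN") for e in VARIANTS]

# Series 2: extractor fixed to CNN, classifier varies.
classifier_study = [config_name("CNN", c) for c in VARIANTS]

# cnn_rnn.yaml belongs to both series, so seven distinct files cover both.
all_configs = sorted(set(extractor_study + classifier_study))
print(all_configs)
```

Because the CNN extractor with RNN classifier appears in both series, it serves as the shared baseline between the two comparisons.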

Original README can be accessed here.

- Liu, A., Lee, H.-Y., & Lee, L.-S. (2019). Adversarial Training of End-to-end Speech Recognition Using a Criticizing Language Model. Acoustics, Speech and Signal Processing (ICASSP). IEEE.

- Liu, A. H., Sung, T.-W., Chuang, S.-P., Lee, H.-Y., & Lee, L.-S. (2019). Sequence-to-sequence Automatic Speech Recognition with Word Embedding Regularization and Fused Decoding. arXiv [cs.CL]. Retrieved from http://arxiv.org/abs/1910.12740