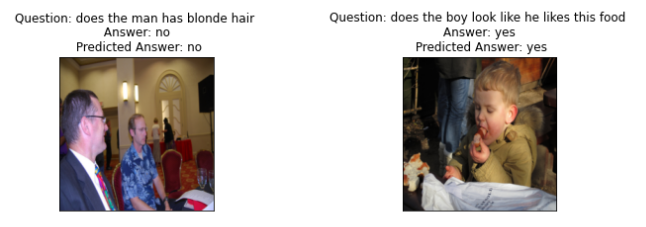

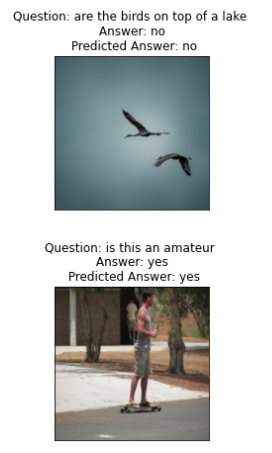

- Visual Question Answering (VQA) is a computer vision task where a system is given a text-based question about an image, and it must infer the answer.

- The model answers each question in a yes/no format.

The dataset is hosted on Kaggle and can also be downloaded from this site.

- Removed punctuation, stopwords, special characters, numbers, and extra whitespace.
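The cleaning step above could look roughly like the sketch below. It is a minimal illustration, not the project's actual code: the function name `clean_question`, the lowercasing step, and the tiny `STOPWORDS` set are assumptions made for the example. Since NLTK is among the dependencies, the real stopword list probably comes from there.

```python
import re

# Illustrative subset only (assumption); the real list likely comes from NLTK.
STOPWORDS = {
    "a", "an", "the", "is", "are", "was", "were", "in", "on",
    "of", "there", "this", "that", "it", "to", "and",
}


def clean_question(text: str) -> str:
    """Clean a question string (hypothetical helper, not from the repo)."""
    text = text.lower()                    # assumption: case is normalized
    text = re.sub(r"\d+", " ", text)       # drop numbers
    text = re.sub(r"[^a-z\s]", " ", text)  # drop punctuation and special characters
    # split() with no arguments also collapses repeated whitespace
    tokens = [tok for tok in text.split() if tok not in STOPWORDS]
    return " ".join(tokens)


print(clean_question("Is there a dog in the 2 photos?!"))
```

With this small stopword set, the example question reduces to `dog photos`.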

I have built 2 models for this task:

- VQA Model 1 - This model combines a pretrained ResNet-52 with a two-layer LSTM that uses pretrained word2vec word embeddings. The feature vectors from the two models are then fused via late fusion. The model was trained on the VQA dataset for 10 epochs and reached a loss of 0.5. The model and optimizer state are saved in the `resnet_word2vec_model` folder in Drive.

  - The training results can be improved by training the model for more epochs.

  - Using different samplers may also improve the results.
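To make the late-fusion idea concrete, here is a minimal PyTorch sketch. It assumes fusion by concatenating the two feature vectors and feeding them to a small classifier head. The source does not say how the vectors are combined, so the class name `LateFusionHead`, the feature sizes, and the hidden size are all placeholders rather than the project's actual values.

```python
import torch
import torch.nn as nn


class LateFusionHead(nn.Module):
    """Hypothetical fusion head: concatenate image and text features, then classify."""

    def __init__(self, image_dim: int = 2048, text_dim: int = 512, hidden_dim: int = 256):
        super().__init__()
        self.classifier = nn.Sequential(
            nn.Linear(image_dim + text_dim, hidden_dim),
            nn.ReLU(),
            nn.Dropout(0.5),
            nn.Linear(hidden_dim, 1),  # one logit for the yes/no answer
        )

    def forward(self, image_feat: torch.Tensor, text_feat: torch.Tensor) -> torch.Tensor:
        # Each encoder runs independently; the features only meet here.
        fused = torch.cat([image_feat, text_feat], dim=1)
        return self.classifier(fused).squeeze(1)


head = LateFusionHead()
head.eval()
with torch.no_grad():
    # Random tensors stand in for the image and question encoder outputs.
    logits = head(torch.randn(4, 2048), torch.randn(4, 512))
print(logits.shape)
```

A single logit pairs naturally with `nn.BCEWithLogitsLoss` for yes/no answers, though the loss actually used in this project is not stated.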

- VQA Model 2 - This model combines two transformer models, BEiT and BERT. The feature vectors from the two models are then fused via late fusion. The model was trained on the VQA dataset for 10 epochs and reached a loss of 0.5. The model is hosted on the Hub.

  - It did not perform as well as expected, likely because late fusion cannot capture interactions between the two modalities.

| Model | Accuracy | F1 Score |
|---|---|---|
| VQA Model 1 | 0.51 | 0.61 |
| VQA Model 2 | 0.53 | 0.61 |
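For reference, the two metrics in the table can be computed for binary yes/no labels as sketched below. This is plain Python to show the arithmetic. With scikit-learn (listed in the dependencies), `accuracy_score` and `f1_score` from `sklearn.metrics` would be the usual choice. The function name and the toy labels are made up for illustration and are not results from this project.

```python
def accuracy_and_f1(y_true, y_pred):
    """Return (accuracy, F1) for binary labels where 1 = yes and 0 = no."""
    if len(y_true) != len(y_pred) or not y_true:
        raise ValueError("inputs must be non-empty and of equal length")
    pairs = list(zip(y_true, y_pred))
    tp = sum(1 for t, p in pairs if t == 1 and p == 1)  # true positives
    fp = sum(1 for t, p in pairs if t == 0 and p == 1)  # false positives
    fn = sum(1 for t, p in pairs if t == 1 and p == 0)  # false negatives
    accuracy = sum(1 for t, p in pairs if t == p) / len(pairs)
    precision = tp / (tp + fp) if (tp + fp) else 0.0
    recall = tp / (tp + fn) if (tp + fn) else 0.0
    denom = precision + recall
    f1 = 2 * precision * recall / denom if denom else 0.0
    return accuracy, f1


# Toy labels (made up): 3 true positives, 1 false positive, 1 false negative.
acc, f1 = accuracy_and_f1([1, 0, 1, 1, 0, 1], [1, 1, 1, 0, 0, 1])
print(round(acc, 2), round(f1, 2))
```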

- VQA: Visual Question Answering

- ViLT: Vision-and-Language Transformer Without Convolution or Region Supervision

- PyTorch

- Transformers

- Pandas

- NumPy

- NLTK

- Matplotlib

- Seaborn

- Torchvision

- tqdm

- Scikit-learn

- Gensim

- Plotly