This is an implementation of the TD-VAE (Temporal Difference Variational Auto-Encoder) introduced in this ICLR 2019 paper, which is very well written. TD-VAE is designed to have all three of the following features:

- It learns a state representation of observations and makes predictions at the state level.

- Based on observations, it learns a belief state that contains all the information required to make predictions about the future.

- It learns to make predictions multiple steps into the future directly, instead of predicting step by step. It does so by connecting states that are multiple steps apart.

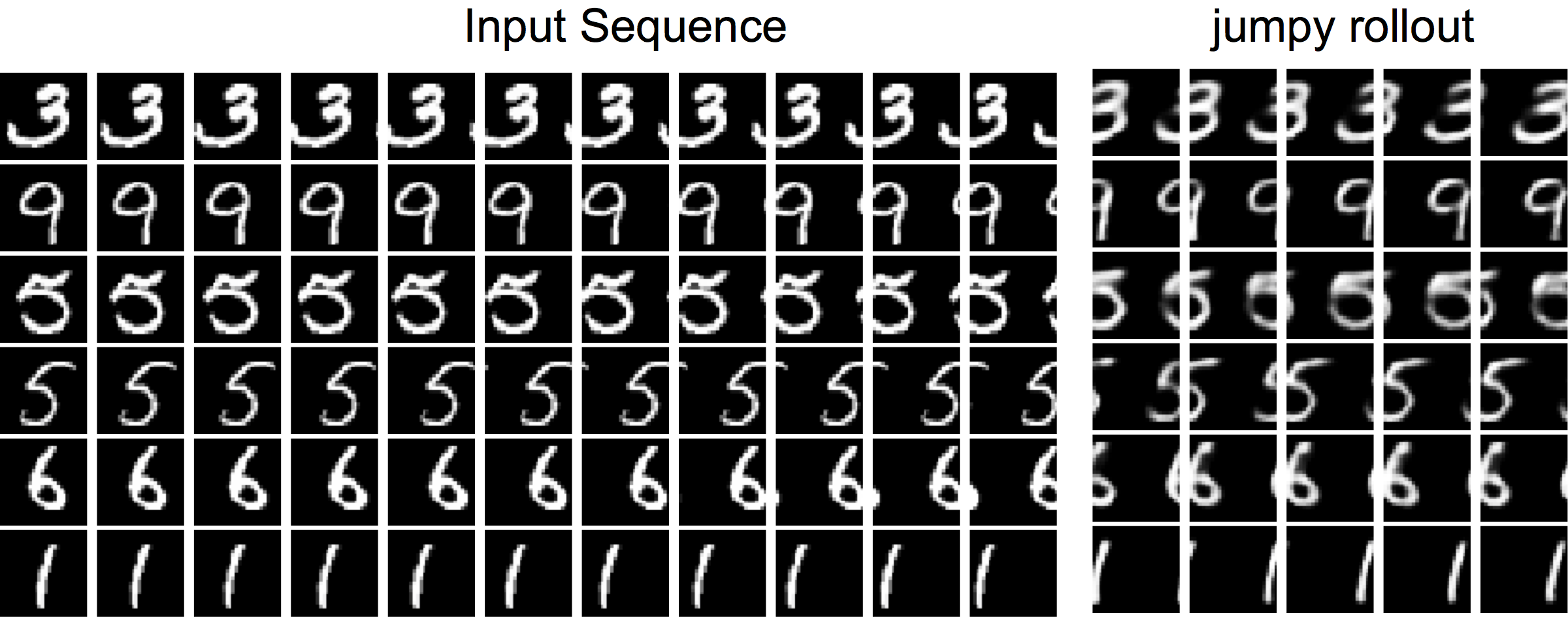
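The belief state and the multi-step ("jumpy") training described above can be made concrete with a small sketch. It is not the code of this repository. It assumes a plain tanh RNN as a stand-in for the model's belief network, and it assumes the jump size is drawn uniformly from 1 to a maximum. The names `belief_states`, `sample_time_pair`, `W_x`, `W_b` and `max_jump` are all hypothetical. The sketch covers only two things: how a belief is accumulated from the observations seen so far, and how pairs of time points several steps apart are chosen for training.

```python
import numpy as np


def belief_states(observations, W_x, W_b):
    """Return one belief vector per time step.

    Hypothetical stand-in: a vanilla tanh RNN, not the model's actual belief network.
    b_t = tanh(W_x @ x_t + W_b @ b_{t-1}), so b_t depends only on x_1..x_t.
    """
    num_steps = observations.shape[0]
    hidden_size = W_b.shape[0]
    b = np.zeros(hidden_size)
    beliefs = np.empty((num_steps, hidden_size))
    for t in range(num_steps):
        b = np.tanh(W_x @ observations[t] + W_b @ b)
        beliefs[t] = b
    return beliefs


def sample_time_pair(seq_len, max_jump, rng):
    """Pick indices t1 < t2 with 1 <= t2 - t1 <= max_jump (assumes seq_len > max_jump)."""
    jump = int(rng.integers(1, max_jump + 1))  # assumed: jump size uniform in [1, max_jump]
    t1 = int(rng.integers(0, seq_len - jump))  # t1 chosen so that t2 stays inside the sequence
    return t1, t1 + jump


# Usage with random data shaped like 20 flattened 28x28 frames.
rng = np.random.default_rng(0)
frames = rng.random((20, 28 * 28))
W_x = rng.normal(scale=0.01, size=(50, 28 * 28))
W_b = rng.normal(scale=0.01, size=(50, 50))
beliefs = belief_states(frames, W_x, W_b)
t1, t2 = sample_time_pair(len(frames), max_jump=4, rng=rng)
print(beliefs.shape, t1, t2)  # beliefs.shape is (20, 50)
```

Because each belief depends only on the frames seen up to that step, it can be used to predict the future. In training, the states inferred at `t1` and `t2` would then be linked by a learned transition model. The sketch leaves that model out.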

Here, based on the information disclosed in the paper, I try to reproduce the moving MNIST digits experiment. In this experiment, the model is presented with a sequence of frames showing an MNIST digit moving to the left or to the right, and it needs to predict how the digit moves in the following steps. After training, we can feed a sequence of frames into the model and see how well it predicts the future. Here is the result.