EMRFlow simplifies running PySpark jobs on Amazon EMR (Elastic MapReduce). It abstracts away the complexity of interacting with the EMR APIs and provides a command-line interface and a Python library for submitting, monitoring, and listing your EMR PySpark jobs.

EMRFlow serves as both a library and a command-line tool.

To install EMRFlow, please run:

    pip install emrflow

Create an emr_serverless_config.json file containing the following details and store it in your home directory:

    {
        "application_id": "",
        "job_role": "",
        "region": ""
    }

Please read GETTING STARTED to integrate EMRFlow into your project.
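The configuration file described above can also be generated from a script. The following is a minimal sketch, not part of EMRFlow: the helper name `write_emrflow_config` and its `directory` parameter are hypothetical, and only the file name, the three keys, and the home-directory location come from this README.

```python
import json
from pathlib import Path


def write_emrflow_config(application_id, job_role, region, directory=None):
    """Write emr_serverless_config.json and return its path.

    Hypothetical helper (not an EMRFlow API). `directory` defaults to the
    user's home directory, which is where the README says the file belongs.
    """
    target_dir = Path(directory) if directory is not None else Path.home()
    config = {
        "application_id": application_id,
        "job_role": job_role,
        "region": region,
    }
    path = target_dir / "emr_serverless_config.json"
    # Serialize with indentation so the file stays easy to edit by hand.
    path.write_text(json.dumps(config, indent=4) + "\n")
    return path
```

Calling `write_emrflow_config("<application-id>", "<job-role>", "<region>")` with your own values would place the file in your home directory; passing `directory` is only meant for trying the helper out without touching your real configuration.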

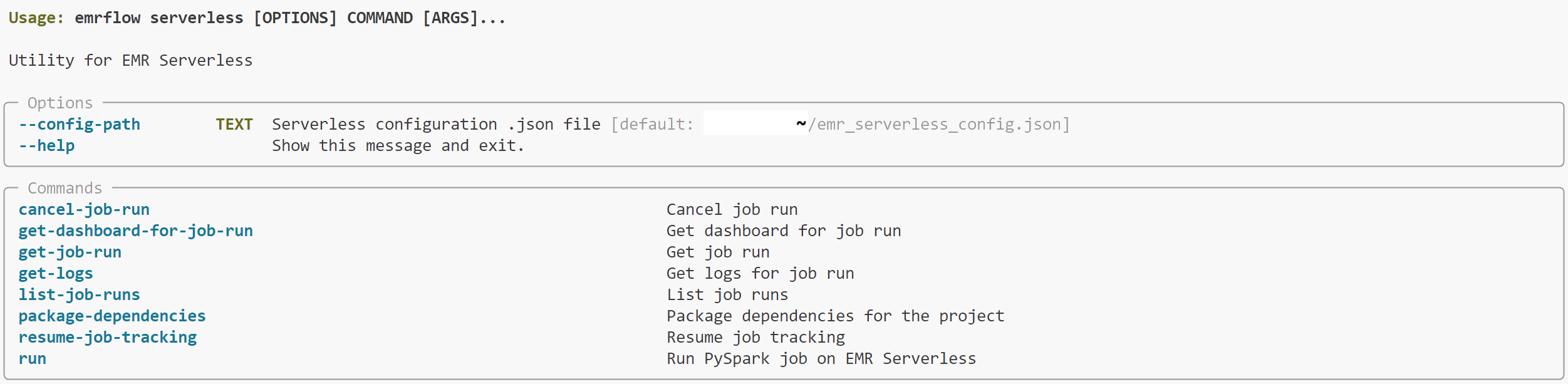

EMRFlow offers several commands to manage your PySpark jobs. Let's explore some key features:

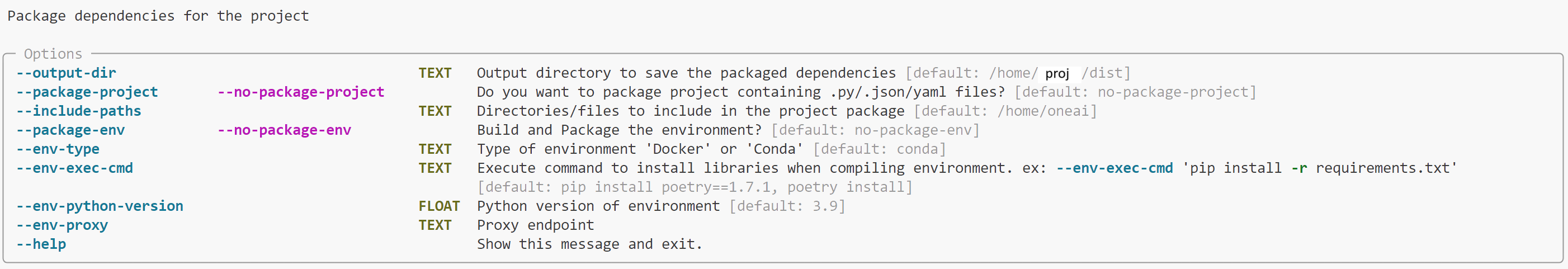

    emrflow serverless --help

You will need to package dependencies before running an EMR job if your code uses external libraries that must be installed, or local imports from your code base. See Scenarios 2-4 in GETTING STARTED.

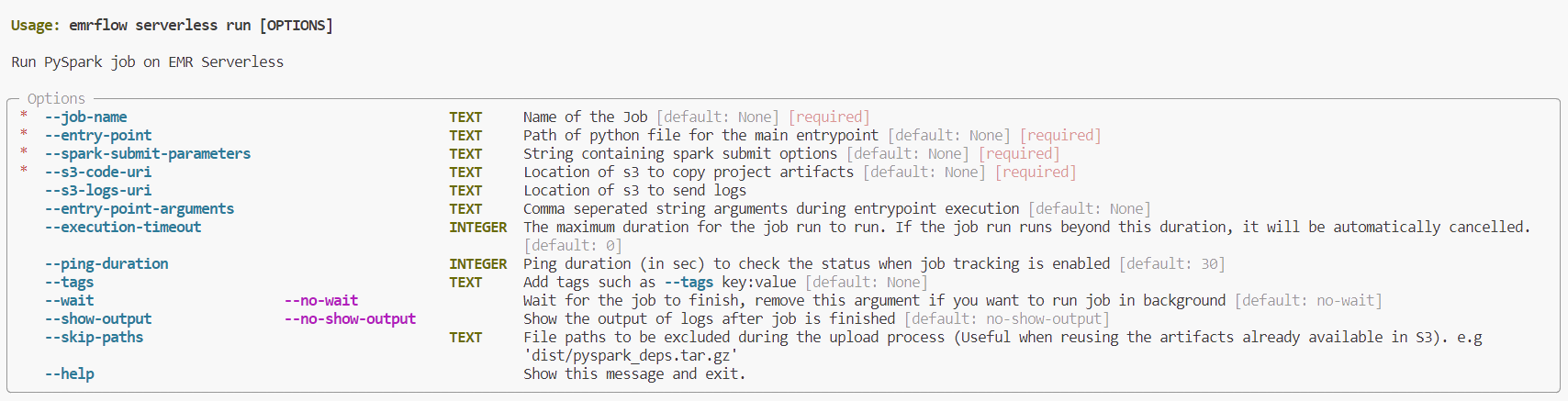

emrflow serverless package-dependencies --helpemrflow serverless run --helpemrflow serverless run \

--job-name "<job-name>" \

--entry-point "<location-of-main-python-file>" \

--spark-submit-parameters " --conf spark.executor.cores=8 \

--conf spark.executor.memory=32g \

--conf spark.driver.cores=8 \

--conf spark.driver.memory=32g \

--conf spark.dynamicAllocation.maxExecutors=100" \

--s3-code-uri "s3://<emr-s3-path>" \

--s3-logs-uri "s3://<emr-s3-path>/logs" \

--execution-timeout 0 \

--ping-duration 60 \

--wait \

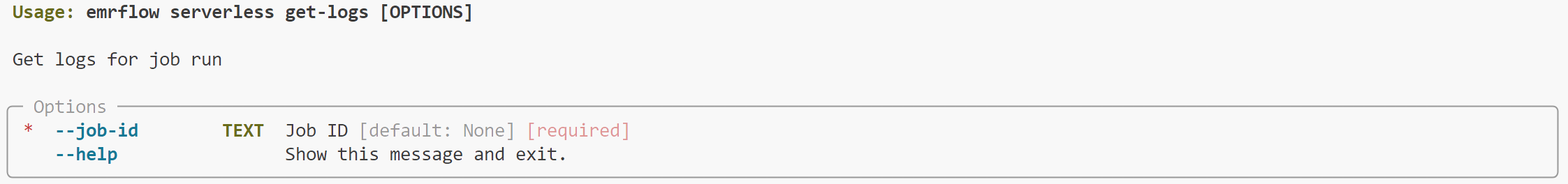

--show-outputemrflow serverless list-job-runs --helpemrflow serverless get-logs --helpimport os

from emrflow import emr_serverless

# initialize connection

emr_serverless.init_connection()

# submit job to EMR Serverless

emr_job_id = emr_serverless.run(

job_name="<job-name>",

entry_point="<location-of-main-python-file>",

spark_submit_parameters="--conf spark.executor.cores=8 \

--conf spark.executor.memory=32g \

--conf spark.driver.cores=8 \

--conf spark.driver.memory=32g \

--conf spark.dynamicAllocation.maxExecutors=100",

wait=True,

show_output=True,

s3_code_uri="s3://<emr-s3-path>",

s3_logs_uri="s3://<emr-s3-path>/logs",

execution_timeout=0,

ping_duration=60,

tags=["env:dev"],

)

print(emr_job_id)And so much more.......!!!
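The Spark submit parameters in the examples above are a single string of `--conf key=value` pairs, which is easy to mistype by hand. As a small convenience, one could build that string from a dictionary. This is a sketch under that assumption: `build_spark_submit_parameters` is a hypothetical helper, not an EMRFlow function.

```python
def build_spark_submit_parameters(conf):
    """Render a dict of Spark settings as a '--conf key=value ...' string.

    Hypothetical helper (not part of EMRFlow). Dict insertion order is
    preserved, so the output order matches the order of the keys.
    """
    return " ".join(f"--conf {key}={value}" for key, value in conf.items())


# The same settings used in the README examples.
params = build_spark_submit_parameters(
    {
        "spark.executor.cores": 8,
        "spark.executor.memory": "32g",
        "spark.driver.cores": 8,
        "spark.driver.memory": "32g",
        "spark.dynamicAllocation.maxExecutors": 100,
    }
)
print(params)
```

The resulting string could then be passed as the `spark_submit_parameters` argument, or to `--spark-submit-parameters` on the command line, in place of the hand-written value.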

We welcome contributions to EMRFlow. Please open an issue discussing the change you would like to see. Create a feature branch to work on that issue and open a Pull Request once it is ready for review.

We use black as a code formatter. The easiest way to ensure your commits are always formatted with the correct version of black is to use pre-commit: install it, then run pre-commit install once in your local copy of the repo.