
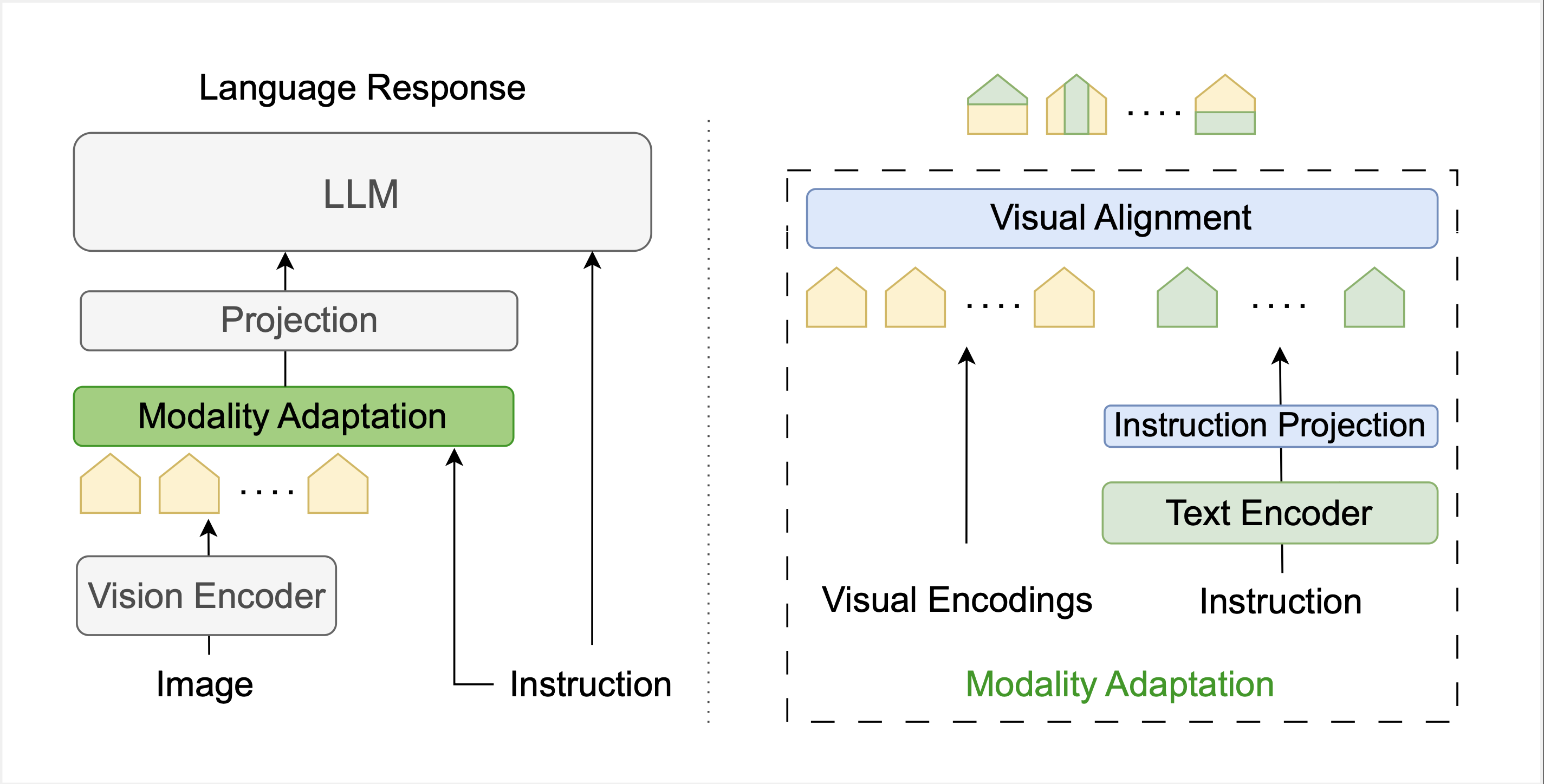

We introduce EMMA (Efficient Multi-Modal Adaptation), which performs modality fusion through a lightweight modality adaptation mechanism.
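This section does not spell out how EMMA's adaptation module works. The sketch below is only a rough mental model of instruction-conditioned fusion: each visual token attends over the instruction-token embeddings with scaled dot-product attention, and the attended summary is added back as a residual. Everything in it is a hypothetical stand-in. That includes the name `refine_visual_tokens`, the toy 2-d vectors, and the omission of learned projections (a real adapter would need those to have any trainable parameters). It is not EMMA's architecture.

```python
import math
from typing import List

Vector = List[float]


def softmax(scores: List[float]) -> List[float]:
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def dot(a: Vector, b: Vector) -> float:
    return sum(x * y for x, y in zip(a, b))


def refine_visual_tokens(visual: List[Vector], text: List[Vector]) -> List[Vector]:
    """Hypothetical instruction-conditioned refinement (not EMMA's module).

    Each visual token attends over the instruction tokens with scaled
    dot-product attention; the attended text summary is added back to the
    visual token as a residual update. Learned projections are omitted.
    """
    dim = len(visual[0])
    scale = 1.0 / math.sqrt(dim)
    refined = []
    for v in visual:
        weights = softmax([dot(v, t) * scale for t in text])
        # Attention-weighted sum of instruction embeddings for this visual token.
        summary = [sum(w * t[i] for w, t in zip(weights, text)) for i in range(dim)]
        refined.append([v[i] + summary[i] for i in range(dim)])
    return refined


if __name__ == "__main__":
    visual_tokens = [[1.0, 0.0], [0.0, 1.0]]
    instruction_tokens = [[1.0, 0.0]]
    print(refine_visual_tokens(visual_tokens, instruction_tokens))
```

With a single instruction token, every visual token puts all of its attention on that token. Both visual tokens are therefore shifted by the same instruction embedding. With several instruction tokens, the shift depends on which instruction tokens each visual token matches best.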

Our contributions can be summarized as follows:

Efficient Modality Adaptation: We introduce a lightweight modality adaptation mechanism that refines visual representations with less than a 0.2% increase in model size, maintaining high efficiency without compromising performance.

-

Comprehensive Analysis of Visual Alignment: We conduct an in-depth investigation of the Visual Alignment module to provide (1) a detailed understanding of how visual and textual tokens are integrated and (2) an analysis of how effectively the aligned visual representations attend to instructions compared to the initial raw visual encodings.

-

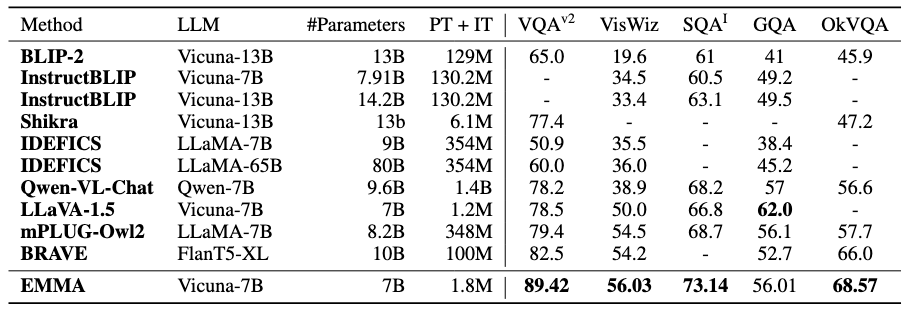

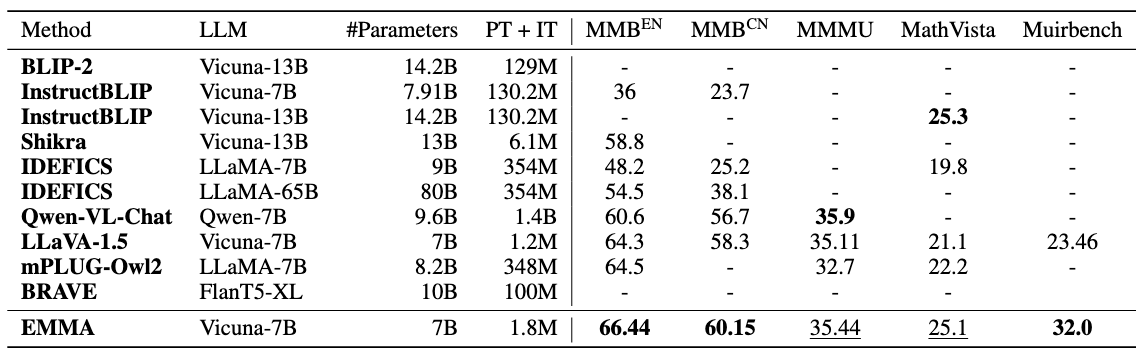

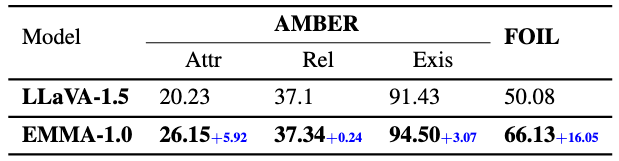

Extensive Empirical Evaluation: We perform a comprehensive evaluation on both general and MLLM-specialized benchmarks, demonstrating that EMMA significantly improves cross-modal alignment, boosts task performance, and enhances the robustness of multi-modal LLMs.

-

EMMA Outperforms Larger Models: Compared to mPLUG-Owl2 which has

$50\times$ larger modality adaptation module and is trained on$300\times$ more data, EMMA outperforms on 7 of 8 benchmarks. Additionally, compared with BRAVE which has$24\times$ larger vision encoder and is trained on$100\times$ more data EMMA outperforms on all benchmarks.
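The "less than a 0.2% increase in model size" figure from the first contribution can be made concrete with simple parameter arithmetic. In this sketch the ~7B base size is an assumption inferred from the `emma-7b` checkpoint name. The adapter shapes are hypothetical and are not EMMA's reported parameter counts.

```python
def overhead_percent(adapter_params: int, base_params: int) -> float:
    """Percentage increase in model size from adding an adapter."""
    return 100.0 * adapter_params / base_params


def max_adapter_params(base_params: int, budget_percent: float) -> int:
    """Largest adapter size (in parameters) that stays within budget_percent."""
    return int(base_params * budget_percent / 100.0)


def linear_params(d_in: int, d_out: int, bias: bool = True) -> int:
    """Parameter count of a dense layer: weight matrix plus optional bias."""
    return d_in * d_out + (d_out if bias else 0)


if __name__ == "__main__":
    BASE = 7_000_000_000  # assumption: ~7B parameters, from the checkpoint name
    print(max_adapter_params(BASE, 0.2))  # parameter budget at 0.2%
    # Hypothetical adapter: a single 4096 -> 1024 dense layer.
    adapter = linear_params(4096, 1024)
    print(adapter, round(overhead_percent(adapter, BASE), 4))
```

The same arithmetic shows how tight this budget is. A single square dense layer at width 4096 (the hidden size of common 7B LLaMA-family models) has 16,781,312 parameters, about 0.24% of 7B, so on its own it would already exceed 0.2%.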

- General Benchmarks

- MLLM Specialized Benchmarks

- Hallucinations

- Pretraining: LLaVA PT data

- Instruction Tuning: LLaVA IT data + LVIS-Instruct4V + CLEVR + VizWiz + ScienceQA

- Clone the project

```shell
git clone https://github.com/SaraGhazanfari/EMMA.git
```

- Download the checkpoint and move its BPE vocabulary file into `llava/model/multimodal_encoder/`

```shell
cd EMMA
git clone https://huggingface.co/saraghznfri/emma-7b
mv emma-7b/bpe_simple_vocab_16e6.txt.gz llava/model/multimodal_encoder/
```
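After the `mv` step, you can confirm that the BPE vocabulary file landed where the code expects it. The helper below is a hypothetical convenience script and is not part of the repository. It assumes only the relative path used in the `mv` command, and it should be run from the `EMMA` directory. It also tries to read a few bytes as gzip, which catches empty, truncated, or non-gzip files.

```python
import gzip
from pathlib import Path

# Relative path taken from the `mv` command in the setup steps.
VOCAB_PATH = Path("llava/model/multimodal_encoder/bpe_simple_vocab_16e6.txt.gz")


def vocab_in_place(repo_root: Path = Path(".")) -> bool:
    """Return True if the BPE vocab exists, is non-empty, and reads as gzip."""
    target = repo_root / VOCAB_PATH
    if not target.is_file() or target.stat().st_size == 0:
        return False
    try:
        # Reading a few bytes fails fast if the file is not valid gzip data.
        with gzip.open(target, "rb") as fh:
            fh.read(16)
    except (OSError, EOFError):
        return False
    return True


if __name__ == "__main__":
    status = "OK" if vocab_in_place() else "Missing or invalid"
    print(f"{status}: {VOCAB_PATH}")
```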

We build our project on LLaVA, an amazing open-source vision-language assistant.