(Unofficial) Implementation of FSD50K [1] baselines for pytorch using pytorch-lightning

FSD50K is a human-labelled dataset for sound event recognition, with labels spanning the AudioSet [2] ontology.

Although AudioSet is significantly larger, its balanced subset, which has sufficiently good labelling accuracy, is smaller and is difficult to acquire since the official release only contains YouTube video IDs.

More information on FSD50K can be found in the paper and the dataset page.

- Objective is to provide a quick starting point for training

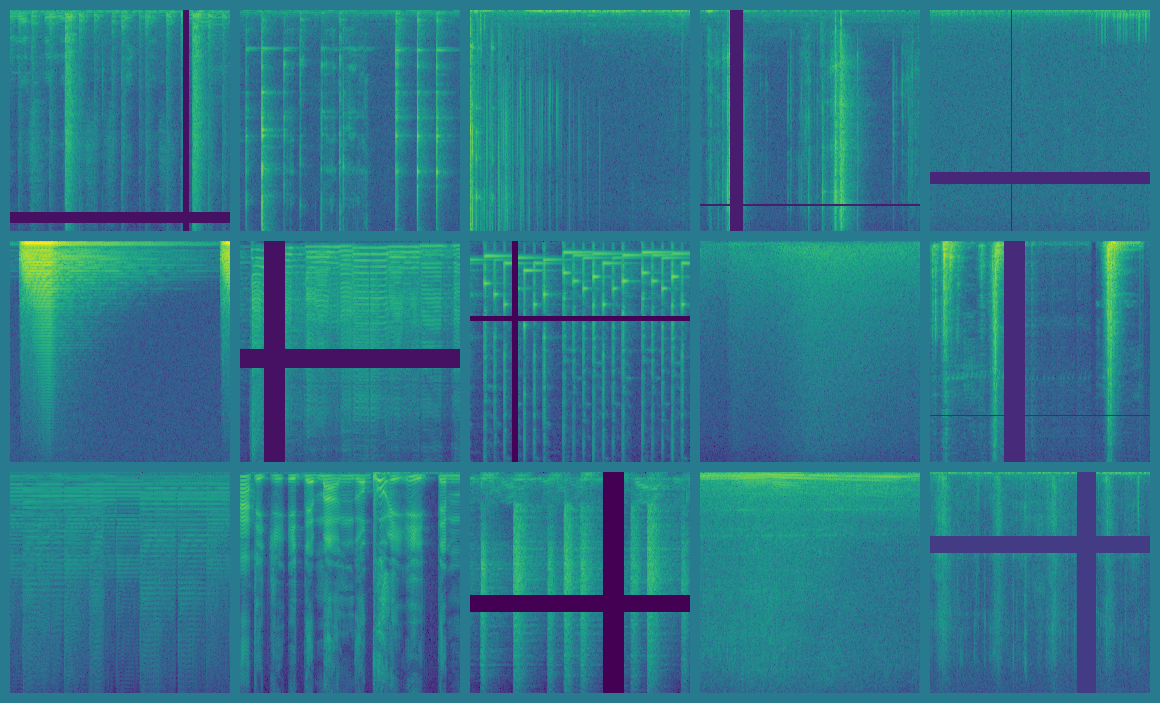

VGG-like,ResNet-18,CRNNandDenseNet-121baselines as given in the FSD50K paper. - Includes preprocessing steps following the paper's experimentation protocol, including patching methodology (Section 5.B)

- Support for both spectrograms and melspectrogram features (just change "feature" under "audio_config" in cfg file)

- melspectrogram setting has the exact parameters as given in the paper, spectrogram can be configured

- FP16, multi-gpu support

- SpecAugment and background noise injection were used during training

- As opposed to the paper, batch sizes `> 64` were used for optimal GPU utilization.

- For faster training, pytorch defaults for the Adam optimizer were used. Learning rates given in the paper are much lower and will require you to train for much longer.

- Per-instance zero-centering was used
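The per-instance zero-centering and SpecAugment-style masking listed above can be illustrated with a minimal, dependency-free sketch. The function names, the mask widths, and the list-of-lists spectrogram layout are assumptions made for illustration; they are not taken from this repo's code, which may order or parameterize these steps differently.

```python
import random
from statistics import fmean


def zero_center(spec):
    """Subtract the mean of this one spectrogram (per instance, not dataset-wide)."""
    mean = fmean(v for row in spec for v in row)
    return [[v - mean for v in row] for row in spec]


def mask_band(spec, max_width, axis, rng):
    """Zero one random contiguous band along time (axis=0) or frequency (axis=1)."""
    n_frames, n_bins = len(spec), len(spec[0])
    size = n_frames if axis == 0 else n_bins
    width = rng.randint(0, min(max_width, size))  # hypothetical width limit
    start = rng.randint(0, size - width)
    out = [row[:] for row in spec]  # copy so the input is left untouched
    for t in range(n_frames):
        for f in range(n_bins):
            idx = t if axis == 0 else f
            if start <= idx < start + width:
                out[t][f] = 0.0
    return out


rng = random.Random(0)
spec = [[float(t + f) for f in range(4)] for t in range(6)]  # toy 6 frames x 4 bins
centered = zero_center(spec)
# One time mask followed by one frequency mask, as in SpecAugment-style policies.
augmented = mask_band(mask_band(centered, 2, axis=0, rng=rng), 1, axis=1, rng=rng)
```

In this sketch the masks are applied after centering, so a masked cell equals the instance mean (zero) rather than an arbitrary constant.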

| Model | Features | cfg | Official mAP, d' | This repo mAP, d' | Link |
|---|---|---|---|---|---|
| CRNN | melspectrograms | crnn_chunks_melspec.cfg | 0.417, 2.068 | 0.40, 2.08 | checkpoint |
| ResNet-18 | melspectrograms | resnet_chunks_melspec.cfg | 0.373, 1.883 | 0.400, 1.905 | checkpoint |
| VGG-like | melspectrograms | vgglike_chunks_melspec.cfg | 0.434, 2.167 | 0.408, 2.055 | checkpoint |
| DenseNet-121 | melspectrograms | densenet121_chunks_melspec.cfg | 0.425, 2.112 | 0.432, 2.016 | checkpoint |
| ResNet-18 | spectrograms (length=0.02 ms, stride=0.01 ms) | resnet_chunks.cfg | - | 0.420, 1.946 | checkpoint |
| VGG-like | spectrograms (length=0.02 ms, stride=0.01 ms) | vgglike_chunks.cfg | - | 0.388, 2.021 | checkpoint |

- As previously stated, ideally you'd want to run on the exact batch size and learning rates if your goal is exact reproduction. This implementation intends to be a good starting point!
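For readers unfamiliar with the two metrics in the table, here is a small sketch of how they are commonly computed. It assumes the usual definitions: d' derived from ROC AUC as `sqrt(2) * Phi^-1(AUC)`, and mAP as the unweighted mean of per-class average precision. The function names are hypothetical, and the repo's evaluation script may rely on a library implementation instead.

```python
from math import sqrt
from statistics import NormalDist


def d_prime(auc):
    """Convert ROC AUC (strictly between 0 and 1) to d' = sqrt(2) * Phi^-1(AUC)."""
    return sqrt(2.0) * NormalDist().inv_cdf(auc)


def average_precision(scores, labels):
    """AP for one class: mean of precision@k over the ranks k of the positives."""
    ranked = sorted(zip(scores, labels), key=lambda pair: pair[0], reverse=True)
    hits, precisions = 0, []
    for rank, (_, label) in enumerate(ranked, start=1):
        if label:
            hits += 1
            precisions.append(hits / rank)
    return sum(precisions) / len(precisions) if precisions else 0.0


def mean_average_precision(per_class_scores, per_class_labels):
    """Macro-average: every class contributes equally, regardless of its frequency."""
    aps = [average_precision(s, l) for s, l in zip(per_class_scores, per_class_labels)]
    return sum(aps) / len(aps)
```

A chance-level AUC of 0.5 maps to d' = 0, and d' grows without bound as AUC approaches 1, which is why small AUC differences near the top show up more clearly in d'.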

Requirements:

- `torch==1.7.1` and corresponding `torchaudio` from official pytorch

- `libsndfile-dev` from OS repos, for `SoundFile==0.10.3`

- `requirements.txt`

- Download FSD50K dataset and extract files, separating `dev` and `eval` files into different subdirectories.

- Preprocessing

  - `multiproc_resample.py` to convert sample rate of audio files to 22050 Hz

    - `python multiproc_resample.py --src_path <path_to_dev> --dst_path <path_to_dev_22050>`

    - `python multiproc_resample.py --src_path <path_to_eval> --dst_path <path_to_eval_22050>`

  - `chunkify_fsd50k.py` to make chunks from dev and eval files as per Section 5.B of the paper. One can do this on the fly as well.

    - `python chunkify_fsd50k.py --src_dir <path_to_dev_22050> --tgt_dir <path_to_dev_22050_chunks>`

    - `python chunkify_fsd50k.py --src_dir <path_to_eval_22050> --tgt_dir <path_to_eval_22050_chunks>`

  - `make_chunks_manifests.py` to make manifest files used for training. This will store absolute file paths in the generated tr, val and eval csv files.

    - `python make_chunks_manifests.py --dev_csv <FSD50k_DIR/FSD50K.ground_truth/dev.csv> --eval_csv <FSD50k_DIR/FSD50K.ground_truth/eval.csv> --dev_chunks_dir <path_to_dev_22050_chunks> --eval_chunks_dir <path_to_eval_22050_chunks> --output_dir <chunks_meta_dir>`

- The `noise` subset of the Musan Corpus is used for background noise augmentation. Extract all `noise` files into a single directory and resample them to 22050 Hz. Background noise augmentation can be switched off by removing the `bg_files` entry in the model cfg.

- Training and validation

  - For ResNet18 baseline, run `python train_fsd50k_chunks.py --cfg_file ./cfgs/resnet18_chunks_melspec.cfg -e <EXP_ROOT/resnet18_adam_256_bg_aug_melspec> --epochs 100`

  - For VGG-like, run `python train_fsd50k_chunks.py --cfg_file ./cfgs/vgglike_chunks_melspec.cfg -e <EXP_ROOT/vgglike_adam_256_bg_aug_melspec> --epochs 100`

- Evaluation on the evaluation set

  - `python eval_fsd50k.py --ckpt_path <path to .ckpt file> --eval_csv <chunks_meta_dir/eval.csv> --lbl_map <chunks_meta_dir/lbl_map.json>`
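The chunking step in the preprocessing instructions above can be pictured with a short sketch. It assumes fixed-length chunks with overlapping hops, and that clips shorter than one chunk are repeated until they fill it; the 1-second length and 50% overlap in the usage lines are assumptions based on my reading of Section 5.B, so check the paper and `chunkify_fsd50k.py` for the actual values. The function name and the handling of trailing samples are illustrative only.

```python
def chunkify(samples, chunk_len, hop):
    """Split a clip into fixed-length chunks; repeat short clips to fill one chunk."""
    if not samples:
        return []
    if len(samples) < chunk_len:
        reps = -(-chunk_len // len(samples))  # ceiling division
        return [(samples * reps)[:chunk_len]]
    # Trailing samples that do not fill a whole chunk are dropped in this sketch;
    # the repo's script may treat them differently.
    last_start = len(samples) - chunk_len
    return [samples[s:s + chunk_len] for s in range(0, last_start + 1, hop)]


sample_rate = 22050                    # matches the resampling step above
chunk_len = sample_rate                # assumed: 1-second chunks
hop = sample_rate // 2                 # assumed: 50% overlap
clip = [0.0] * int(2.5 * sample_rate)  # toy 2.5-second silent clip
chunks = chunkify(clip, chunk_len, hop)
```

Every chunk inherits the clip-level labels, which is why the manifest step runs after chunking.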

- Try more augmentations, such as Random Resized cropping

- Try weighted BinaryCrossEntropy to help with the significant label imbalance.

- Use Focal loss [3] instead of BinaryCrossEntropy
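As a starting point for the last two ideas, the sketch below shows both losses for a single label in plain Python. The `pos_weight` argument mirrors the parameter of the same name in PyTorch's `BCEWithLogitsLoss`, and the `gamma=2.0`, `alpha=0.25` defaults follow the values reported in [3]. The function names are hypothetical, and neither function is numerically safe for extreme logits, so a framework implementation is preferable in real training code.

```python
from math import exp, log


def sigmoid(x):
    return 1.0 / (1.0 + exp(-x))


def weighted_bce(logit, target, pos_weight=1.0):
    """Binary cross-entropy where the positive term is scaled by pos_weight."""
    p = sigmoid(logit)
    return -(pos_weight * target * log(p) + (1 - target) * log(1 - p))


def focal_loss(logit, target, gamma=2.0, alpha=0.25):
    """Focal loss: -alpha_t * (1 - p_t)**gamma * log(p_t), for target in {0, 1}."""
    p = sigmoid(logit)
    p_t = p if target == 1 else 1 - p            # probability of the true class
    alpha_t = alpha if target == 1 else 1 - alpha
    return -alpha_t * (1 - p_t) ** gamma * log(p_t)


easy = focal_loss(4.0, 1)   # confident and correct: heavily down-weighted
hard = focal_loss(-4.0, 1)  # confident and wrong: keeps most of its loss
```

With `gamma=0` and `alpha=0.5` the focal loss reduces to half the unweighted binary cross-entropy, which is a convenient sanity check when swapping one loss for the other.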

Special thanks to Eduardo Fonseca for answering my queries and for providing a more comprehensive explanation of the baseline protocol in [1].

[1] Fonseca, E., Favory, X., Pons, J., Font, F. and Serra, X., 2020. FSD50K: an open dataset of human-labeled sound events. arXiv preprint arXiv:2010.00475.

[2] Gemmeke, J.F., Ellis, D.P., Freedman, D., Jansen, A., Lawrence, W., Moore, R.C., Plakal, M. and Ritter, M., 2017, March. Audio set: An ontology and human-labeled dataset for audio events. In 2017 IEEE International Conference on Acoustics, Speech and Signal Processing (ICASSP) (pp. 776-780). IEEE.

[3] Lin, T.Y., Goyal, P., Girshick, R., He, K. and Dollár, P., 2017. Focal loss for dense object detection. In Proceedings of the IEEE international conference on computer vision (pp. 2980-2988).