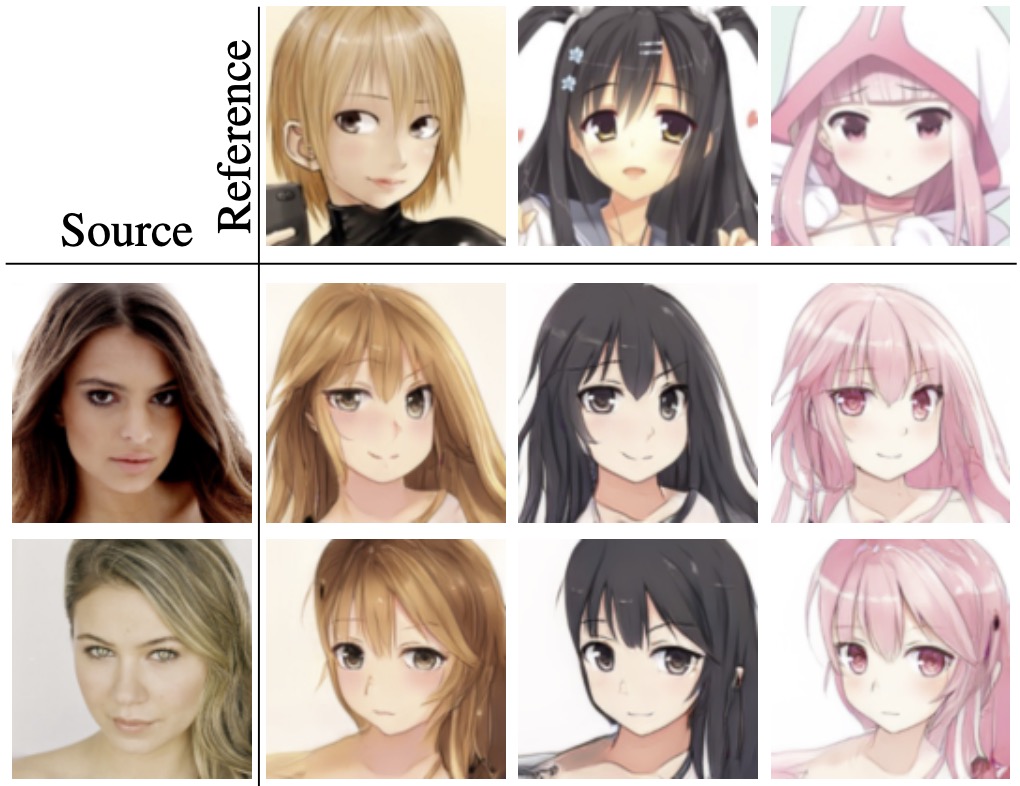

AniGAN: Style-Guided Generative Adversarial Networks for Unsupervised Anime Face Generation

Bing Li¹, Yuanlue Zhu², Yitong Wang², Chia-Wen Lin³, Bernard Ghanem¹, Linlin Shen⁴

¹ Visual Computing Center, KAUST, Thuwal, Saudi Arabia

² ByteDance, Shenzhen, China

³ Department of Electrical Engineering, National Tsing Hua University, Hsinchu, Taiwan

⁴ Computer Science and Software Engineering, Shenzhen University, Shenzhen, China

We build a new dataset called face2anime, which is larger and contains more diverse anime styles (e.g., face poses, drawing styles, colors, hairstyles, eye shapes, strokes, facial contours) than selfie2anime. The face2anime dataset contains 17,796 images in total: 8,898 anime faces and 8,898 natural photo faces. The anime faces are collected from the Danbooru2019 dataset, which contains many anime characters in a wide variety of styles. We employ a pretrained cartoon face detector to select images containing anime faces. For the natural faces, we randomly select 8,898 female faces from the CelebA-HQ dataset. All images are aligned using facial landmarks and cropped to 128 × 128 pixels. We split the images from each domain into a training set of 8,000 images and a test set of 898 images.
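
To make the split arithmetic concrete, here is a minimal Python sketch of a per-domain train/test split with the counts stated above. The file names, the `split_domain` helper, and the seeded shuffle are hypothetical and only for illustration; the released archive may ship with its own fixed split, which should be preferred when reproducing results.

```python
import random

# Counts stated in the dataset description.
IMAGES_PER_DOMAIN = 8898
TRAIN_PER_DOMAIN = 8000
TEST_PER_DOMAIN = 898


def split_domain(paths, n_train=TRAIN_PER_DOMAIN, n_test=TEST_PER_DOMAIN, seed=0):
    """Shuffle one domain's image paths and split them into train/test lists.

    The seed and the shuffling are assumptions for illustration; the split
    actually used by the authors is not specified in this description.
    """
    paths = sorted(paths)  # fixed starting order so the shuffle is reproducible
    if len(paths) != n_train + n_test:
        raise ValueError(f"expected {n_train + n_test} images, got {len(paths)}")
    rng = random.Random(seed)
    rng.shuffle(paths)
    return paths[:n_train], paths[n_train:]


# Hypothetical file names standing in for the real images.
anime = [f"anime/{i:05d}.png" for i in range(IMAGES_PER_DOMAIN)]
photo = [f"photo/{i:05d}.png" for i in range(IMAGES_PER_DOMAIN)]

anime_train, anime_test = split_domain(anime)
photo_train, photo_test = split_domain(photo)
total = len(anime) + len(photo)  # both domains together
```

Splitting each domain separately keeps the two domains balanced in both the training and test sets, matching the 8,000 / 898 per-domain figures in the description.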

You can download the face2anime dataset from Google Drive.

If you find this work useful or use the face2anime dataset, please cite our paper:

@misc{li2021anigan,
  title={AniGAN: Style-Guided Generative Adversarial Networks for Unsupervised Anime Face Generation},
  author={Bing Li and Yuanlue Zhu and Yitong Wang and Chia-Wen Lin and Bernard Ghanem and Linlin Shen},
  year={2021},
  eprint={2102.12593},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}