This repository benchmarks text-to-image diffusion models, covering both community models and academic models. We use COCO Caption and DrawBench as prompt sets to evaluate the models' basic and advanced text-to-image generation capabilities.

The metrics considered in this repo are:

- CLIPScore, which measures text-image alignment

- Improved Aesthetic Predictor, which measures how aesthetically pleasing an image is

- ImageReward, which predicts how humans would rate an image

- Human Preference Score (HPS), which predicts human preference for an image

- X-IQE, a comprehensive and explainable metric based on a visual LLM (MiniGPT-4)
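As an illustration of the first metric: CLIPScore, as defined by Hessel et al. (2021), rescales the cosine similarity between a CLIP text embedding and a CLIP image embedding as `w * max(cos, 0)` with `w = 2.5`. The sketch below assumes the two embeddings have already been produced by a CLIP model; this README does not say which CLIP backbone or rescaling weight the benchmark uses, so the toy vectors are illustrative only.

```python
import math
from typing import Sequence


def clip_score(text_emb: Sequence[float], image_emb: Sequence[float], w: float = 2.5) -> float:
    """CLIPScore: w * max(cos(text, image), 0).

    Embeddings are assumed to come from the same CLIP model's text and image
    encoders; computing them is outside this sketch.
    """
    dot = sum(t * v for t, v in zip(text_emb, image_emb))
    norm_t = math.sqrt(sum(t * t for t in text_emb))
    norm_v = math.sqrt(sum(v * v for v in image_emb))
    cos = dot / (norm_t * norm_v)
    return w * max(cos, 0.0)  # negative similarities are clipped to 0


# Toy 3-d vectors standing in for real CLIP embeddings.
print(clip_score([1.0, 0.0, 0.0], [1.0, 1.0, 0.0]))   # 2.5 * cos(45 deg), about 1.768
print(clip_score([1.0, 0.0, 0.0], [-1.0, 0.0, 0.0]))  # 0.0
```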

The weighted average score is calculated as:

CLIPScore * 15% + AestheticPred * 15% + ImageReward * 20% + HPS * 20% + (X-IQE Fidelity + X-IQE Alignment + X-IQE Aesthetics) * 10%

where each score is normalized to [0, 1] before weighting, so the weighted average score also lies in [0, 1]; higher is better. We hope this project helps with your study and research.
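The weighting can be sketched in Python. The README does not say how scores are normalized; this sketch assumes min-max normalization of each metric across the evaluated models, a choice that reproduces the published COCO Caption weighted averages to about three decimals (e.g., 0.865 for DeepFloyd-IF-XL, 0.599 for Stable Diffusion 1.4, and 0.103 for VQ Diffusion). The rows are copied from the COCO Caption results table in this README; `min_max` and `weighted_scores` are hypothetical helper names.

```python
from typing import Dict, List, Tuple

# Weights from the formula: CLIPScore, Aesthetic Predictor, ImageReward, HPS,
# then the three X-IQE sub-scores (Fidelity, Alignment, Aesthetics) at 10% each.
WEIGHTS = [0.15, 0.15, 0.20, 0.20, 0.10, 0.10, 0.10]

Row = Tuple[str, float, float, float, float, float, float, float]

# COCO Caption results from this README (raw, unnormalized values).
COCO: List[Row] = [
    ("DeepFloyd-IF-XL", 0.828, 5.26, 0.703, 0.1994, 5.55, 3.52, 5.79),
    ("Realistic Vision", 0.825, 5.44, 0.551, 0.2023, 5.36, 3.39, 5.87),
    ("Dreamlike Photoreal 2.0", 0.824, 5.47, 0.399, 0.2021, 5.50, 3.33, 5.78),
    ("Stable Diffusion 2.1", 0.831, 5.42, 0.472, 0.1988, 5.52, 3.45, 5.77),
    ("Deliberate", 0.827, 5.41, 0.517, 0.2024, 5.35, 3.34, 5.81),
    ("MajicMix Realistic", 0.828, 5.60, 0.477, 0.2015, 5.27, 3.10, 5.86),
    ("ChilloutMix", 0.820, 5.46, 0.433, 0.2008, 5.40, 3.05, 5.87),
    ("Openjourney", 0.806, 5.38, 0.244, 0.1990, 5.44, 3.37, 5.96),
    ("Epic Diffusion", 0.810, 5.30, 0.265, 0.1982, 5.54, 3.31, 5.71),
    ("Stable Diffusion 1.5", 0.808, 5.22, 0.242, 0.1974, 5.48, 3.31, 5.79),
    ("Stable Diffusion 1.4", 0.803, 5.22, 0.104, 0.1966, 5.47, 3.29, 5.76),
    ("DALL·E mini", 0.807, 4.72, -0.025, 0.1901, 5.52, 2.96, 5.78),
    ("Versatile Diffusion", 0.779, 5.19, -0.245, 0.1927, 5.59, 2.97, 5.72),
    ("Latent Diffusion", 0.795, 4.40, -0.587, 0.1881, 5.42, 2.71, 5.58),
    ("VQ Diffusion", 0.782, 4.62, -0.618, 0.1889, 5.40, 2.83, 5.39),
]


def min_max(column: List[float]) -> List[float]:
    """Rescale one metric to [0, 1] relative to the other models (assumed scheme)."""
    lo, hi = min(column), max(column)
    if hi == lo:  # every model tied on this metric
        return [0.0] * len(column)
    return [(x - lo) / (hi - lo) for x in column]


def weighted_scores(rows: List[Row]) -> Dict[str, float]:
    # Normalize each metric column independently across all models.
    columns = [min_max([row[i + 1] for row in rows]) for i in range(len(WEIGHTS))]
    return {
        row[0]: sum(w * col[j] for w, col in zip(WEIGHTS, columns))
        for j, row in enumerate(rows)
    }


scores = weighted_scores(COCO)
for name, score in sorted(scores.items(), key=lambda kv: kv[1], reverse=True):
    print(f"{name:24s} {score:.3f}")
```

Because the table inputs are rounded, models whose published averages differ by only a few thousandths may swap places in the printed order.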

We randomly sample 1000 captions from the COCO Caption dataset (2014). This prompt set mainly contains common human, animal, object, and scene descriptions. It can evaluate the diffusion models' basic generation ability.
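Drawing such a prompt set from a COCO-format captions file might look like the sketch below. COCO caption files store their captions under `"annotations"`, each entry carrying a `"caption"` string. The README does not state which 2014 split, random seed, or deduplication policy was used, so `sample_coco_captions`, its seed default, and any file path are hypothetical.

```python
import json
import random
from typing import List


def sample_coco_captions(annotation_file: str, k: int = 1000, seed: int = 0) -> List[str]:
    """Randomly sample k captions from a COCO-format captions JSON file (hypothetical helper)."""
    with open(annotation_file, encoding="utf-8") as f:
        data = json.load(f)
    # Each annotation holds one caption; strip stray whitespace around it.
    captions = [ann["caption"].strip() for ann in data["annotations"]]
    rng = random.Random(seed)  # fixed seed keeps the prompt set reproducible
    return rng.sample(captions, k)
```

Usage, with an assumed path: `prompts = sample_coco_captions("annotations/captions_val2014.json", k=1000)`.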

Examples:

- A man standing in a snowy forest on his skis.

- A toilet is in a large room with a magazine rack, sink, and tub.

- Four large elephants are watching over their baby in the wilderness.

- A street vendor displaying various pieces of luggage for sale.

- White commuter train traveling in rural hillside area.

| Rank | Model | Weighted Avg. Score | CLIP | Aes. Pred. | ImageReward | HPS | X-IQE Fidelity | X-IQE Alignment | X-IQE Aesthetics | X-IQE Overall |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | DeepFloyd-IF-XL | 0.865 | 0.828 | 5.26 | 0.703 | 0.1994 | 5.55 | 3.52 | 5.79 | 14.86 |
| 2 | Realistic Vision<sup>SD</sup> | 0.835 | 0.825 | 5.44 | 0.551 | 0.2023 | 5.36 | 3.39 | 5.87 | 14.62 |
| 3 | Dreamlike Photoreal 2.0<sup>SD</sup> | 0.830 | 0.824 | 5.47 | 0.399 | 0.2021 | 5.50 | 3.33 | 5.78 | 14.61 |
| 4 | Stable Diffusion 2.1 | 0.828 | 0.831 | 5.42 | 0.472 | 0.1988 | 5.52 | 3.45 | 5.77 | 14.74 |
| 5 | Deliberate<sup>SD</sup> | 0.813 | 0.827 | 5.41 | 0.517 | 0.2024 | 5.35 | 3.34 | 5.81 | 14.50 |
| 6 | MajicMix Realistic<sup>SD</sup> | 0.775 | 0.828 | 5.60 | 0.477 | 0.2015 | 5.27 | 3.10 | 5.86 | 14.23 |
| 7 | ChilloutMix<sup>SD</sup> | 0.754 | 0.820 | 5.46 | 0.433 | 0.2008 | 5.40 | 3.05 | 5.87 | 14.32 |
| 8 | Openjourney<sup>SD</sup> | 0.718 | 0.806 | 5.38 | 0.244 | 0.1990 | 5.44 | 3.37 | 5.96 | 14.77 |
| 9 | Epic Diffusion<sup>SD</sup> | 0.691 | 0.810 | 5.30 | 0.265 | 0.1982 | 5.54 | 3.31 | 5.71 | 14.56 |
| 10 | Stable Diffusion 1.5 | 0.656 | 0.808 | 5.22 | 0.242 | 0.1974 | 5.48 | 3.31 | 5.79 | 14.58 |
| 11 | Stable Diffusion 1.4 | 0.599 | 0.803 | 5.22 | 0.104 | 0.1966 | 5.47 | 3.29 | 5.76 | 14.52 |
| 12 | DALL·E mini | 0.416 | 0.807 | 4.72 | -0.025 | 0.1901 | 5.52 | 2.96 | 5.78 | 14.26 |
| 13 | Versatile Diffusion | 0.410 | 0.779 | 5.19 | -0.245 | 0.1927 | 5.59 | 2.97 | 5.72 | 14.28 |
| 14 | Latent Diffusion | 0.131 | 0.795 | 4.40 | -0.587 | 0.1881 | 5.42 | 2.71 | 5.58 | 13.71 |
| 15 | VQ Diffusion | 0.103 | 0.782 | 4.62 | -0.618 | 0.1889 | 5.40 | 2.83 | 5.39 | 13.62 |


On COCO Caption, the metrics largely agree with one another, and the rankings match our intuition about these text-to-image models (e.g., SD2.1 > SD1.5 > SD1.4). In addition, Openjourney achieves the highest X-IQE overall score among the models fine-tuned on SD, although it ranks lower on the weighted average.

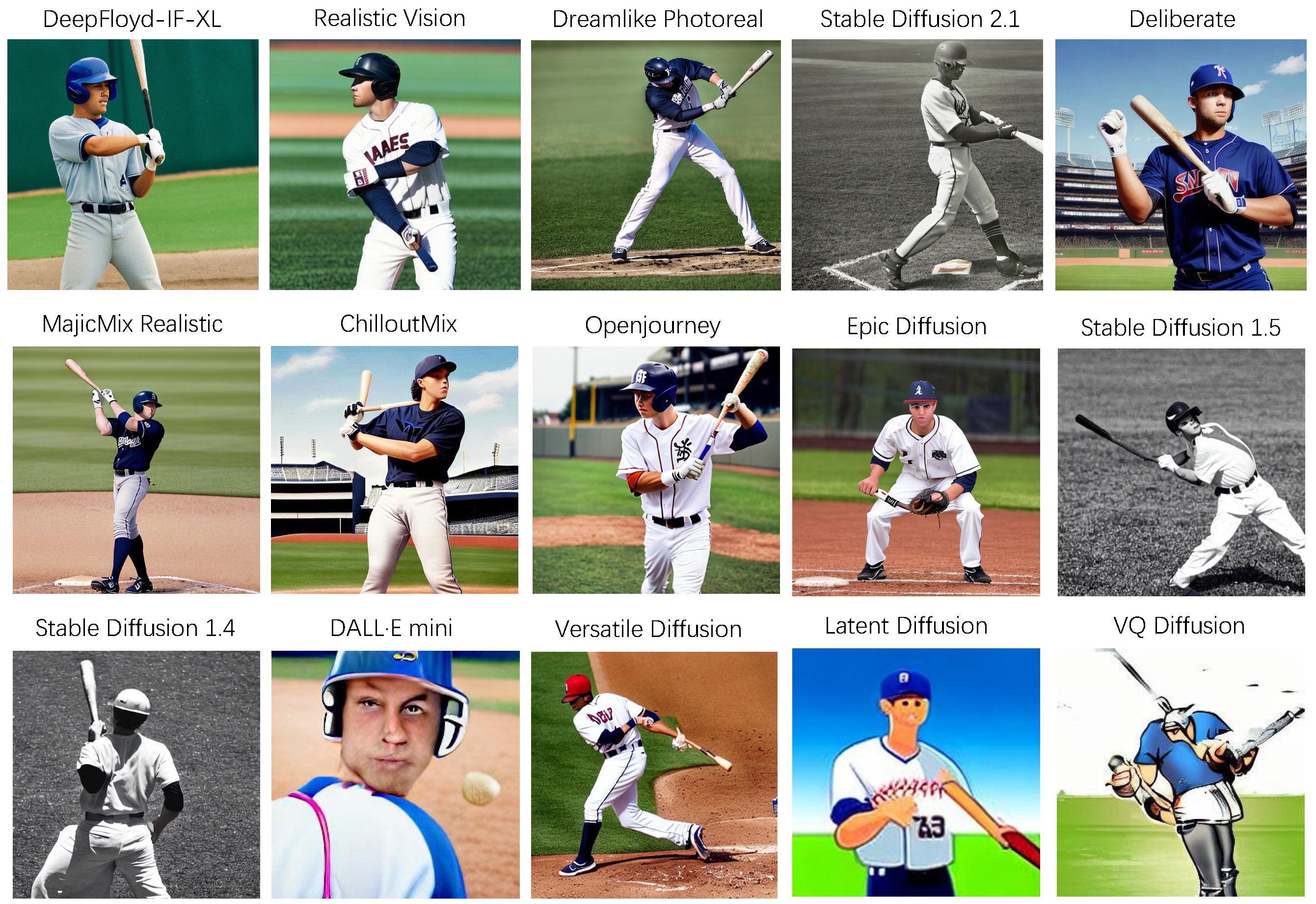

Prompt: A baseball player holding a bat on a field

We use all 200 prompts from DrawBench (2022). This prompt set mainly covers challenging cases and tests the diffusion models' advanced generation capabilities.

Examples:

- A pink colored giraffe. (abnormal color)

- A bird scaring a scarecrow. (abnormal scene)

- Five cars on the street. (counting)

- A connection point by which firefighters can tap into a water supply. (ambiguous description)

- A banana on the left of an apple. (position)

- Matutinal. (rare words)

- A storefront with 'Google Research Pizza Cafe' written on it. (text)

| Rank | Model | Weighted Avg. Score | CLIP | Aes. Pred. | ImageReward | HPS | X-IQE Fidelity | X-IQE Alignment | X-IQE Aesthetics | X-IQE Overall |
|---|---|---|---|---|---|---|---|---|---|---|
| 1 | Realistic Vision<sup>SD</sup> | 0.891 | 0.813 | 5.34 | 0.370 | 0.2009 | 5.43 | 2.79 | 5.58 | 13.80 |
| 2 | DeepFloyd-IF-XL | 0.871 | 0.827 | 5.10 | 0.54 | 0.1977 | 5.32 | 2.96 | 5.64 | 13.92 |
| 3 | Dreamlike Photoreal 2.0<sup>SD</sup> | 0.821 | 0.815 | 5.40 | 0.260 | 0.2000 | 5.36 | 2.80 | 5.35 | 13.51 |
| 4 | Deliberate<sup>SD</sup> | 0.812 | 0.815 | 5.40 | 0.519 | 0.2016 | 5.21 | 2.75 | 5.28 | 13.24 |
| 5 | MajicMix Realistic<sup>SD</sup> | 0.762 | 0.802 | 5.49 | 0.258 | 0.2001 | 5.33 | 2.57 | 5.31 | 13.21 |
| 6 | ChilloutMix<sup>SD</sup> | 0.658 | 0.803 | 5.34 | 0.169 | 0.1987 | 5.07 | 2.60 | 5.37 | 13.04 |
| 7 | Stable Diffusion 1.4 | 0.624 | 0.793 | 5.09 | -0.029 | 0.1945 | 5.32 | 2.72 | 5.40 | 13.44 |
| 8 | Openjourney<sup>SD</sup> | 0.595 | 0.787 | 5.35 | 0.056 | 0.1972 | 5.14 | 2.62 | 5.21 | 12.97 |
| 9 | Stable Diffusion 1.5 | 0.594 | 0.795 | 5.14 | 0.072 | 0.1954 | 5.18 | 2.61 | 5.35 | 13.14 |
| 10 | Stable Diffusion 2.1 | 0.576 | 0.817 | 5.31 | 0.163 | 0.1955 | 5.10 | 2.50 | 5.04 | 12.64 |
| 11 | Epic Diffusion<sup>SD</sup> | 0.574 | 0.792 | 5.16 | 0.069 | 0.1951 | 5.14 | 2.63 | 5.32 | 13.09 |
| 12 | Versatile Diffusion | 0.417 | 0.756 | 5.08 | -0.489 | 0.1901 | 5.31 | 2.52 | 5.42 | 13.25 |
| 13 | DALL·E mini | 0.390 | 0.770 | 4.65 | -0.250 | 0.1895 | 5.41 | 2.33 | 5.31 | 13.05 |
| 14 | Latent Diffusion | 0.247 | 0.777 | 4.32 | -0.476 | 0.1877 | 5.25 | 2.29 | 5.24 | 12.78 |
| 15 | VQ Diffusion | 0.067 | 0.741 | 4.41 | -1.084 | 0.1856 | 5.16 | 2.35 | 5.17 | 12.68 |


On DrawBench prompts, the results are not consistent with those on COCO. Fine-tuning that resamples more common prompts (SD1.5, SD2.1, Openjourney) seems to hurt the models' ability on hard prompts: all three rank above SD1.4 on COCO but below it on DrawBench.
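One way to quantify how far the two rankings diverge is Spearman's rank correlation, rho = 1 - 6 * sum(d^2) / (n * (n^2 - 1)), where d is each model's rank difference. The sketch below applies it to the weighted-average ranks from the two results tables; it is an illustrative analysis, not part of the benchmark itself, and `spearman_rho` is a hypothetical helper that assumes tie-free rankings.

```python
from typing import Dict

# Weighted-average ranks from the COCO Caption and DrawBench tables.
COCO_RANK: Dict[str, int] = {
    "DeepFloyd-IF-XL": 1, "Realistic Vision": 2, "Dreamlike Photoreal 2.0": 3,
    "Stable Diffusion 2.1": 4, "Deliberate": 5, "MajicMix Realistic": 6,
    "ChilloutMix": 7, "Openjourney": 8, "Epic Diffusion": 9,
    "Stable Diffusion 1.5": 10, "Stable Diffusion 1.4": 11, "DALL·E mini": 12,
    "Versatile Diffusion": 13, "Latent Diffusion": 14, "VQ Diffusion": 15,
}
DRAWBENCH_RANK: Dict[str, int] = {
    "Realistic Vision": 1, "DeepFloyd-IF-XL": 2, "Dreamlike Photoreal 2.0": 3,
    "Deliberate": 4, "MajicMix Realistic": 5, "ChilloutMix": 6,
    "Stable Diffusion 1.4": 7, "Openjourney": 8, "Stable Diffusion 1.5": 9,
    "Stable Diffusion 2.1": 10, "Epic Diffusion": 11, "Versatile Diffusion": 12,
    "DALL·E mini": 13, "Latent Diffusion": 14, "VQ Diffusion": 15,
}


def spearman_rho(a: Dict[str, int], b: Dict[str, int]) -> float:
    """Spearman's rank correlation for two tie-free rankings of the same items."""
    n = len(a)
    d2 = sum((a[m] - b[m]) ** 2 for m in a)
    return 1 - 6 * d2 / (n * (n * n - 1))


rho = spearman_rho(COCO_RANK, DRAWBENCH_RANK)
# Positive shift = the model ranks lower (worse) on DrawBench than on COCO.
shifts = {m: DRAWBENCH_RANK[m] - COCO_RANK[m] for m in COCO_RANK}
print(f"Spearman rho = {rho:.3f}")  # 0.886
for m, s in sorted(shifts.items(), key=lambda kv: -abs(kv[1]))[:3]:
    print(f"{m}: {s:+d}")
```

The overall orderings still correlate fairly strongly; most of the disagreement comes from a few models, chiefly Stable Diffusion 2.1 (4th to 10th) and Stable Diffusion 1.4 (11th to 7th).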

Prompt: A large thick-skinned semiaquatic African mammal, with massive jaws and large tusks

We plan to add results for more models available on Hugging Face.

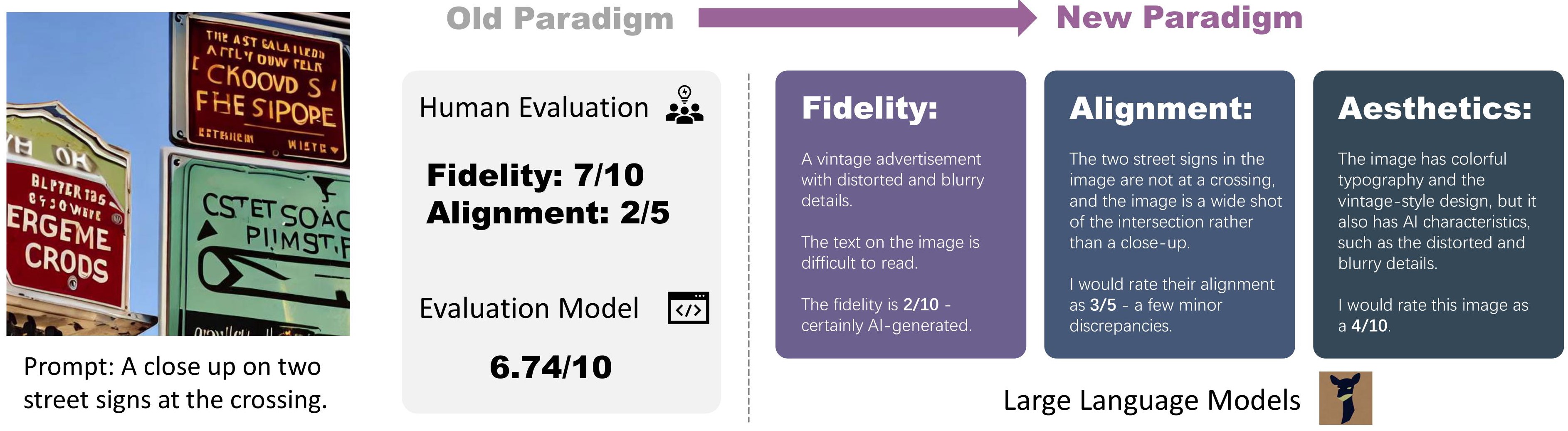

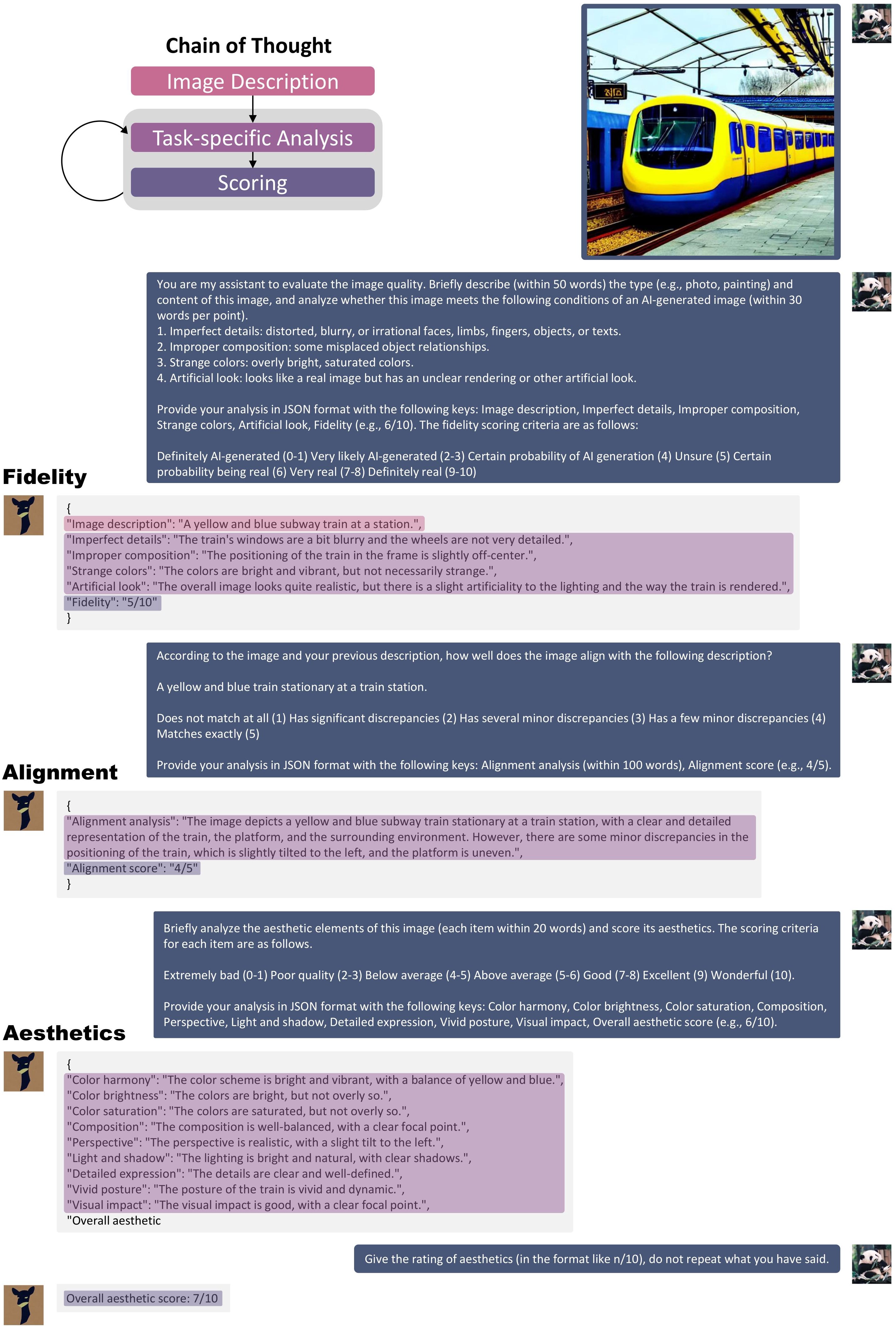

X-IQE is proposed in the paper *X-IQE: eXplainable Image Quality Evaluation for Text-to-Image Generation with Visual Large Language Models*, which leverages MiniGPT-4 to evaluate images generated by text-to-image diffusion models in an explainable way. It introduces a new paradigm: a carefully designed, step-by-step evaluation strategy that proceeds as fidelity -> text-image alignment -> aesthetics.
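The stage order can be sketched as a sequential conversation, assuming each stage's reply stays in the context for the next stage. This is a hypothetical outline, not the X-IQE implementation: the stage questions, the `Score: <number>` reply format, and the `ask` callable (a placeholder for a MiniGPT-4 call with the image attached) are all assumptions. The one detail taken from the tables in this README is that the X-IQE Overall column equals Fidelity + Alignment + Aesthetics.

```python
import re
from typing import Callable, Dict, List, Tuple

# Hypothetical stage questions in the README's order; the real X-IQE prompts differ.
STAGES: List[Tuple[str, str]] = [
    ("fidelity", "Assess whether the image looks realistic or shows generation "
                 "artifacts. End with 'Score: <number>'."),
    ("alignment", "Assess how well the image matches the prompt: '{prompt}'. "
                  "End with 'Score: <number>'."),
    ("aesthetics", "Assess the composition, color, and overall visual appeal. "
                   "End with 'Score: <number>'."),
]


def parse_score(reply: str) -> float:
    """Return the last 'Score: <number>' in a reply (assumed output format)."""
    matches = re.findall(r"score:\s*(\d+(?:\.\d+)?)", reply, flags=re.IGNORECASE)
    if not matches:
        raise ValueError(f"no score found in reply: {reply!r}")
    return float(matches[-1])


def evaluate(prompt: str, ask: Callable[[List[str]], str]) -> Dict[str, float]:
    """Run the stages in order, keeping earlier replies in the conversation.

    `ask` is a placeholder for a visual LLM call: it receives the
    conversation so far and returns the model's reply.
    """
    history: List[str] = []
    scores: Dict[str, float] = {}
    for name, template in STAGES:
        history.append(template.format(prompt=prompt))
        reply = ask(history)
        history.append(reply)  # later stages can condition on earlier reasoning
        scores[name] = parse_score(reply)
    # The tables' "Overall" column equals the sum of the three sub-scores.
    scores["overall"] = sum(scores[name] for name, _ in STAGES)
    return scores


# Demo: canned replies stand in for a real model.
canned = iter([
    "No visible artifacts. Score: 6",
    "The skier and snowy forest match the prompt. Score: 3",
    "Balanced composition. Score: 5.5",
])
result = evaluate("A man standing in a snowy forest on his skis.", lambda history: next(canned))
print(result)
```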

A detailed process of evaluation is illustrated in the following:

For more details about the method, or to run the demo yourself, please see the X-IQE project page.

If you find the benchmark method and results useful in your research, please consider citing:

    @article{chen2023x,
      title={X-IQE: eXplainable Image Quality Evaluation for Text-to-Image Generation with Visual Large Language Models},
      author={Chen, Yixiong},
      journal={arXiv preprint arXiv:2305.10843},
      year={2023}
    }