This repository contains the official implementation of our ICLR 2025 paper "MLLMs Know Where to Look: Training-free Perception of Small Visual Details with Multimodal LLMs". Our method enables multimodal large language models (MLLMs) to better perceive small visual details without any additional training. This repository provides the detailed implementation of applying our methods to multiple MLLMs and benchmark datasets.

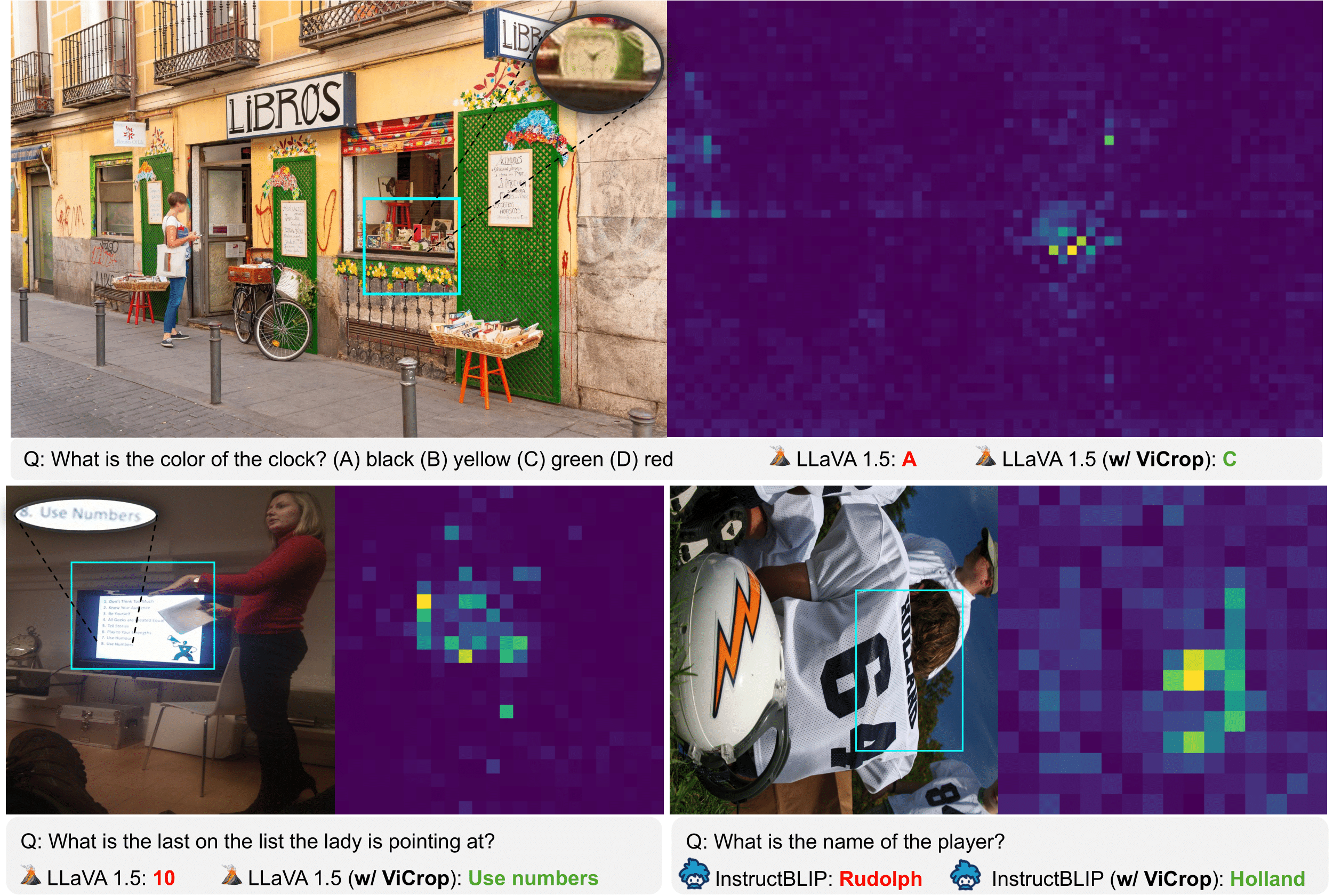

- 🔍 We find that MLLMs often know where to look, even if their answers are wrong.

- 📸 We propose a training-free method to significantly enhance MLLMs' visual perception on small visual details.

- 💪 Our method is flexible with respect to visual input formats, including high-resolution images (see below), multiple images, and video (to be explored in the future).

```bash
# Create and activate conda environment
conda create -n mllms_know python=3.10
conda activate mllms_know

# Install dependencies
pip install -r requirements.txt

# Install modified transformers library
cd transformers
pip install -e .
cd ..
```

We provide a quick start notebook that demonstrates how to:

- Load and process images

- Apply our methods to enhance visual perception

- Visualize attention maps
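As a rough illustration only (this is not code from the notebook), the sketch below turns a flat vector of attention weights over image tokens into a heatmap at image resolution. It assumes LLaVA-1.5's layout of 576 image tokens, i.e. a 24×24 patch grid from CLIP ViT-L/14 at 336 px, in row-major order; the helper name `attention_to_heatmap` is hypothetical.

```python
import numpy as np


def attention_to_heatmap(attn: np.ndarray, image_hw: tuple[int, int], grid: int = 24) -> np.ndarray:
    """Hypothetical helper: map per-patch attention to a [0, 1] heatmap of size image_hw.

    Assumes `attn` holds grid * grid values in row-major patch order
    (24 x 24 = 576 for LLaVA-1.5).
    """
    if attn.size != grid * grid:
        raise ValueError(f"expected {grid * grid} attention values, got {attn.size}")
    amap = attn.reshape(grid, grid).astype(np.float64)
    # Min-max normalize so the map can be rendered as a heatmap.
    amap = (amap - amap.min()) / (amap.max() - amap.min() + 1e-8)
    h, w = image_hw
    # Nearest-neighbor upsampling: each pixel row/column looks up its patch index.
    rows = np.arange(h) * grid // h
    cols = np.arange(w) * grid // w
    return amap[np.ix_(rows, cols)]
```

The returned array has the same height and width as the image, so it can be overlaid directly, for example with matplotlib's `imshow(heatmap, alpha=0.5)` on top of the image.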

To evaluate on the benchmark datasets:

- Download the benchmark datasets and corresponding images to your local directory

- Update the paths in `info.py` with your local directory paths

Example (textvqa)

Dataset preparation:

```bash
mkdir -p data/textvqa/images
wget https://dl.fbaipublicfiles.com/textvqa/images/train_val_images.zip -P data/textvqa/images
unzip data/textvqa/images/train_val_images.zip -d data/textvqa/images
rm data/textvqa/images/train_val_images.zip
mv data/textvqa/images/train_images/* data/textvqa/images
rm -r data/textvqa/images/train_images
wget https://dl.fbaipublicfiles.com/textvqa/data/TextVQA_0.5.1_val.json -P data/textvqa
```

Dataset processing (to a unified format):

```python
import json

# Load the official TextVQA validation annotations
with open('data/textvqa/TextVQA_0.5.1_val.json') as f:
    datas = json.load(f)

new_datas = []
for data_id, data in enumerate(datas['data']):
    # Zero-padded string IDs, e.g. '0000000000'
    data_id = str(data_id).zfill(10)
    question = data['question']
    labels = data['answers']  # list of human-annotated answers
    image_path = f"{data['image_id']}.jpg"
    new_data = {
        'id': data_id,
        'question': question,
        'labels': labels,
        'image_path': image_path
    }
    new_datas.append(new_data)

# Write the converted records in the unified format
with open('data/textvqa/data.json', 'w') as f:
    json.dump(new_datas, f, indent=4)
```

To run our method on benchmark datasets, use the provided script:

```bash
# Format: bash run_all.sh [dataset] [model] [method]
bash run_all.sh textvqa llava rel_att
```

Get the model's performance:

```bash
python get_score.py --data_dir ./data/results --save_path ./
```

Supported models:

- LLaVA-1.5 (`llava`)

- InstructBLIP (`blip`)

For implementation details, see llava_methods.py and blip_methods.py. Please feel free to explore other MLLMs!

Our approach leverages inherent attention mechanisms and gradients in MLLMs to identify regions of interest without additional training. The key methods include:

- Relative Attention-based Visual Cropping: Computes the relative attention $A_{rel}(x,q)$ for each image-question pair at a target layer (selected using TextVQA validation data) and uses it to guide visual cropping.

- Gradient-Weighted Attention-based Visual Cropping: Uses gradient information to refine attention maps, normalizing answer-to-token and token-to-image attention without requiring a second forward pass.

- Input Gradient-based Visual Cropping: Directly computes the gradient of the model's decision with respect to the input image. To mitigate noise in uniform regions, it applies Gaussian high-pass filtering, median filtering, and thresholding before spatial aggregation.
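The following is a minimal NumPy sketch of two of the ingredients above, not the repository's implementation. `relative_attention` assumes that $A_{rel}(x,q)$ is the attention map for the question divided elementwise by the attention map obtained for a generic, question-agnostic prompt on the same image. `denoise_input_gradient` chains the listed filtering steps for input-gradient cropping; the Gaussian width, median window, quantile threshold, 14-pixel patch size, and the use of absolute gradients summed over color channels are all hypothetical choices, as are both function names.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def relative_attention(attn_q: np.ndarray, attn_generic: np.ndarray, eps: float = 1e-8) -> np.ndarray:
    """Assumed definition: question attention divided by generic-prompt attention."""
    return attn_q / (attn_generic + eps)


def _gaussian_blur(img: np.ndarray, sigma: float) -> np.ndarray:
    radius = max(1, int(3 * sigma))
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (2 * sigma ** 2))
    k /= k.sum()
    padded = np.pad(img, radius, mode="reflect")
    # Separable blur: filter every row, then every column; "valid" restores the size.
    rows = np.apply_along_axis(lambda r: np.convolve(r, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda c: np.convolve(c, k, mode="valid"), 0, rows)


def _median_filter(img: np.ndarray, size: int = 3) -> np.ndarray:
    padded = np.pad(img, size // 2, mode="edge")
    windows = sliding_window_view(padded, (size, size))  # shape (H, W, size, size)
    return np.median(windows, axis=(-2, -1))


def denoise_input_gradient(grad, sigma=1.0, median_size=3, quantile=0.9, patch=14):
    """grad: (H, W) or (C, H, W) gradient of the answer w.r.t. the image.

    Returns a coarse (H // patch, W // patch) importance map.
    """
    g = np.abs(grad).astype(np.float64)
    if g.ndim == 3:
        g = g.sum(axis=0)  # collapse color channels (hypothetical choice)
    g = np.clip(g - _gaussian_blur(g, sigma), 0.0, None)  # Gaussian high-pass
    g = _median_filter(g, median_size)  # suppress isolated spikes
    g = np.where(g >= np.quantile(g, quantile), g, 0.0)  # threshold weak responses
    # Spatial aggregation: sum the filtered gradient inside each patch.
    h, w = (g.shape[0] // patch) * patch, (g.shape[1] // patch) * patch
    return g[:h, :w].reshape(h // patch, patch, w // patch, patch).sum(axis=(1, 3))
```

The high-pass step removes slowly varying gradient mass, which is why large uniform regions contribute little; the median filter then discards isolated single-pixel responses before the patch sums are taken.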

Bounding Box Selection for Visual Cropping.

We use a sliding window approach to extract bounding boxes from the importance map. Windows of different sizes, scaled by factors in

High-Resolution Visual Cropping.

For high-resolution images (

For implementation details, see llava_methods.py, blip_methods.py, and utils.py.
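The sliding-window bounding box selection can be sketched roughly as follows. This is an illustration, not the code in utils.py. It scores every square window by the summed importance inside it, using a summed-area table so that each window sum costs four lookups, and returns the best window for each requested size. The window sizes, the stride, and the helper name `best_windows` are hypothetical. Summed importance grows with window size, so picking the final box among the per-size candidates needs a separate rule, which is not reproduced here.

```python
import numpy as np


def best_windows(importance: np.ndarray, window_sizes, stride: int = 1):
    """Hypothetical helper: best square window per size by summed importance.

    Returns a list of (score, (x0, y0, x1, y1)) with exclusive x1/y1, one entry per size.
    """
    h, w = importance.shape
    # Summed-area table padded with a zero row/column: sat[i, j] = importance[:i, :j].sum()
    sat = np.zeros((h + 1, w + 1), dtype=np.float64)
    sat[1:, 1:] = importance.cumsum(axis=0).cumsum(axis=1)
    results = []
    for size in window_sizes:
        s = min(int(size), h, w)  # clamp windows to the map size
        ys = np.arange(0, h - s + 1, stride)
        xs = np.arange(0, w - s + 1, stride)
        y0, x0 = np.meshgrid(ys, xs, indexing="ij")
        sums = sat[y0 + s, x0 + s] - sat[y0, x0 + s] - sat[y0 + s, x0] + sat[y0, x0]
        iy, ix = np.unravel_index(np.argmax(sums), sums.shape)
        top, left = int(ys[iy]), int(xs[ix])
        results.append((float(sums[iy, ix]), (left, top, left + s, top + s)))
    return results
```

For example, with a hypothetical base size of 8 and scale factors `[1.0, 1.5, 2.0]`, one would call `best_windows(importance, [round(8 * f) for f in (1.0, 1.5, 2.0)])` and then map the chosen box from importance-map coordinates back to image pixels.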

Our method significantly improves MLLMs' performance on tasks requiring perception of small visual details, such as text recognition in images, fine-grained object recognition, and spatial reasoning. Please refer to the paper for more details and run the demo notebook for better understanding!

If you find our paper and code useful for your research and applications, please cite using this BibTeX:

```bibtex
@article{zhang2025mllms,
  title={MLLMs Know Where to Look: Training-free Perception of Small Visual Details with Multimodal LLMs},
  author={Zhang, Jiarui and Khayatkhoei, Mahyar and Chhikara, Prateek and Ilievski, Filip},
  journal={arXiv preprint arXiv:2502.17422},
  year={2025}
}
```

This project is licensed under the MIT License - see the LICENSE file for details.