A ready-to-use JMH benchmark setup, already configured for ease of use and ready for you to clone and use!

Benchmarking can be hard, especially if you are new to it and don't know how to properly configure JMH (the Java Microbenchmark Harness), the JVM benchmarking framework.

It took me some time and a lot of reading to figure out exactly how to set up the framework, so I thought that others might also have struggled with this exact problem, and might even have given up because of how challenging it proved to be.

By providing this preconfigured JMH setup, I hope to inspire more developers to enter the world of benchmarking, so they can learn more about the code they write every day and make informed decisions based on data rather than guesswork. How many times have you thought "this other approach might run faster" but had no tool to verify your assumption?

Execute the `jmh` Gradle task by running

`./gradlew jmh`

or run the same Gradle task directly from your IDE.

When executing the task from your IDE, do not run it in debug mode. In debug mode the IDE attaches a Java agent to your code to enable debugging features such as breakpoints, and that agent bottlenecks your benchmarks. When I tested this on IntelliJ IDEA 2022.2.3, running the benchmark in debug mode made it roughly 10 times slower.
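If you want a safeguard against accidentally benchmarking under a debugger, one option is to check whether the JVM was started with the JDWP debug agent, which IDE debuggers typically attach through an `-agentlib:jdwp=...` argument. This is a minimal sketch under that assumption, not part of this project: `DebugAgentCheck` and its methods are hypothetical names, and the check only inspects the arguments of the JVM it runs in, which may differ from the arguments of the JVMs that JMH forks for the actual measurements.

```java
import java.lang.management.ManagementFactory;
import java.util.List;

// Hypothetical helper (not part of this project): detects a JDWP debug agent.
public class DebugAgentCheck {

    /** Returns true if any of the given JVM arguments loads the JDWP debug agent. */
    static boolean hasDebugAgent(List<String> jvmArgs) {
        for (String arg : jvmArgs) {
            // Assumption: debuggers attach via -agentlib:jdwp=... or the legacy -Xrunjdwp:... form.
            if (arg.startsWith("-agentlib:jdwp") || arg.startsWith("-Xrunjdwp")) {
                return true;
            }
        }
        return false;
    }

    /** Checks the input arguments of the JVM this code is currently running in. */
    static boolean isRunningUnderDebugger() {
        return hasDebugAgent(ManagementFactory.getRuntimeMXBean().getInputArguments());
    }

    public static void main(String[] args) {
        if (isRunningUnderDebugger()) {
            System.err.println("Warning: JDWP debug agent detected; benchmark results will not be representative.");
        } else {
            System.out.println("No debug agent detected.");
        }
    }
}
```

Checking the argument list rather than timing anything keeps the guard cheap, so it could run once at startup without affecting measurements.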

The benchmarks results are saved in three different places:

- Your terminal, when the execution ends.

- A copy of what is displayed on the terminal is saved to the file

build/results/jmh/humanResults.txt.

- A visual, graphic version is saved on

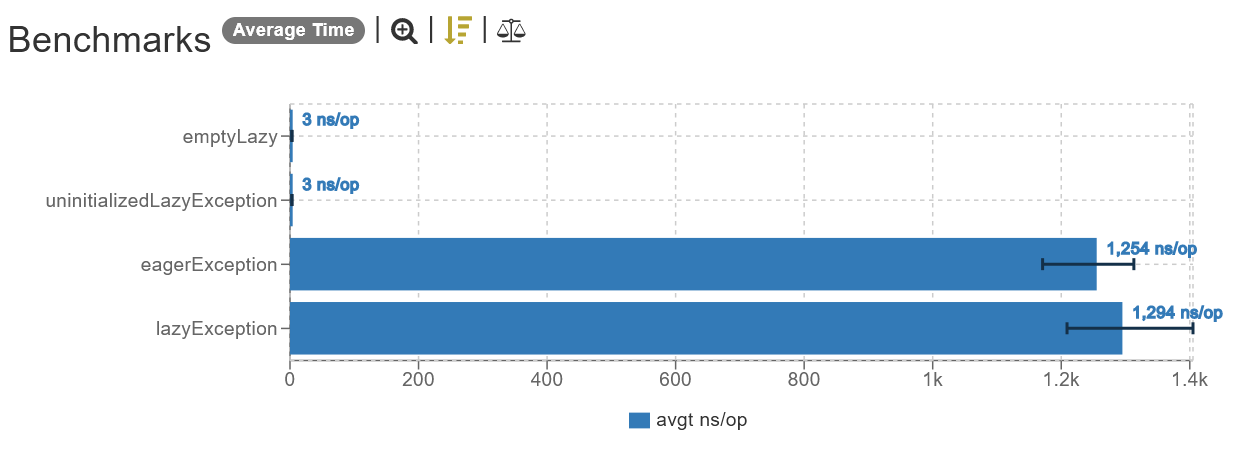

build/results/jmh/html/index.html, which generates a pretty bar graph that makes the task of comparing differences and visualizing the timings much easier. The generated graph look like this:
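If you would rather compare results programmatically than read the text file by eye, a small parser for the summary table at the end of the JMH output can be enough. This is a minimal sketch, assuming the default summary layout (a `Benchmark Mode Cnt Score Error Units` header followed by one row per benchmark), no `@Param` columns, and `.` as the decimal separator; `JmhSummaryParser`, `Result`, `parseRow` and `parseSummary` are hypothetical names, not part of this project or of JMH.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Optional;

// Hypothetical parser for the summary table of JMH's human-readable output.
public class JmhSummaryParser {

    /** One row of the summary table, e.g. "MyBenchmark.sum  avgt  5  12.345 ± 0.678  ns/op". */
    record Result(String benchmark, String mode, double score, String units) {}

    // The plus-minus sign printed between the score and its error margin.
    private static final String PLUS_MINUS = "\u00B1";

    /** Parses one summary row; returns empty if the line does not look like one. */
    static Optional<Result> parseRow(String line) {
        String[] t = line.trim().split("\\s+");
        if (t.length < 4 || t[0].equals("Benchmark")) {
            return Optional.empty();
        }
        // With an error column, the score sits right before the plus-minus sign;
        // otherwise it is the column just before the units.
        int scoreIndex = t.length - 2;
        for (int i = 0; i < t.length; i++) {
            if (t[i].equals(PLUS_MINUS)) {
                scoreIndex = i - 1;
                break;
            }
        }
        if (scoreIndex < 2) {
            return Optional.empty(); // benchmark name and mode must come before the score
        }
        try {
            double score = Double.parseDouble(t[scoreIndex]);
            return Optional.of(new Result(t[0], t[1], score, t[t.length - 1]));
        } catch (NumberFormatException e) {
            return Optional.empty(); // non-numeric score column; skip the line
        }
    }

    /** Returns the parsed rows that follow the "Benchmark ... Units" header line. */
    static List<Result> parseSummary(List<String> lines) {
        List<Result> results = new ArrayList<>();
        boolean inTable = false;
        for (String line : lines) {
            String trimmed = line.trim();
            if (trimmed.startsWith("Benchmark") && trimmed.endsWith("Units")) {
                inTable = true; // header found; summary rows come next
                continue;
            }
            if (inTable) {
                parseRow(line).ifPresent(results::add);
            }
        }
        return results;
    }
}
```

For example, `JmhSummaryParser.parseSummary(Files.readAllLines(Path.of("build/results/jmh/humanResults.txt")))` would return one `Result` per summary row. Only lines after the header are parsed, because per-iteration lines earlier in the output (such as `Iteration   1: 10.0 ns/op`) would otherwise look like rows.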

This project is licensed under the MIT License.