If you are interested in this repository, I recommend looking at Nvidia's official PyTorch implementation.

https://nv-adlr.github.io/publication/partialconv-inpainting

- This repository is implemented in Chainer, which is no longer supported.

- PyTorch is very similar to Chainer and is one of the major deep learning frameworks.

Reproduction of the Nvidia image inpainting paper "Image Inpainting for Irregular Holes Using Partial Convolutions" https://arxiv.org/abs/1804.07723

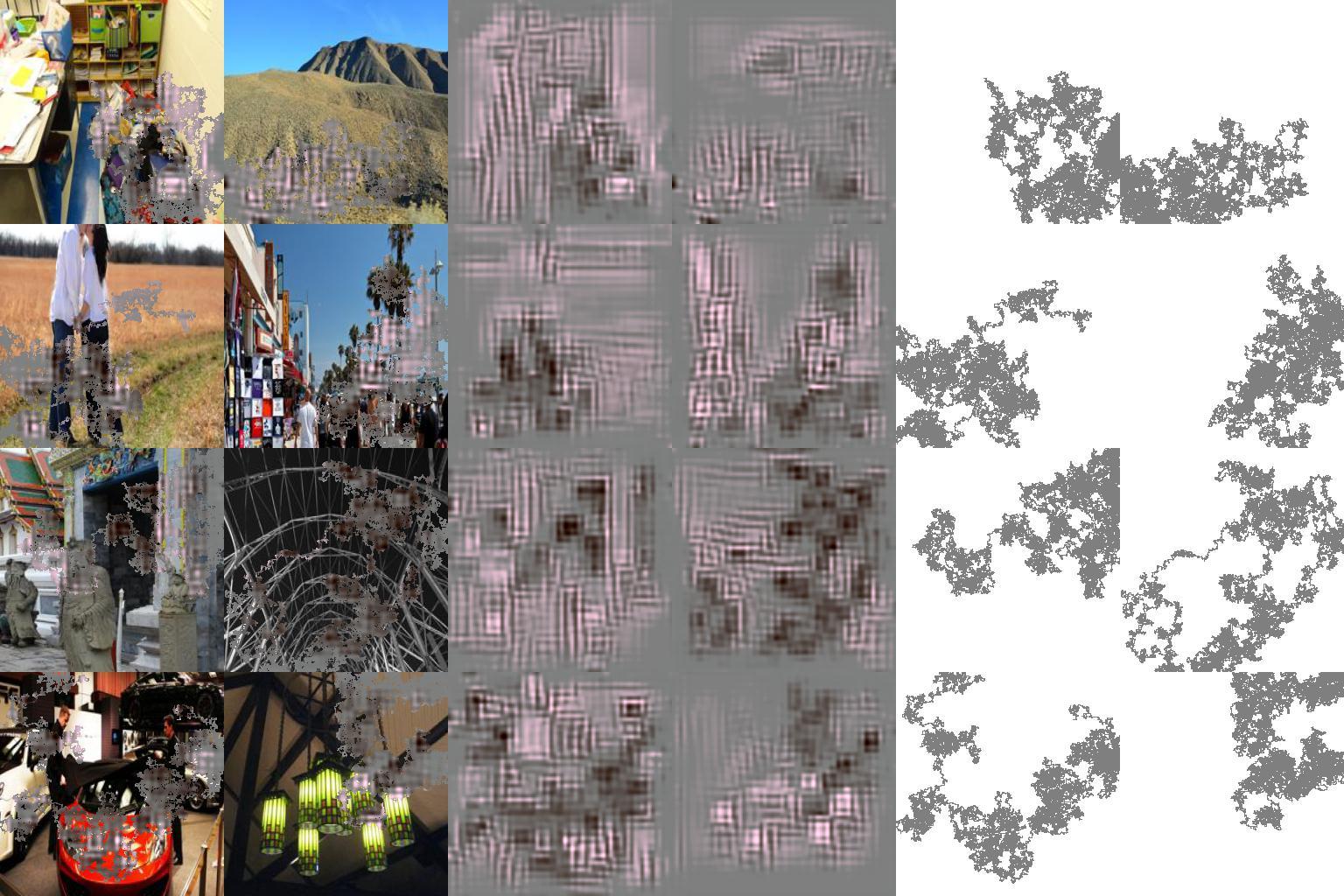

1,000 iteration results (completion, output, mask). "completion" is the input image with its masked pixels replaced by the corresponding pixels of the output image.

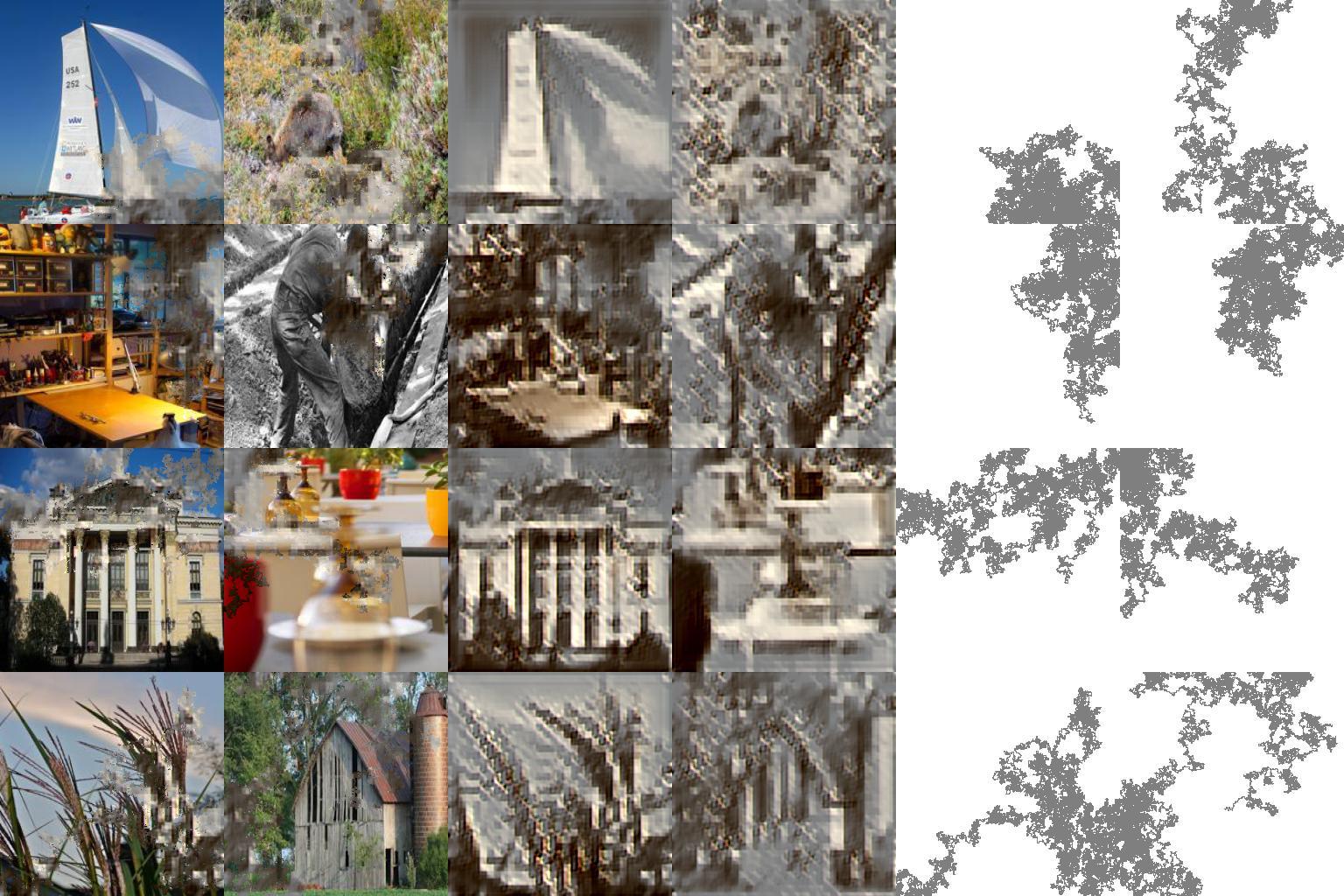

10,000 iteration results (completion, output, mask)

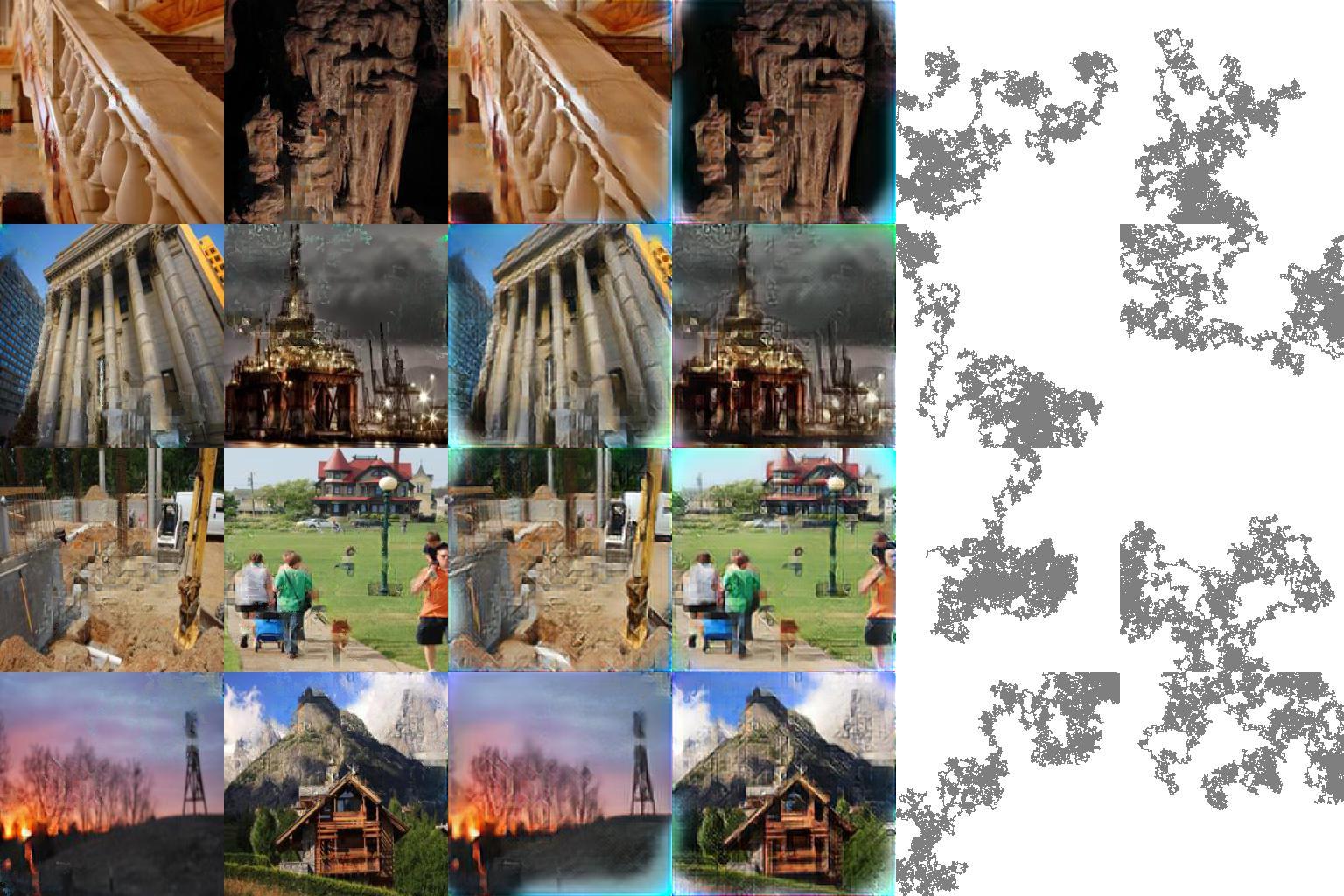

100,000 iteration results (completion, output, mask)
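The "completion" images above combine the input and the network output pixel by pixel. The sketch below illustrates that compositing step on a tiny 2x2 example using plain Python lists. The function name `complete` and the convention that `True` marks a masked (hole) pixel are assumptions made for illustration, not names taken from this repository.

```python
def complete(image, output, hole):
    """Copy `output` into the hole pixels of `image`; keep all other pixels.

    `hole` uses True for a masked pixel (an assumed convention).
    """
    return [
        [out_px if is_hole else in_px
         for in_px, out_px, is_hole in zip(in_row, out_row, hole_row)]
        for in_row, out_row, hole_row in zip(image, output, hole)
    ]


image = [[10, 20], [30, 40]]      # input image (pixels under the mask are unknown)
output = [[11, 22], [33, 44]]     # network prediction for every pixel
hole = [[False, True], [True, False]]

completion = complete(image, output, hole)
print(completion)
```

Only the two masked positions take their values from `output`, so unmasked pixels in the completion are identical to the input.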

- python3.5.3

- chainer4.0alpha

- opencv (only for cv.imread, you can replace it with PIL)

- PIL

Edit common/paths.py

train_place2 = "/yourpath/place2/data_256"

val_place2 = "/yourpath/place2/val_256"

test_place2 = "/yourpath/test_256"

In this implementation, masks are generated automatically in advance.

python generate_windows.py image_size generate_num

"image_size" indicates the image size of the masks.

"generate_num" indicates the number of masks to generate.

Default implementation uses image_size=256 and generate_num=1000.

#To try default setting

python generate_windows.py 256 1000

Note that the original paper uses 512x512 images and generates masks in a different way.
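The masks in this repository are generated by a random walk. The following is a minimal sketch of that idea, not the code in generate_windows.py: the function name `random_walk_mask`, the `steps` parameter, the 4-neighbour moves, and the convention that 1 marks a hole pixel are all assumptions made for illustration.

```python
import random


def random_walk_mask(image_size, steps, seed=None):
    """Return an image_size x image_size grid where 1 marks a hole pixel."""
    rng = random.Random(seed)
    mask = [[0] * image_size for _ in range(image_size)]
    # Start the walk at a random pixel.
    y = rng.randrange(image_size)
    x = rng.randrange(image_size)
    moves = [(-1, 0), (1, 0), (0, -1), (0, 1)]
    for _ in range(steps):
        mask[y][x] = 1
        dy, dx = rng.choice(moves)
        # Clamp the position so the walk stays inside the image.
        y = min(max(y + dy, 0), image_size - 1)
        x = min(max(x + dx, 0), image_size - 1)
    return mask


mask = random_walk_mask(image_size=256, steps=20000, seed=0)
hole_ratio = sum(map(sum, mask)) / (256 * 256)
print("fraction of hole pixels:", hole_ratio)
```

Because the walk revisits pixels, the hole area is at most `steps` pixels and usually much smaller, so the step count only loosely controls how much of the image is masked.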

python train.py -g 0

-g specifies the GPU to use (here, GPU No. 0).

First, check the implementation FAQ.

- C(0)=0 in the first implementation (already fixed in the latest version)

- Masks are generated using a random walk by generate_windows.py

- To use the Chainer VGG pre-trained model, I re-scaled the input of the model. See updater.vgg_extract. (It includes cropping, so the style loss outside the crop box is ignored.)

- Padding is chosen so that each encoder stage halves the height and width (input:output = 2:1).

- I use chainer.functions.unpooling_2d for upsampling. (you can replace it with chainer.functions.upsampling_2d)
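The padding rule in the list above can be checked with the standard convolution output-size formula. The sketch below is a worked arithmetic example only: the kernel sizes (7, 5, 5, 3), the stride of 2, and the choice `pad = kernel // 2` are illustrative assumptions and are not read from this repository's model code.

```python
def conv_output_size(size, kernel, stride, pad):
    """Output length of a convolution along one axis (floor rounding)."""
    return (size + 2 * pad - kernel) // stride + 1


size = 256
sizes = [size]
for kernel in (7, 5, 5, 3):   # assumed kernel sizes, for illustration only
    pad = kernel // 2         # assumed padding that gives input:output = 2:1
    size = conv_output_size(size, kernel, stride=2, pad=pad)
    sizes.append(size)

print(sizes)
```

With this padding, every stride-2 stage exactly halves an even input size, so the decoder can restore the resolution by doubling at each upsampling step.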

Other differences:

- image_size=256x256 (original: 512x512)

This repository uses code from the following impressive repositories