This repo is the official implementation of "Self-slimmed Vision Transformer".

07/20/2022

[Initial commits]:

- Code and models for LV-ViT are provided.

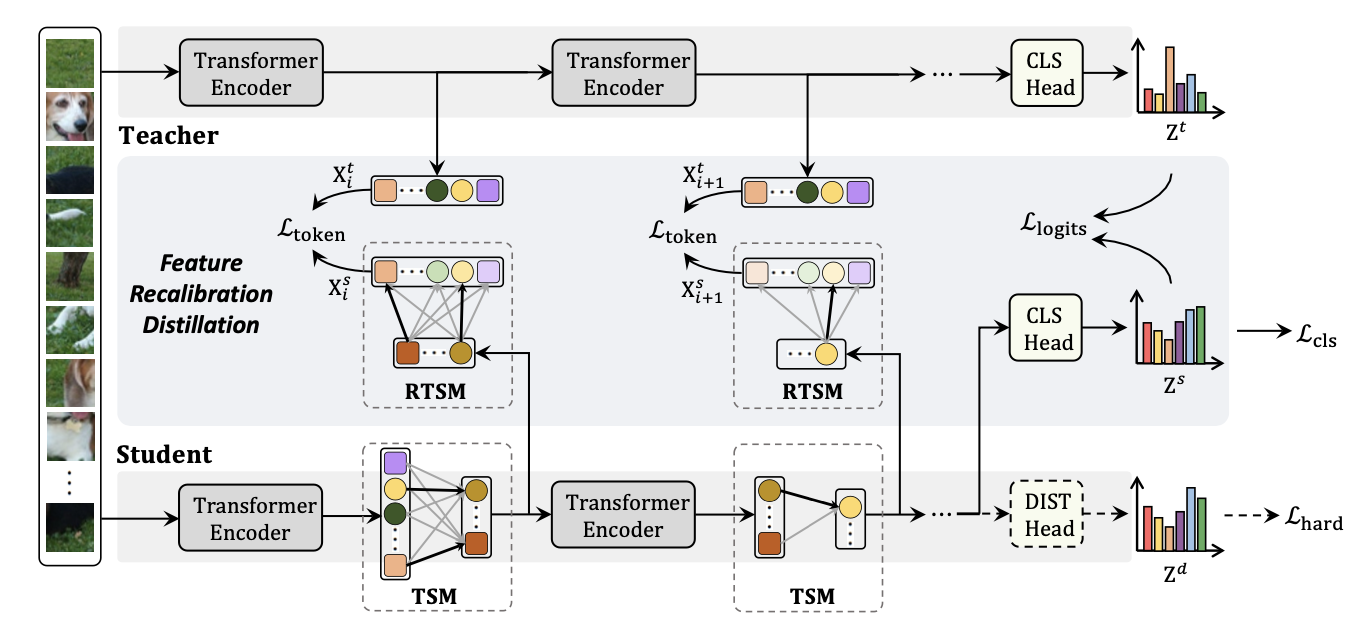

SiT (Self-slimmed Vision Transformer) was introduced on arXiv as a generic self-slimmed learning method for vanilla vision transformers. Our concise TSM (Token Slimming Module) softly integrates redundant tokens into fewer informative ones. For stable and efficient training, we introduce a novel FRD framework that leverages structural knowledge and densely transfers token information in a flexible auto-encoder manner.
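The idea of softly merging tokens, and of an auto-encoder-style transfer, can be illustrated with a minimal dependency-free sketch. It assumes that the slimming weights come from a softmax over per-token scores, so every slimmed token is a convex combination of the input tokens, and it reads "auto-encoder manner" as slimming N tokens to M and then spreading them back to N so they can be compared token by token with teacher tokens. The names `slim_tokens`, `recover_tokens`, `token_mse`, and the weight matrices `w` and `w_dec` are all hypothetical; this is not the code in this repository.

```python
import math
from typing import List, Tuple

Matrix = List[List[float]]


def matmul(a: Matrix, b: Matrix) -> Matrix:
    """Plain-Python product of an (n x k) and a (k x m) matrix."""
    return [[sum(a[i][k] * b[k][j] for k in range(len(b))) for j in range(len(b[0]))]
            for i in range(len(a))]


def transpose(a: Matrix) -> Matrix:
    return [list(col) for col in zip(*a)]


def softmax_rows(scores: Matrix, tau: float = 1.0) -> Matrix:
    """Row-wise softmax with temperature tau; every output row sums to 1."""
    out = []
    for row in scores:
        m = max(row)  # subtract the row max for numerical stability
        exps = [math.exp((s - m) / tau) for s in row]
        total = sum(exps)
        out.append([e / total for e in exps])
    return out


def slim_tokens(x: Matrix, w: Matrix, tau: float = 1.0) -> Tuple[Matrix, Matrix]:
    """Softly merge N input tokens (N x C) into M output tokens (M x C).

    w (C x M) is a hypothetical linear scorer standing in for a learned module.
    """
    scores = transpose(matmul(x, w))   # M x N: score of input token j for slot i
    a = softmax_rows(scores, tau)      # normalize over the N input tokens
    return matmul(a, x), a             # (M x C slimmed tokens, M x N weights)


def recover_tokens(y: Matrix, w_dec: Matrix) -> Matrix:
    """Hypothetical decoder: spread M slimmed tokens back to N tokens.

    w_dec (N x M) holds per-position scores; softmax turns them into weights.
    """
    b = softmax_rows(w_dec)            # N x M, each row sums to 1
    return matmul(b, y)                # N x C


def token_mse(student: Matrix, teacher: Matrix) -> float:
    """Mean squared error over every element of two N x C token matrices."""
    diffs = [(s - t) ** 2 for rs, rt in zip(student, teacher) for s, t in zip(rs, rt)]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    x = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0], [0.5, 0.5]]   # N=4 tokens, C=2
    w = [[0.2, -0.1], [0.3, 0.4]]                          # C=2 -> M=2 slots
    y, a = slim_tokens(x, w)
    w_dec = [[1.0, 0.0], [0.0, 1.0], [0.5, 0.5], [0.2, 0.8]]
    x_rec = recover_tokens(y, w_dec)
    print(len(y), len(x_rec), round(token_mse(x_rec, x), 4))
```

Because each row of the slimming weights sums to 1, identical input tokens are always merged into that same token; in training, the reconstruction error against teacher tokens would be one signal that pushes the slimmed tokens to stay informative.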

Our SiT can speed up ViTs by 1.7x with negligible accuracy drop, and even speed up ViTs by 3.6x while maintaining 97% of their performance. Surprisingly, by simply arming LV-ViT with our SiT, we achieve new state-of-the-art performance on ImageNet, surpassing all the recent CNNs and ViTs.

We follow the settings of LeViT for inference speed evaluation.
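The exact LeViT protocol (hardware, batch size, number of runs) is not restated here. As a generic sketch of how throughput-style speed numbers are usually obtained, the helper below runs untimed warm-up passes and then reports images per second over a fixed number of timed passes. `measure_throughput` and the dummy model are hypothetical stand-ins, not part of this repository.

```python
import time
from typing import Callable, Sequence


def measure_throughput(model: Callable[[Sequence], object], batch: Sequence,
                       warmup: int = 10, iters: int = 30) -> float:
    """Return images/second for repeated forward passes over one fixed batch.

    `model` is any callable taking a batch (a hypothetical stand-in for a network).
    With an asynchronous GPU backend, the device must be synchronized before each
    clock read, otherwise only kernel launches are timed.
    """
    for _ in range(warmup):            # warm-up runs are not timed
        model(batch)
    start = time.perf_counter()
    for _ in range(iters):
        model(batch)
    elapsed = time.perf_counter() - start
    return len(batch) * iters / max(elapsed, 1e-12)  # guard against a zero reading


def dummy_model(batch: Sequence) -> list:
    """Hypothetical model stand-in: reduces each 'image' to one number."""
    return [sum(img) for img in batch]


if __name__ == "__main__":
    fake_batch = [[0.0] * 1000 for _ in range(64)]   # 64 dummy "images"
    print(f"{measure_throughput(dummy_model, fake_batch):.0f} images/s")
```

A speedup such as the 1.7x quoted above would then be the ratio of two such throughput measurements taken under identical settings.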

| Model | Teacher | Resolution | Top-1 (%) | #Param. | FLOPs | Ckpt | Shell |
|---|---|---|---|---|---|---|---|
| SiT-T | LV-ViT-T | 224x224 | 80.1 | 15.9M | 1.0G | | train.sh |
| SiT-XS | LV-ViT-S | 224x224 | 81.2 | 25.6M | 1.5G | | train.sh |
| SiT-S | LV-ViT-S | 224x224 | 83.1 | 25.6M | 4.0G | | train.sh |
| SiT-M | LV-ViT-M | 224x224 | 84.2 | 55.6M | 8.1G | | train.sh |
| SiT-L | LV-ViT-L | 288x288 | 85.6 | 148.2M | 34.4G | | train.sh |

The LV-ViT teacher models are trained with Token Labeling, and their checkpoints are provided.
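Token Labeling supervises every patch token, not only the class token. A minimal sketch of such an objective, assuming it is a class-token cross-entropy plus `beta` times the mean per-token soft cross-entropy against precomputed dense soft labels, is shown below. The function names and the default `beta = 0.5` are illustrative assumptions, not the training code used for these checkpoints.

```python
import math
from typing import List, Sequence


def soft_cross_entropy(logits: Sequence[float], target: Sequence[float]) -> float:
    """Cross-entropy between softmax(logits) and a (possibly soft) target distribution."""
    m = max(logits)
    log_z = m + math.log(sum(math.exp(v - m) for v in logits))  # stable log-sum-exp
    return -sum(t * (v - log_z) for v, t in zip(logits, target))


def token_labeling_loss(cls_logits: Sequence[float], cls_target: Sequence[float],
                        token_logits: List[Sequence[float]],
                        token_targets: List[Sequence[float]],
                        beta: float = 0.5) -> float:
    """Class-token loss plus beta times the mean per-patch-token loss.

    token_targets are dense per-patch soft labels (assumed precomputed);
    beta = 0.5 is an illustrative default.
    """
    dense = sum(soft_cross_entropy(lg, tg) for lg, tg in zip(token_logits, token_targets))
    return soft_cross_entropy(cls_logits, cls_target) + beta * dense / len(token_logits)


if __name__ == "__main__":
    cls_logits = [2.0, 0.5, -1.0]
    cls_target = [1.0, 0.0, 0.0]
    token_logits = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    token_targets = [[0.7, 0.3, 0.0], [0.1, 0.9, 0.0]]
    print(round(token_labeling_loss(cls_logits, cls_target, token_logits, token_targets), 4))
```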

| Model | Resolution | Top-1 (%) | #Param. | FLOPs | Ckpt |
|---|---|---|---|---|---|
| LV-ViT-T | 224x224 | 81.8 | 15.7M | 3.5G | |
| LV-ViT-S | 224x224 | 83.1 | 25.4M | 5.5G | |
| LV-ViT-M | 224x224 | 84.0 | 55.2M | 11.9G | |
| LV-ViT-L | 288x288 | 85.3 | 147M | 56.1G | |
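To relate each SiT model to the teacher listed next to it, the snippet below computes the teacher/student FLOPs ratio and the share of the teacher's Top-1 accuracy that the student retains, using only the numbers in the two tables above. Note that a FLOPs ratio is not the measured speedup (speed is measured following LeViT), and that SiT-XS and SiT-S share the same teacher; values above 100% mean the student's Top-1 exceeds the teacher's.

```python
from typing import Dict, List, Tuple

# (student, teacher, student Top-1 %, teacher Top-1 %, student GFLOPs, teacher GFLOPs),
# copied from the two tables above.
ROWS: List[Tuple[str, str, float, float, float, float]] = [
    ("SiT-T", "LV-ViT-T", 80.1, 81.8, 1.0, 3.5),
    ("SiT-XS", "LV-ViT-S", 81.2, 83.1, 1.5, 5.5),
    ("SiT-S", "LV-ViT-S", 83.1, 83.1, 4.0, 5.5),
    ("SiT-M", "LV-ViT-M", 84.2, 84.0, 8.1, 11.9),
    ("SiT-L", "LV-ViT-L", 85.6, 85.3, 34.4, 56.1),
]


def compare(rows: List[Tuple[str, str, float, float, float, float]]
            ) -> Dict[str, Tuple[float, float]]:
    """Map each student to (teacher/student FLOPs ratio, % of teacher Top-1 kept)."""
    results = {}
    for student, _teacher, s_acc, t_acc, s_flops, t_flops in rows:
        results[student] = (t_flops / s_flops, 100.0 * s_acc / t_acc)
    return results


if __name__ == "__main__":
    for name, (ratio, kept) in compare(ROWS).items():
        print(f"{name}: {ratio:.2f}x fewer FLOPs than its teacher, {kept:.1f}% of its Top-1")
```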

If you find this repository useful, please use the following BibTeX entry for citation.

@misc{zong2021self,
  title={Self-slimmed Vision Transformer},
  author={Zhuofan Zong and Kunchang Li and Guanglu Song and Yali Wang and Yu Qiao and Biao Leng and Yu Liu},
  year={2021},
  eprint={2111.12624},
  archivePrefix={arXiv},
  primaryClass={cs.CV}
}

This project is released under the MIT license. Please see the LICENSE file for more information.