by Zhongkun Liu, Pengjie Ren, Zhumin Chen, Zhaochun Ren, Maarten de Rijke, Ming Zhou

@inproceedings{liu2021learning, author = {Zhongkun Liu and Pengjie Ren and Zhumin Chen and Zhaochun Ren and Maarten de Rijke and Ming Zhou}, title = {Learning to Ask Conversational Questions by Optimizing Levenshtein Distance}, booktitle = {Proceedings of the 59th Annual Meeting of the Association for Computational Linguistics, {ACL} 2021}, year = {2021} }

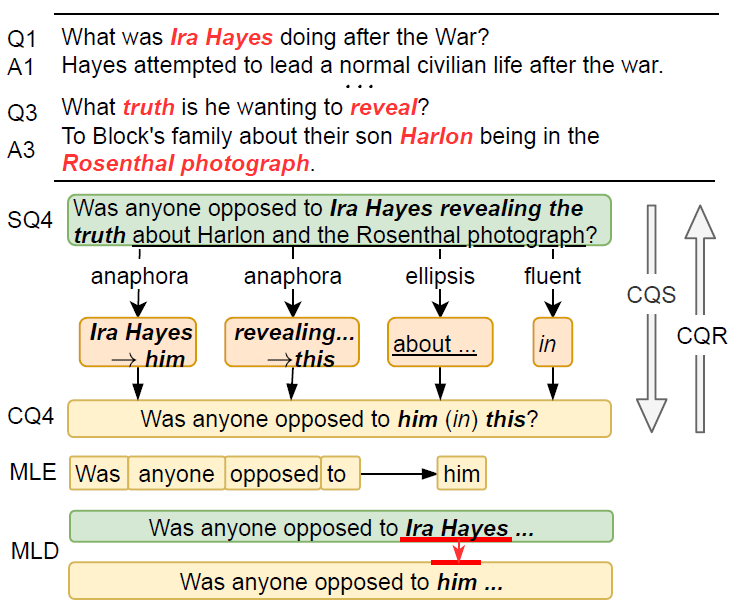

Conversational Question Simplification (CQS) aims to simplify self-contained questions (e.g., SQ4) into conversational ones (e.g., CQ4) by incorporating some conversational characteristics, e.g., anaphora and ellipsis.

The editing policy network is implemented by the encoder to predict combinatorial edits, and the phrasing policy network is implemented by the decoder to predict phrases.
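To make the split between the two policies concrete, the sketch below shows how per-token edit tags (from an editing policy) and generated phrases (from a phrasing policy) could be combined to rewrite a self-contained question. It assumes a simplified label set of Keep (K), Delete (D), Insert (I) and Substitute (S), with the semantics stated in the docstring. The function `apply_edits` and the example are hypothetical and are not taken from the RISE code.

```python
from typing import List


def apply_edits(tokens: List[str], tags: List[str], phrases: List[List[str]]) -> List[str]:
    """Rewrite `tokens` according to per-token edit `tags` (hypothetical semantics).

    K: keep the token
    D: delete the token
    I: insert the next phrase before the token, then keep the token
    S: replace a maximal run of consecutive S tokens with the next phrase
    """
    if len(tokens) != len(tags):
        raise ValueError("tokens and tags must have the same length")
    phrase_iter = iter(phrases)

    def take_phrase() -> List[str]:
        # Each I tag and each run of S tags consumes exactly one phrase.
        try:
            return next(phrase_iter)
        except StopIteration:
            raise ValueError("not enough phrases for the I/S tags") from None

    out: List[str] = []
    i = 0
    while i < len(tokens):
        tag = tags[i]
        if tag == "K":
            out.append(tokens[i])
        elif tag == "D":
            pass  # drop the token
        elif tag == "I":
            out.extend(take_phrase())
            out.append(tokens[i])
        elif tag == "S":
            # Skip to the end of the S run and emit a single phrase in its place.
            while i + 1 < len(tokens) and tags[i + 1] == "S":
                i += 1
            out.extend(take_phrase())
        else:
            raise ValueError(f"unknown tag: {tag}")
        i += 1
    return out
```

For example, tagging `when was the Eiffel Tower built ?` as `K K S S S K K` and supplying the phrase `["it"]` yields `when was it built ?`, i.e. the substitution introduces an anaphor.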

- In this paper, we have proposed a minimum Levenshtein distance (MLD) based Reinforcement Iterative Sequence Editing (RISE) framework for Conversational Question Simplification (CQS).
- To train RISE, we have devised an Iterative Reinforce Training (IRT) algorithm with a novel Dynamic Programming based Sampling (DPS) process.
- Extensive experiments show that RISE is more effective and robust than several state-of-the-art CQS methods.
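The minimum Levenshtein distance mentioned above can be computed over tokens with the standard dynamic program. As a rough illustration only, the sketch also backtraces one minimal-distance edit path, choosing uniformly at random among the optimal moves at each step (which is not the same as sampling uniformly over whole paths). It is a simple stand-in for sampling among minimal edit paths and is not the paper's DPS procedure; all function names are hypothetical.

```python
import random
from typing import List, Optional, Sequence, Tuple

# (operation, source token or None, target token or None)
Op = Tuple[str, Optional[str], Optional[str]]


def levenshtein_table(src: Sequence[str], tgt: Sequence[str]) -> List[List[int]]:
    """dp[i][j] = minimum number of edits turning src[:i] into tgt[:j]."""
    n, m = len(src), len(tgt)
    dp = [[0] * (m + 1) for _ in range(n + 1)]
    for i in range(n + 1):
        dp[i][0] = i  # delete all i source tokens
    for j in range(m + 1):
        dp[0][j] = j  # insert all j target tokens
    for i in range(1, n + 1):
        for j in range(1, m + 1):
            cost = 0 if src[i - 1] == tgt[j - 1] else 1
            dp[i][j] = min(
                dp[i - 1][j] + 1,         # delete src[i-1]
                dp[i][j - 1] + 1,         # insert tgt[j-1]
                dp[i - 1][j - 1] + cost,  # keep (cost 0) or substitute (cost 1)
            )
    return dp


def levenshtein(src: Sequence[str], tgt: Sequence[str]) -> int:
    return levenshtein_table(src, tgt)[len(src)][len(tgt)]


def sample_min_edit_path(src: Sequence[str], tgt: Sequence[str],
                         rng: Optional[random.Random] = None) -> List[Op]:
    """Backtrace one minimal edit path, picking uniformly among optimal moves at each step."""
    rng = rng or random.Random()
    dp = levenshtein_table(src, tgt)
    i, j = len(src), len(tgt)
    path: List[Op] = []
    while i > 0 or j > 0:
        moves = []  # predecessor moves consistent with the optimal value dp[i][j]
        if i > 0 and j > 0:
            same = src[i - 1] == tgt[j - 1]
            if dp[i][j] == dp[i - 1][j - 1] + (0 if same else 1):
                moves.append(("K" if same else "S", src[i - 1], tgt[j - 1]))
        if i > 0 and dp[i][j] == dp[i - 1][j] + 1:
            moves.append(("D", src[i - 1], None))
        if j > 0 and dp[i][j] == dp[i][j - 1] + 1:
            moves.append(("I", None, tgt[j - 1]))
        op, s, t = rng.choice(moves)
        path.append((op, s, t))
        if op in ("K", "S"):
            i, j = i - 1, j - 1
        elif op == "D":
            i -= 1
        else:  # "I"
            j -= 1
    path.reverse()  # the backtrace walks from the end; return start-to-end order
    return path
```

For `when was the Eiffel Tower built ?` and `when was it built ?` the token-level distance is 3 (one substitution and two deletions), and `sample_min_edit_path(src, tgt, random.Random(0))` returns one of the edit paths achieving it.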

- Python >= 3.6
- PyTorch >= 1.6
- Transformers >= 3.3.0

Please set up nlg-eval (https://github.com/Maluuba/nlg-eval) for evaluation.

1. We use CANARD (built on QuAC) and CAsT for training and testing. Place the datasets in directories named '../CANARD_Release', '../QuacN' and '../CAsT'.

2. Download the pretrained BERT model into ../extra/bert/, or change tokenizer_path in Preprocess/prepare_mld.py and Preprocess/prepare_cast.py to point to your own copy.

3. Run the following commands from the command line.

sh preprocess.sh

This produces bert_iter_0.train.pkl, bert_iter_0.dev.pkl, bert_iter_0.test.pkl and bert_iter_0.CAsT_test.pkl in the directory ../QuacN/Dataset/.
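To sanity-check the preprocessing output, a small script like the one below can confirm that the four files listed above exist and can be loaded. It assumes the files are standard Python pickles and uses the default directory from this README; it only reports each object's type and length, because their internal layout is not documented here. The function `check_preprocessed` is hypothetical.

```python
import os
import pickle
from typing import Dict

# File names taken from the preprocessing step above.
EXPECTED_FILES = [
    "bert_iter_0.train.pkl",
    "bert_iter_0.dev.pkl",
    "bert_iter_0.test.pkl",
    "bert_iter_0.CAsT_test.pkl",
]


def check_preprocessed(data_dir: str = "../QuacN/Dataset/") -> Dict[str, str]:
    """Map each expected file to "missing" or to a short description of its contents."""
    report: Dict[str, str] = {}
    for name in EXPECTED_FILES:
        path = os.path.join(data_dir, name)
        if not os.path.isfile(path):
            report[name] = "missing"
            continue
        # Only unpickle files you generated yourself: loading a pickle can execute code.
        with open(path, "rb") as f:
            obj = pickle.load(f)
        size = f", len={len(obj)}" if hasattr(obj, "__len__") else ""
        report[name] = f"{type(obj).__name__}{size}"
    return report
```

Calling `check_preprocessed()` after `sh preprocess.sh` should report no file as `"missing"`.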

python3 run_bert_mld_rl.py

You can change parameters in config_n.py or override them by adding arguments on the command line.
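One common way for config-file defaults and command-line overrides to interact is sketched below: defaults live in a dictionary (standing in for config_n.py) and any `--key=value` flag overrides the matching entry. Only the `train` argument comes from this README; `lr`, `batch_size` and all default values are hypothetical placeholders, so check config_n.py and run_bert_mld_rl.py for the real parameter names and how they are parsed.

```python
import argparse
from typing import Any, Dict, List, Optional

# Hypothetical defaults standing in for config_n.py; real names and values may differ.
DEFAULTS: Dict[str, Any] = {"train": 1, "lr": 1e-5, "batch_size": 16}


def build_config(argv: Optional[List[str]] = None) -> Dict[str, Any]:
    """Return the defaults, overridden by any matching --key=value arguments."""
    parser = argparse.ArgumentParser()
    for key, value in DEFAULTS.items():
        # Reuse each default's type so that "--lr=3e-5" is parsed as a float.
        parser.add_argument(f"--{key}", type=type(value), default=value)
    return vars(parser.parse_args(argv))
```

For example, `build_config(["--train=2"])` returns the defaults with `train` set to 2; passing `None` reads `sys.argv` instead.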

python3 run_bert_mld_rl.py --train=2

Setting the argument 'train' to 2 generates the model results. Then run

python3 run_evaluation.py

for evaluation.