This repo contains examples demonstrating the power of the ReZero architecture; see the paper for details.

The official ReZero repo is here.

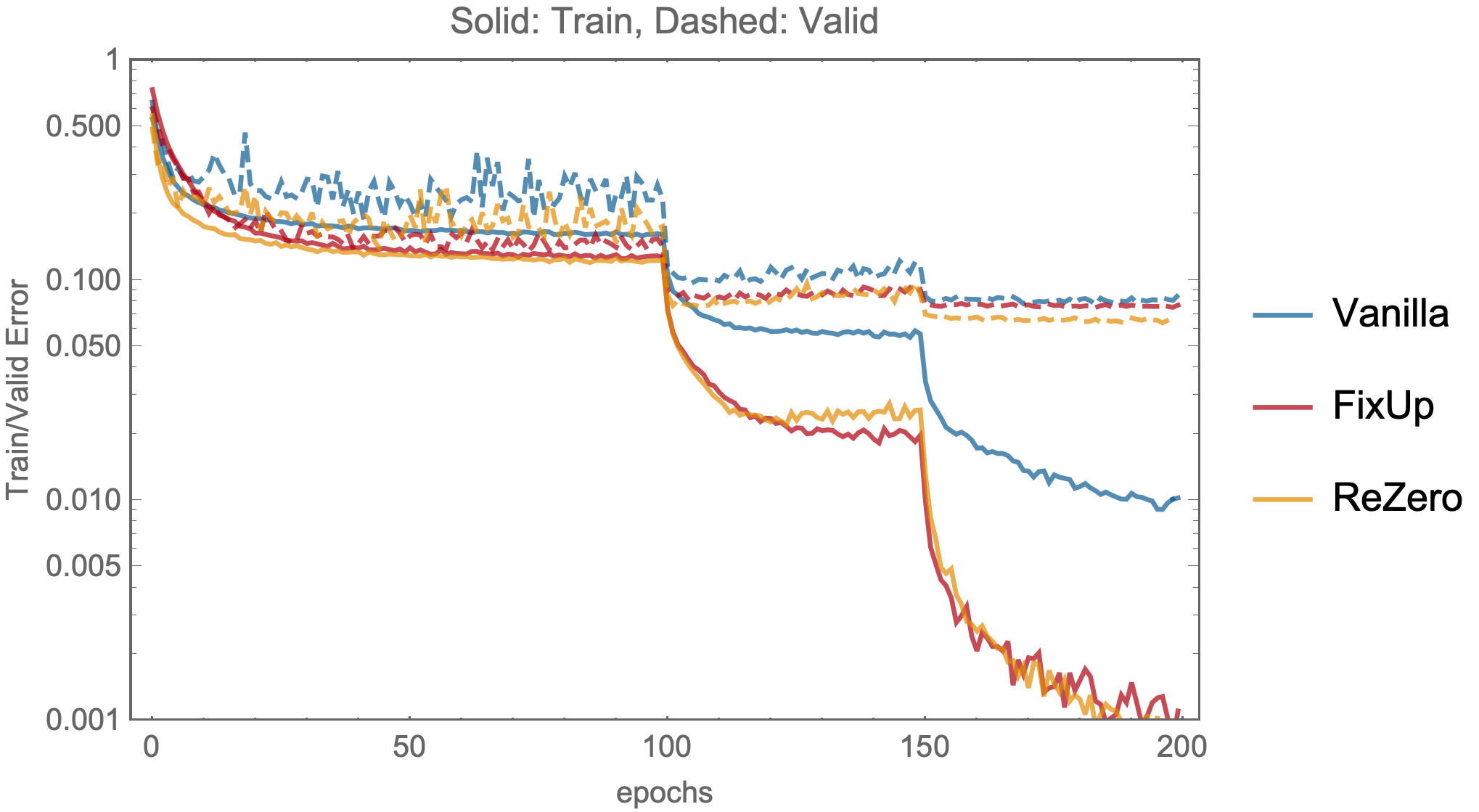

Final validation errors: Vanilla 7.74, FixUp 7.5, ReZero 6.38; see .

- Training a 128-layer ReZero Transformer on WikiText-2 language modeling

- Training a 10,000-layer ReZero fully connected network on CIFAR-10
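The core ReZero idea, as described in the paper, is to drop normalization from each residual block and instead scale the residual branch by a single learnable scalar, x_{i+1} = x_i + α_i F(x_i), with every α_i initialized to zero so that each block starts out as the identity map. The following is a minimal PyTorch sketch of a deep fully connected network built along those lines. It is an illustration under assumptions, not the code used for the CIFAR-10 experiment: `ReZeroFCBlock` and `make_rezero_mlp` are hypothetical names that are not part of the `rezero` package, and the ReLU nonlinearity, width, and depth are arbitrary choices.

```python
import torch
import torch.nn as nn


class ReZeroFCBlock(nn.Module):
    """Hypothetical fully connected ReZero block: x + alpha * relu(W x + b).

    alpha is a learnable scalar initialized to zero, so the block is the
    identity map at initialization.
    """

    def __init__(self, width: int) -> None:
        super().__init__()
        self.linear = nn.Linear(width, width)
        # ReZero residual weight, initialized to zero (no normalization layer).
        self.alpha = nn.Parameter(torch.zeros(1))

    def forward(self, x: torch.Tensor) -> torch.Tensor:
        return x + self.alpha * torch.relu(self.linear(x))


def make_rezero_mlp(width: int, depth: int) -> nn.Sequential:
    # Hypothetical helper: stack `depth` ReZero blocks of equal width.
    return nn.Sequential(*[ReZeroFCBlock(width) for _ in range(depth)])


if __name__ == "__main__":
    torch.manual_seed(0)
    model = make_rezero_mlp(width=64, depth=1000)
    x = torch.randn(8, 64)
    # With every alpha at zero, the whole 1000-block stack is the identity.
    print(torch.equal(model(x), x))
```

Because each α starts at zero, the gradient with respect to every block's weight matrix vanishes at initialization while the gradient with respect to each α generally does not, so the input signal passes through the full depth unchanged and the first updates only open up the residual branches.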

If you find ReZero or a similar architecture improves the performance of your application, you are invited to share a demonstration here.

To install ReZero via pip, run:

```shell
pip install rezero
```

We provide custom ReZero Transformer layers (RZTX).

For example, this will create a Transformer encoder:

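```python
import torch
import torch.nn as nn

from rezero.transformer import RZTXEncoderLayer

# A single ReZero Transformer encoder layer (model width 512, 8 attention heads)
encoder_layer = RZTXEncoderLayer(d_model=512, nhead=8)

# Stack six copies of the layer with PyTorch's standard encoder container
transformer_encoder = nn.TransformerEncoder(encoder_layer, num_layers=6)

# Input of shape (sequence length, batch size, d_model)
src = torch.rand(10, 32, 512)
out = transformer_encoder(src)
```

If you find ReZero useful for your research, please cite our paper: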

```bibtex
@inproceedings{BacMajMaoCotMcA20,
  title = "ReZero is All You Need: Fast Convergence at Large Depth",
  author = "Bachlechner, Thomas and
            Majumder, Bodhisattwa Prasad and
            Mao, Huanru Henry and
            Cottrell, Garrison W. and
            McAuley, Julian",
  booktitle = "arXiv",
  year = "2020",
  url = "https://arxiv.org/abs/2003.04887"
}
```