- This code overrides Hugging Face BART (`BartForConditionalGeneration`) and GPT-2 (`GPT2LMHeadModel`) for seq2seq, adding a copy mechanism to both models while staying compatible with the Hugging Face training and decoding pipeline.
- Out-of-vocabulary (OOV) words are not supported yet.
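This README does not spell out the exact copy formulation. A common design is a pointer-generator-style mixture: a gate `p_gen` blends the decoder's vocabulary distribution with the attention weights over source tokens. The sketch below shows that technique for a single decoding step. The function name and arguments are hypothetical and are not this repository's API. Because copied mass is scattered onto existing vocabulary ids, this sketch also cannot emit OOV words, which matches the limitation noted above.

```python
from typing import List


def copy_distribution(
    p_vocab: List[float],
    attention: List[float],
    src_ids: List[int],
    p_gen: float,
) -> List[float]:
    """Pointer-generator-style mixture of generating and copying (sketch).

    p_final[w] = p_gen * p_vocab[w] + (1 - p_gen) * sum of attention[i]
    over every source position i whose token id src_ids[i] equals w.
    Copying is restricted to ids already in the vocabulary (no OOV extension).
    """
    if not 0.0 <= p_gen <= 1.0:
        raise ValueError("p_gen must lie in [0, 1]")
    if len(attention) != len(src_ids):
        raise ValueError("attention and src_ids must have the same length")
    # Generation path: scale the decoder's vocabulary distribution.
    final = [p_gen * p for p in p_vocab]
    # Copy path: scatter-add attention mass onto each source token's vocab id.
    for weight, token_id in zip(attention, src_ids):
        final[token_id] += (1.0 - p_gen) * weight
    return final


# One decoding step with a 5-token vocabulary and a 3-token source sentence
# (source id 3 appears twice, so it receives copy mass from both positions).
probs = copy_distribution(
    p_vocab=[0.1, 0.2, 0.3, 0.2, 0.2],
    attention=[0.5, 0.25, 0.25],
    src_ids=[3, 1, 3],
    p_gen=0.6,
)
print([round(p, 2) for p in probs])  # [0.06, 0.22, 0.18, 0.42, 0.12]
```

With `p_gen = 1.0` the copy path contributes nothing and the output equals `p_vocab`. Smaller values shift probability toward tokens that appear in the source.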

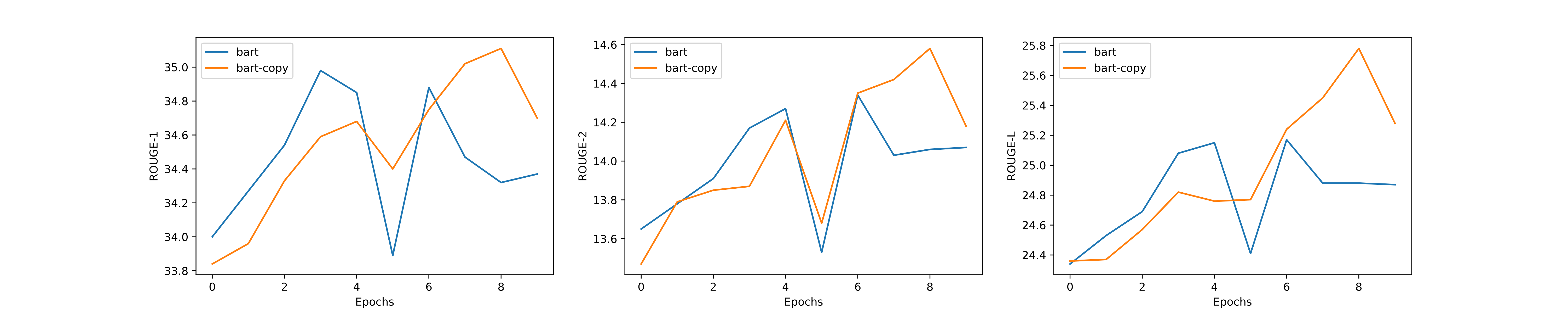

For the summarization task, the code was evaluated on a subset of the CNN/DailyMail dataset: 10k examples from the training set are used to train for 10 epochs, and 500 examples from the test set are used for evaluation. The results are as follows:

| Model Type | ROUGE-1 | ROUGE-2 | ROUGE-L |
|---|---|---|---|
| bart | 34.88 | 14.34 | 25.17 |
| bart-copy | 35.11 | 14.58 | 25.78 |

From the following ROUGE score figure, we can see that within the first 10 training epochs bart plateaus at a lower ROUGE score than bart-copy:

See also `train_summary.sh` and `decode_summary.sh`.

```bash
pip install -r requirements.txt
python3 -m torch.distributed.launch --nproc_per_node 4 run_summary.py train \
  --model_type bart-copy \
  --model_name_or_path fnlp/bart-base \
  --batch_size 16 \
  --src_file cnndm-10k/training.article.10k \
  --tgt_file cnndm-10k/training.summary.10k \
  --max_src_len 768 \
  --max_tgt_len 256 \
  --seed 42 \
  --output_dir ./output_dir \
  --gradient_accumulation_steps 2 \
  --lr 0.00003 \
  --num_train_epochs 10 \
  --fp16
```

Options:

- `--model_type`: one of `bart`, `bart-copy`, `gpt2` or `gpt2-copy`
- `--model_name_or_path`: the local or remote path of the Hugging Face pretrained model
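To make the accepted values of `--model_type` concrete, here is a hypothetical `argparse` reconstruction of just these two options. It is not the parser in `run_summary.py`, which defines many more flags. The derived `backbone` and `use_copy` fields are illustrative assumptions. The sketch shows that the `-copy` suffix selects the copy-enabled variant of each backbone.

```python
import argparse
from typing import List, Optional

# The four documented values of --model_type.
MODEL_TYPES = ["bart", "bart-copy", "gpt2", "gpt2-copy"]


def parse_model_args(argv: Optional[List[str]] = None) -> argparse.Namespace:
    """Hypothetical parser for the two documented options (not run_summary.py's own)."""
    parser = argparse.ArgumentParser(description="model selection (sketch)")
    parser.add_argument("--model_type", choices=MODEL_TYPES, required=True)
    parser.add_argument(
        "--model_name_or_path",
        required=True,
        help="local directory or Hugging Face Hub id, e.g. fnlp/bart-base",
    )
    # Ignore the remaining training flags (--batch_size, --lr, ...) in this sketch.
    args, _ = parser.parse_known_args(argv)
    # Hypothetical derived fields: backbone family and whether copying is enabled.
    args.backbone = args.model_type.split("-")[0]
    args.use_copy = args.model_type.endswith("-copy")
    return args


args = parse_model_args(
    ["--model_type", "bart-copy", "--model_name_or_path", "fnlp/bart-base", "--fp16"]
)
print(args.backbone, args.use_copy)  # bart True
```

An unsupported value such as `--model_type t5` is rejected by `argparse` before any model is loaded.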

```bash
python3 -u run_summary.py decode \
  --model_type bart-copy \
  --model_name_or_path fnlp/bart-base \
  --model_recover_path ./output_dir/checkpoint-xxx/pytorch_model.bin \
  --batch_size 16 \
  --src_file cnndm-10k/test.article.500 \
  --tgt_file cnndm-10k/test.summary.500 \
  --max_src_len 768 \
  --max_tgt_len 256 \
  --seed 42 \
  --beam_size 2 \
  --output_candidates 1 \
  --do_decode \
  --compute_rouge
```

Options:

- `--model_recover_path`: the path of the fine-tuned model
- `--beam_size`: the beam size used for beam search
- `--output_candidates`: how many beam-search candidates to write to the output file; must be greater than 0 and no larger than `--beam_size`
- `--do_decode`: whether to run decoding
- `--compute_rouge`: whether to compute the ROUGE score after decoding. If `--output_candidates` is greater than 1, the ROUGE score is averaged over all candidates.
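The averaging rule for `--compute_rouge` with several candidates can be illustrated with a simplified ROUGE-1 F1. This computes unigram overlap on lowercased whitespace tokens, with no stemming. The repository most likely uses a full ROUGE implementation, so absolute numbers will differ. The helper names are hypothetical. The `beam_size` check mirrors the documented constraint on `--output_candidates`.

```python
from collections import Counter
from typing import List


def rouge1_f1(candidate: str, reference: str) -> float:
    """Simplified ROUGE-1 F1: lowercased whitespace tokens, no stemming."""
    cand = Counter(candidate.lower().split())
    ref = Counter(reference.lower().split())
    overlap = sum((cand & ref).values())  # clipped unigram matches
    if overlap == 0:
        return 0.0
    precision = overlap / sum(cand.values())
    recall = overlap / sum(ref.values())
    return 2 * precision * recall / (precision + recall)


def average_rouge1(candidates: List[str], reference: str, beam_size: int) -> float:
    """Average ROUGE-1 F1 over the beam candidates written for one example."""
    # Mirrors the documented constraint 0 < output_candidates <= beam_size.
    if not 0 < len(candidates) <= beam_size:
        raise ValueError("need between 1 and beam_size candidates")
    return sum(rouge1_f1(c, reference) for c in candidates) / len(candidates)


cands = ["the cat sat", "the cat"]
print(round(average_rouge1(cands, "the cat sat", beam_size=2), 2))  # 0.9
```

Here the exact match scores 1.0 and the shorter candidate scores 0.8 (precision 1, recall 2/3), so their mean is 0.9.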

P.S. If `--model_recover_path` is `./output_dir/checkpoint-xxx/pytorch_model.bin`, the decoding output file will be `./output_dir/checkpoint-xxx/pytorch_model.bin.decode.txt`.
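When several checkpoints have been decoded, the naming convention above makes their outputs easy to locate. This sketch assumes the `output_dir/checkpoint-*/pytorch_model.bin` layout from the commands in this README. Both helper names are hypothetical.

```python
from pathlib import Path
from typing import List


def decode_output_path(model_recover_path: str) -> str:
    # Documented convention: ".decode.txt" is appended to the checkpoint path.
    return model_recover_path + ".decode.txt"


def find_decode_outputs(output_dir: str) -> List[Path]:
    # Assumes the checkpoint-*/pytorch_model.bin layout used in the decode example;
    # results are sorted by path (lexicographically, so checkpoint-10 < checkpoint-2).
    return sorted(Path(output_dir).glob("checkpoint-*/pytorch_model.bin.decode.txt"))


print(decode_output_path("./output_dir/checkpoint-xxx/pytorch_model.bin"))
# ./output_dir/checkpoint-xxx/pytorch_model.bin.decode.txt
```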

| Setting | Value |
|---|---|
| GPUs | 4 × TITAN Xp (12 GB) |
| Pretrained Model | fnlp/bart-base |
| Max Source Length | 768 |
| Max Target Length | 256 |
| Learning Rate | 3e-5 |
| Num Train Epochs | 10 |
| Train Batch Size | 16 |
| Gradient Accumulation Steps | 2 |
| Seed | 42 |
| Beam Size | 2 |
| Mixed Precision Training | Yes (`--fp16`) |
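The table lists a batch size of 16, 4 GPUs and 2 gradient accumulation steps. The README does not say whether `--batch_size` counts examples per GPU or in total, so the number of examples per optimizer update is ambiguous. This hypothetical helper computes both readings.

```python
def effective_batch_size(
    batch_size: int, num_gpus: int, grad_accum_steps: int, per_gpu: bool
) -> int:
    """Examples that contribute to one optimizer update (hypothetical helper).

    per_gpu=True:  each of the num_gpus processes loads batch_size examples.
    per_gpu=False: batch_size is already the total across all GPUs.
    """
    examples_per_step = batch_size * num_gpus if per_gpu else batch_size
    return examples_per_step * grad_accum_steps


# Values from the table: batch size 16, 4 GPUs, 2 accumulation steps.
print(effective_batch_size(16, 4, 2, per_gpu=True))   # 128
print(effective_batch_size(16, 4, 2, per_gpu=False))  # 32
```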

P.S. The copy mechanism requires more CUDA memory during decoding.