A TensorFlow implementation of style transfer based on the paper A Neural Algorithm of Artistic Style by Gatys et al.

See my related blog post for an overview of the style transfer algorithm.

The total loss is a weighted sum of the style loss, the content loss, and a total variation loss. This third component is not specifically mentioned in the original paper but leads to more cohesive generated images.
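As a rough illustration of how the three terms combine, here is a minimal NumPy sketch. It is not the code in this repository: the function names, the single-layer simplification, and the normalisation constants are assumptions made for the example, and the weights are simply the default values of the settings documented in this README.

```python
import numpy as np

# Default weights as documented in this README.
CONTENT_WEIGHT = 5e1
STYLE_WEIGHT = 1e2
TV_WEIGHT = 1e2


def gram_matrix(features):
    """Channel-by-channel correlations of an (H, W, C) feature map."""
    h, w, c = features.shape
    flat = features.reshape(h * w, c)
    # Normalisation by the number of elements is an assumption of this sketch.
    return np.dot(flat.T, flat) / (h * w * c)


def content_loss(generated_features, content_features):
    """Half the summed squared difference between two feature maps."""
    return 0.5 * np.sum((generated_features - content_features) ** 2)


def style_loss(generated_features, style_features):
    """Summed squared difference between the Gram matrices of two feature maps."""
    diff = gram_matrix(generated_features) - gram_matrix(style_features)
    return np.sum(diff ** 2)


def total_variation_loss(image):
    """Summed squared difference between neighbouring pixels of an (H, W, C) image."""
    dy = image[1:, :, :] - image[:-1, :, :]
    dx = image[:, 1:, :] - image[:, :-1, :]
    return np.sum(dy ** 2) + np.sum(dx ** 2)


def total_loss(image, gen_content_feats, content_feats, gen_style_feats, style_feats):
    """Weighted sum of the content, style and total variation terms."""
    return (CONTENT_WEIGHT * content_loss(gen_content_feats, content_feats)
            + STYLE_WEIGHT * style_loss(gen_style_feats, style_feats)
            + TV_WEIGHT * total_variation_loss(image))
```

The total variation term penalises differences between adjacent pixels, which is why it pushes the optimiser towards smoother, more cohesive output. A full implementation would typically sum the style term over several VGG layers rather than the single layer used here.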

- Python 2.7

- TensorFlow

- SciPy & NumPy

- Download the pre-trained VGG network and place it in the top level of the repository (~500MB)

python run.py --content <content image> --style <style image> --output <output image path>

The algorithm will run with the following settings:

ITERATIONS = 1000 # override with --iterations argument

LEARNING_RATE = 1e1 # override with --learning-rate argument

CONTENT_WEIGHT = 5e1 # override with --content-weight argument

STYLE_WEIGHT = 1e2 # override with --style-weight argument

TV_WEIGHT = 1e2 # override with --tv-weight argument

By default the style transfer will start with a random noise image and optimise it to generate an output image. To start with a particular image (for example the content image), run with the --initial <initial image> argument.

To run the style transfer on a GPU, run with the --use-gpu flag.
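For readers who want to see how the options above fit together, the following is a hedged sketch of an argparse parser that accepts the same flags with the same defaults. It is not the contents of run.py: the `build_parser` name, the help strings, and the choice to mark the three image paths as required are assumptions made for illustration.

```python
import argparse

# Defaults as documented in this README.
ITERATIONS = 1000
LEARNING_RATE = 1e1
CONTENT_WEIGHT = 5e1
STYLE_WEIGHT = 1e2
TV_WEIGHT = 1e2


def build_parser():
    """Build a parser for the documented flags (hypothetical helper)."""
    parser = argparse.ArgumentParser(description="Neural style transfer (sketch)")
    # Treating the image paths as required is an assumption of this sketch.
    parser.add_argument("--content", required=True, help="path to the content image")
    parser.add_argument("--style", required=True, help="path to the style image")
    parser.add_argument("--output", required=True, help="path for the output image")
    parser.add_argument("--iterations", type=int, default=ITERATIONS)
    parser.add_argument("--learning-rate", type=float, default=LEARNING_RATE)
    parser.add_argument("--content-weight", type=float, default=CONTENT_WEIGHT)
    parser.add_argument("--style-weight", type=float, default=STYLE_WEIGHT)
    parser.add_argument("--tv-weight", type=float, default=TV_WEIGHT)
    # With no --initial image, the optimisation starts from random noise.
    parser.add_argument("--initial", default=None, help="optional starting image")
    parser.add_argument("--use-gpu", action="store_true", help="run on a GPU")
    return parser
```

Calling `build_parser().parse_args()` returns a namespace in which hyphenated flags become underscored attributes, for example `args.learning_rate` and `args.use_gpu`.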

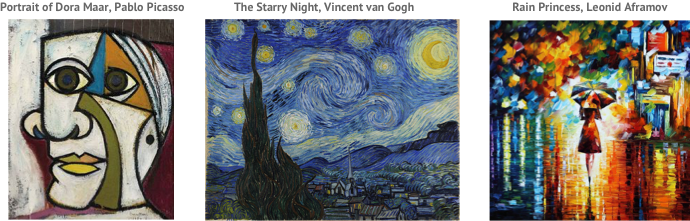

I generated style transfers using the following three style images:

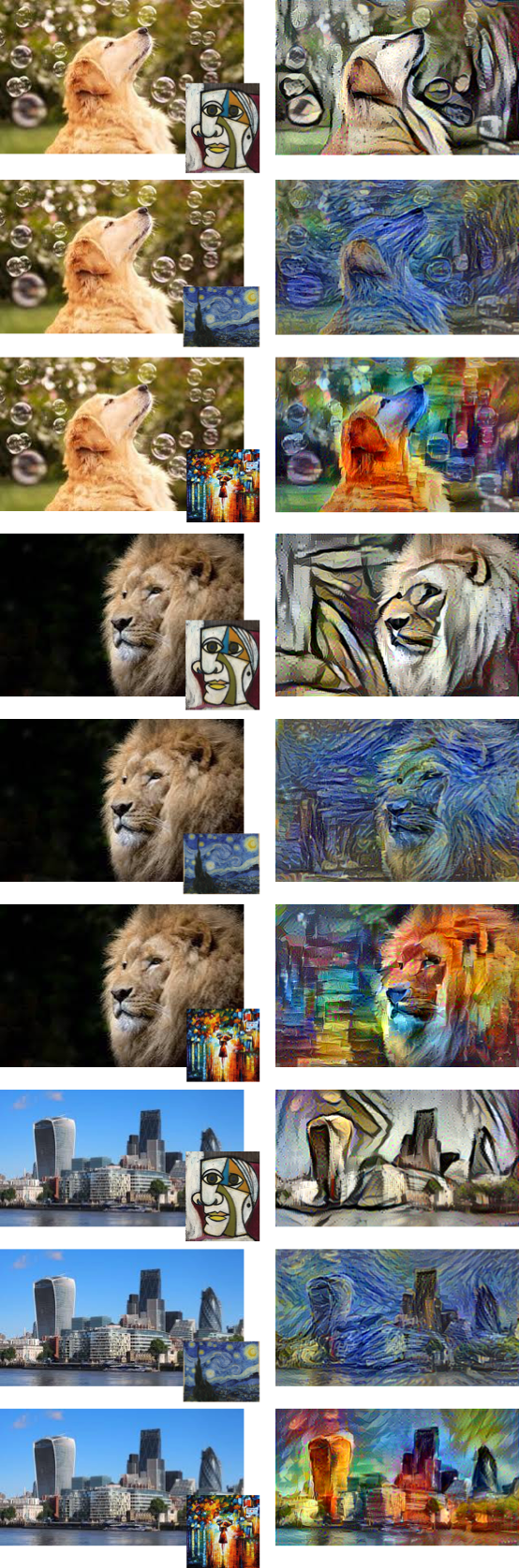

Each style transfer was run for 1000 iterations on a CPU and took approximately 2 hours. Here are some of the style transfers I was able to generate:

This code was inspired by an existing TensorFlow implementation by Anish Athalye, and I have re-used most of his VGG network code here.

Released under GPLv3; see LICENSE.txt.