This is the official PyTorch implementation of EZ-CLIP: Efficient Zero-Shot Video Action Recognition [arXiv]

- Trained models are available for download from Google Drive.

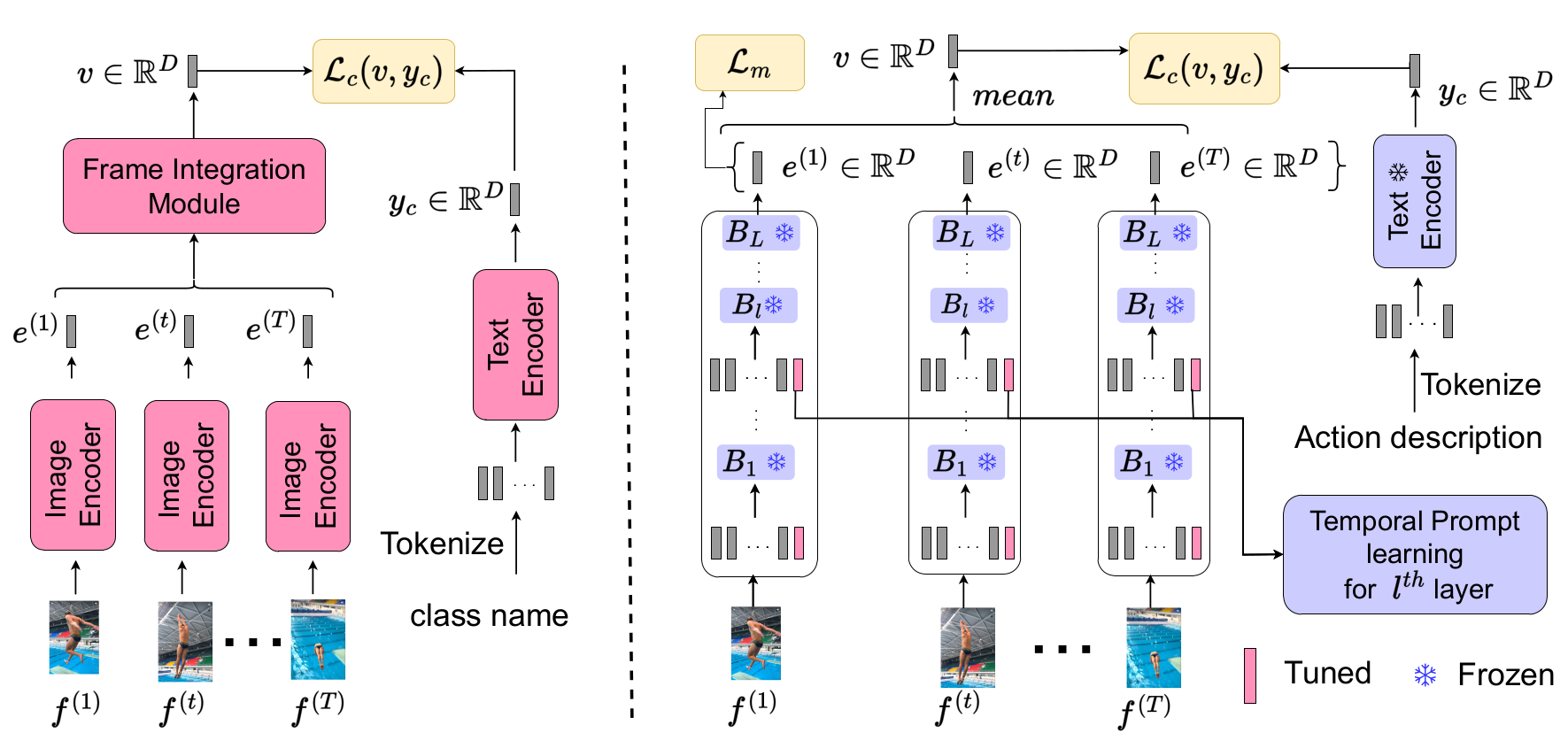

In this study, we present EZ-CLIP, a simple and efficient adaptation of CLIP for zero-shot video action recognition. EZ-CLIP uses temporal visual prompting for temporal adaptation, requiring no fundamental alterations to the core CLIP architecture while preserving its generalization abilities. We also introduce a novel learning objective that guides the temporal visual prompts to focus on capturing motion, which improves the model's ability to learn from video data.

We provide a requirements.txt file to help you install the required libraries. You can set up the environment by running pip install -r requirements.txt.
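After installing, a quick sanity check can confirm that the key packages are importable. The snippet below is a minimal sketch, not part of the repository: the helper name `missing_packages` is hypothetical, and the package list is an assumption (only PyTorch is named in this README; consult requirements.txt for the actual dependencies).

```python
import importlib.util


def missing_packages(import_names):
    """Return the import names that cannot be found in the current environment."""
    return [name for name in import_names if importlib.util.find_spec(name) is None]


if __name__ == "__main__":
    # Assumed list for illustration only; requirements.txt is the source of truth.
    required = ["torch"]
    missing = missing_packages(required)
    if missing:
        print("Missing packages:", ", ".join(missing))
    else:
        print("All checked packages are importable.")
```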

Videos must first be extracted into frames for fast reading. Please refer to 'Dataset_creation_scripts' for data pre-processing. We have successfully trained on datasets including Kinetics, UCF101, and HMDB51.
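For orientation, frame extraction of this kind can be sketched as follows. This is not the code in 'Dataset_creation_scripts'; it is a minimal illustration that assumes the `ffmpeg` command-line tool is installed. The function names, the one-folder-per-video layout, and the `img_%05d.jpg` naming pattern are all hypothetical, so match them to whatever the repository's scripts and configs expect.

```python
import subprocess
from pathlib import Path


def build_ffmpeg_cmd(video_path, out_dir, pattern="img_%05d.jpg"):
    """Build an ffmpeg command that writes every frame of a video as a JPEG."""
    return [
        "ffmpeg",
        "-i", str(video_path),        # input video
        "-q:v", "2",                  # high JPEG quality (lower value = better)
        str(Path(out_dir) / pattern), # numbered output frames
    ]


def extract_frames(video_path, out_root):
    """Extract frames into <out_root>/<video name>/ (assumed layout)."""
    video_path = Path(video_path)
    out_dir = Path(out_root) / video_path.stem
    out_dir.mkdir(parents=True, exist_ok=True)
    subprocess.run(build_ffmpeg_cmd(video_path, out_dir), check=True)
    return out_dir
```

Calling `extract_frames("videos/example.avi", "frames")` would write the frames to `frames/example/`, where the paths are placeholders.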

# Train

python train.py --config configs/K-400/k400_train.yaml

# Test

python test.py --config configs/ucf101/UCF_zero_shot_testing.yaml
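As background for zero-shot testing, the general CLIP-style recipe is to compare a video embedding against text embeddings of the class names and pick the most similar class. The sketch below illustrates that idea in plain Python with toy vectors. It is not the repository's test code: mean-pooling the frame embeddings is an assumption made for illustration, and all function names are hypothetical.

```python
import math


def l2_normalize(vec):
    """Scale a vector to unit length."""
    norm = math.sqrt(sum(x * x for x in vec))
    if norm == 0:
        raise ValueError("cannot normalize a zero vector")
    return [x / norm for x in vec]


def mean_pool(frame_embeddings):
    """Average per-frame embeddings into one video embedding (assumed pooling)."""
    n = len(frame_embeddings)
    return [sum(col) / n for col in zip(*frame_embeddings)]


def zero_shot_predict(frame_embeddings, text_embeddings, class_names):
    """Return the class whose text embedding has the highest cosine similarity."""
    video = l2_normalize(mean_pool(frame_embeddings))
    scores = []
    for text in text_embeddings:
        unit_text = l2_normalize(text)
        scores.append(sum(v * t for v, t in zip(video, unit_text)))
    best = max(range(len(scores)), key=scores.__getitem__)
    return class_names[best], scores
```

Because the class set enters only through the text embeddings, unseen action classes can be evaluated without retraining, which is what makes the setting zero-shot.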

If you find the code and pre-trained models useful for your research, please consider citing our paper:

@article{ez2022clip,
  title={EZ-CLIP: Efficient Zeroshot Video Action Recognition},
  author={Shahzad Ahmad and Sukalpa Chanda and Yogesh S Rawat},
  journal={arXiv preprint arXiv:2312.08010},
  year={2024}
}

Our code is based on ActionCLIP.