This repository contains code for implementing the methods outlined in the research article, "Fairness in Collaborative-Filtering Recommender Systems". The article highlights the issue of fairness in collaborative-filtering recommender systems, which can be affected by historical data bias. Biased data can lead to unfair recommendations for users from minority groups.

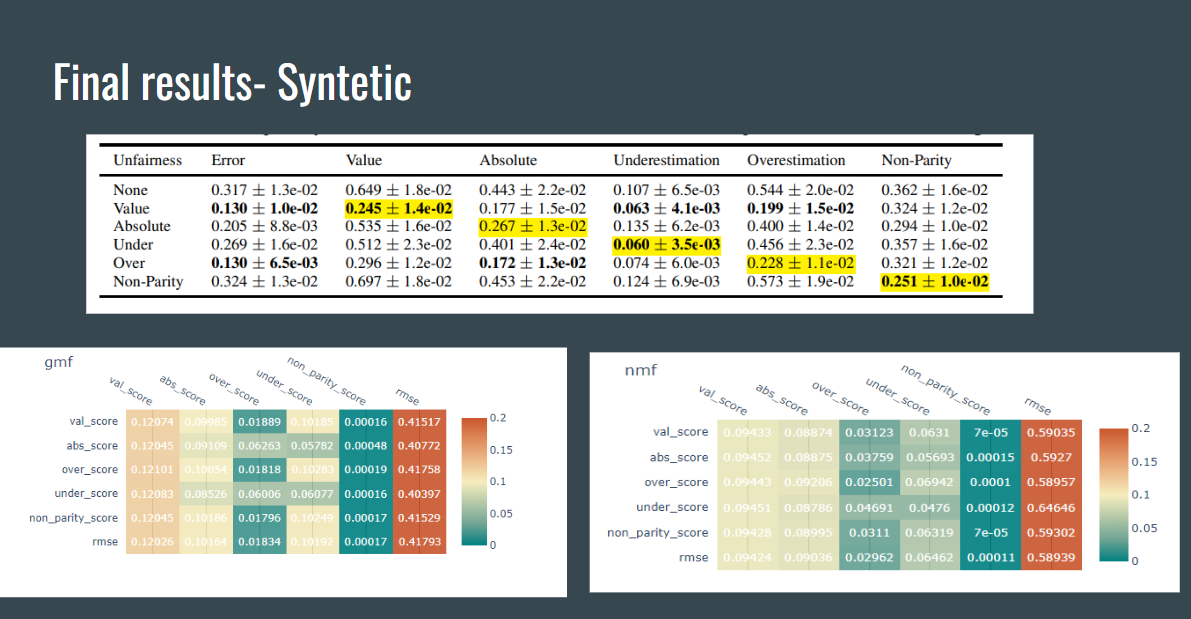

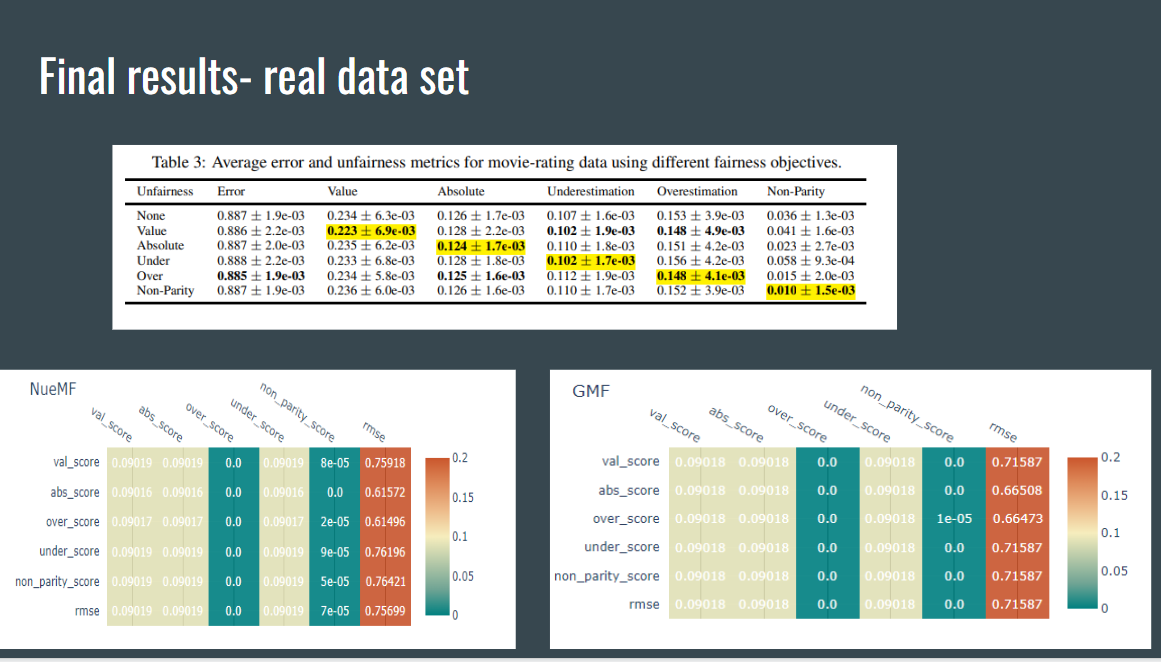

In this work, we propose four new fairness metrics that address various types of unfairness, as existing fairness metrics have proven to be insufficient. These new metrics can be optimized by adding fairness terms to the learning objective. We have conducted experiments on synthetic and real data, which show that our proposed metrics outperform the baseline, and the fairness objectives effectively help minimize unfairness.

The repository contains the following files:

fairness_metrics.py: This file contains the implementation of the four new fairness metrics proposed in the article.

models: This file contains the implementation of the new recommender system models that use fairness-optimization.

artifacts: This directory contains training artifacts (dictionaries with all the training meta-data)

methods.py: This file contains the code for each fairness metrics from the article.

article_recovery.ipynb: This jupyter notebook recovery the article results on movieLen data & synthetic data.

To use this repository, you can download or clone it to your local machine. The recommended way to run the code is to create a virtual environment using Python 3.9 or higher. Then, install the required dependencies by running pip install -r requirements.txt.

After installing the dependencies, you can run the article_recovery.ipynb. This script will train the basic model, optimize the fairness metrics, and evaluate the model on the test data.

We hope that this repository will be useful for researchers and practitioners who are interested in developing more fair collaborative-filtering recommender systems. Please feel free to reach out to us with any questions or feedback.

Article: "Beyond Parity:Fairness Objectives for Collaborative Filtering"

Explanation:

- val_score: "measures inconsistency in signed estimation error across the user types"- The concept of value unfairness refers to situations where one group of users consistently receives higher or lower recommendations than their actual preferences. If there is an equal balance of overestimation and underestimation, or if both groups of users experience errors in the same direction and magnitude, then the value unfairness is minimized. However, when one group is consistently overestimated and the other group is consistently underestimated, then the value unfairness becomes more significant. A practical example of value unfairness in a course recommendation system could be male students being recommended STEM courses even if they are not interested in such topics, while female students are not recommended STEM courses even if they are interested in them.

- abs_score: "measures inconsistency in absolute estimation error across user types"- Absolute unfairness is a measure of recommendation quality that is unsigned and provides a single statistic for each user type. It assesses the performance of the recommendation system for each group of users, and if one group has a small reconstruction error while the other group has a large one, then the first group is at an unfair advantage. Unlike value unfairness, absolute unfairness does not take into account the direction of error. For example, if female students are recommended courses that are 0.5 points below their true preferences, and male students are recommended courses that are 0.5 points above their true preferences, there is no absolute unfairness. However, if female students are recommended courses that are off by 2 points in either direction, while male students are recommended courses within 1 point of their true preferences, then absolute unfairness is high, even if value unfairness is low.

- under_score: "measures inconsistency in how much the predictions underestimate the true ratings"- The concept of underestimation unfairness is particularly relevant in situations where it is more important to avoid missing relevant recommendations than to provide additional recommendations. In cases where a highly capable student may not be recommended a course or subject in which they would excel, underestimation can have a significant impact. Therefore, the avoidance of underestimation can be considered a critical objective for recommendation systems in certain contexts.

- over_score: "measures inconsistency in how much the predictions overestimate the true ratings"- Overestimation unfairness is a relevant concept in situations where providing too many recommendations can be overwhelming for users. In cases where users must spend significant time evaluating each recommended item, overestimation can be especially detrimental, as it essentially wastes the user's time. Therefore, it is important to avoid overestimation in certain contexts. Furthermore, uneven amounts of overestimation can have different effects on different types of users, leading to unequal time costs for each group.

- par_score: Kamishima et al. proposed a non-parity unfairness measure that can be computed using a regularization term. This measure involves calculating the absolute difference between the average ratings of disadvantaged users and advantaged users. By comparing the overall average ratings of each group, this measure provides insight into the extent to which a recommendation system may be unfair in terms of rating predictions.

In the article ["Beyond Parity:Fairness Objectives for Collaborative Filtering"] we can found few experiments that divide to 2 different data type:

-

Syntetic Data

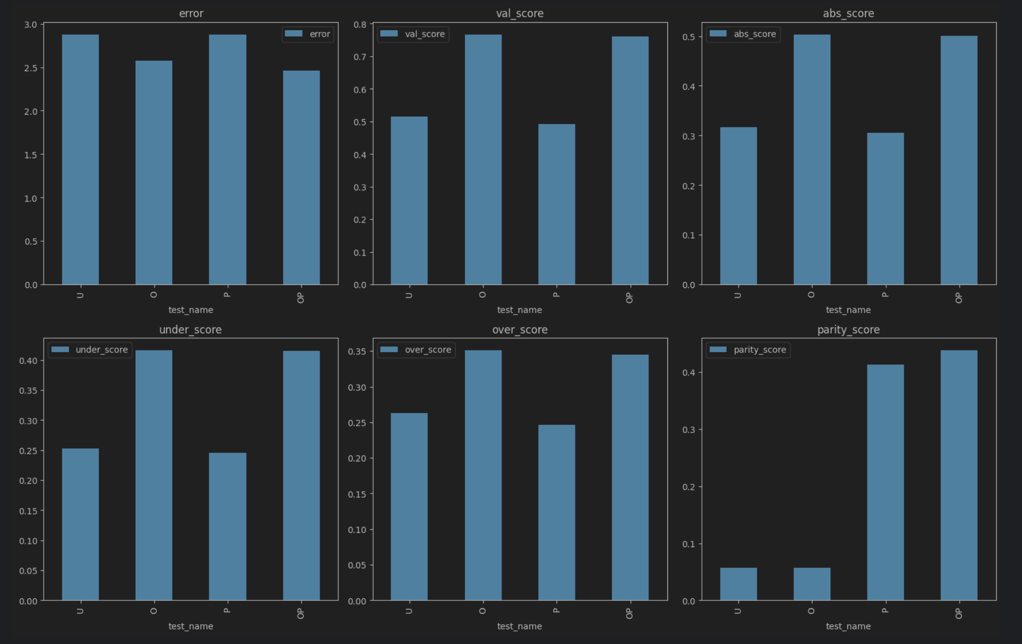

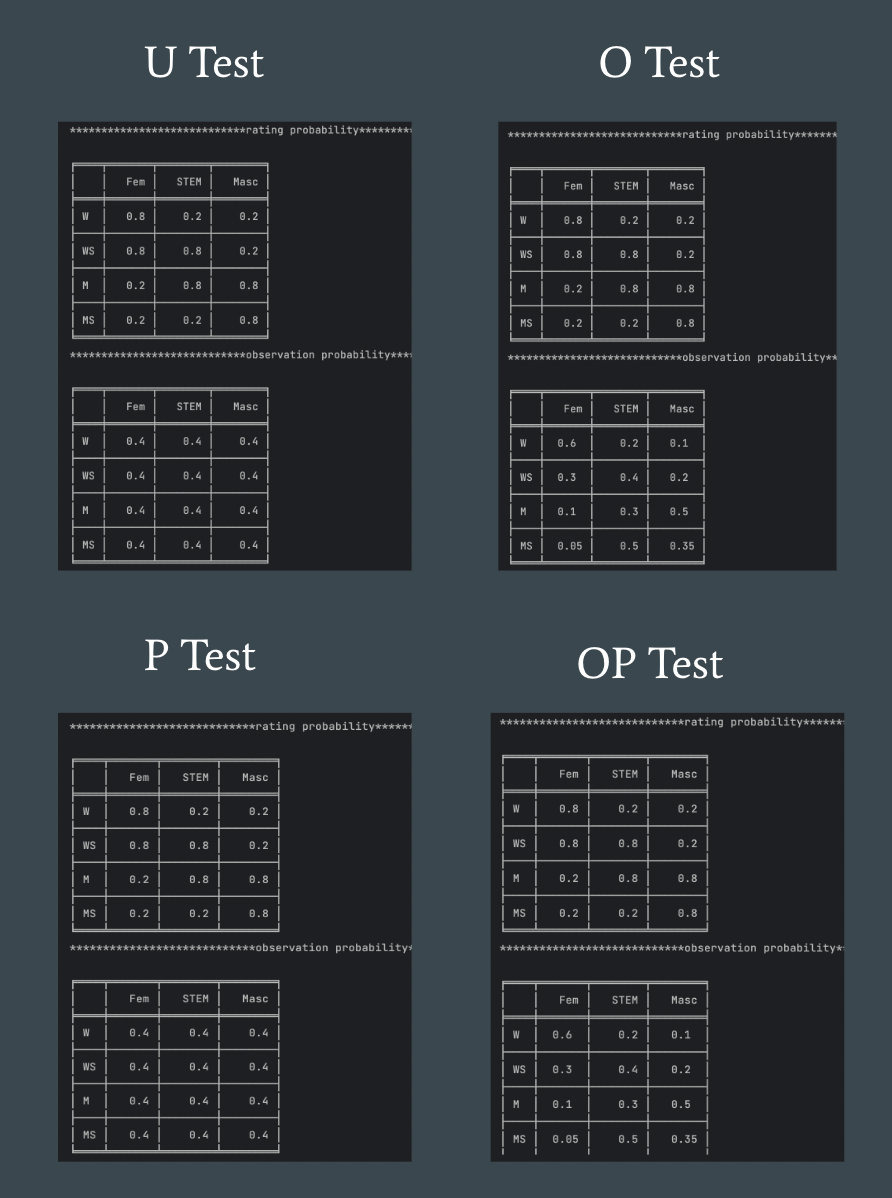

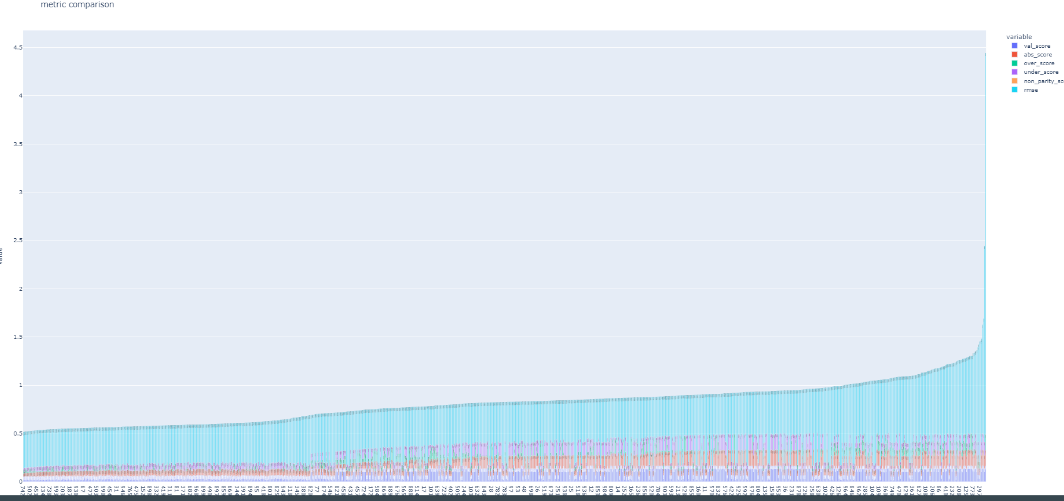

In this study, we evaluate different unfairness metrics using standard matrix factorization under various sampling conditions. We conduct five random trials and report the average scores in Fig. 1. The settings are labeled as U (uniform user groups and observation probabilities), O (uniform user groups and biased observation probabilities), P (biased user group populations and uniform observations), and B (biased populations and observations).

The statistics indicate that different forms of underrepresentation contribute to various types of unfairness. Uniform data is the most fair, biased observations is more fair, biased populations is worse, and biasing populations and observations is the most unfair, except for non-parity which is heavily amplified by biased observations but unaffected by biased populations. A high non-parity score does not necessarily indicate unfairness due to the expected imbalance in labeled ratings. The other unfairness metrics describe unfair behavior by the rating predictor. These tests show that unfairness can occur even when the ratings accurately represent user preferences, due to imbalanced populations or observations.

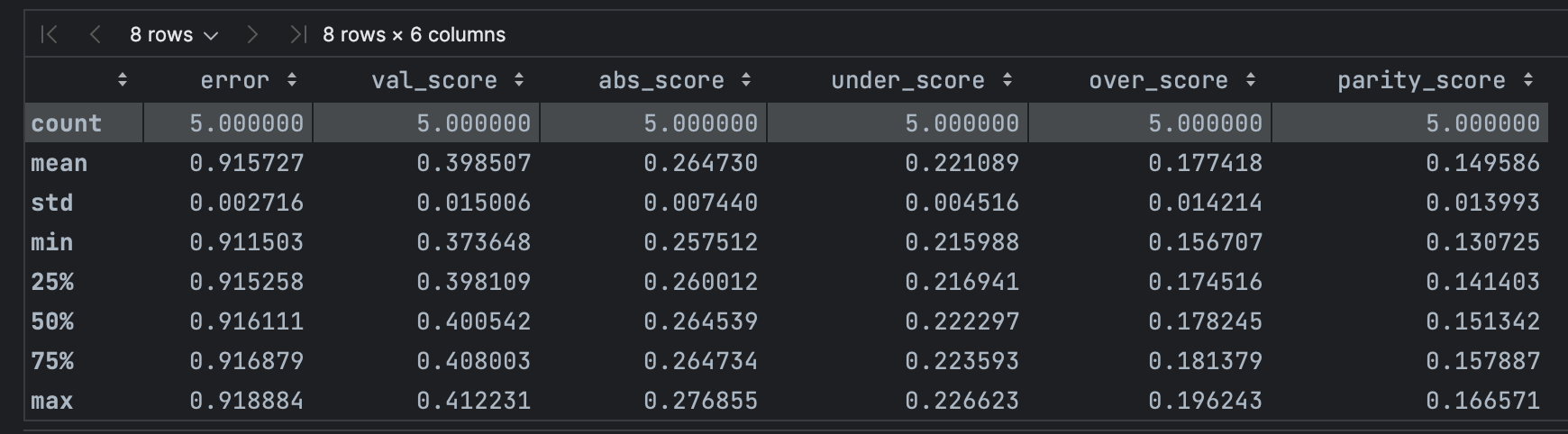

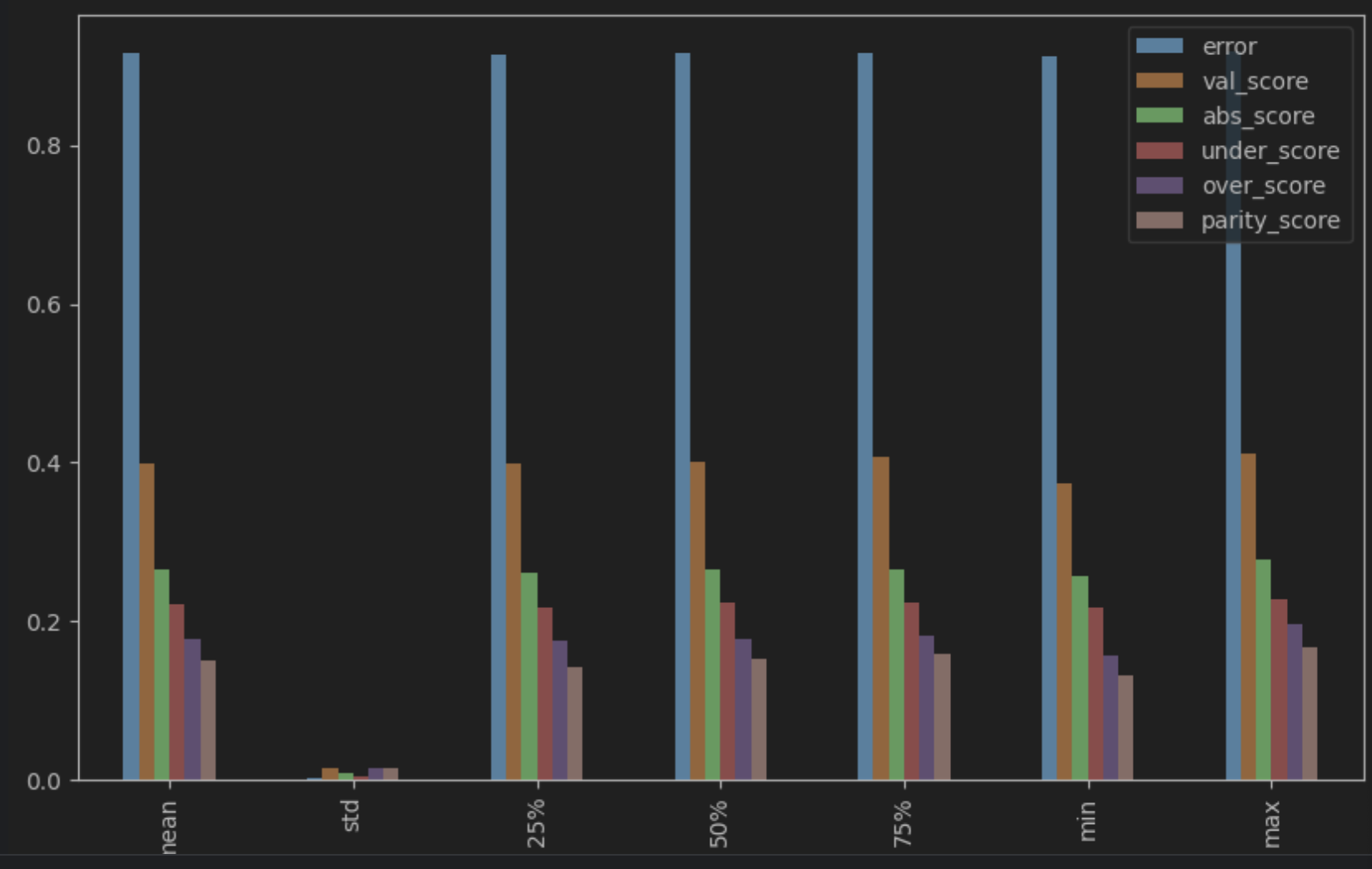

The study uses the Movielens Million Dataset, consisting of ratings by 6,040 users of 3,883 movies, annotated with demographic variables and genres. The study selects genres that exhibit gender imbalance and considers only users who rated at least 50 of these movies. After filtering, the dataset has 2,953 users and 1,006 movies. The study conducts five trials and evaluates each metric on the testing set. Results show that optimizing each unfairness metric leads to the best performance on that metric without a significant change in reconstruction error. Optimizing value unfairness leads to the most decrease in under- and overestimation, while optimizing non-parity leads to an increase or no change in almost all other unfairness metrics.

-

basif MF: It assumes a set of users and items, where each user has a variable representing their group, and each item has a variable representing its group. The preference score of each user for each item is represented by the entries in a rating matrix. The matrix-factorization learning algorithm seeks to learn the parameters from observed ratings by minimizing a regularized, squared reconstruction error. The algorithm uses the Adam optimizer, which combines adaptive learning rates with momentum, to minimize the non-convex objective.

-

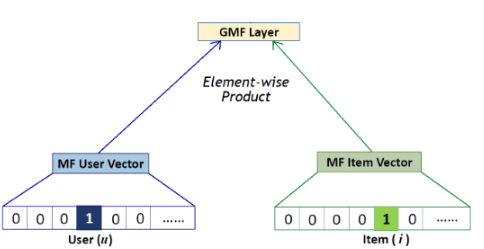

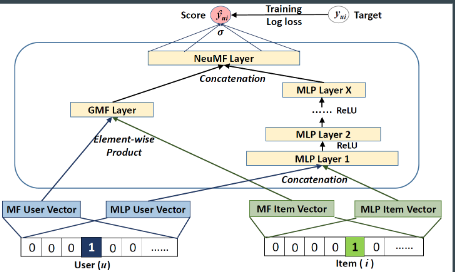

GMF: Model architecture: Flatten users and items vectors as input 2 embedding laters ( size is hyperparam) Element wise product Relu activation

- NeuMF: Input Layer: Flatten users and items vectors as input Embedding Layer: The embedding layer maps the user and item to latent space. Multi-Layer Perceptron : The MLP takes the embeddings as input and passes them through several fc layers with non-linear activation functions. Matrix Factorization: calculates the inner product of the user and item embeddings and produces a single output. Output Layer: relu activation

param optimiaztion using random search:

This project examines 3 models using 5 fairness metrics and RMSE as objective & evaluation functions In both real and synthetic data, we successfully improve the paper baseline models ( matrix factorization). Compared to the NueMF model, the GMF performs better in synthetic and real data (as shown in the final tables). Fairness can be improved dramatically without harming RMSE