This project performs part-of-speech tagging with several variations of LSTM models on the Universal Dependencies English Web Treebank (UDPOS) dataset. I used it to implement these architectures from scratch. As a result, I now understand the inner workings of each architecture and how they build on one another.

UDPOS is a parsed text corpus annotated with syntactic or semantic sentence structure.

The architectural diagrams are taken from the book Dive into Deep Learning.

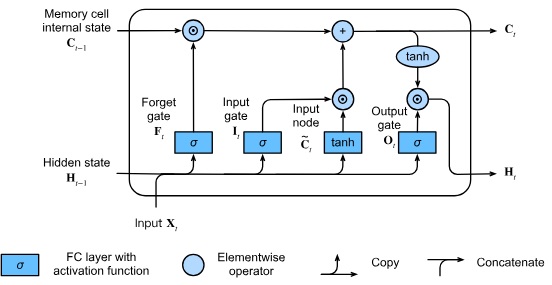

LSTM, or Long Short-Term Memory, is a modern RNN architecture. It has three gates (input, forget, and output) for performing its inner operations. It uses memory cell states to keep track of context and uses the gates to update these memory cells.

The three gates are each calculated with their own weights and biases, from the current input and the previous hidden state.

From the current step, a temporary input node tensor is also created.

The gates and the input node are then used, at time step t, to calculate the memory cell and the hidden state.
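For reference, the standard LSTM formulation can be written as follows. The notation is an assumption borrowed from Dive into Deep Learning ($\mathbf{X}_t$ is the input at step $t$, $\mathbf{H}_t$ the hidden state, $\mathbf{C}_t$ the memory cell, $\odot$ the elementwise product); the symbol names in the code may differ.

$$
\begin{aligned}
\mathbf{I}_t &= \sigma(\mathbf{X}_t \mathbf{W}_{xi} + \mathbf{H}_{t-1} \mathbf{W}_{hi} + \mathbf{b}_i) \\
\mathbf{F}_t &= \sigma(\mathbf{X}_t \mathbf{W}_{xf} + \mathbf{H}_{t-1} \mathbf{W}_{hf} + \mathbf{b}_f) \\
\mathbf{O}_t &= \sigma(\mathbf{X}_t \mathbf{W}_{xo} + \mathbf{H}_{t-1} \mathbf{W}_{ho} + \mathbf{b}_o) \\
\tilde{\mathbf{C}}_t &= \tanh(\mathbf{X}_t \mathbf{W}_{xc} + \mathbf{H}_{t-1} \mathbf{W}_{hc} + \mathbf{b}_c) \\
\mathbf{C}_t &= \mathbf{F}_t \odot \mathbf{C}_{t-1} + \mathbf{I}_t \odot \tilde{\mathbf{C}}_t \\
\mathbf{H}_t &= \mathbf{O}_t \odot \tanh(\mathbf{C}_t)
\end{aligned}
$$

The first three lines are the input, forget, and output gates, the fourth is the input node, and the last two are the memory cell and hidden state updates.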

Visually the operations form the following diagram:

Compared to vanilla RNNs, LSTMs can capture and retain context over longer sequences of tokens. However, they need roughly four times as many parameters, and therefore more memory, to do so.
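A single LSTM step and its parameter count can be sketched in plain Python. This is only an illustration under stated assumptions, not the project's actual implementation: the names `init_lstm_params`, `lstm_step`, and `count_params` are hypothetical, and plain lists stand in for tensors.

```python
import math
import random


def sigmoid(x):
    return 1.0 / (1.0 + math.exp(-x))


def affine(x, h, W_x, W_h, b):
    """Compute x @ W_x + h @ W_h + b on plain Python lists."""
    out = []
    for j in range(len(b)):
        total = b[j]
        total += sum(x[i] * W_x[i][j] for i in range(len(x)))
        total += sum(h[i] * W_h[i][j] for i in range(len(h)))
        out.append(total)
    return out


def init_lstm_params(num_inputs, num_hiddens, seed=0):
    """Hypothetical helper: one (W_x, W_h, b) triple per gate and for the input node."""
    rng = random.Random(seed)

    def triple():
        W_x = [[rng.gauss(0, 0.1) for _ in range(num_hiddens)] for _ in range(num_inputs)]
        W_h = [[rng.gauss(0, 0.1) for _ in range(num_hiddens)] for _ in range(num_hiddens)]
        b = [0.0] * num_hiddens
        return W_x, W_h, b

    return {name: triple() for name in ("input", "forget", "output", "node")}


def lstm_step(x, h_prev, c_prev, params):
    """One time step: three sigmoid gates, a tanh input node, then cell and hidden updates."""
    i_gate = [sigmoid(v) for v in affine(x, h_prev, *params["input"])]
    f_gate = [sigmoid(v) for v in affine(x, h_prev, *params["forget"])]
    o_gate = [sigmoid(v) for v in affine(x, h_prev, *params["output"])]
    c_tilde = [math.tanh(v) for v in affine(x, h_prev, *params["node"])]
    # Forget part of the old cell, then write part of the new candidate.
    c = [f * cp + i * ct for f, cp, i, ct in zip(f_gate, c_prev, i_gate, c_tilde)]
    h = [o * math.tanh(cv) for o, cv in zip(o_gate, c)]
    return h, c


def count_params(params):
    return sum(
        len(W_x) * len(W_x[0]) + len(W_h) * len(W_h[0]) + len(b)
        for W_x, W_h, b in params.values()
    )


params = init_lstm_params(num_inputs=3, num_hiddens=4)
h, c = lstm_step([0.5, -1.0, 2.0], [0.0] * 4, [0.0] * 4, params)
print(count_params(params))  # → 128
```

A vanilla RNN with the same sizes would have a single triple, that is 3·4 + 4·4 + 4 = 32 parameters, so the LSTM's 128 is exactly four times as many.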

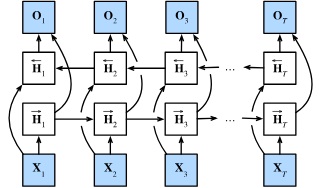

A bidirectional RNN architecture processes the sequence twice, once from left to right and once from right to left. It uses two separate sets of parameters, one per direction, to keep track of the hidden states. Afterwards, the forward and backward hidden states at each position are concatenated.

Mathematically,
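A sketch of the usual formulation, assuming the notation of Dive into Deep Learning ($\phi$ is the hidden-layer activation, and the superscripts $(f)$ and $(b)$ mark the forward and backward parameter sets):

$$
\begin{aligned}
\overrightarrow{\mathbf{H}}_t &= \phi(\mathbf{X}_t \mathbf{W}_{xh}^{(f)} + \overrightarrow{\mathbf{H}}_{t-1} \mathbf{W}_{hh}^{(f)} + \mathbf{b}_h^{(f)}) \\
\overleftarrow{\mathbf{H}}_t &= \phi(\mathbf{X}_t \mathbf{W}_{xh}^{(b)} + \overleftarrow{\mathbf{H}}_{t+1} \mathbf{W}_{hh}^{(b)} + \mathbf{b}_h^{(b)}) \\
\mathbf{H}_t &= [\overrightarrow{\mathbf{H}}_t, \overleftarrow{\mathbf{H}}_t]
\end{aligned}
$$

Note that the backward state at step $t$ depends on step $t+1$, not $t-1$.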

Visually,

The benefit of this architecture is that it captures information not only from the previous words, but also from the following words. The concept stays the same for a bidirectional LSTM model; only the parameters and inner workings change. Instead of using two separate RNN modules for the forward and backward passes, we use two LSTM modules.
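The two passes and the concatenation can be sketched independently of the cell type. This is a minimal illustration, not the project's code: `run_direction` and `bidirectional` are hypothetical names, and a toy running-sum step stands in for an RNN or LSTM step so the result is easy to check by hand. In a real model the two directions would have separate parameters.

```python
def run_direction(inputs, step, h0):
    """Apply a recurrent step over the sequence and collect every hidden state."""
    states, h = [], h0
    for x in inputs:
        h = step(x, h)
        states.append(h)
    return states


def bidirectional(inputs, fwd_step, bwd_step, h0):
    """Run left-to-right and right-to-left, then concatenate states per position."""
    forward = run_direction(inputs, fwd_step, h0)
    # Process the reversed sequence, then flip the states back to the original order.
    backward = run_direction(inputs[::-1], bwd_step, h0)[::-1]
    return [f + b for f, b in zip(forward, backward)]


def running_sum(x, h):
    """Toy step function (an assumption for illustration): accumulate the inputs."""
    return [h[0] + x[0]]


states = bidirectional([[1.0], [2.0], [3.0]], running_sum, running_sum, [0.0])
print(states)  # → [[1.0, 6.0], [3.0, 5.0], [6.0, 3.0]]
```

Each output position holds the sum of everything up to and including it from the left, followed by the sum of everything from it to the end, which is the "previous words plus next words" view described above.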

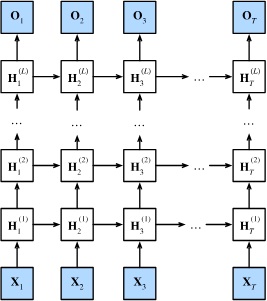

A multi-layer LSTM model takes the hidden states of the previous layer as input for the next layer. In the beginning, embeddings are used as the input for the first layer. Then the first layer produces the first set of hidden states. These are then treated as input for the second layer.

Mathematically,
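A sketch of the usual deep RNN recurrence, again assuming the notation of Dive into Deep Learning, where the superscript $(l)$ is the layer index and $\mathbf{H}_t^{(0)} = \mathbf{X}_t$ is the embedding at step $t$:

$$
\mathbf{H}_t^{(l)} = \phi_l(\mathbf{H}_t^{(l-1)} \mathbf{W}_{xh}^{(l)} + \mathbf{H}_{t-1}^{(l)} \mathbf{W}_{hh}^{(l)} + \mathbf{b}_h^{(l)})
$$

For a multi-layer LSTM, the same wiring applies with the LSTM update in place of $\phi_l$: each layer takes the hidden states of the layer below as its input sequence.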

Visually,

The idea is that, like multi-layer perceptrons, multi-layer RNNs will learn something new in each layer and get progressively better at capturing patterns in the underlying data.
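The layer-by-layer wiring can be sketched as follows. This is an illustration under assumptions, not the project's implementation: `run_layer` and `run_stack` are hypothetical names, and a toy running-sum step replaces the LSTM step so the output can be verified by hand.

```python
def run_layer(inputs, step, h0):
    """Run one recurrent layer over the sequence and return all of its hidden states."""
    states, h = [], h0
    for x in inputs:
        h = step(x, h)
        states.append(h)
    return states


def run_stack(embeddings, layer_steps, h0s):
    """Feed the embeddings to the first layer, then each layer's states to the next."""
    layer_input = embeddings
    for step, h0 in zip(layer_steps, h0s):
        layer_input = run_layer(layer_input, step, h0)
    return layer_input  # hidden states of the top layer


def running_sum(x, h):
    """Toy step function (an assumption for illustration): accumulate the inputs."""
    return [h[0] + x[0]]


top = run_stack([[1.0], [2.0], [3.0]], [running_sum, running_sum], [[0.0], [0.0]])
print(top)  # → [[1.0], [4.0], [10.0]]
```

The first layer turns the inputs into `[[1.0], [3.0], [6.0]]`, and the second layer consumes those states as its own input sequence.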

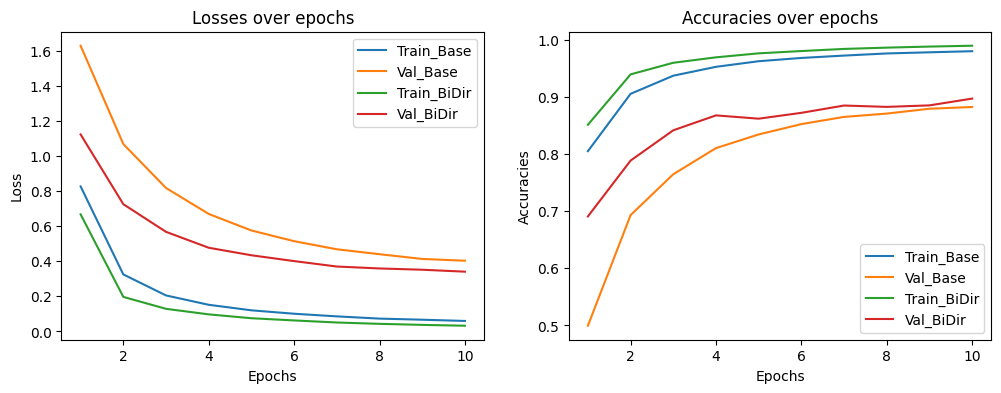

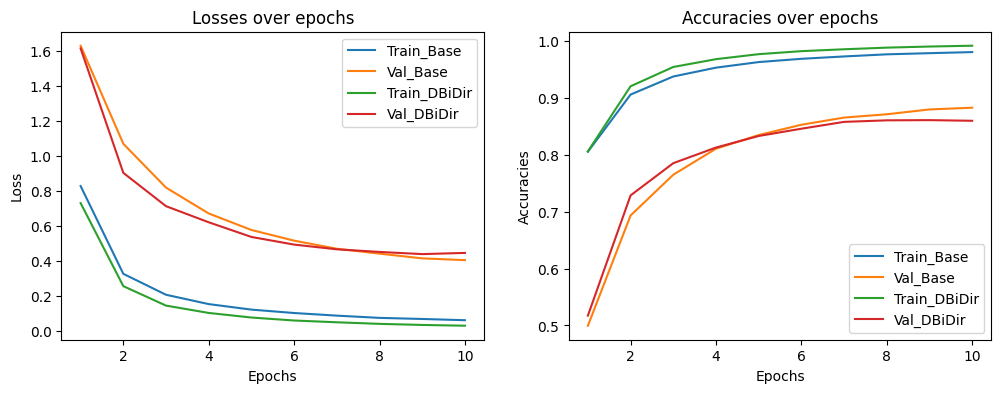

Bi-directionality adds a significant improvement to the base LSTM layer. This is evident in the following graph:

The bi-LSTM model's inference time is nearly twice as long, but the performance gain is worth it. This is not true for the deep bi-LSTM model: it does not achieve a significant further improvement, and its inference time is nearly four times that of the base model.

- My Natural Language Processing course instructor Hangfeng He.

- Dive into Deep Learning authors and community