This is the official implementation of Focals Conv (CVPR 2022), a new sparse convolution design for 3D object detection that applies to both LiDAR-only and multi-modal settings. For more details, please refer to:

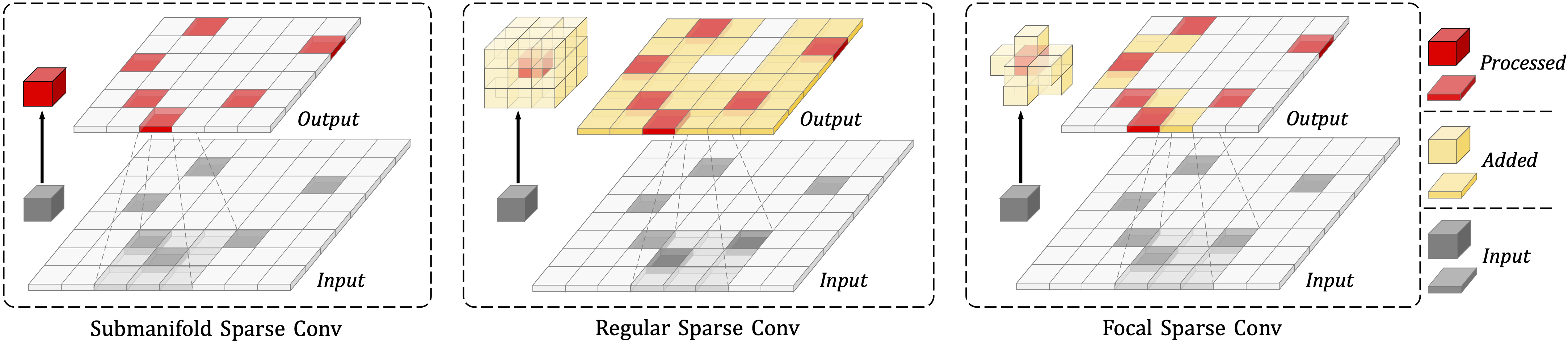

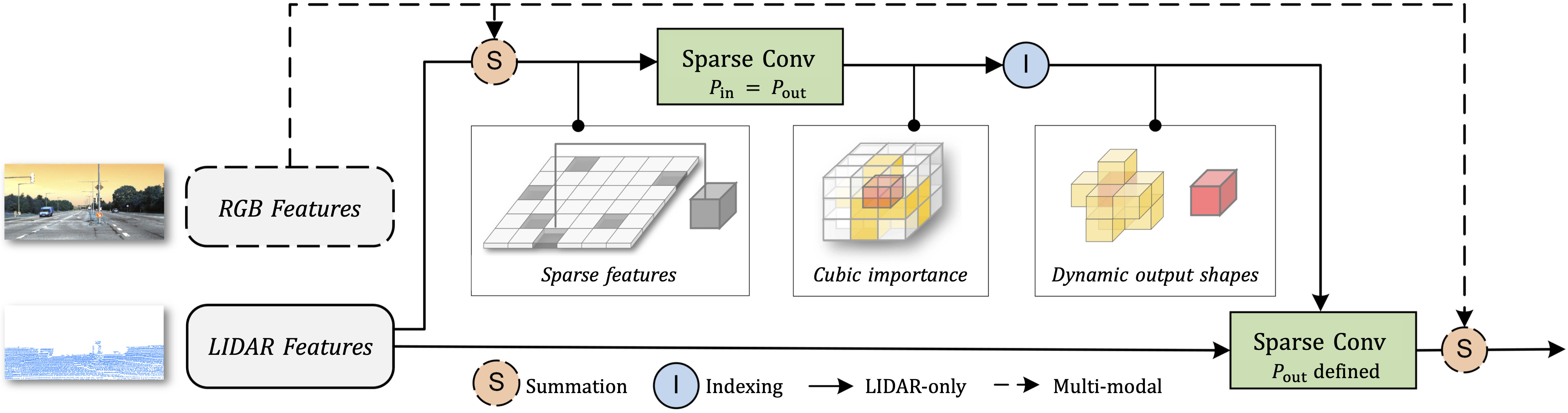

Focal Sparse Convolutional Networks for 3D Object Detection [Paper]

Yukang Chen, Yanwei Li, Xiangyu Zhang, Jian Sun, Jiaya Jia

Results on the KITTI dataset:

| Model | Car@R11 | Car@R40 | download |
|---|---|---|---|
| PV-RCNN + Focals Conv | 83.91 | 85.20 | Google \| Baidu (key: m15b) |
| PV-RCNN + Focals Conv (multimodal) | 84.58 | 85.34 | Google \| Baidu (key: ie6n) |
| Voxel R-CNN (Car) + Focals Conv (multimodal) | 85.68 | 86.00 | Google \| Baidu (key: tnw9) |

Results on the nuScenes dataset:

| Model | mAP | NDS | download |
|---|---|---|---|
| CenterPoint + Focals Conv (multi-modal) | 63.86 | 69.41 | Google \| Baidu (key: 01jh) |
| CenterPoint + Focals Conv (multi-modal) - 1/4 data | 62.15 | 67.45 | Google \| Baidu (key: 6qsc) |

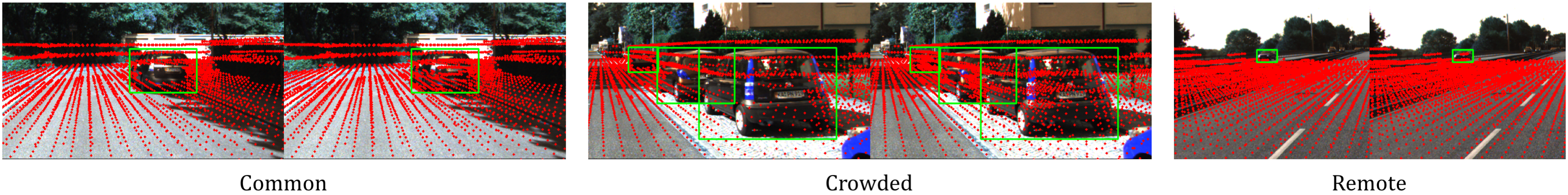

Visualization of voxel distribution of Focals Conv on KITTI val dataset:

a. Clone this repository

```shell
git clone https://github.com/dvlab-research/FocalsConv && cd FocalsConv
```

b. Install the environment

Follow the installation documents of the OpenPCDet and CenterPoint codebases, depending on which one you use.

*spconv 2.x is highly recommended over spconv 1.x.
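To check which spconv major version your Python environment actually picks up, an import test is usually enough. The sketch below relies on spconv 2.x exposing its PyTorch API under `spconv.pytorch`, which spconv 1.x does not provide; it is a heuristic, not an official check. The function name `spconv_major` and the `PYTHON` override variable are hypothetical helpers, not part of this repository.

```shell
#!/usr/bin/env bash
# Hypothetical helper: report which spconv major version the current Python sees.
# Assumption: spconv 2.x provides the `spconv.pytorch` module, spconv 1.x does not.
spconv_major() {
  local py="${PYTHON:-python}"   # override with PYTHON=python3 if needed
  if "$py" -c "import spconv.pytorch" >/dev/null 2>&1; then
    echo "2"
  elif "$py" -c "import spconv" >/dev/null 2>&1; then
    echo "1"
  else
    echo "none"   # spconv not importable, or the interpreter is missing
  fi
}

echo "spconv major version: $(spconv_major)"
```

For example, `PYTHON=python3 spconv_major` checks the `python3` interpreter instead of `python`. A result of `none` can also mean the import failed for another reason (for example a CUDA mismatch), so run the import manually to see the error.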

c. Prepare the datasets.

Download and organize the official KITTI and Waymo datasets following the documents in OpenPCDet, and the nuScenes dataset following the CenterPoint codebase.

*Note that for the nuScenes dataset, we include image-level gt-sampling (copy-paste) to train the multi-modal setting. Please download this `dbinfos_train_10sweeps_withvelo.pkl` to replace the original one (Google | Baidu (key: b466)).
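Replacing the file is a plain copy, but keeping a backup of the original makes it easy to switch back to the original gt-sampling database later. The sketch below assumes, as a default, the `data/nuScenes` root used in the CenterPoint data-preparation docs; the function name `replace_dbinfos` and its arguments are hypothetical, so adjust the paths to your own layout.

```shell
#!/usr/bin/env bash
# Hypothetical helper: swap in the downloaded dbinfos file, keeping a backup.
# Usage: replace_dbinfos /path/to/downloaded.pkl [nuscenes_root]
# Assumption: the default root follows the CenterPoint data layout (data/nuScenes).
replace_dbinfos() {
  local new_file="$1"
  local root="${2:-data/nuScenes}"
  local target="$root/dbinfos_train_10sweeps_withvelo.pkl"

  if [ ! -f "$new_file" ]; then
    echo "downloaded file not found: $new_file" >&2
    return 1
  fi
  # Back up the original only once, so re-running never overwrites the backup.
  if [ -f "$target" ] && [ ! -f "$target.orig" ]; then
    mv "$target" "$target.orig"
  fi
  cp "$new_file" "$target"
}
```

For example, running `replace_dbinfos ~/Downloads/dbinfos_train_10sweeps_withvelo.pkl` from the CenterPoint root (the download location here is only illustrative) installs the new file and leaves the previous one as `dbinfos_train_10sweeps_withvelo.pkl.orig`.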

*Note that for the nuScenes dataset, we conduct ablation studies on a 1/4 data training split. Please download `infos_train_mini_1_4_10sweeps_withvelo_filter_True.pkl` if you need it for training (Google | Baidu (key: 769e)).

We provide the trained weight files, so you can evaluate them directly. You can also evaluate models you trained yourself.

For models in OpenPCDet,

```shell
NUM_GPUS=8
cd tools
bash scripts/dist_test.sh ${NUM_GPUS} --cfg_file cfgs/kitti_models/voxel_rcnn_car_focal_multimodal.yaml --ckpt path/to/voxelrcnn_focal_multimodal.pth
bash scripts/dist_test.sh ${NUM_GPUS} --cfg_file cfgs/kitti_models/pv_rcnn_focal_multimodal.yaml --ckpt ../pvrcnn_focal_multimodal.pth
bash scripts/dist_test.sh ${NUM_GPUS} --cfg_file cfgs/kitti_models/pv_rcnn_focal_lidar.yaml --ckpt path/to/pvrcnn_focal_lidar.pth
```

For models in CenterPoint,

```shell
CONFIG="nusc_centerpoint_voxelnet_0075voxel_fix_bn_z_focal_multimodal"
python -m torch.distributed.launch --nproc_per_node=${NUM_GPUS} ./tools/dist_test.py configs/nusc/voxelnet/$CONFIG.py --work_dir ./work_dirs/$CONFIG --checkpoint centerpoint_focal_multimodal.pth
```

To train with the configs in OpenPCDet,

```shell
bash scripts/dist_train.sh ${NUM_GPUS} --cfg_file cfgs/kitti_models/CONFIG.yaml
```

To train with the configs in CenterPoint,

```shell
python -m torch.distributed.launch --nproc_per_node=${NUM_GPUS} ./tools/train.py configs/nusc/voxelnet/$CONFIG.py --work_dir ./work_dirs/$CONFIG
```

Note that we use 8 GPUs to train OpenPCDet models and 4 GPUs to train CenterPoint models.

TODO list:

- Config files and trained models on the full Waymo dataset.
- Config files and scripts for the test-time augmentations (double-flip and rotation) used in the nuScenes test submission.
- Results and models of Focals Conv Networks on 3D segmentation datasets.

If you find this project useful in your research, please consider citing:

```bibtex
@inproceedings{focalsconv-chen,
  title={Focal Sparse Convolutional Networks for 3D Object Detection},
  author={Chen, Yukang and Li, Yanwei and Zhang, Xiangyu and Sun, Jian and Jia, Jiaya},
  booktitle={Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition},
  year={2022}
}
```

This work is built upon OpenPCDet and CenterPoint. Please refer to their official GitHub repositories for more information.

This README follows the style of IA-SSD.

This project is released under the Apache 2.0 license.